代码是我几个月前,不知道哪里下载的,原始版权不在我,也没法给出处。

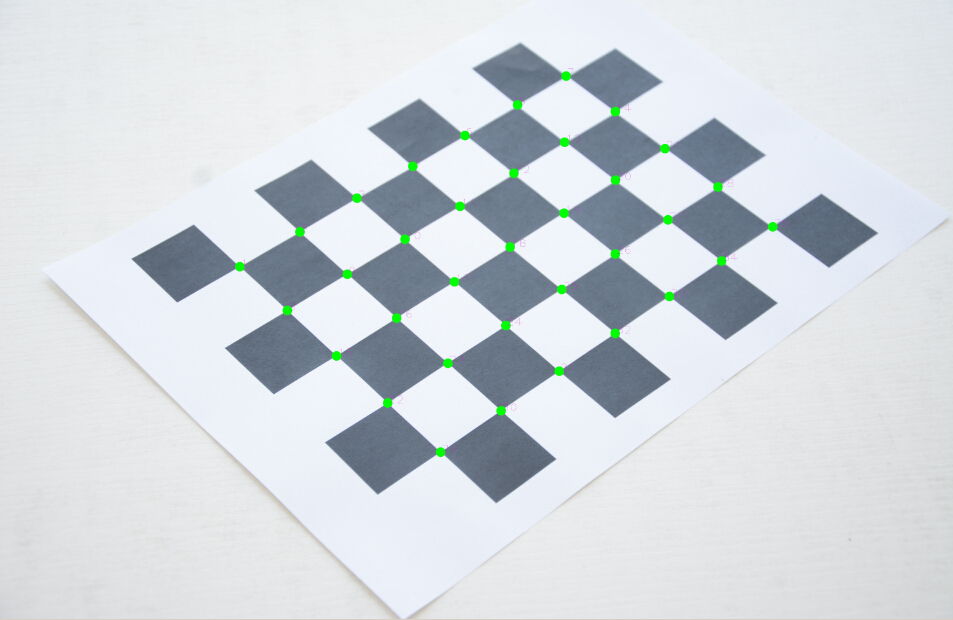

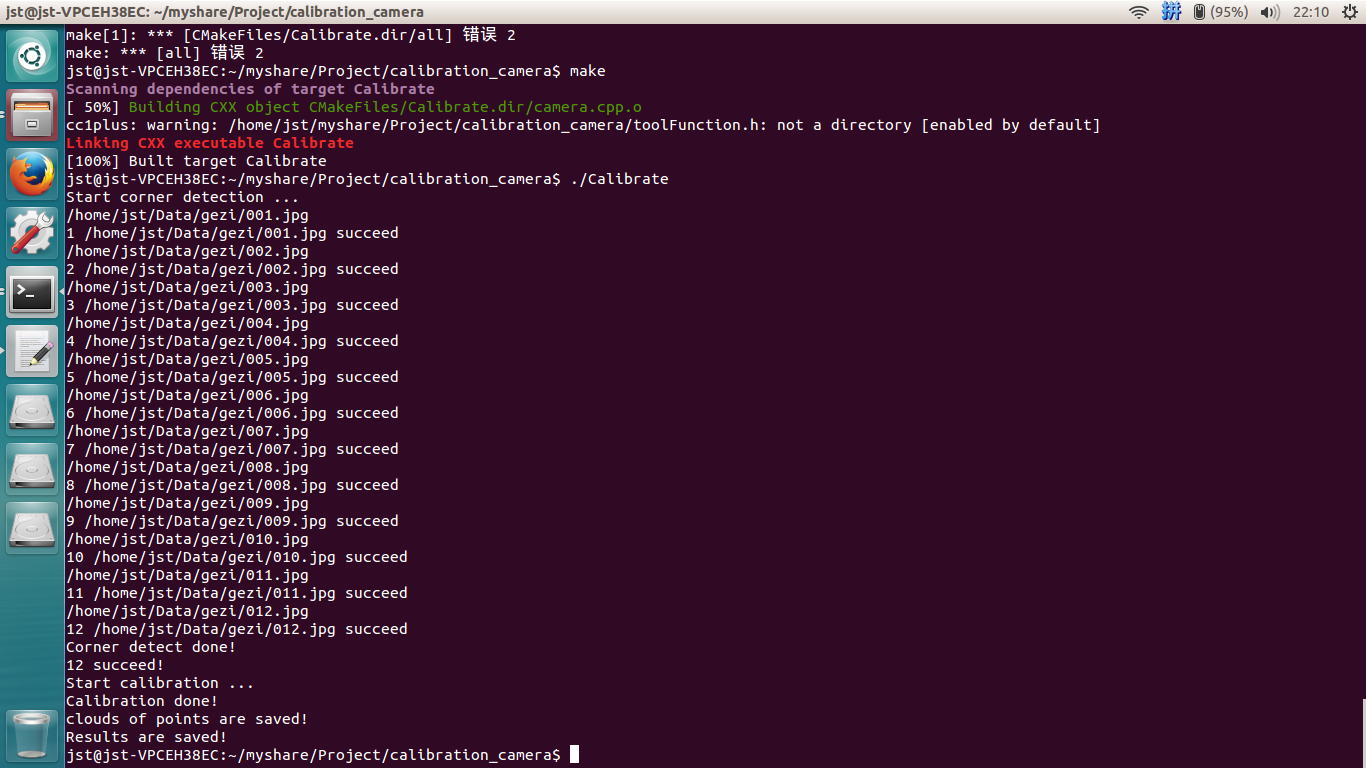

opencv做相机标定经常碰到问题,就是超大图片无法找到角点。我做了小修改,就是把图片先缩小,等找到角点了,再放大到原来比例。

输入参数:

方格的数量,注意是内圈角点数量 boardsize

方格的物理 尺寸,单位毫米 squaresize

CMakeLists:

cmake_minimum_required(VERSION 2.8)

project( Calibrate )

find_package( OpenCV REQUIRED )

include_directories(toolFunction.h)

add_executable( Calibrate camera.cpp toolFunction.cpp)

target_link_libraries( Calibrate ${OpenCV_LIBS} )

camera.cpp

#include<iostream>

#include <vector>

#include <string>

#include <opencv2/photo.hpp>

#include <opencv2/highgui.hpp>

#include <opencv2/calib3d/calib3d.hpp>

#include "toolFunction.h"

#define DEBUG_OUTPUT_INFO

using namespace std;

using namespace cv;

int main()

{

//char* folderPath = "E:/Images/New"; // image folder

//std::vector<std::string> graphPaths;

std::vector<std::string> graphSuccess;

char mypath[50];

CalibrationAssist calAssist;

cv::Size msize(600,400); //for resolution 6000*4000

int downsize = 10; //downsize scale factor

//graphPaths = calAssist.get_filelist(folderPath); // collect image list

std::cout << "Start corner detection ..." << std::endl;

cv::Mat curGraph; // current image

cv::Mat gray; // gray image of current image

cv::Mat small; // temp file to downsize the image

int imageCount = 12;

int imageCountSuccess = 0;

cv::Size image_size;

cv::Size boardSize = cv::Size(7, 5); // chess board pattern size,only compute the inside square!!

cv::Size squareSize = cv::Size(30, 30); // grid physical size, as a scale factor

std::vector<cv::Point2f> corners; // one image corner list

std::vector<std::vector<cv::Point2f> > seqCorners; // n images corner list

for ( int i=1; i<=imageCount; i++ )

{

sprintf(mypath,"/home/jst/Data/gezi/%03d.jpg", i);

std::cout<<mypath<<endl;

curGraph = cv::imread(mypath);

cv::resize(curGraph, small, msize);

if ( curGraph.channels() == 3 )

cv::cvtColor( curGraph, gray, CV_BGR2GRAY );

else

curGraph.copyTo( gray );

// for every image, empty the corner list

std::vector<cv::Point2f>().swap( corners );

// corners detection

bool success = cv::findChessboardCorners( small, boardSize, corners );

if ( success ) // succeed

{

std::cout << i << " " << mypath << " succeed"<< std::endl;

int row = curGraph.rows;

int col = curGraph.cols;

imageCountSuccess ++;

image_size = cv::Size( col, row );

//rectify the corner

for(size_t j=0;j<corners.size();j++)

{

corners[j].x = corners[j].x*downsize;

corners[j].y = corners[j].y*downsize;

}

// find sub-pixels

cv::cornerSubPix(

gray,

corners,

cv::Size( 11, 11 ),

cv::Size( -1, -1 ),

cv::TermCriteria( CV_TERMCRIT_EPS + CV_TERMCRIT_ITER, 30, 0.1 ) );

seqCorners.push_back( corners );

// draw corners and show them in current image

cv::Mat imageDrawCorners;

if ( curGraph.channels() == 3 )

curGraph.copyTo( imageDrawCorners );

else

cv::cvtColor( curGraph, imageDrawCorners, CV_GRAY2RGB );

for ( int j = 0; j < corners.size(); j ++)

{

cv::Point2f dotPoint = corners[j];

cv::circle( imageDrawCorners, dotPoint, 3.0, cv::Scalar( 0, 255, 0 ), -1 );

cv::Point2f pt_m = dotPoint + cv::Point2f(4,4);

char text[100];

sprintf( text, "%d", j+1 ); // corner indexes which start from 1

cv::putText( imageDrawCorners, text, pt_m, 1, 0.5, cv::Scalar( 255, 0, 255 ) );

}

sprintf(mypath,"./corners_%d.jpg",i);

// save image drawn with corners and labeled with indexes

cv::imwrite( mypath, imageDrawCorners );

}

else // failed

{

std::cout << mypath << " corner detect failed!" << std::endl;

}

}

std::cout << "Corner detect done!" << std::endl

<< imageCountSuccess << " succeed! " << std::endl;

if ( imageCountSuccess < 3 )

{

std::cout << "Calibrated success " << imageCountSuccess

<< " images, less than 3 images." << std::endl;

return 0;

}

else

{

std::cout << "Start calibration ..." << std::endl;

cv::Point3f point3D;

std::vector<cv::Point3f> objectPoints;

std::vector<double> distCoeffs;

std::vector<double> rotation;

std::vector<double> translation;

std::vector<std::vector<cv::Point3f> > seqObjectPoints;

std::vector<std::vector<double> > seqRotation;

std::vector<std::vector<double> > seqTranslation;

cv::Mat_<double> cameraMatrix;

// calibration pattern points in the calibration pattern coordinate space

for ( int t=0; t<imageCountSuccess; t++ )

{

objectPoints.clear();

for ( int i=0; i<boardSize.height; i++ )

{

for ( int j=0; j<boardSize.width; j++ )

{

point3D.x = i * squareSize.width;

point3D.y = j * squareSize.height;

point3D.z = 0;

objectPoints.push_back(point3D);

}

}

seqObjectPoints.push_back(objectPoints);

}

double reprojectionError = calibrateCamera(

seqObjectPoints,

seqCorners,

image_size,

cameraMatrix,

distCoeffs,

seqRotation,

seqTranslation,

CV_CALIB_FIX_ASPECT_RATIO|CV_CALIB_FIX_PRINCIPAL_POINT );

std::cout << "Calibration done!" << std::endl;

// calculate the calibration pattern points with the camera model

std::vector<cv::Mat_<double> > projectMats;

for ( int i=0; i<imageCountSuccess; i++ )

{

cv::Mat_<double> R, T;

// translate rotation vector to rotation matrix via Rodrigues transformation

cv::Rodrigues( seqRotation[i], R );

T = cv::Mat( cv::Matx31d(

seqTranslation[i][0],

seqTranslation[i][1],

seqTranslation[i][2]) );

cv::Mat_<double> P = cameraMatrix * cv::Mat( cv::Matx34d(

R(0,0), R(0,1), R(0,2), T(0),

R(1,0), R(1,1), R(1,2), T(1),

R(2,0), R(2,1), R(2,2), T(2) ) );

projectMats.push_back(P);

}

std::vector<cv::Point2d> PointSet;

int pointNum = boardSize.width*boardSize.height;

std::vector<cv::Point3d> objectClouds;

for ( int i=0; i<pointNum; i++ )

{

PointSet.clear();

for ( int j=0; j<imageCountSuccess; j++ )

{

cv::Point2d tempPoint = seqCorners[j][i];

PointSet.push_back(tempPoint);

}

// calculate calibration pattern points

cv::Point3d objectPoint = calAssist.triangulate(projectMats,PointSet);

objectClouds.push_back(objectPoint);

}

std::string pathTemp_point;

pathTemp_point = ".";

pathTemp_point += "/point.txt";

calAssist.save3dPoint(pathTemp_point,objectClouds);

std::string pathTemp_calib;

pathTemp_calib = ".";

pathTemp_calib += "/calibration.txt";

FILE* fp = fopen( pathTemp_calib.c_str(), "w" );

fprintf( fp, "The average of re-projection error : %lf\n", reprojectionError );

for ( int i=0; i<imageCountSuccess; i++ )

{

std::vector<cv::Point2f> errorList;

cv::projectPoints(

seqObjectPoints[i],

seqRotation[i],

seqTranslation[i],

cameraMatrix,

distCoeffs,

errorList );

corners.clear();

corners = seqCorners[i];

double meanError(0.0);

for ( int j=0; j<corners.size(); j++ )

{

meanError += std::sqrt((errorList[j].x - corners[j].x)*(errorList[j].x - corners[j].x) +

(errorList[j].y - corners[j].y)*(errorList[j].y - corners[j].y));

}

rotation.clear();

translation.clear();

rotation = seqRotation[i];

translation = seqTranslation[i];

fprintf( fp, "Re-projection of image %d:%lf\n", i+1, meanError/corners.size() );

fprintf( fp, "Rotation vector :\n" );

fprintf( fp, "%lf %lf %lf\n", rotation[0], rotation[1], rotation[2] );

fprintf( fp, "Translation vector :\n" );

fprintf( fp, "%lf %lf %lf\n\n", translation[0], translation[1], translation[2] );

}

fprintf( fp, "Camera internal matrix :\n" );

fprintf( fp, "%lf %lf %lf\n%lf %lf %lf\n%lf %lf %lf\n",

cameraMatrix(0,0), cameraMatrix(0,1), cameraMatrix(0,2),

cameraMatrix(1,0), cameraMatrix(1,1), cameraMatrix(1,2),

cameraMatrix(2,0), cameraMatrix(2,1), cameraMatrix(2,2));

fprintf( fp,"Distortion coefficient :\n" );

for ( int k=0; k<distCoeffs.size(); k++)

fprintf( fp, "%lf ", distCoeffs[k] );

std::cout << "Results are saved!" << std::endl;

}

return 0;

}

toolFunction.cpp

#include "toolFunction.h"

cv::Point3d CalibrationAssist::triangulate(

std::vector<cv::Mat_<double> > &ProjectMats,

std::vector<cv::Point2d> &imagePoints)

{

int i,j;

std::vector<cv::Point2d> pointSet;

int frameSum = ProjectMats.size();

cv::Mat A(2*frameSum,3,CV_32FC1);

cv::Mat B(2*frameSum,1,CV_32FC1);

cv::Point2d u,u1;

cv::Mat_<double> P;

cv::Mat_<double> rowA1,rowA2,rowB1,rowB2;

int k = 0;

for ( i = 0; i < frameSum; i++ ) //get the coefficient matrix A and B

{

u = imagePoints[i];

P = ProjectMats[i];

cv::Mat( cv::Matx13d(

u.x*P(2,0)-P(0,0),

u.x*P(2,1)-P(0,1),

u.x*P(2,2)-P(0,2) ) ).copyTo( A.row(k) );

cv::Mat( cv::Matx13d(

u.y*P(2,0)-P(1,0),

u.y*P(2,1)-P(1,1),

u.y*P(2,2)-P(1,2) ) ).copyTo( A.row(k+1) );

cv::Mat rowB1( 1, 1, CV_32FC1, cv::Scalar( -(u.x*P(2,3)-P(0,3)) ) );

cv::Mat rowB2( 1, 1, CV_32FC1, cv::Scalar(-(u.y*P(2,3)-P(1,3)) ) );

rowB1.copyTo( B.row(k) );

rowB2.copyTo( B.row(k+1) );

k += 2;

}

cv::Mat X;

cv::solve( A, B, X, DECOMP_SVD );

return Point3d(X);

}

void CalibrationAssist::save3dPoint( std::string path_, std::vector<cv::Point3d> &Point3dLists)

{

const char * path = path_.c_str();

FILE* fp = fopen( path, "w" );

for ( int i = 0; i < Point3dLists.size(); i ++)

{

// fprintf(fp,"%d ",i);

fprintf( fp, "%lf %lf %lf\n",

Point3dLists[i].x, Point3dLists[i].y, Point3dLists[i].z);

}

fclose(fp);

#if 1

std::cout << "clouds of points are saved!" << std::endl;

#endif

}#ifndef TOOL_FUNCTION_H

#pragma once

#define TOOL_FUNCTION_H

#include<iostream>

#include <math.h>

#include <fstream>

#include <vector>

#include <string>

#include <opencv2/photo.hpp>

#include <opencv2/highgui.hpp>

using namespace cv;

using namespace std;

class CalibrationAssist

{

public:

CalibrationAssist() {}

~CalibrationAssist() {}

public:

cv::Point3d triangulate( std::vector<cv::Mat_<double> > &ProjectMats,

std::vector<cv::Point2d> &imagePoints );

void save3dPoint( std::string path_, std::vector<cv::Point3d> &Point3dLists );

};

#endif // TOOL_FUNCTION_H

Camera internal matrix :

11964.095146 0.000000 2999.500000

0.000000 11964.095146 1999.500000

0.000000 0.000000 1.000000

Distortion coefficient :

0.163781 6.243557 -0.000678 0.000548 -190.849777

这图可是6000*4000分辨率!我缩小的10倍做的