一、概述

要想优化log file sync等待事件,那么懂得其触发机制和原理是很优必要的。这里先引用官方文档来对log file sync等待进行解释(任何信息的描述应以官方文档为准,百度到的结果通常会有一些错误,错误的信息会导致错误的思路以及错误的结果):

What is a ‘log file sync’ wait?

When a user session commits, all redo records generated by that session’s transaction need to be flushed from memory to the redo logfile to insure changes to the database made by that transaction become permanent.

【翻译:当用户会话提交时,该会话的事务生成的所有redo记录都需要从内存中刷新到重做日志文件,以确保该事务永久性的记录到数据库变更中。】

At the time of commit, the user session will post LGWR to write the log buffer (containing the current unwritten redo, including this session’s redo records) to the redo log file. Once LGWR knows that its write requests have completed, it will post the user session to notify it that this has completed. The user session waits on ‘log file sync’ while waiting for LGWR to post it back to confirm all redo it generated have made it safely onto disk.

【翻译:在提交时,用户会话将发布LGWR以将日志缓冲区(包含当前未写入的redo,包括该会话的redo记录)写入redo log。 一旦LGWR知道其写请求已完成,它将发布通知给用户会话告知其已完成。 用户会话等待“log file sync”,同时等待LGWR将其回发,以确认其生成的所有redo操作已将其安全地保存到磁盘上。】

The time between the user session posting the LGWR and the LGWR posting the user after the write has completed is the wait time for ‘log file sync’ that the user session will show.

【翻译:用户会话通知LGWR和LGWR在写入完成后通知用户之间的时间就是“log file sync”显示在用户会话的等待时间。】

Note that in 11.2 and higher LGWR may dynamically switch from the default post/wait mode to a polling mode where it will maintain it’s writing progress in an in-memory structure and sessions waiting on ‘log file sync’ can periodically check that structure (i.e. poll) to see if LGWR has progressed far enough such that the redo records representing their transactions have made it to disk. In that case the wait time will span from posting LGWR until the session sees sufficient progress has been made.

【翻译:请注意,在11.2(确切版本是11.2.0.3.0)及更高版本中,LGWR可以动态地从默认的post/wait模式切换到polling模式(原模式为:post/wait),在这种模式下,它将保持其在内存结构中的写入进度,并且等待“log file sync”的会话可以定期检查该结构(即polling)以查看LGWR是否已取得足够的进展,以使代表其事务的redo记录已进入磁盘。 在这种情况下,等待时间将从发布LGWR到会话看到足够的进展为止。】

Compare the average wait time for ‘log file sync’ to the average wait time for ‘log file parallel write’.

【翻译:将“log file sync”的平均等待时间与“log file parallel write”的平均等待时间进行比较】

Wait event ‘log file parallel’ write is waited for by LGWR while the actual write operation to the redo is occurring. The duration of the event shows the time waited for the IO portion of the operation to occur. For more information on “log file parallel write” see: Document:34583.1 WAITEVENT: “log file parallel write” Reference Note

【翻译:“log file paralle write”是等待lgwr对redo记录进行实际的写入操作,事件的持续表示为等待操作的IO部分发生的时间。 有关“日志文件并行写入”的更多信息,请参见:Document:34583.1 WAITEVENT: “log file parallel write” Reference Note】

To identify a potential high commit rate, if the average wait time for ‘log file sync’ is much higher than the average wait time for ‘log file parallel write’, then this means that most of the time waiting is not due to waiting for the redo to be written and thus slow IO is not the cause of the problem. The surplus time is CPU activity and is most commonly contention caused by over committing.

【为了确定潜在的高提交率,如果“log file sync”的平均等待时间比“log file parallel write”的平均等待时间高得多,那么这意味着大多数等待时间不是由于等待redo记录的写入,从而导致的问题的原因并不是IO慢。 (排除IO消耗的时间后,log file sync等待的)剩余时间是CPU的活动 ,最常见的争用是由于过量提交引起的。】

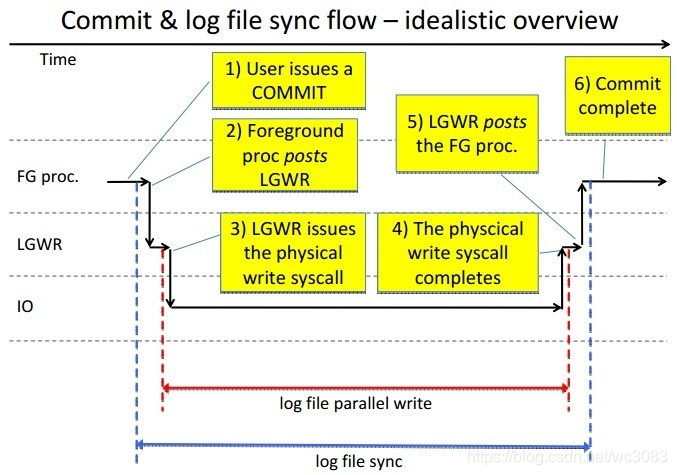

从官方文档的解释来看,commit后大体分为以下几个阶段:

1)用户进程发起commit;【CPU资源消耗】

2)用户进程通知lgwr写日志;【CPU资源消耗】

3)lgwr接收到请求,并开始写日志(从内存写入物理文件);【I/O资源消耗】

4)lgwr完成日志写入;【I/O资源消耗】

5)lgwr通知用户进程已写入完成;【CPU资源消耗】

6)用户进程接收到写入完成的通知。【CPU资源消耗】

而log file sync和log file parallel write就是在1-6的阶段中,通过官方文档的理解,可以将判断出这两个等待事件各自对应哪几个阶段。为了看起来更直观,这里引用一下tanel poder大师的图:

从图中以及对应阶段来看能清晰的看到:

log file sync等待包含阶段为:2,3,4,5

log file parallel write等待包含阶段为:3,4

我们可以看出,正常情况下最慢的环节应该是在3,4阶段,即物理I/O操作,而其他阶段涉及到的动作都是如CPU调度,进程间通信等,而这些动作一般都是非常快的。

说到最慢的物理I/O环节,再对应2个等待事件以及6个阶段,可以这么说:log file parallel write等待是log file sync等待全过程的一部分。这也是为什么碰到log file sync等待就需要同步分析log file parallel write等待的原因了,那就是评估存储的IO性能问题。因为log file parallel write的3,4步就是一个物理写的耗时,且是log file sync等待的一部分。如果log file parallel write等待耗时较高,且和log file sync差不了太多,那就说明log file sync和log file parallel wirte最大的瓶颈在于存储IO性能。而如果log file parallel write耗时不高,但是log file sync耗时较高,那就说明存储性能是没有问题的,问题就出在log file sync的其他阶段了。我们可以根据得到的结论对log file sync进行以下几个方面的分析。

二、分析与方法

1、COMMIT次数过多(即图中的1-2,5-6阶段)

既然log file sync是由于commit导致的,那么commit次数过多自然会导致log file sync等待次数的增加,导致比较严重的等待。反之,如果log file sync的次数很高,但是等待的平均时间不高,则很可能是由于commit过于频繁导致的。

我们可以到数据库分析commit次数的变化量是否有突增:

--commit次数变化量

select b.end_interval_time ,

value-lag(value,1) over(order by a.snap_id) "user commits"

from DBA_HIST_SYSSTAT a,dba_hist_snapshot b

where a.snap_id=b.snap_id and a.snap_id >=&begin_snapid and a.stat_name in('user commits')

--当前内存中commits较高的语句

SELECT SYSDATE,

SE.USERNAME,

SE.SID,

SE.SERIAL#,

SE.SQL_ID,

SE.STATUS,

SE.MACHINE,

SE.OSUSER,

ROUND(ST.VALUE) "COMMIT TIMES",

SA.SQL_TEXT

FROM V$SESSION SE, V$SESSTAT ST, V$SQLAREA SA

WHERE SE.SID = ST.SID

AND ST.STATISTIC# =

(SELECT STATISTIC# FROM V$STATNAME WHERE NAME = 'user commits')

--AND SE.USERNAME IS NOT NULL

AND ST.VALUE > 1000

AND SE.SQL_ADDRESS = SA.ADDRESS

AND SE.SQL_HASH_VALUE = SA.HASH_VALUE

ORDER BY "COMMIT TIMES" DESC;

如果commit次数过多,则建议解决异常commit语句,考虑合并commit

2、IO性能问题(即图中的3-4阶段)

概述的最后面有说明到,可以与log file parallel write等待事件进行比较:

如果log file sync突增期间,log file parallel write也突增,则很可能是IO问题导致。可以排查这几个方面:

(1)存储端整体性能问题(这就不多解释了,通常都建议使用IO性能较好存储,而且是写性能优先)

(2)IO尖刺:如果log file parallel write的等待时间比较高,而log file sync的平均等待时间恰好在正常的I/O范围内,请注意这可能是因为I/O中存在峰值,而平均值是没法显示出来的,这种情况就可以借助等待事件直方图,或者直接看AWR、OSWatcher等来进行分析。

(3)高IO消耗sql/应用(导致IO瓶颈,自然整体效率下降):分析服务器,是什么导致了高IO消耗。大部分数据库服务器只有数据库,所以一般情况下都是SQL导致的。

(4)IO争用:redolog和其他文件防止一起,存在IO争用。此时就建议将online redo log分离出来,放到独立的磁盘上。

--iowait 检查log file sync、log file parallel write以及各类等待事件的耗时

with t as (

select s.snap_id,

s.instance_number,

s.end_interval_time,

total_waits - lag(total_waits, 1) over(partition by s.instance_number order by s.snap_id) waits,

(time_waited_micro - lag(time_waited_micro, 1) over(partition by s.instance_number order by s.snap_id)) / 1000 twt

from dba_hist_system_event ev, dba_hist_snapshot s

where ev.instance_number = s.instance_number

and ev.snap_id = s.snap_id

and event_name = 'log file sync' --带入所需的等待事件名

and s.end_interval_time BETWEEN sysdate - 3 AND sysdate)

select to_char(end_interval_time, 'YYYYMMDD HH24:MI'),

instance_number,

sum(waits),

sum(twt),

round(sum(twt) / sum(waits), 2) wt

from t

group by to_char(end_interval_time, 'YYYYMMDD HH24:MI'), instance_number

order by 1, instance_number;

--iowait直方图 检查各类等待事件在对应毫秒区间的触发次数

select b.end_interval_time,

sum(decode(a.wait_time_milli,1,a.wait_count,null))-sum(decode(c.wait_time_milli,1,c.wait_count,null)) wait_time_milli_1,

sum(decode(a.wait_time_milli,2,a.wait_count,null))-sum(decode(c.wait_time_milli,2,c.wait_count,null)) wait_time_milli_2,

sum(decode(a.wait_time_milli,4,a.wait_count,null))-sum(decode(c.wait_time_milli,4,c.wait_count,null)) wait_time_milli_4,

sum(decode(a.wait_time_milli,8,a.wait_count,null))-sum(decode(c.wait_time_milli,8,c.wait_count,null)) wait_time_milli_8,

sum(decode(a.wait_time_milli,16,a.wait_count,null))-sum(decode(c.wait_time_milli,16,c.wait_count,null)) wait_time_milli_16,

sum(decode(a.wait_time_milli,32,a.wait_count,null))-sum(decode(c.wait_time_milli,32,c.wait_count,null)) wait_time_milli_32,

sum(decode(a.wait_time_milli,64,a.wait_count,null))-sum(decode(c.wait_time_milli,64,c.wait_count,null)) wait_time_milli_64,

sum(decode(a.wait_time_milli,128,a.wait_count,null))-sum(decode(c.wait_time_milli,128,c.wait_count,null)) wait_time_milli_128,

sum(decode(a.wait_time_milli,256,a.wait_count,null))-sum(decode(c.wait_time_milli,256,c.wait_count,null)) wait_time_milli_256,

sum(decode(a.wait_time_milli,512,a.wait_count,null))-sum(decode(c.wait_time_milli,512,c.wait_count,null)) wait_time_milli_512,

sum(decode(a.wait_time_milli,1024,a.wait_count,null))-sum(decode(c.wait_time_milli,1024,c.wait_count,null)) wait_time_milli_1024,

sum(decode(a.wait_time_milli,2048,a.wait_count,null))-sum(decode(c.wait_time_milli,2048,c.wait_count,null)) wait_time_milli_2048,

sum(decode(a.wait_time_milli,4096,a.wait_count,null))-sum(decode(c.wait_time_milli,4096,c.wait_count,null)) wait_time_milli_4096,

sum(decode(a.wait_time_milli,8192,a.wait_count,null))-sum(decode(c.wait_time_milli,8192,c.wait_count,null)) wait_time_milli_8192

from dba_hist_event_histogram a ,dba_hist_snapshot b,dba_hist_event_histogram c

where a.event_name='log file sync' and a.snap_id=b.snap_id --带入所需的等待事件名

and b.end_interval_time BETWEEN sysdate - 3 AND sysdate

and a.snap_id=c.snap_id + 1

and a.wait_time_milli=c.wait_time_milli

and a.event_name=c.event_name

group by b.end_interval_time

order by end_interval_time desc;

3、日志/日志量问题(全面阶段)

(1)redo log 大小/组数量不合理,一般伴随着各类log file switch等待

(2)语句导致了大量的日志生成:

日志量激增,导致日志频繁切换(一般同样伴随着各类log file switch等待)。而日志切换期间无法写日志,又阻塞commit引起的日志写,所以之前的1,2步变慢。

日志切换期间lgwr要做更多的工作,比如通知arch进行归档,从控制文件中找出新日志组,打开新的日志组,在新日志组中写入初始化信息等等,导致3-5步变慢,总体自然更慢

4、log buffer问题(全面阶段)

log buffer不足:

(1)前台进程需要等待lgwr释放log buffer才能继续分配。

(2)log buffer较小也会导致频繁的日志写操作

5、隐含参数问题(lgwr工作机制问题)

ORACLE从11gR2开始,为lgwr写日志引入了polling机制,而在以前只有post/wait机制。【官方文档说明请参考:Adaptive Log File Sync Optimization (Doc ID 1541136.1)】

同时引入了一个隐含参数,“_use_adaptive_log_file_sync”即通过定义该参数来确定使用哪个机制。

在11.2.0.3以下,该参数的默认值为false,即只启用post/wait机制。

从11.2.0.3开始,该参数的默认值为true,即ORACLE会在post/wait和polling机制之间自适应切换。

Post/wait机制下:lgwr执行写入操作完成后,会立刻通知前台进程已经写入完成,因此log file sync等待时间短,但lgwr/cpu的负担会稍重一点。

Polling机制下:lgwr执行写入操作完成后,不再通知前台进程已经写入完成。而前台进程会sleep并timeout后去检查log buffer中的内容是否被写入了磁盘。该机制下,解放了一部分lgwr的工作,并节约CPU资源,但是会导致待commit的进程较长时间处于log file sync等待下。

查看隐含参数值:

select a.ksppinm name,b.ksppstvl value, a.ksppdesc description from x$ksppi a, x$ksppcv b where a.indx = b.indx and a.ksppinm in ('_use_adaptive_log_file_sync');

我们可以在lgwr进程的trace文件中看到一些切换记录:

cd $ORACLE_BASE/diag/rdbms/$DB_UNIQUE_NAME/$ORACLE_SID/trace

ls -ltr | grep lgwr

egrep -i "polling|post" testdb_lgwr_29068.trc

结果类似为:

Log file sync switching to polling

Log file sync switching to post/wait

建议:在11.2.0.3.0以及后续的版本上,调整该参数,设置为false:

alter system set "_use_adaptive_log_file_sync"=false;

6、CPU问题

在网上甚至不少书里,都把log file sync等待的责任推到IO问题上。虽然在大多数情况下,这样的推断是没有问题的,但是却不能一概而论。从一开始官方文档以及我们分析得出的6个阶段和图表来看,log file sync也是很依赖CPU资源的。这里为了证明这个理论,再次引用上面举例出来官方文档的那句话 “The surplus time is CPU activity and is most commonly contention caused by over committing.”

个人认为这句话最重要的并不是后面那段“大部分是由于过量commit导致的”。而应该是前面那一句“(排除IO消耗的时间后,log file sync等待的)剩余时间是CPU的动作”。这就表明了CPU也是造成log file sync慢的一个重要原因,只不过commit次数太多在这种场景下比较显眼而已。

(1)CPU资源瓶颈:比如load average较高(top命令),CPU使用率较高(top命令)、CPU运行队列过长(vmstat命令)。导致了进程间的通信、调度延迟。

检查导致CPU瓶颈的原因,到底是硬件问题,还是业务(大部分情况下应该都是SQL)导致。然后根据结论反馈问题给对应的人员进行处理(硬件厂商/应用研发)

(2)lgwr优先级不够:_high_priority_processes隐含参数,或者$ORACLE_HOME/bin/oradism文件属主和权限异常(一般是由于root.sh未执行/执行出错),都会影响lgwr进程是否可以运行在高优先级。

正确权限/属主为:

cnsz17pl7300:testdb > ls -l $ORACLE_HOME/bin/oradism

-rwsr-x— 1 root oinstall 71758 Sep 17 2011 /dba/oracle/product/11.2.0.3.15/bin/oradism

7、其他场景/优化

(1)参数commit_logging:了解即可,极不推荐修改。类似mysql的双1模式。

(2)BUG,略