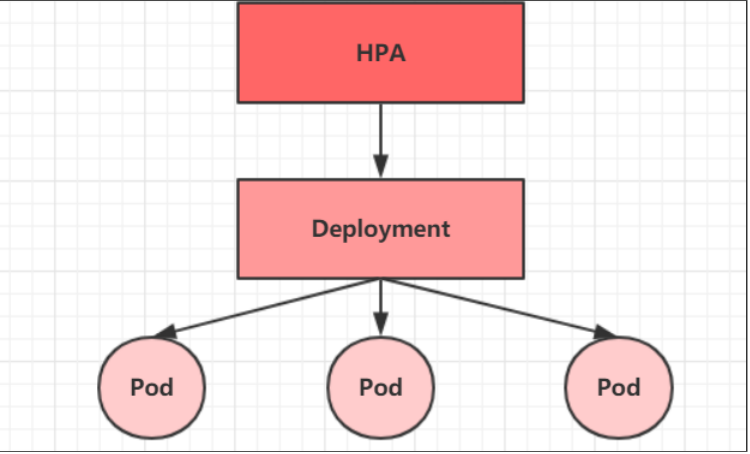

Horizontal Pod Autoscaler (HPA) Controller

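The Horizontal Pod Autoscaler (HPA) is a Kubernetes controller that automatically adjusts the number of Pods according to the current load. It can scale on CPU utilization, memory usage, or other chosen metrics so that the application keeps performing well. The `autoscaling/v1` API used later in this article supports CPU only; memory and custom metrics require `autoscaling/v2`.

The HPA obtains the utilization of each Pod and compares it with the target defined in the HPA. It then calculates how many replicas are needed and adjusts the Pod count. Like the Deployment covered earlier, an HPA is itself a Kubernetes resource object. It tracks the load of all target Pods managed by a controller such as a Deployment, ReplicaSet, or ReplicationController (RC), and decides whether their replica count needs to change. That is how the HPA works.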
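The Kubernetes documentation describes the calculation step as a proportional rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The controller skips the change when the ratio is within a tolerance, which is 0.1 by default. The Python sketch below illustrates only that rule. The function name `desired_replicas` is made up for illustration, and the sketch leaves out the min/max bounds and rate limits that the real controller applies.

```python
import math


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float, tolerance: float = 0.1) -> int:
    """Proportional HPA rule: ceil(current_replicas * current_value / target_value).

    Returns current_replicas unchanged when the usage ratio is within
    `tolerance` of 1.0 (0.1 mirrors the documented default).
    """
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


print(desired_replicas(1, 28, 3))   # 10: 28% measured against a 3% target
print(desired_replicas(10, 3, 3))   # 10: ratio 1.0, inside the tolerance
```

The test later in this article has 1 replica at 28% CPU against a 3% target. For those numbers, the rule on its own asks for 10 replicas.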

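HPA works as follows:

- Metric collection: Metrics Server gathers Pod metrics such as CPU and memory usage. The HPA controller reads them through the Metrics API.
- Threshold comparison: the HPA compares these metrics with the target values defined in the HPA spec.
- Automatic scaling: if the load is above the target, the HPA adds Pods to spread the load. If the load is below the target, it removes Pods to save resources. The Pod count always stays between `minReplicas` and `maxReplicas`.
- Continuous monitoring: the HPA controller repeats this check periodically (every 15 seconds by default) and adjusts the Pod count as needed.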
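Together, the four steps form a reconciliation loop. The sketch below is a minimal, hypothetical version of that loop and is not the controller's actual code. It assumes two callbacks: `get_usage_pct` stands in for reading the Metrics API, and `set_replicas` stands in for updating the Deployment's scale. It uses the 15-second default sync period and an `iterations` bound so the demo terminates. Rate limits and stabilization are ignored.

```python
import math
import time
from typing import Callable


def autoscale_loop(get_usage_pct: Callable[[], float],
                   set_replicas: Callable[[int], None],
                   replicas: int, target_pct: float,
                   min_replicas: int, max_replicas: int,
                   iterations: int, sync_period_s: float = 15.0,
                   tolerance: float = 0.1) -> int:
    """Collect -> compare -> scale, repeated once per sync period."""
    for _ in range(iterations):
        usage = get_usage_pct()                      # 1. collect the metric
        ratio = usage / target_pct                   # 2. compare with the target
        if abs(ratio - 1.0) > tolerance:
            desired = math.ceil(replicas * ratio)    # 3. scale proportionally...
            desired = max(min_replicas, min(max_replicas, desired))  # ...within bounds
            if desired != replicas:
                set_replicas(desired)
                replicas = desired
        time.sleep(sync_period_s)                    # 4. wait, then monitor again
    return replicas
```

The real controller runs indefinitely. The `iterations` parameter exists only so that a test or demo can stop the loop.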

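Install Metrics Server

Metrics Server is a key component of a Kubernetes cluster. It collects resource usage, such as CPU and memory, from the kubelets and reports it through the Metrics API. This data is essential for automation tools that manage resources, such as the HPA (Horizontal Pod Autoscaler).

Choose a Metrics Server version: pick a release that is compatible with your Kubernetes version. Compatibility information for some releases:

| Metrics Server version | Metrics API group/version | Supported Kubernetes versions |
|---|---|---|
| 0.6.x | metrics.k8s.io/v1beta1 | 1.19+ |
| 0.5.x | metrics.k8s.io/v1beta1 | \*1.8+ |
| 0.4.x | metrics.k8s.io/v1beta1 | \*1.8+ |
| 0.3.x | metrics.k8s.io/v1beta1 | 1.8-1.21 |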

```shell
[root@k8s-master ~]# cat components.yaml
```

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=10250
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s  # metric resolution (scrape interval)
        - --kubelet-insecure-tls   # skip verifying the kubelets' serving certificates; test clusters only
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.7.1  # mirror of the upstream image
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 10250
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - ALL
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
          seccompProfile:
            type: RuntimeDefault
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100
```

```shell
[root@k8s-master ~]# kubectl apply -f components.yaml
serviceaccount/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
service/metrics-server created
deployment.apps/metrics-server created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
[root@k8s-master ~]# kubectl top node
W0119 04:05:38.819138   80109 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
Error from server (ServiceUnavailable): the server is currently unable to handle the request (get nodes.metrics.k8s.io)
```

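After running `kubectl apply`, wait a short while for the metrics-server Pod to start. Until it is ready, `kubectl top` fails with the `ServiceUnavailable` error shown above.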
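The wait can also be scripted instead of retried by hand. The sketch below assumes that `kubectl` is on the PATH and that it exits with a non-zero status while the Metrics API still returns `ServiceUnavailable`. The names `wait_for_metrics` and `run_command` are illustrative. The command runner and the sleep function are parameters, so the loop can be tested without a cluster.

```python
import subprocess
import time
from typing import Callable, List


def run_command(cmd: List[str]) -> int:
    """Run a command quietly and return its exit status (127 if it is not installed)."""
    try:
        return subprocess.run(cmd, capture_output=True, text=True).returncode
    except FileNotFoundError:
        return 127


def wait_for_metrics(run: Callable[[List[str]], int] = run_command,
                     timeout_s: float = 300.0, interval_s: float = 10.0,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Retry `kubectl top node` until it succeeds or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        if run(["kubectl", "top", "node"]) == 0:
            return True          # the Metrics API answered
        if time.monotonic() >= deadline:
            return False         # still ServiceUnavailable (or another error)
        sleep(interval_s)
```

Calling `wait_for_metrics()` right after `kubectl apply -f components.yaml` blocks for up to five minutes. It returns `True` once `kubectl top node` starts working.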

- Check node CPU and memory usage:

```shell
[root@k8s-master ~]# kubectl top node
W0119 04:08:07.973065   81776 top_node.go:119] Using json format to get metrics. Next release will switch to protocol-buffers, switch early by passing --use-protocol-buffers flag
NAME         CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master   351m         17%    1907Mi          51%
k8s-node1    231m         11%    1350Mi          36%
k8s-node2    208m         10%    826Mi           48%
```
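`kubectl top` reads the Metrics API, and its figures use Kubernetes quantity suffixes: `m` means millicores (351m = 0.351 cores) and `Mi` means mebibytes (2^20 bytes). The sketch below fetches the same node data with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes` and converts the quantities to plain numbers. The raw API may report CPU in nanocores (`n`), so the parser handles that suffix too. The helper names are hypothetical, and the parser covers only the common suffixes, not exponent forms such as `1e3`. The sample document is built by hand from the table above; it was not captured from a cluster.

```python
import json
import subprocess
from typing import Dict, Tuple

_SUB_UNIT = {"n": 10**9, "u": 10**6, "m": 10**3}              # divide: nano, micro, milli
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}  # multiply
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(q: str) -> float:
    """Convert a quantity such as '351m', '1907Mi' or '2' to a plain number."""
    if q[-2:] in _BINARY:
        return float(q[:-2]) * _BINARY[q[-2:]]
    if q[-1] in _SUB_UNIT:
        return float(q[:-1]) / _SUB_UNIT[q[-1]]
    if q[-1] in _DECIMAL:
        return float(q[:-1]) * _DECIMAL[q[-1]]
    return float(q)


def node_usage(metrics: dict) -> Dict[str, Tuple[float, float]]:
    """Map node name -> (CPU cores, memory bytes) from a NodeMetricsList document."""
    return {
        item["metadata"]["name"]: (parse_quantity(item["usage"]["cpu"]),
                                   parse_quantity(item["usage"]["memory"]))
        for item in metrics["items"]
    }


def fetch_node_metrics() -> dict:
    """Query the Metrics API directly (needs kubectl and cluster access)."""
    out = subprocess.run(
        ["kubectl", "get", "--raw", "/apis/metrics.k8s.io/v1beta1/nodes"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)


# Hand-built sample using the k8s-master row from the `kubectl top node` table.
sample = {"items": [{"metadata": {"name": "k8s-master"},
                     "usage": {"cpu": "351m", "memory": "1907Mi"}}]}
print(node_usage(sample))  # {'k8s-master': (0.351, 1999634432.0)}
```

On a live cluster, `node_usage(fetch_node_metrics())` returns the same kind of mapping for every node.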

Check the Pods in `kube-system`. The metrics-server Pod should now be `Running`:

```shell
[root@k8s-master ~]# kubectl get pods -n kube-system
NAME                                       READY   STATUS    RESTARTS   AGE
calico-kube-controllers-697d846cf4-79hpj   1/1     Running   1          24d
calico-node-58ss2                          1/1     Running   1          24d
calico-node-gc547                          1/1     Running   1          24d
calico-node-hdhxf                          1/1     Running   1          24d
coredns-6f6b8cc4f6-5nbb6                   1/1     Running   1          24d
coredns-6f6b8cc4f6-q9rhc                   1/1     Running   1          24d
etcd-k8s-master                            1/1     Running   1          24d
kube-apiserver-k8s-master                  1/1     Running   1          24d
kube-controller-manager-k8s-master         1/1     Running   1          24d
kube-proxy-7hp6l                           1/1     Running   1          24d
kube-proxy-ddhnb                           1/1     Running   1          24d
kube-proxy-dwcgd                           1/1     Running   1          24d
kube-scheduler-k8s-master                  1/1     Running   1          24d
metrics-server-84d7958dc4-p7gwp            1/1     Running   0          15m
```

Prepare the Deployment and Service:

```shell
[root@k8s-master ~]# cat k8s-hpa-deploy-svc.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  namespace: dev
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
        ports:
        - containerPort: 80
        resources: # resource requests (HPA CPU utilization is measured against these)
          requests:
            cpu: "100m" # 100m = 100 millicores, i.e. 0.1 CPU
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc # no namespace set: created in the current namespace, and it selects only Pods in that namespace
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
  - port: 80 # Service port
    name: nginx
    targetPort: 80 # Pod (container) port
    protocol: TCP
    nodePort: 30010 # port opened on every node, for browser access
```

```shell
[root@k8s-master ~]# kubectl get svc,deploy,pod -n dev -o wide
NAME                           READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES         SELECTOR
deployment.apps/nginx-deploy   1/1     1            1           6s    nginx        nginx:1.17.1   app=nginx

NAME                                READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
pod/nginx                           1/1     Running   0          12m   10.244.36.73   k8s-node1   <none>           <none>
pod/nginx-deploy-65794dcb96-phscq   1/1     Running   0          6s    10.244.36.75   k8s-node1   <none>           <none>
[root@k8s-master ~]# kubectl get svc,deployment,pod -n dev -o wide
NAME                           READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES         SELECTOR
deployment.apps/nginx-deploy   1/1     1            1           47s   nginx        nginx:1.17.1   app=nginx

NAME                                READY   STATUS    RESTARTS   AGE   IP             NODE        NOMINATED NODE   READINESS GATES
pod/nginx                           1/1     Running   0          12m   10.244.36.73   k8s-node1   <none>           <none>
pod/nginx-deploy-65794dcb96-phscq   1/1     Running   0          47s   10.244.36.75   k8s-node1   <none>           <none>
```

Create a Service:

```shell
[root@k8s-master ~]# kubectl expose deployment nginx-deploy --type=NodePort --port=80 -n dev
service/nginx-deploy exposed
[root@k8s-master ~]# kubectl get svc,deployment,pod -n dev -o wide
NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE   SELECTOR
service/nginx-deploy   NodePort   10.96.115.240   <none>        80:31580/TCP   4s    app=nginx

NAME                           READY   UP-TO-DATE   AVAILABLE   AGE     CONTAINERS   IMAGES         SELECTOR
deployment.apps/nginx-deploy   1/1     1            1           2m10s   nginx        nginx:1.17.1   app=nginx

NAME                                READY   STATUS    RESTARTS   AGE     IP             NODE        NOMINATED NODE   READINESS GATES
pod/nginx                           1/1     Running   0          14m     10.244.36.73   k8s-node1   <none>           <none>
pod/nginx-deploy-65794dcb96-phscq   1/1     Running   0          2m10s   10.244.36.75   k8s-node1   <none>           <none>
[root@k8s-master ~]# kubectl get svc,deployment,pod -n dev -o wide
NAME                   TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE   SELECTOR
service/nginx-deploy   NodePort   10.96.115.240   <none>        80:31580/TCP   15s   app=nginx

NAME                           READY   UP-TO-DATE   AVAILABLE   AGE     CONTAINERS   IMAGES         SELECTOR
deployment.apps/nginx-deploy   1/1     1            1           2m21s   nginx        nginx:1.17.1   app=nginx

NAME                                READY   STATUS    RESTARTS   AGE     IP             NODE        NOMINATED NODE   READINESS GATES
pod/nginx                           1/1     Running   0          14m     10.244.36.73   k8s-node1   <none>           <none>
pod/nginx-deploy-65794dcb96-phscq   1/1     Running   0          2m21s   10.244.36.75   k8s-node1   <none>           <none>
```

```shell
[root@k8s-master ~]# curl 10.96.115.240:80
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>

<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

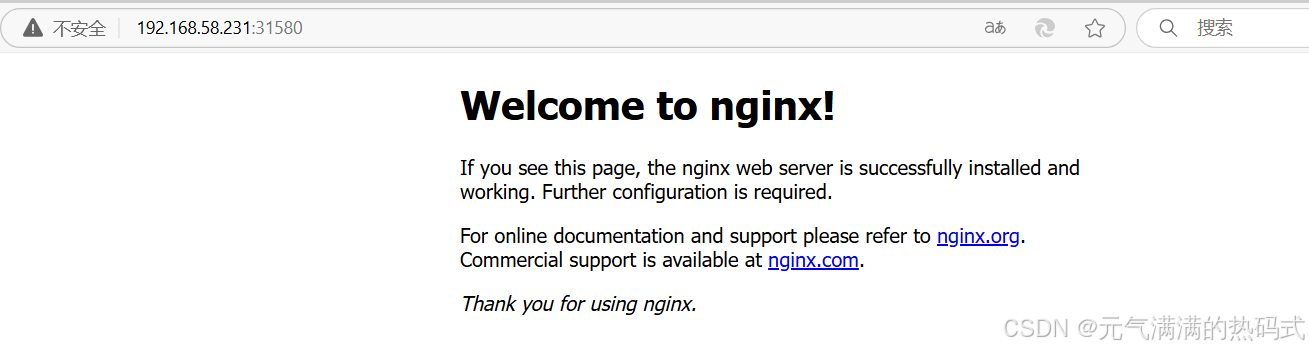

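Open the VM's IP address on port 31580 in a browser to reach the nginx page.

- Create the HPA:

```shell
[root@k8s-master ~]# cat k8s-hpa.yaml
```

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: k8s-hpa # no namespace set: created in the current namespace, which must also contain the target Deployment
spec:
  minReplicas: 1 # minimum number of Pods
  maxReplicas: 10 # maximum number of Pods
  targetCPUUtilizationPercentage: 3 # target average CPU utilization: scale out when usage exceeds 3% of the Pods' CPU request
  scaleTargetRef: # the workload to scale (the nginx Deployment)
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deploy
```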
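The HPA measures CPU utilization against the Pods' CPU *request*, not their limit. That is why `nginx-deploy` sets `requests.cpu: 100m`. If a Pod has no CPU request, its utilization is undefined and the HPA cannot act on it. The sketch below shows that calculation. It assumes usage and requests are given in millicores per Pod. Summing usage and requests across Pods follows, to our understanding, how the Kubernetes replica calculator averages utilization. The function names are hypothetical.

```python
from typing import Sequence


def cpu_utilization_pct(usage_millicores: Sequence[float],
                        request_millicores: Sequence[float]) -> float:
    """CPU utilization as the HPA defines it: total usage / total request, in %."""
    total_request = sum(request_millicores)
    if total_request <= 0:
        # Without CPU requests the utilization is undefined and the HPA cannot act.
        raise ValueError("every Pod needs a CPU request")
    return 100.0 * sum(usage_millicores) / total_request


def target_usage_millicores(request_millicores: float, target_pct: float) -> float:
    """Average usage per Pod at which the utilization equals the target."""
    return request_millicores * target_pct / 100.0


# A Pod requesting 100m and using 28m (hypothetical usage) reads as 28%.
print(cpu_utilization_pct([28], [100]))   # 28.0
# With a 100m request, the 3% target corresponds to 3 millicores per Pod.
print(target_usage_millicores(100, 3))    # 3.0
```

A 3% target on a 100m request is very low, so even light traffic pushes this Deployment over the target. That makes the scaling easy to observe in a lab.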

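Testing:

```shell
kubectl run -i --tty load-generator --rm --image=busybox --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://192.168.65.100:30010; done"
```

This command uses kubectl to create a Pod named `load-generator`. The Pod repeatedly runs `wget` against http://192.168.65.100:30010 and waits 0.01 seconds between requests. Because of `--rm`, the Pod is deleted when the command exits.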
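Pulling `busybox` can be slow; note the `ErrImagePull` in the Pod watch below. In that case, a similar but bounded load can be generated from any machine that can reach the NodePort. The sketch below uses only the Python standard library. `generate_load` is a hypothetical helper, and its request count and timeout are arbitrary choices. Unlike the busybox loop, it stops after a fixed number of requests.

```python
import time
import urllib.request


def generate_load(url: str, requests: int, interval_s: float = 0.01,
                  timeout_s: float = 2.0) -> int:
    """Send `requests` sequential GETs to `url`, sleeping between them.

    Mirrors `while sleep 0.01; do wget -q -O- URL; done`, but stops after a
    fixed count. Returns how many requests received HTTP 200.
    """
    ok = 0
    for _ in range(requests):
        try:
            with urllib.request.urlopen(url, timeout=timeout_s) as resp:
                resp.read()                  # consume the body, like wget -O-
                if resp.status == 200:
                    ok += 1
        except OSError:
            pass  # refused, timed out or HTTP error: count as a failure, keep going
        time.sleep(interval_s)
    return ok
```

For example, `generate_load("http://192.168.65.100:30010", requests=10_000)` sends about as many requests as the busybox loop would in a couple of minutes, depending on response time.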

- `kubectl get deploy -w`

```shell
[root@k8s-master ~]# kubectl get deploy -w
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
nginx-deploy   1/1     1            1           149m
nginx-deploy   1/4     1            1           151m
nginx-deploy   1/4     1            1           151m
nginx-deploy   1/4     1            1           151m
nginx-deploy   1/4     4            1           151m
nginx-deploy   2/4     4            2           151m
nginx-deploy   3/4     4            3           151m
nginx-deploy   4/4     4            4           151m
nginx-deploy   4/8     4            4           151m
nginx-deploy   4/8     4            4           151m
nginx-deploy   4/8     4            4           151m
nginx-deploy   4/8     8            4           151m
nginx-deploy   5/8     8            5           151m
nginx-deploy   6/8     8            6           152m
nginx-deploy   7/8     8            7           152m
nginx-deploy   8/8     8            8           152m
nginx-deploy   8/10    8            8           152m
nginx-deploy   8/10    8            8           152m
nginx-deploy   8/10    8            8           152m
nginx-deploy   8/10    10           8           152m
nginx-deploy   9/10    10           9           152m
nginx-deploy   10/10   10           10          152m
```

- `kubectl get pods -w`

```shell
[root@k8s-master ~]# kubectl get pods -w
NAME                            READY   STATUS              RESTARTS   AGE
nginx-deploy-65794dcb96-8c9r8   1/1     Running             0          149m
load-generator                  0/1     Pending             0          0s
load-generator                  0/1     Pending             0          0s
load-generator                  0/1     ContainerCreating   0          0s
load-generator                  0/1     ContainerCreating   0          2s
load-generator                  0/1     ErrImagePull        0          33s
load-generator                  0/1     ImagePullBackOff    0          46s
load-generator                  1/1     Running             0          55s
nginx-deploy-65794dcb96-9dp9v   0/1     Pending             0          0s
nginx-deploy-65794dcb96-9dp9v   0/1     Pending             0          0s
nginx-deploy-65794dcb96-drgxc   0/1     Pending             0          0s
nginx-deploy-65794dcb96-6s8ng   0/1     Pending             0          0s
nginx-deploy-65794dcb96-6s8ng   0/1     Pending             0          0s
nginx-deploy-65794dcb96-drgxc   0/1     Pending             0          0s
nginx-deploy-65794dcb96-9dp9v   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-drgxc   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-6s8ng   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-drgxc   0/1     ContainerCreating   0          1s
nginx-deploy-65794dcb96-drgxc   1/1     Running             0          3s
nginx-deploy-65794dcb96-9dp9v   0/1     ContainerCreating   0          4s
nginx-deploy-65794dcb96-6s8ng   0/1     ContainerCreating   0          4s
nginx-deploy-65794dcb96-9dp9v   1/1     Running             0          5s
nginx-deploy-65794dcb96-6s8ng   1/1     Running             0          6s
nginx-deploy-65794dcb96-ctl4k   0/1     Pending             0          0s
nginx-deploy-65794dcb96-ctl4k   0/1     Pending             0          0s
nginx-deploy-65794dcb96-mklzt   0/1     Pending             0          0s
nginx-deploy-65794dcb96-7vj6q   0/1     Pending             0          0s
nginx-deploy-65794dcb96-ctl4k   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-bf2v5   0/1     Pending             0          0s
nginx-deploy-65794dcb96-7vj6q   0/1     Pending             0          0s
nginx-deploy-65794dcb96-mklzt   0/1     Pending             0          0s
nginx-deploy-65794dcb96-bf2v5   0/1     Pending             0          0s
nginx-deploy-65794dcb96-7vj6q   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-mklzt   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-bf2v5   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-ctl4k   0/1     ContainerCreating   0          2s
nginx-deploy-65794dcb96-mklzt   0/1     ContainerCreating   0          3s
nginx-deploy-65794dcb96-7vj6q   0/1     ContainerCreating   0          3s
nginx-deploy-65794dcb96-bf2v5   0/1     ContainerCreating   0          3s
nginx-deploy-65794dcb96-mklzt   1/1     Running             0          3s
nginx-deploy-65794dcb96-ctl4k   1/1     Running             0          4s
nginx-deploy-65794dcb96-7vj6q   1/1     Running             0          4s
nginx-deploy-65794dcb96-bf2v5   1/1     Running             0          5s
nginx-deploy-65794dcb96-drvg4   0/1     Pending             0          0s
nginx-deploy-65794dcb96-drvg4   0/1     Pending             0          0s
nginx-deploy-65794dcb96-6c8lr   0/1     Pending             0          0s
nginx-deploy-65794dcb96-6c8lr   0/1     Pending             0          0s
nginx-deploy-65794dcb96-drvg4   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-6c8lr   0/1     ContainerCreating   0          0s
nginx-deploy-65794dcb96-drvg4   0/1     ContainerCreating   0          3s
nginx-deploy-65794dcb96-6c8lr   0/1     ContainerCreating   0          4s
nginx-deploy-65794dcb96-drvg4   1/1     Running             0          4s
nginx-deploy-65794dcb96-6c8lr   1/1     Running             0          5s
```

- `kubectl get hpa -w`

```shell
[root@k8s-master ~]# kubectl get hpa -w
NAME      REFERENCE                 TARGETS        MINPODS   MAXPODS   REPLICAS   AGE
k8s-hpa   Deployment/nginx-deploy   <unknown>/3%   1         10        1          2m40s
k8s-hpa   Deployment/nginx-deploy   28%/3%         1         10        1          4m32s
k8s-hpa   Deployment/nginx-deploy   29%/3%         1         10        4          4m47s
k8s-hpa   Deployment/nginx-deploy   9%/3%          1         10        8          5m2s
k8s-hpa   Deployment/nginx-deploy   5%/3%          1         10        10         5m17s
k8s-hpa   Deployment/nginx-deploy   3%/3%          1         10        10         5m32s
k8s-hpa   Deployment/nginx-deploy   2%/3%          1         10        10         6m47s
k8s-hpa   Deployment/nginx-deploy   3%/3%          1         10        10         7m2s
k8s-hpa   Deployment/nginx-deploy   2%/3%          1         10        10         7m17s
k8s-hpa   Deployment/nginx-deploy   3%/3%          1         10        10         7m33s
k8s-hpa   Deployment/nginx-deploy   4%/3%          1         10        10         15m
k8s-hpa   Deployment/nginx-deploy   3%/3%          1         10        10         16m
k8s-hpa   Deployment/nginx-deploy   2%/3%          1         10        10         16m
k8s-hpa   Deployment/nginx-deploy   3%/3%          1         10        10         16m
```

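Explanation:

- Initially, the HPA's target CPU utilization for nginx-deploy is 3%. When nginx-deploy's CPU utilization reached 28%, the HPA automatically increased the replica count from 1 to 4.
- As the load changed, the HPA kept adjusting the replica count. It rose in steps about 15 seconds apart (1 → 4 → 8 → 10) until it reached the configured maximum of 10 replicas.
- With this mechanism, Kubernetes adjusts the number of Pods to the actual load. The service can handle increased traffic, and resources are not wasted when traffic is low.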
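The proportional rule, ceil(1 × 28 / 3) = 10, would suggest jumping straight to 10 replicas. Yet the trace went 1 → 4 → 8 → 10, and it stayed at 10 even when readings dropped to 2%. A sketch that replays the watched readings reproduces this. It assumes two controller behaviors that, to our understanding, apply when an HPA has no `behavior` field (as with `autoscaling/v1`):

- Each scale-up is capped at max(2 × current, 4) replicas.
- The controller uses the highest recommendation from the last 5 minutes (the default downscale stabilization window), which blocks scale-down.

`kubectl get hpa -w` prints only when the status changes, so these readings are only a subset of the controller's evaluations. The sketch also ignores how the real controller treats Pods that are still starting. The function names are hypothetical.

```python
import math
from typing import List, Tuple

TOLERANCE = 0.1   # documented default tolerance
WINDOW_S = 300    # default downscale stabilization window (5 minutes)


def raw_recommendation(current: int, usage_pct: float, target_pct: float) -> int:
    """Proportional rule; keep the current count when within the tolerance."""
    ratio = usage_pct / target_pct
    if abs(ratio - 1.0) <= TOLERANCE:
        return current
    return math.ceil(current * ratio)


def simulate(readings: List[Tuple[int, float]], target_pct: float,
             min_replicas: int, max_replicas: int, start: int = 1) -> List[int]:
    """Replay (seconds, CPU%) readings and return the replica count after each."""
    current, history, path = start, [], []
    for t, usage in readings:
        history.append((t, raw_recommendation(current, usage, target_pct)))
        # Stabilization: use the highest recommendation seen in the last WINDOW_S.
        stabilized = max(r for ts, r in history if ts >= t - WINDOW_S)
        # Assumed legacy scale-up cap when `behavior` is unset: max(2x, 4).
        upper = min(max_replicas, max(2 * current, 4))
        current = max(min_replicas, min(stabilized, upper))
        path.append(current)
    return path


# (elapsed seconds, CPU%) pairs read off the AGE and TARGETS columns above.
readings = [(272, 28), (287, 29), (302, 9), (317, 5), (332, 3),
            (407, 2), (422, 3), (437, 2), (453, 3)]
print(simulate(readings, target_pct=3, min_replicas=1, max_replicas=10))
# [4, 8, 10, 10, 10, 10, 10, 10, 10]
```

Each value is the replica count after the corresponding reading. It matches the REPLICAS column on the next line of the watch output. In `autoscaling/v2`, the `behavior` field lets you configure these scale-up and scale-down policies explicitly.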