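NeurIPS 2024 is being held in Vancouver, Canada, from December 10 to December 15, 2024. The full list of accepted papers is now out, with an acceptance rate of 25.8%. Time series remains one of the important research areas at the conference.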

I have compiled the NeurIPS 2024 papers on time series data: 61 in total, with 55 in the main conference and 6 in the D&B Track.

This post lists paper titles covering forecasting, imputation, classification, generation, causal analysis, anomaly detection, LLMs, foundation models, and diffusion models.

Attractor Memory for Long-Term Time Series Forecasting: A Chaos Perspective

Paper analysis:

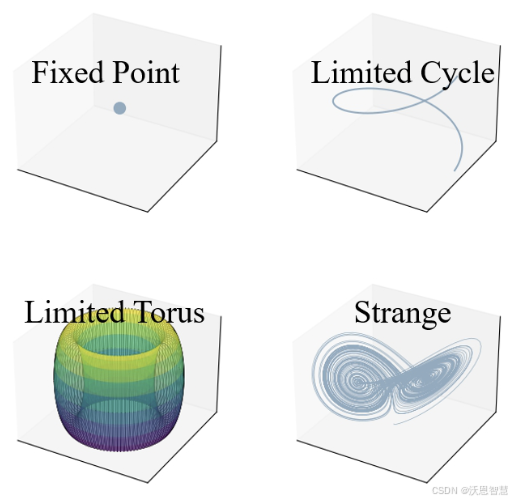

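This paper proposes a new model, Attraos, which draws on chaos theory and treats real-world time series as observations from an unknown high-dimensional chaotic dynamical system. Using a non-parametric phase space reconstruction embedding and a multi-scale dynamic memory unit, Attraos captures the structure of the historical dynamics and makes predictions with a frequency-enhanced local evolution strategy.

Experiments show that Attraos performs well on mainstream LTSF (long-term time series forecasting) datasets and on chaotic datasets, with only one-twelfth of the parameters of PatchTST.

Key contributions:

1. Introduces chaos theory into long-term time series forecasting, using the concept of attractor invariance to model continuous dynamical systems.

2. Proposes a non-parametric phase space reconstruction method to recover the dynamical structure in time series data.

3. Designs a Multi-resolution Dynamic Memory Unit (MDMU) to capture the structural dynamics in historically sampled data.

4. Develops a frequency-enhanced local evolution strategy that derives future states in the frequency domain to improve forecasting performance.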
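To make the idea of phase space reconstruction more concrete, here is a minimal sketch of the classic time-delay embedding, which turns a scalar series into points in a higher-dimensional space. It illustrates the general technique only; the function name `delay_embed` and the parameters `dim` and `tau` are assumptions for this example and are not taken from the Attraos code.

```python
import numpy as np

def delay_embed(x, dim, tau):
    """Time-delay embedding of a 1-D series (illustrative, not the Attraos code).

    Row t of the result is [x[t], x[t + tau], ..., x[t + (dim - 1) * tau]].
    """
    x = np.asarray(x, dtype=float)
    n_rows = len(x) - (dim - 1) * tau
    if n_rows <= 0:
        raise ValueError("series too short for this dim and tau")
    # Stack `dim` shifted slices of the series as columns.
    return np.stack([x[i * tau : i * tau + n_rows] for i in range(dim)], axis=1)

# Toy usage: a sine wave embedded in three dimensions.
series = np.sin(0.1 * np.arange(200))
points = delay_embed(series, dim=3, tau=5)
print(points.shape)  # (190, 3)
```

Each row of `points` is one reconstructed state. How the embedding dimension and delay are chosen in Attraos is not described in this post, so the values above are arbitrary.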

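Methods:

1. Uses a non-parametric phase space reconstruction method to recover the dynamical structure in time series data.

2. Designs the Multi-resolution Dynamic Memory Unit (MDMU) to capture multi-scale dynamical structure in historically sampled data.

3. Uses a frequency-enhanced local evolution strategy to derive the future states of the dynamical system's components in the frequency domain.

4. Uses the Blelloch scan algorithm to compute the MDMU efficiently.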
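The Blelloch scan mentioned in the last step can be sketched as follows. This post does not give the MDMU update rule, so the example assumes a generic linear recurrence h_t = a_t * h_{t-1} + b_t as a stand-in; the names `combine`, `blelloch_exclusive_scan`, and `linear_recurrence` are hypothetical. The point is that the recurrence can be written as an associative operator, which is what allows an up-sweep / down-sweep scan to replace a strictly sequential loop.

```python
import numpy as np

IDENTITY = (1.0, 0.0)  # the affine map h -> 1 * h + 0

def combine(first, second):
    """Compose two affine maps h -> a*h + b, applying `first` and then `second`."""
    a1, b1 = first
    a2, b2 = second
    return (a2 * a1, a2 * b1 + b2)

def blelloch_exclusive_scan(items):
    """Exclusive prefix scan under `combine` using up-sweep and down-sweep.

    Within each level the loop over `i` touches disjoint slots, so parallel
    hardware could run a level concurrently; here it runs sequentially.
    """
    n = len(items)
    size = 1
    while size < n:
        size *= 2
    tree = list(items) + [IDENTITY] * (size - n)  # pad to a power of two

    # Up-sweep: build partial reductions in place.
    stride = 2
    while stride <= size:
        half = stride // 2
        for i in range(0, size, stride):
            tree[i + stride - 1] = combine(tree[i + half - 1], tree[i + stride - 1])
        stride *= 2

    # Down-sweep: push prefixes back down the tree.
    tree[size - 1] = IDENTITY
    stride = size
    while stride >= 2:
        half = stride // 2
        for i in range(0, size, stride):
            left = tree[i + half - 1]
            prefix = tree[i + stride - 1]
            tree[i + half - 1] = prefix
            tree[i + stride - 1] = combine(prefix, left)
        stride //= 2
    return tree[:n]

def linear_recurrence(a, b):
    """Compute h_t = a_t * h_{t-1} + b_t with h_{-1} = 0 via the scan."""
    items = list(zip(a, b))
    exclusive = blelloch_exclusive_scan(items)
    # Inclusive prefix = exclusive prefix followed by the item itself.
    return np.array([combine(p, x)[1] for p, x in zip(exclusive, items)])
```

A sequential loop gives the same values in O(n) steps. The scan does O(n) total work as well, but its depth is O(log n), which is why it suits parallel hardware.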

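Conclusions:

1. Attraos outperforms a range of LTSF methods on mainstream LTSF datasets and on chaotic datasets, with only one-twelfth of the parameters of PatchTST.

2. Theoretical analysis and experimental evidence support the effectiveness and superiority of Attraos.

3. Attraos offers a new perspective: modeling and forecasting time series data with chaos theory.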


Frequency Adaptive Normalization For Non-stationary Time Series Forecasting

Paper analysis:

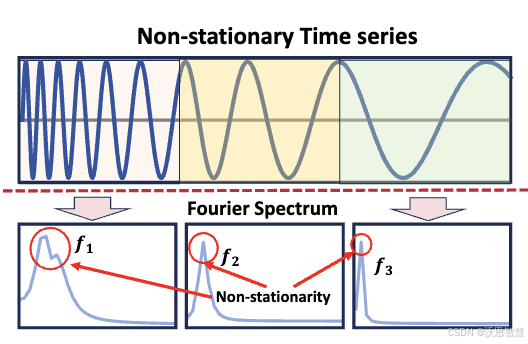

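This paper proposes a new instance normalization method, Frequency Adaptive Normalization (FAN). It uses the Fourier transform to identify the dominant frequency components of each input instance and explicitly models how these components differ between input and output, which lets it handle both the dynamic trends and the seasonal patterns of non-stationary time series. FAN can be applied to any forecasting model and significantly improves forecasting performance.

Key contributions:

1. Proposes Frequency Adaptive Normalization (FAN), which handles dynamic trends and seasonal patterns at the same time.

2. Uses the Fourier transform to identify the dominant frequency components of each input instance, capturing non-stationary factors more effectively.

3. Explicitly models the difference in frequency components between input and output, predicting it with a simple MLP.

4. FAN can be applied to any forecasting model, so it is broadly applicable.

Methods:

1. Identifies the dominant frequency components of each input instance with the Fourier transform.

2. Explicitly models the difference in frequency components between input and output, using a simple MLP for the prediction.

3. Applies FAN to four widely used forecasting models and evaluates the improvement in forecasting performance on eight benchmark datasets.

4. FAN is model-agnostic and can be applied to any forecasting model.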
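The first step, picking out the dominant frequency components of one input window, can be sketched with NumPy's FFT. This is a minimal illustration under assumptions, not the authors' implementation: the function name `split_top_k_frequencies` and the parameter `k` are hypothetical, and the MLP that models the input-to-output shift is not shown.

```python
import numpy as np

def split_top_k_frequencies(x, k):
    """Split a 1-D window into its k dominant frequency components and a residual.

    Illustrative only: `k` is an assumed hyperparameter, not a value from the paper.
    """
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x)
    # Indices of the k frequency bins with the largest amplitude.
    top = np.argsort(np.abs(spectrum))[-k:]
    kept = np.zeros_like(spectrum)
    kept[top] = spectrum[top]
    # Back to the time domain: the part explained by the dominant bins.
    dominant = np.fft.irfft(kept, n=len(x))
    residual = x - dominant
    return dominant, residual

# Toy usage: a trend plus a daily-like cycle over a 96-step window.
t = np.arange(96)
window = 0.05 * t + np.sin(2 * np.pi * t / 24)
dominant, residual = split_top_k_frequencies(window, k=3)
```

By construction `dominant + residual` reproduces the window, so removing the dominant part before a forecasting model and adding a predicted version back afterwards is lossless on the input side.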

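Conclusions:

1. FAN significantly improves forecasting performance, with average MSE improvements ranging from 7.76% to 37.90%.

2. FAN outperforms existing state-of-the-art normalization techniques.

3. FAN handles seasonal patterns as well as dynamic trends, which markedly improves forecasting on non-stationary time series.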

NeurIPS 2024 time series paper roundup:

Forecasting:

- Retrieval-Augmented Diffusion Models for Time Series Forecasting
- Attractor Memory for Long-Term Time Series Forecasting: A Chaos Perspective
- FilterNet: Harnessing Frequency Filters for Time Series Forecasting
- Frequency Adaptive Normalization For Non-stationary Time Series Forecasting
- Rethinking the Power of Timestamps for Robust Time Series Forecasting: A Global-Local Fusion Perspective
- AutoTimes: Autoregressive Time Series Forecasters via Large Language Models
- DDN: Dual-domain Dynamic Normalization for Non-stationary Time Series Forecasting
- BackTime: Backdoor Attacks on Multivariate Time Series Forecasting
- Are Language Models Actually Useful for Time Series Forecasting?
- Rethinking Fourier Transform for Long-term Time Series Forecasting: A Basis Functions Perspective
- Introducing Spectral Attention for Long-Range Dependency in Time Series Forecasting
- Parsimony or Capability? Decomposition Delivers Both in Long-term Time Series Forecasting
- Structured Matrix Basis for Multivariate Time Series Forecasting with Interpretable Dynamics
- DeformableTST: Transformer for Time Series Forecasting without Over-reliance on Patching
- Time-FFM: Towards LM-Empowered Federated Foundation Model for Time Series Forecasting
- PGN: The RNN's New Successor is Effective for Long-Range Time Series Forecasting
- SOFTS: Efficient Multivariate Time Series Forecasting with Series-Core Fusion
- Multivariate Probabilistic Time Series Forecasting with Correlated Errors
- CycleNet: Enhancing Time Series Forecasting through Modeling Periodic Patterns
- Analysing Multi-Task Regression via Random Matrix Theory with Application to Time Series Forecasting
- CondTSF: One-line Plugin of Dataset Condensation for Time Series Forecasting
- Scaling Law for Time Series Forecasting
- From News to Forecast: Integrating Event Analysis in LLM-Based Time Series Forecasting with Reflection
- From Similarity to Superiority: Channel Clustering for Time Series Forecasting
- TimeXer: Empowering Transformers for Time Series Forecasting with Exogenous Variables
- ElasTST: Towards Robust Varied-Horizon Forecasting with Elastic Time-Series Transformer
- Are Self-Attentions Effective for Time Series Forecasting?
- Tiny Time Mixers (TTMs): Fast Pre-trained Models for Enhanced Zero/Few-Shot Forecasting of Multivariate Time Series

Anomaly detection:

- SARAD: Spatial Association-Aware Anomaly Detection and Diagnosis for Multivariate Time Series
- The Elephant in the Room: Towards A Reliable Time-Series Anomaly Detection Benchmark (D&B Track)

Classification:

- Con4m: Context-aware Consistency Learning Framework for Segmented Time Series Classification
- Medformer: A Multi-Granularity Patching Transformer for Medical Time-Series Classification
- Abstracted Shapes as Tokens - A Generalizable and Interpretable Model for Time-series Classification

Representation learning:

- Segment, Shuffle, and Stitch: A Simple Mechanism for Improving Time-Series Representations
- Exploiting Representation Curvature for Boundary Detection in Time Series
- Learning diverse causally emergent representations from time series data

Generation:

- Utilizing Image Transforms and Diffusion Models for Generative Modeling of Short and Long Time Series
- SDformer: Similarity-driven Discrete Transformer For Time Series Generation
- FIDE: Frequency-Inflated Conditional Diffusion Model for Extreme-Aware Time Series Generation
- TSGM: A Flexible Framework for Generative Modeling of Synthetic Time Series (D&B Track)

Time series analysis:

- Peri-midFormer: Periodic Pyramid Transformer for Time Series Analysis
- Shape analysis for time series
- Large Pre-trained time series models for cross-domain Time series analysis tasks

Large language models:

- AutoTimes: Autoregressive Time Series Forecasters via Large Language Models
- Are Language Models Actually Useful for Time Series Forecasting?
- From News to Forecast: Integrating Event Analysis in LLM-Based Time Series Forecasting with Reflection
- Tri-Level Navigator: LLM-Empowered Tri-Level Learning for Time Series OOD Generalization

Foundation models:

- Time-FFM: Towards LM-Empowered Federated Foundation Model for Time Series Forecasting
- Tiny Time Mixers (TTMs): Fast Pre-trained Models for Enhanced Zero/Few-Shot Forecasting of Multivariate Time Series
- UniTS: A Unified Multi-Task Time Series Model
- UniMTS: Unified Pre-training for Motion Time Series

Diffusion models:

- Retrieval-Augmented Diffusion Models for Time Series Forecasting
- Utilizing Image Transforms and Diffusion Models for Generative Modeling of Short and Long Time Series
- FIDE: Frequency-Inflated Conditional Diffusion Model for Extreme-Aware Time Series Generation
- ANT: Adaptive Noise Schedule for Time Series Diffusion Models