
Preparation

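A fully distributed Hadoop environment needs at least three nodes (three virtual machines); this tutorial uses four VM nodes.

- Install the JDK on every host

- Disable the firewall on every host

- Configure the hostname mappings on every host (`vi /etc/hosts`)

- Set up passwordless SSH login between every pair of nodes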

Recommended VM layout for the fully distributed cluster

| IP | Hostname | Role |
|---|---|---|
| 192.168.137.110 | node1 | master |
| 192.168.137.111 | node2 | slave1 |
| 192.168.137.112 | node3 | slave2 |
| 192.168.137.113 | node4 | slave3 |
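The hostname mappings from the preparation list can be scripted. This is a minimal sketch. It assumes the four IP/hostname pairs from the table above and appends only the lines that are missing, so re-running it does not duplicate entries. The names `CLUSTER_HOSTS` and `add_missing_hosts` are illustrative and not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch: append the cluster's hostname mappings to a hosts file.
# The IP/hostname pairs are the ones from the table; adjust for your network.
CLUSTER_HOSTS='192.168.137.110 node1
192.168.137.111 node2
192.168.137.112 node3
192.168.137.113 node4'

# add_missing_hosts [FILE]: append each pair that is not already present as an
# exact line in FILE (default /etc/hosts, which requires root to modify).
add_missing_hosts() {
  hosts_file=${1:-/etc/hosts}
  printf '%s\n' "$CLUSTER_HOSTS" | while IFS= read -r entry; do
    if ! grep -qxF "$entry" "$hosts_file" 2>/dev/null; then
      printf '%s\n' "$entry" >> "$hosts_file"
    fi
  done
}
```

Run `add_missing_hosts` as root on each of the four nodes. The check matches whole lines exactly, so an existing entry written with different spacing is treated as missing.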

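Software versions

| Tool | Description |
|---|---|
| VMware-workstation-full-15.5.1-15018445.exe | VMware Workstation installer |
| MobaXterm_Portable_v20.3.zip | Unzip and run; used to connect to the CentOS systems remotely, supports login and file upload |
| CentOS-7-x86_64-DVD-1511.iso | CentOS 7 ISO image; do not unzip it, VMware uses it during installation |
| jdk-8u171-linux-x64.tar.gz | JDK package; upload it to the CentOS systems |
| hadoop-2.7.3.tar.gz | Hadoop package; upload it to the virtual machines |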

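(Optional) Create a user and group

On the master VM, create a `hadoop` user and a `hadoop` group. A dedicated account makes the cluster easier and safer to manage. If you are only using the cluster for learning, you can simply use the root user.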

groupadd hadoop

useradd hadoop -d /home/hadoop -g hadoop
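If the optional account setup might be run more than once, a guarded version avoids the errors that `groupadd` and `useradd` raise when the group or user already exists. This is a minimal sketch that assumes root privileges and the `hadoop` names used above. `ensure_hadoop_account` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: create the hadoop group and user only if they do not exist yet.
# Must run as root; the names and options match the commands above.
ensure_hadoop_account() {
  # getent exits non-zero when the group is not found.
  if ! getent group hadoop >/dev/null; then
    groupadd hadoop
  fi
  # id -u fails when the user does not exist.
  if ! id -u hadoop >/dev/null 2>&1; then
    useradd hadoop -d /home/hadoop -g hadoop
  fi
}
```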

Disable the firewall

# Check the firewall status first

systemctl status firewalld.service

# If it is still running, stop it

systemctl stop firewalld.service

# Disable it so it does not start again at boot

systemctl disable firewalld.service

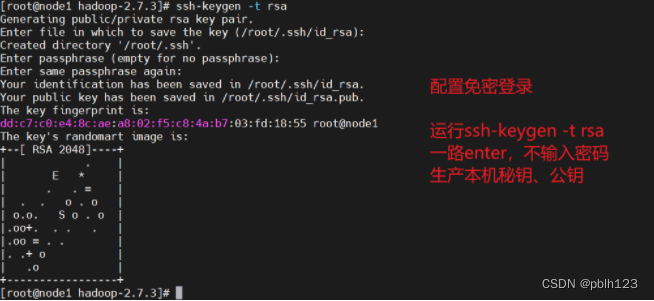

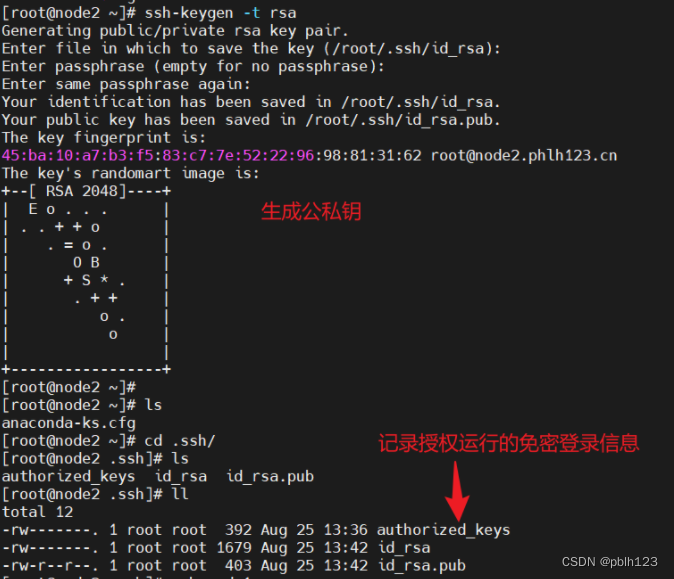
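The check, stop and disable sequence above can be wrapped in a function so it is safe to run on every node. This is a sketch that assumes systemd (as on CentOS 7) and root privileges. `disable_firewalld` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: stop firewalld if it is running, then keep it from starting at boot.
# Assumes systemd (CentOS 7) and root privileges.
disable_firewalld() {
  # "is-active --quiet" prints nothing and returns 0 only if the unit is running.
  if systemctl is-active --quiet firewalld.service; then
    systemctl stop firewalld.service
  fi
  systemctl disable firewalld.service
  # Print the final state for the log; "inactive" is expected at this point.
  systemctl is-active firewalld.service || true
}
```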

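Passwordless login between nodes

Generate a public/private key pair on node1:

# Create the login key pair (press Enter to accept the defaults)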

ssh-keygen -t rsa

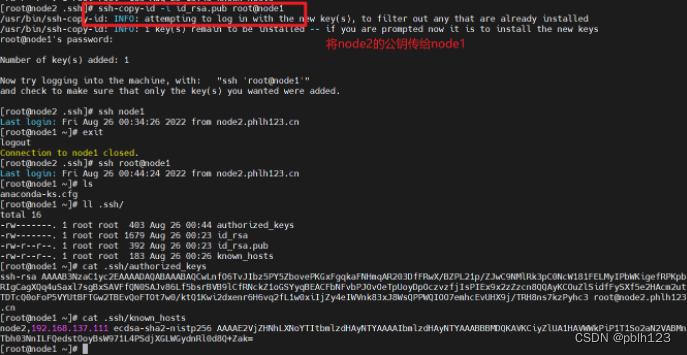

# Send the public key to the node that should accept passwordless logins

ssh-copy-id -i ~/.ssh/id_rsa.pub root@node2

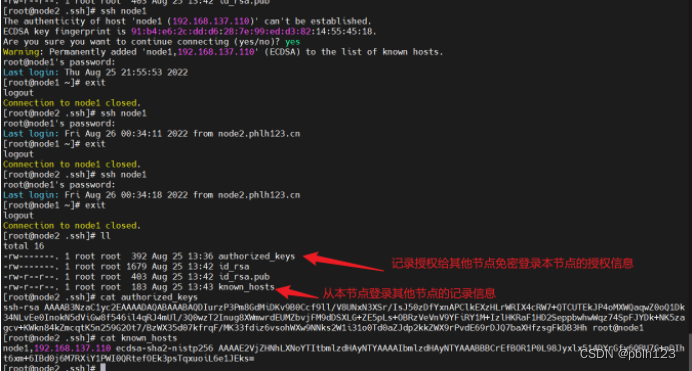

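From node1 to node2: configure passwordless login as above, then test it (`ssh node2` should log in without asking for a password).

From node2 to node1: generate a key pair on node2, then send node2's public key to node1.

Repeat the same steps to enable passwordless login from node1 to node3 and from node3 to node1, and likewise for every other pair of nodes.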
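Every pair of nodes needs passwordless login, so the per-node steps can be looped. This sketch assumes:

- root logins,
- the node names node1 to node4,
- an RSA key at `~/.ssh/id_rsa`.

Running it once on each node covers every pair. Setting `DRY_RUN=1` prints the commands instead of running them. The `DRY_RUN` switch and the function name are illustrative conventions, not OpenSSH features.

```shell
#!/usr/bin/env bash
# Sketch: create an RSA key (if missing) and copy it to every cluster node.
# Run it on each node in turn; ssh-copy-id asks for each target's password once.
NODES="node1 node2 node3 node4"

setup_passwordless_ssh() {
  run=""
  if [ -n "${DRY_RUN:-}" ]; then
    run="echo"   # dry run: print the commands instead of executing them
  fi
  key="$HOME/.ssh/id_rsa"
  if [ ! -f "$key" ]; then
    # ssh-keygen -f does not create the directory, so make it with mode 700.
    $run install -d -m 700 "$HOME/.ssh"
    # -N "" sets an empty passphrase so later logins need no interaction.
    $run ssh-keygen -t rsa -N "" -f "$key"
  fi
  for host in $NODES; do
    $run ssh-copy-id -i "$key.pub" "root@$host"
  done
}
```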

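Keep the time consistent on every node

Why: if the servers are on a public network (with Internet access), cluster time synchronization can be skipped, because the servers periodically calibrate against Internet time.

If the servers are on an internal network, cluster time synchronization is required. Otherwise the clocks drift apart over time and the nodes run cluster tasks out of sync.

Solution: use the NTP service. One node acts as the time server, and every other machine periodically synchronizes with it (for example, every ten minutes). This keeps the time on all nodes consistent.

Among the VMs, the master node already carries the most roles, so node3 is chosen as the time server to keep the master's services stable.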

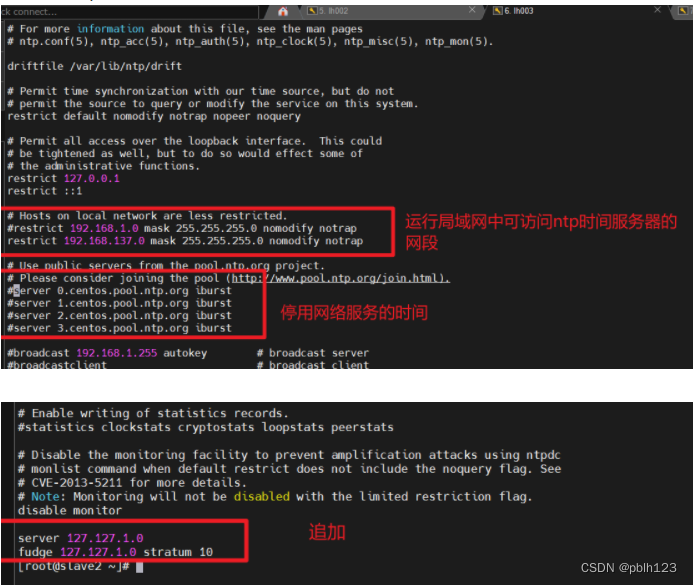

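Configure NTP on the time server

Configure it as the root user.

# Switch to the root account

su

# Check whether ntp is installed (install it if it is missing)

rpm -qa | grep ntp

# Install ntp

yum install -y ntp

# Configure the NTP time service on node3

# Edit /etc/ntp.conf

vi /etc/ntp.conf

# step1: allow every host in the 192.168.137.0 - 192.168.137.255 range to query and synchronize time from this host

restrict 192.168.137.0 mask 255.255.255.0 nomodify notrap

# step2: the cluster is on a LAN, so do not use Internet time servers (comment out the pool servers)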

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

# step3: if this node loses its network connection, it still serves its local clock as the time source for the other nodes in the cluster

# CVE-2013-5211 for more details.

# Note: Monitoring will not be disabled with the limited restriction flag.

disable monitor

server 127.127.1.0

fudge 127.127.1.0 stratum 10

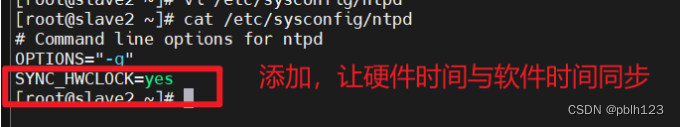
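The three ntp.conf edits above can also be applied non-interactively. This sketch assumes:

- GNU `sed` (as on CentOS 7),
- the stock `N.centos.pool.ntp.org` server lines,
- the 192.168.137.0/24 subnet.

It keeps a one-time backup of the original file and only appends lines that are not already there. `write_ntp_server_conf` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: apply the time-server settings (steps 1-3 above) to an ntp.conf file.
# Args: [FILE] [NETWORK] [MASK]; defaults match this tutorial's subnet.
write_ntp_server_conf() {
  conf=${1:-/etc/ntp.conf}
  net=${2:-192.168.137.0}
  mask=${3:-255.255.255.0}
  touch "$conf"
  # Back up the original once; later runs do not overwrite the backup.
  [ -f "$conf.bak" ] || cp "$conf" "$conf.bak"
  # step2: comment out the default pool servers (GNU sed in-place edit).
  sed -i 's/^server \([0-9]\.centos\.pool\.ntp\.org\)/#server \1/' "$conf"
  # step1: let hosts on the LAN query and sync from this server.
  if ! grep -q "^restrict $net mask $mask" "$conf"; then
    printf 'restrict %s mask %s nomodify notrap\n' "$net" "$mask" >> "$conf"
  fi
  # step3: fall back to the local clock when the network is unavailable.
  if ! grep -q '^server 127\.127\.1\.0' "$conf"; then
    printf 'server 127.127.1.0\nfudge 127.127.1.0 stratum 10\n' >> "$conf"
  fi
}
```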

# 编辑 /etc/sysconfig/ntpd 文件:

# 在最后一行添加 SYNC_HWCLOCK=yes ,让硬件时间与系统时间一起同步

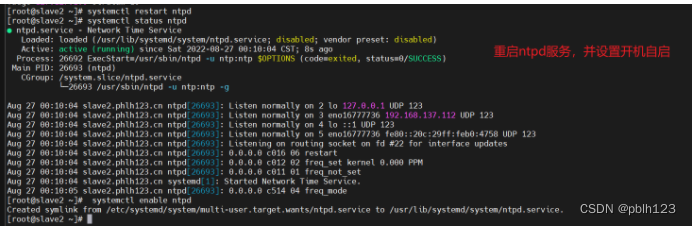

# 重启 ntpd 服务,并设置开机自启:

systemctl restart ntpd

systemctl status ntpd

systemctl enable ntpd

Summary of the time-server steps: configure /etc/ntp.conf, configure /etc/sysconfig/ntpd, then restart ntpd and enable it at boot.

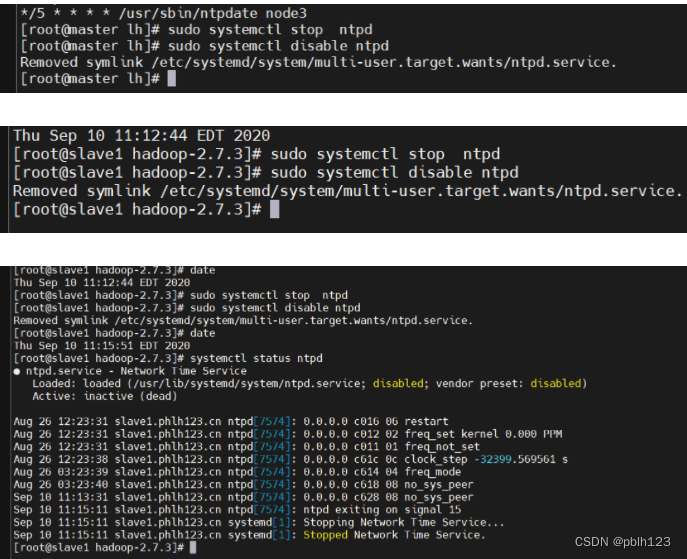
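Appending `SYNC_HWCLOCK=yes` by hand can leave duplicate or conflicting lines if the step is repeated. This minimal sketch sets the value exactly once and assumes GNU `sed`. `ensure_sync_hwclock` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: make sure the ntpd sysconfig file contains SYNC_HWCLOCK=yes exactly once.
ensure_sync_hwclock() {
  f=${1:-/etc/sysconfig/ntpd}
  if grep -q '^SYNC_HWCLOCK=' "$f" 2>/dev/null; then
    # An existing setting (e.g. SYNC_HWCLOCK=no) is rewritten in place.
    sed -i 's/^SYNC_HWCLOCK=.*/SYNC_HWCLOCK=yes/' "$f"
  else
    printf 'SYNC_HWCLOCK=yes\n' >> "$f"
  fi
}
```

After editing, restart and enable ntpd as above. Running `ntpq -p` on node3 should then list the local clock source, which is typically shown as `LOCAL(0)`.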

On every node other than the NTP time server, stop the ntpd service and disable it at boot:

sudo systemctl stop ntpd

sudo systemctl disable ntpd

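Then, on every node other than the NTP time server, add a scheduled task with `crontab -e` that synchronizes with node3.

To verify on node2, change the system time with `date -s` and check that the next scheduled run brings it back in line with node3: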

[root@slave1 hadoop-2.7.3]# crontab -e

no crontab for root - using an empty one

crontab: installing new crontab

[root@slave1 hadoop-2.7.3]# crontab -l

# NTP time synchronization

*/5 * * * * /usr/sbin/ntpdate node3

[root@slave1 hadoop-2.7.3]# date -s

date: option requires an argument -- 's'

Try 'date --help' for more information.

[root@slave1 hadoop-2.7.3]# date -s '2020-9-10 11:12:11'

Thu Sep 10 11:12:11 EDT 2020

[root@slave1 hadoop-2.7.3]# date

Thu Sep 10 11:13:00 EDT 2020

[root@slave1 hadoop-2.7.3]#

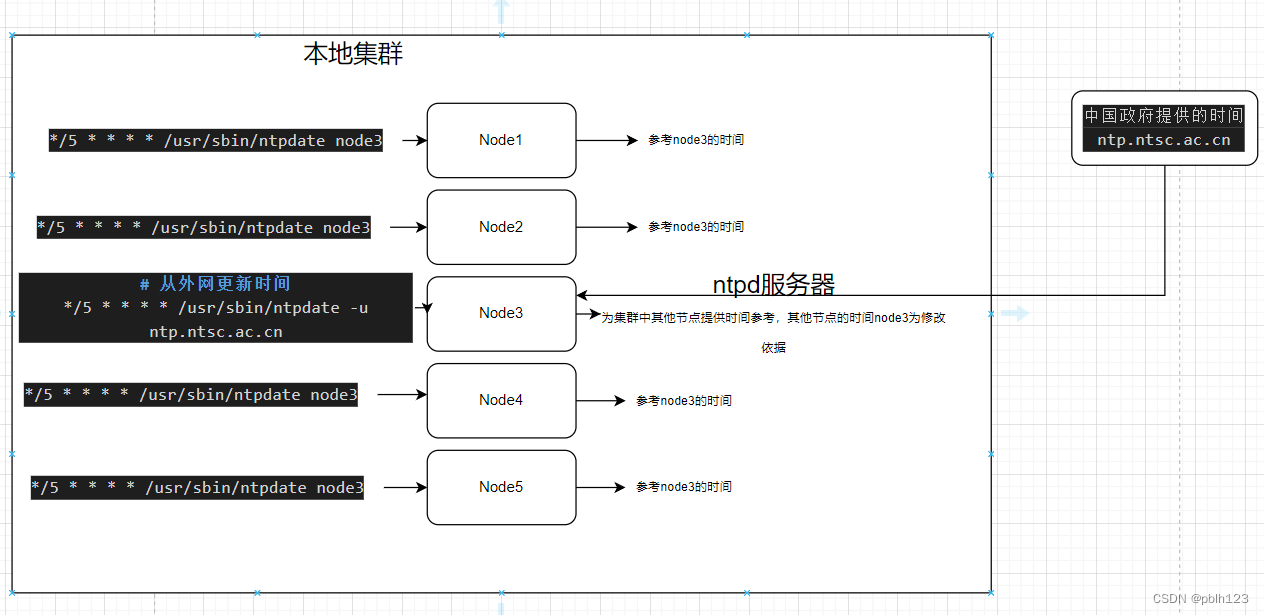
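`crontab -e` opens an editor, which is awkward to repeat on several nodes. This sketch is a non-interactive alternative and assumes cronie's `crontab -l` / `crontab -` behaviour on CentOS 7. It reads the current crontab, appends the NTP job only if that exact line is missing, and installs the result. The helper names and the `node3` default are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: add a cron line idempotently, without opening an editor.

# merge_cron_line LINE: read a crontab on stdin and print it with LINE appended,
# unless LINE is already present as an exact line.
merge_cron_line() {
  line=$1
  existing=$(cat)
  if [ -n "$existing" ]; then
    printf '%s\n' "$existing"
  fi
  if ! printf '%s\n' "$existing" | grep -qxF -- "$line"; then
    printf '%s\n' "$line"
  fi
}

# install_ntp_cron [SERVER]: sync with SERVER (default node3) every five minutes.
install_ntp_cron() {
  job="*/5 * * * * /usr/sbin/ntpdate ${1:-node3}"
  # "crontab -l" fails when no crontab exists yet; treat that as empty.
  { crontab -l 2>/dev/null || true; } | merge_cron_line "$job" | crontab -
}
```

Run `install_ntp_cron` as root on node1, node2 and node4. `merge_cron_line` works the same way for any other job line, such as the one node3 uses to sync from the Internet.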

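Update (2022-10-05): node3's clock could drift away from Internet time, which left the whole cluster's time lagging. The setup was changed as follows.

How it works: a crontab entry on node3 periodically updates node3's clock from an Internet time server in China, so the cluster time stays consistent with Internet time.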

# Configure via crontab -e

crontab -e

# View it with crontab -l

[root@slave2 ~]# crontab -l

# Update the time from the Internet

# NTP time synchronization

*/5 * * * * /usr/sbin/ntpdate -u ntp.ntsc.ac.cn

References:

- https://blog.csdn.net/m0_46413065/article/details/116378004

- https://blog.csdn.net/sinat_40471574/article/details/105422507

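Configure Hadoop on the master node

- Upload the Hadoop package, extract it into the installation directory, and configure the environment variables

- Create Hadoop's tmp directory

- Edit the Hadoop configuration files

- Distribute the configured Hadoop from the master node to the other nodes

- Format HDFS and start Hadoop on the master node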

Upload the Hadoop package, extract it into the installation directory, and configure the environment variables

tar -zxvf /home/lh/softs/hadoop-2.7.3.tar.gz -C /opt/soft_installed

Create Hadoop's tmp directory (the path set as hadoop.tmp.dir in core-site.xml)

mkdir -p /opt/soft_installed/hadoop-2.7.3/tmp

Edit the Hadoop configuration files

- hadoop-env.sh

- hdfs-site.xml

- core-site.xml

- mapred-site.xml

- yarn-site.xml

- slaves

Edit hadoop-env.sh

vi /opt/soft_installed/hadoop-2.7.3/etc/hadoop/hadoop-env.sh

# export JAVA_HOME=${JAVA_HOME}

export JAVA_HOME=/opt/soft_installed/jdk1.8.0_171

#export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-"/etc/hadoop"}

export HADOOP_CONF_DIR=/opt/soft_installed/hadoop-2.7.3/etc/hadoop
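Instead of editing hadoop-env.sh in vi, the two `export` lines can be set with `sed`. This sketch assumes GNU `sed` and values that contain no `|` or `&` characters, because both are special in the `sed` replacement. `set_env_export` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: replace an active "export NAME=..." line in an env file, or append one.
# Commented-out lines such as "#export NAME=..." are left untouched.
set_env_export() {
  file=$1 name=$2 value=$3
  if grep -q "^export ${name}=" "$file"; then
    # "|" is the sed delimiter because the values are paths containing "/".
    sed -i "s|^export ${name}=.*|export ${name}=${value}|" "$file"
  else
    printf 'export %s=%s\n' "$name" "$value" >> "$file"
  fi
}
```

For example: `set_env_export /opt/soft_installed/hadoop-2.7.3/etc/hadoop/hadoop-env.sh JAVA_HOME /opt/soft_installed/jdk1.8.0_171`.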

Edit hdfs-site.xml

vi /opt/soft_installed/hadoop-2.7.3/etc/hadoop/hdfs-site.xml

[root@master hadoop-2.7.3]# cat /opt/soft_installed/hadoop-2.7.3/etc/hadoop/hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

<description>Replication factor for HDFS blocks (default 3); should not exceed the number of DataNodes</description>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/opt/soft_installed/hadoop-2.7.3/hdfs/name</value>

<description>Where the NameNode stores the HDFS namespace metadata</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/opt/soft_installed/hadoop-2.7.3/hdfs/data</value>

<description>Physical storage location of data blocks on each DataNode</description>

</property>

<property>

<name>dfs.http.address</name>

<value>node1:50070</value>

<description>NameNode web UI address</description>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node2:50090</value>

<description>SecondaryNameNode (2NN) web UI address</description>

</property>

</configuration>
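All the *-site.xml files share the same `<configuration>`/`<property>` layout, so they can be generated from name=value pairs. This minimal sketch performs no XML escaping, so it assumes values without `<`, `>` or `&`. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: generate a Hadoop *-site.xml file from NAME=VALUE arguments.

# hadoop_property NAME VALUE: print one <property> element.
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# write_site_xml FILE NAME=VALUE...: write a complete configuration file.
write_site_xml() {
  out=$1
  shift
  {
    printf '<?xml version="1.0" encoding="UTF-8"?>\n'
    printf '<configuration>\n'
    for kv in "$@"; do
      # Split on the first "=" only, so values may themselves contain "=".
      hadoop_property "${kv%%=*}" "${kv#*=}"
    done
    printf '</configuration>\n'
  } > "$out"
}
```

For example: `write_site_xml hdfs-site.xml dfs.replication=2 dfs.namenode.secondary.http-address=node2:50090`. If libxml2 is installed, `xmllint --noout hdfs-site.xml` then checks that the result is well-formed.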

Configure core-site.xml

vi /opt/soft_installed/hadoop-2.7.3/etc/hadoop/core-site.xml

[root@master hadoop-2.7.3]# cat /opt/soft_installed/hadoop-2.7.3/etc/hadoop/core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://node1:9000</value>

<description>Default file system name (deprecated alias of fs.defaultFS)</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://node1:9000</value>

<description>URL of the default file system (HDFS)</description>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/soft_installed/hadoop-2.7.3/tmp</value>

<description>Local Hadoop temporary directory on each node</description>

</property>

<property>

<name>fs.checkpoint.period</name>

<value>3600</value>

<description>Interval between two checkpoints, in seconds</description>

</property>

<property>

<name>fs.checkpoint.size</name>

<value>67108864</value>

<description>Checkpoint size threshold in bytes (64 MB)</description>

</property>

</configuration>
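To confirm which value a configuration file actually contains, a small `awk` reader is enough for files laid out like the ones above. One use is checking that `fs.default.name` and `fs.defaultFS` point to the same URI. This is a sketch that assumes each `<name>` and `<value>` sits on its own line, and `get_site_property` is an illustrative name. Once Hadoop is on the PATH, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop actually resolves from its loaded configuration.

```shell
#!/usr/bin/env bash
# Sketch: print the <value> of a named property from a Hadoop *-site.xml file.
# Assumes one <name> or <value> element per line, as in the files above.
get_site_property() {
  awk -v want="$2" '
    /<name>/                  { gsub(/.*<name>|<\/name>.*/, ""); name = $0 }
    /<value>/ && name == want { gsub(/.*<value>|<\/value>.*/, ""); print; exit }
  ' "$1"
}
```

For example: `get_site_property /opt/soft_installed/hadoop-2.7.3/etc/hadoop/core-site.xml hadoop.tmp.dir`.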

Configure mapred-site.xml

[root@master hadoop-2.7.3]# cat /opt/soft_installed/hadoop-2.7.3/etc/hadoop/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<description>Run MapReduce on the YARN framework</description>

</property>

<!-- JobHistory server address -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>node1:10020</value>

</property>

<!-- JobHistory server web UI address -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>node1:19888</value>

</property>

</configuration>
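In the Hadoop 2.7.x tarball, `etc/hadoop` ships `mapred-site.xml.template` rather than `mapred-site.xml`. The file shown above therefore usually has to be created first. This sketch copies the template only when the real file is absent. `ensure_mapred_site` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: create mapred-site.xml from the bundled template if it does not exist.
# An existing mapred-site.xml is never overwritten.
ensure_mapred_site() {
  conf_dir=${1:-/opt/soft_installed/hadoop-2.7.3/etc/hadoop}
  if [ -f "$conf_dir/mapred-site.xml" ]; then
    return 0
  fi
  if [ -f "$conf_dir/mapred-site.xml.template" ]; then
    cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
  else
    echo "no mapred-site.xml.template in $conf_dir" >&2
    return 1
  fi
}
```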

Configure yarn-site.xml

[root@master hadoop-2.7.3]# cat /opt/soft_installed/hadoop-2.7.3/etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>node3</value>

<description>Host that runs the ResourceManager</description>

</property>

<!-- Auxiliary shuffle service that MapReduce needs on every NodeManager -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- ResourceManager web UI address -->

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>node3:8088</value>

</property>

</configuration>

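Note: when the ResourceManager runs on a different host (node3 here), you also need passwordless login from node3 to the other nodes (node3 to node1, node2, node3 and node4). In Hadoop 2.x, `start-yarn.sh` starts the ResourceManager on the machine where it is run and starts the NodeManagers on the hosts listed in `slaves` over SSH. Therefore run `start-yarn.sh` and `stop-yarn.sh` on node3.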

Configure slaves

[root@master hadoop-2.7.3]# cat /opt/soft_installed/hadoop-2.7.3/etc/hadoop/slaves

node2

node3

node4

Distribute the configured Hadoop from the master node to the other nodes

scp -r /opt/soft_installed/hadoop-2.7.3/ node2:/opt/soft_installed/

scp -r /opt/soft_installed/hadoop-2.7.3/ node3:/opt/soft_installed/

scp -r /opt/soft_installed/hadoop-2.7.3/ node4:/opt/soft_installed/

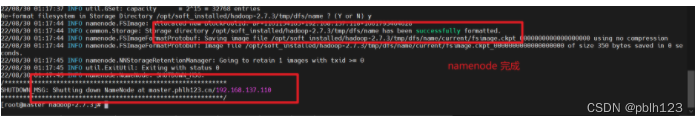
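The three `scp` commands above follow the hosts in the `slaves` file, so they can be driven from it. This sketch assumes passwordless SSH from the master and the install path used in this article. Setting `DRY_RUN=1` prints the commands instead of running them. The function name and the `DRY_RUN` switch are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: copy the configured Hadoop directory to every host in the slaves file.
# Set DRY_RUN=1 to print the scp commands instead of running them.
distribute_hadoop() {
  hadoop_dir=${1:-/opt/soft_installed/hadoop-2.7.3}
  slaves_file=${2:-$hadoop_dir/etc/hadoop/slaves}
  parent=$(dirname "$hadoop_dir")
  run=""
  if [ -n "${DRY_RUN:-}" ]; then
    run="echo"
  fi
  # Skip blank lines and comments; read one hostname per line.
  grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$slaves_file" |
    while IFS= read -r host; do
      # </dev/null keeps scp (and the ssh it runs) from reading the host list.
      $run scp -r "$hadoop_dir/" "$host:$parent/" </dev/null
    done
}
```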

Format HDFS and start Hadoop on the master node

Format HDFS

hdfs namenode -format

[root@master hadoop-2.7.3]# hdfs namenode -format

22/08/30 01:17:35 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master.phlh123.cn/192.168.137.110

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.3

STARTUP_MSG: classpath = /opt/soft_installed/hadoop-2.7.3/etc/hadoop:/opt/soft_installed/hadoop-2.7.3/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:... (remaining classpath entries omitted)

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r baa91f7c6bc9cb92be5982de4719c1c8af91ccff; compiled by 'root' on 2016-08-18T01:41Z

STARTUP_MSG: java = 1.8.0_171

************************************************************/

22/08/30 01:17:35 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

22/08/30 01:17:35 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-912122d8-d7d8-4035-afa6-6d3309ecbcc1

22/08/30 01:17:36 INFO namenode.FSNamesystem: No KeyProvider found.

22/08/30 01:17:36 INFO namenode.FSNamesystem: fsLock is fair:true

22/08/30 01:17:36 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

22/08/30 01:17:36 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

22/08/30 01:17:36 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

22/08/30 01:17:36 INFO blockmanagement.BlockManager: The block deletion will start around 2022 Aug 30 01:17:36

22/08/30 01:17:36 INFO util.GSet: Computing capacity for map BlocksMap

22/08/30 01:17:36 INFO util.GSet: VM type = 64-bit

22/08/30 01:17:36 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB

22/08/30 01:17:36 INFO util.GSet: capacity = 2^21 = 2097152 entries

22/08/30 01:17:37 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

22/08/30 01:17:37 INFO blockmanagement.BlockManager: defaultReplication = 2

22/08/30 01:17:37 INFO blockmanagement.BlockManager: maxReplication = 512

22/08/30 01:17:37 INFO blockmanagement.BlockManager: minReplication = 1

22/08/30 01:17:37 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

22/08/30 01:17:37 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

22/08/30 01:17:37 INFO blockmanagement.BlockManager: encryptDataTransfer = false

22/08/30 01:17:37 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

22/08/30 01:17:37 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

22/08/30 01:17:37 INFO namenode.FSNamesystem: supergroup = supergroup

22/08/30 01:17:37 INFO namenode.FSNamesystem: isPermissionEnabled = false

22/08/30 01:17:37 INFO namenode.FSNamesystem: HA Enabled: false

22/08/30 01:17:37 INFO namenode.FSNamesystem: Append Enabled: true

22/08/30 01:17:37 INFO util.GSet: Computing capacity for map INodeMap

22/08/30 01:17:37 INFO util.GSet: VM type = 64-bit

22/08/30 01:17:37 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB

22/08/30 01:17:37 INFO util.GSet: capacity = 2^20 = 1048576 entries

22/08/30 01:17:37 INFO namenode.FSDirectory: ACLs enabled? false

22/08/30 01:17:37 INFO namenode.FSDirectory: XAttrs enabled? true

22/08/30 01:17:37 INFO namenode.FSDirectory: Maximum size of an xattr: 16384

22/08/30 01:17:37 INFO namenode.NameNode: Caching file names occuring more than 10 times

22/08/30 01:17:37 INFO util.GSet: Computing capacity for map cachedBlocks

22/08/30 01:17:37 INFO util.GSet: VM type = 64-bit

22/08/30 01:17:37 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB

22/08/30 01:17:37 INFO util.GSet: capacity = 2^18 = 262144 entries

22/08/30 01:17:37 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

22/08/30 01:17:37 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

22/08/30 01:17:37 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

22/08/30 01:17:37 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

22/08/30 01:17:37 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

22/08/30 01:17:37 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

22/08/30 01:17:37 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

22/08/30 01:17:37 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

22/08/30 01:17:37 INFO util.GSet: Computing capacity for map NameNodeRetryCache

22/08/30 01:17:37 INFO util.GSet: VM type = 64-bit

22/08/30 01:17:37 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB

22/08/30 01:17:37 INFO util.GSet: capacity = 2^15 = 32768 entries

Re-format filesystem in Storage Directory /opt/soft_installed/hadoop-2.7.3/tmp/dfs/name ? (Y or N) y

22/08/30 01:17:44 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1161154185-192.168.137.110-1661793464628

22/08/30 01:17:44 INFO common.Storage: Storage directory /opt/soft_installed/hadoop-2.7.3/tmp/dfs/name has been successfully formatted.

22/08/30 01:17:44 INFO namenode.FSImageFormatProtobuf: Saving image file /opt/soft_installed/hadoop-2.7.3/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

22/08/30 01:17:44 INFO namenode.FSImageFormatProtobuf: Image file /opt/soft_installed/hadoop-2.7.3/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 350 bytes saved in 0 seconds.

22/08/30 01:17:45 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

22/08/30 01:17:45 INFO util.ExitUtil: Exiting with status 0

22/08/30 01:17:45 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master.phlh123.cn/192.168.137.110

************************************************************/

[root@master hadoop-2.7.3]#
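The `Re-format filesystem` prompt in the log shows that this NameNode had already been formatted once. Re-formatting gives the NameNode a new clusterID, and DataNodes that still hold the old one typically refuse to register, reporting `Incompatible clusterIDs` in their logs. This sketch guards against that by formatting only when the metadata directory has no `current/VERSION` file yet. Pass it the plain path configured as `dfs.namenode.name.dir`, without the `file:` prefix. `format_namenode_once` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: run "hdfs namenode -format" only if the NameNode metadata directory
# has not been formatted yet (a formatted directory contains current/VERSION).
format_namenode_once() {
  name_dir=${1:-}
  if [ -z "$name_dir" ]; then
    echo "usage: format_namenode_once NAME_DIR" >&2
    return 2
  fi
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted ($name_dir/current/VERSION exists); skipping" >&2
    return 0
  fi
  hdfs namenode -format
}
```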

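Start and stop Hadoop

# On node1 (master): start HDFS

/opt/soft_installed/hadoop-2.7.3/sbin/start-dfs.sh

# On node3 (the ResourceManager host): start YARN

/opt/soft_installed/hadoop-2.7.3/sbin/start-yarn.sh

# On node1: start the JobHistory server

/opt/soft_installed/hadoop-2.7.3/sbin/mr-jobhistory-daemon.sh start historyserver

# Stop Hadoop in the reverse order

# On node1: stop the JobHistory server

/opt/soft_installed/hadoop-2.7.3/sbin/mr-jobhistory-daemon.sh stop historyserver

# On node3: stop YARN

/opt/soft_installed/hadoop-2.7.3/sbin/stop-yarn.sh

# On node1: stop HDFS

/opt/soft_installed/hadoop-2.7.3/sbin/stop-dfs.sh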
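After start-up, `jps` (shipped with the JDK) lists the Java daemons on each node. Based on the configuration in this article, you would expect roughly:

- node1: NameNode and JobHistoryServer
- node2: DataNode, NodeManager and SecondaryNameNode
- node3: ResourceManager, DataNode and NodeManager
- node4: DataNode and NodeManager

This sketch reports which expected daemons are missing from a `jps` listing. The function name is illustrative. Sourcing /etc/profile over SSH is an assumption about where JAVA_HOME and PATH are set.

```shell
#!/usr/bin/env bash
# Sketch: read "jps" output on stdin and print each expected daemon that is absent.
# jps prints one "<pid> <MainClass>" pair per line, e.g. "2345 NameNode".
missing_daemons() {
  listing=$(cat)
  for daemon in "$@"; do
    if ! printf '%s\n' "$listing" |
         awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "$daemon"
    fi
  done
}
```

For example: `ssh node3 'source /etc/profile; jps' | missing_daemons ResourceManager DataNode NodeManager`. Empty output means all of them are running.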

Configure environment variables

# Edit the system-wide profile

vi /etc/profile

# Java environment variables

export JAVA_HOME=/opt/soft_installed/jdk1.8.0_171

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

# Hadoop environment variables

export HADOOP_HOME=/opt/soft_installed/hadoop-2.7.3

export HADOOP_LOG_DIR=/opt/soft_installed/hadoop-2.7.3/logs

export YARN_LOG_DIR=$HADOOP_LOG_DIR

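export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

# After saving /etc/profile, run this in the shell (do not add it to the file) so the variables take effect

source /etc/profile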

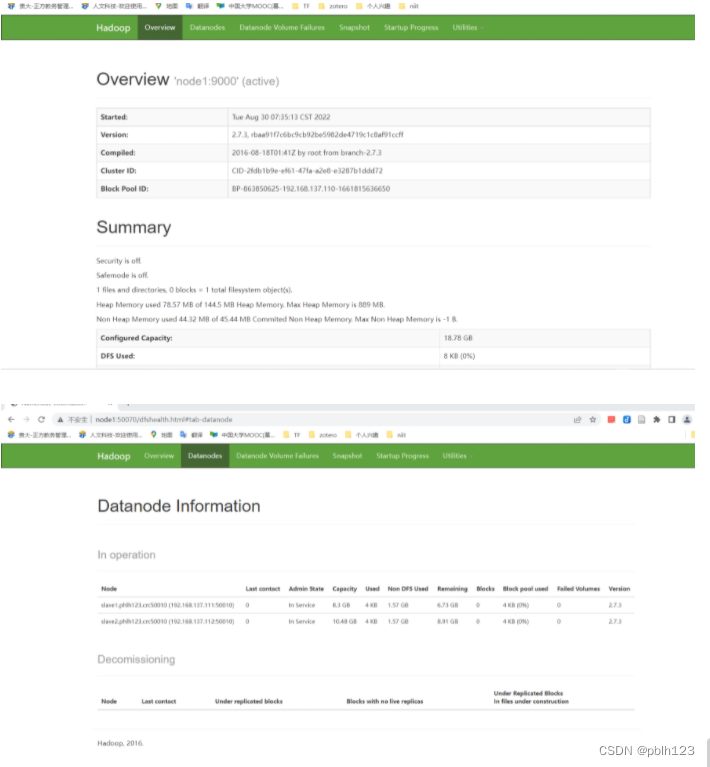
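Once the profile has been reloaded (for example with `source /etc/profile` or a new login), a quick check that the variables point at real installations can save debugging later. This sketch assumes the JDK and Hadoop layouts used above. `check_hadoop_env` is an illustrative name. `java -version` and `hadoop version` remain the usual manual checks.

```shell
#!/usr/bin/env bash
# Sketch: verify that JAVA_HOME and HADOOP_HOME point at usable installations.
# Prints every missing executable and returns non-zero if any is missing.
check_hadoop_env() {
  status=0
  for exe in "${JAVA_HOME:-}/bin/java" \
             "${HADOOP_HOME:-}/bin/hdfs" \
             "${HADOOP_HOME:-}/sbin/start-dfs.sh"; do
    if [ ! -x "$exe" ]; then
      echo "missing or not executable: $exe" >&2
      status=1
    fi
  done
  return "$status"
}
```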

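Verify in the web UIs: the NameNode UI at http://node1:50070 and the YARN ResourceManager UI at http://node3:8088, as configured above.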

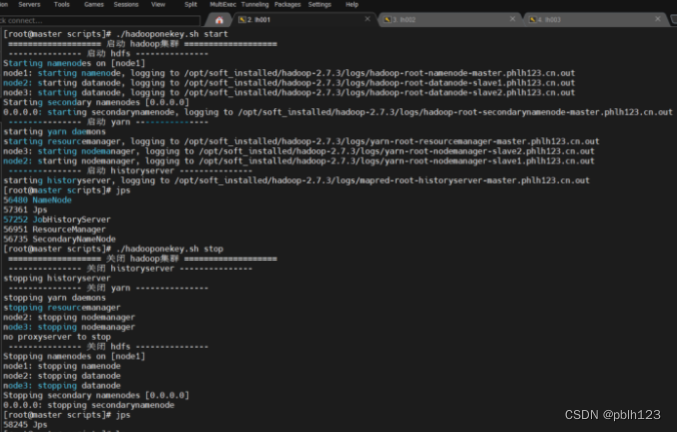
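The web check can also be scripted with `curl`. This sketch assumes curl is installed and that the hostnames resolve from the machine running it. It follows redirects (`-L`) because a UI's root page may redirect to its start page, and it treats a final HTTP 200 as "up". `web_ui_up` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the URL finally answers HTTP 200 within 5 seconds.
web_ui_up() {
  # -s silent, -L follow redirects, -m timeout, -w print the final status code.
  code=$(curl -s -L -o /dev/null -m 5 -w '%{http_code}' "$1")
  [ "$code" = "200" ]
}
```

For example: `for url in http://node1:50070 http://node3:8088 http://node1:19888; do if web_ui_up "$url"; then echo "up: $url"; else echo "DOWN: $url"; fi; done`. The ports are the ones configured in hdfs-site.xml, yarn-site.xml and mapred-site.xml.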

One-key start/stop script for Hadoop

[root@master scripts]# cat hadooponekey.sh

#!/bin/bash

if [ $# -lt 1 ]

then

echo "No Args Input..."

echo "To start the cluster: ./hadooponekey.sh start"

echo "To stop the cluster: ./hadooponekey.sh stop"

exit 1

fi

case $1 in

"start")

echo " =================== Starting the hadoop cluster ==================="

echo " --------------- Starting hdfs ---------------"

ssh node1 "/opt/soft_installed/hadoop-2.7.3/sbin/start-dfs.sh"

echo " --------------- Starting yarn (on ResourceManager host node3) ---------------"

ssh node3 "/opt/soft_installed/hadoop-2.7.3/sbin/start-yarn.sh"

echo " --------------- Starting historyserver ---------------"

ssh node1 "/opt/soft_installed/hadoop-2.7.3/sbin/mr-jobhistory-daemon.sh start historyserver"

;;

"stop")

echo " =================== Stopping the hadoop cluster ==================="

echo " --------------- Stopping historyserver ---------------"

ssh node1 "/opt/soft_installed/hadoop-2.7.3/sbin/mr-jobhistory-daemon.sh stop historyserver"

echo " --------------- Stopping yarn (on ResourceManager host node3) ---------------"

ssh node3 "/opt/soft_installed/hadoop-2.7.3/sbin/stop-yarn.sh"

echo " --------------- Stopping hdfs ---------------"

ssh node1 "/opt/soft_installed/hadoop-2.7.3/sbin/stop-dfs.sh"

;;

*)

echo "Input Args Error..."

;;

esac

# Make the script executable

chmod +x hadooponekey.sh

# Start

./hadooponekey.sh start

# Stop

./hadooponekey.sh stop

![image 13](/image/aHR0cHM6Ly9pLWJsb2cuY3NkbmltZy5jbi9ibG9nX21pZ3JhdGUvYmM0ODY2YjNjODVmYzlkMTUzMzE5NmIxMGI3ODdjZjIucG5n)

![image 14](/image/aHR0cHM6Ly9pLWJsb2cuY3NkbmltZy5jbi9ibG9nX21pZ3JhdGUvNTVmODFiNjY4OWFiYzUyMWY2ZTUwOGFkYmNkNjI1YzEucG5n)