I. Overview

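kube-prometheus is a complete monitoring stack for Kubernetes: Prometheus collects the cluster metrics and Grafana visualizes them. It includes the following components: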

- The Prometheus Operator

- Highly available Prometheus

- Highly available Alertmanager

- Prometheus node-exporter

- Prometheus Adapter for Kubernetes Metrics APIs (k8s-prometheus-adapter)

- kube-state-metrics

- Grafana

II. Deploying kube-prometheus

1. Download the kube-prometheus code

Method 1:

git clone https://github.com/prometheus-operator/kube-prometheus.git

cd kube-prometheus

git branch -r # list the available remote branches

git checkout release-0.9 # switch to the release that is compatible with your Kubernetes version

Method 2:

git clone -b release-0.9 https://github.com/prometheus-operator/kube-prometheus.git

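Note: release-0.11 and later ship NetworkPolicy manifests. By default they only allow traffic between the stack's own components. If you are familiar with NetworkPolicy, you can adjust the default rules; list the files with ls *networkPolicy*. If you leave the rules unchanged, the services stay unreachable from outside even after you switch them to NodePort. If you are not familiar with NetworkPolicy, you can simply delete these files.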
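If you take the "just delete them" route, a minimal sketch like the one below moves the files aside instead of deleting them, so they can be restored later. It assumes the manifests follow the `*networkPolicy*` naming used above; the function name `stash_network_policies` and the backup directory are hypothetical, not part of kube-prometheus.

```shell
#!/usr/bin/env bash
# Move every *networkPolicy* manifest out of a manifests directory so that
# `kubectl apply -f .` no longer creates them. Files are moved, not deleted.
# Hypothetical helper: the function name and the default backup directory
# are illustrative assumptions.
stash_network_policies() {
  local manifests_dir="$1"
  local backup_dir="${2:-$manifests_dir/../networkpolicy-backup}"
  local f moved=0
  mkdir -p "$backup_dir"
  for f in "$manifests_dir"/*networkPolicy*; do
    [ -e "$f" ] || continue   # the glob matched nothing
    mv "$f" "$backup_dir"/
    moved=$((moved + 1))
  done
  echo "$moved"               # number of files moved
}
```

For example, `stash_network_policies ./manifests` prints how many files were moved. Policies that were already applied to the cluster are not removed by this; they would still have to be deleted with kubectl.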

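2. Change the kube-prometheus image registries

Some images cannot be pulled from the upstream registries, so here we point the images for prometheus-operator, prometheus, alertmanager, kube-state-metrics, node-exporter and prometheus-adapter at mirrors hosted in China. I use the USTC (University of Science and Technology of China) mirror.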

cd ./kube-prometheus/manifests/

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' setup/prometheus-operator-deployment.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-prometheus.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' alertmanager-alertmanager.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' kube-state-metrics-deployment.yaml

sed -i 's/k8s.gcr.io/lank8s.cn/g' kube-state-metrics-deployment.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' node-exporter-daemonset.yaml

sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-adapter-deployment.yaml

sed -i 's/k8s.gcr.io/lank8s.cn/g' prometheus-adapter-deployment.yaml

grep "image: " * -r # check whether any image still points at an upstream registry
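The final grep prints every image line, which still has to be read by eye. As a rough sketch, the check can be narrowed to the two registries replaced above. The helper name `list_upstream_images` is hypothetical, and it only looks at unquoted `image:` fields, so registry references passed as container arguments are not covered.

```shell
#!/usr/bin/env bash
# Print every image reference under a directory that still points at one of
# the upstream registries replaced above (quay.io, k8s.gcr.io).
# Hypothetical helper; assumes unquoted `image:` values.
list_upstream_images() {
  local dir="$1"
  grep -rhE '^[[:space:]]*(- )?image:[[:space:]]' "$dir" \
    | grep -E '[[:space:]](quay\.io|k8s\.gcr\.io)/' \
    | sed -E 's/^[[:space:]]*(- )?image:[[:space:]]*//' \
    | sort -u
}
```

Running `list_upstream_images .` inside the manifests directory should print nothing once all the sed replacements have been applied.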

3. Change the Service type to NodePort

To reach Prometheus, Alertmanager and Grafana from outside the cluster, change the Service type of each of them to NodePort.

- Modify the Prometheus Service

cat prometheus-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.29.1
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: web
    port: 9090
    nodePort: 30090 # added
    targetPort: web
  selector:
    app: prometheus
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    prometheus: k8s
  sessionAffinity: ClientIP

- Modify the Alertmanager Service

cat alertmanager-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    alertmanager: main
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.22.2
  name: alertmanager-main
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: web
    port: 9093
    nodePort: 30093 # added
    targetPort: web
  selector:
    alertmanager: main
    app: alertmanager
    app.kubernetes.io/component: alert-router
    app.kubernetes.io/name: alertmanager
    app.kubernetes.io/part-of: kube-prometheus
  sessionAffinity: ClientIP

- Modify the Grafana Service

cat grafana-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 8.1.1
  name: grafana
  namespace: monitoring
spec:
  type: NodePort # added
  ports:
  - name: http
    port: 3000
    nodePort: 32000 # added
    targetPort: http
  selector:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
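If the stack is already running, the same change can probably be made without editing the files, by patching the live Services. The sketch below assumes kubectl's default strategic merge patch, which merges Service ports by their `port` value. The helper names `nodeport_patch` and `expose_as_nodeport` are hypothetical.

```shell
#!/usr/bin/env bash
# Build a strategic-merge patch that switches a Service to NodePort and pins
# the node port for one service port. Hypothetical helper name.
nodeport_patch() {
  local port="$1" node_port="$2"
  printf '{"spec":{"type":"NodePort","ports":[{"port":%d,"nodePort":%d}]}}' \
    "$port" "$node_port"
}

# Apply the patch to a Service in the monitoring namespace.
expose_as_nodeport() {
  local service="$1" port="$2" node_port="$3"
  kubectl -n monitoring patch service "$service" \
    -p "$(nodeport_patch "$port" "$node_port")"
}
```

With the ports used above, the calls would be `expose_as_nodeport prometheus-k8s 9090 30090`, `expose_as_nodeport alertmanager-main 9093 30093` and `expose_as_nodeport grafana 3000 32000`. Editing the manifests, as shown in this step, keeps the change reproducible.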

4. Change the Prometheus retention period

vim prometheus-prometheus.yaml

Add: spec.retention: 15d
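The edit means adding a `retention` key directly under the top-level `spec:` of the Prometheus resource. As a sketch, the same edit can be scripted. The helper name `set_retention` is hypothetical, and it assumes the manifest uses two-space indentation with `spec:` at column zero.

```shell
#!/usr/bin/env bash
# Insert `retention: <value>` directly under the top-level `spec:` key of a
# Prometheus manifest, unless a retention setting is already present.
# Hypothetical helper; assumes two-space indentation.
set_retention() {
  local file="$1" value="${2:-15d}"
  if grep -qE '^  retention:' "$file"; then
    echo "retention already set in $file"
    return 0
  fi
  awk -v v="$value" '
    { print }
    /^spec:/ && !done { print "  retention: " v; done = 1 }
  ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}
```

Usage: `set_retention prometheus-prometheus.yaml 15d`.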

5. Install kube-prometheus and check its status

kubectl apply -f setup/

# Pulling the prometheus-operator image takes a few minutes; wait until the prometheus-operator pod is Running

kubectl apply -f .

watch kubectl get po -n monitoring # wait until all pods are Running
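The manual wait between the two apply commands can be replaced with `kubectl wait`. The sketch below waits for the CustomResourceDefinitions to become established before applying the rest. The function name `install_stack` and the 120-second timeout are assumptions, and it is meant to be run from the manifests directory.

```shell
#!/usr/bin/env bash
# Apply the CRDs and the operator first, wait until the CRDs are established,
# then apply the rest of the stack. Each step runs only if the previous one
# succeeded. Hypothetical helper; the timeout value is an assumption.
install_stack() {
  kubectl apply -f setup/ &&
  kubectl wait --for condition=Established --all CustomResourceDefinition --timeout=120s &&
  kubectl apply -f .
}
```

After `install_stack` returns, the `watch` command above is still the simplest way to follow the pods until they are all Running.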

6. Access Prometheus through an Ingress

cat > prometheus-ingress.yaml << 'EOF'
# extensions/v1beta1 Ingress was removed in Kubernetes 1.22;
# networking.k8s.io/v1 is available from Kubernetes 1.19.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: monitoring
  name: prometheus-ingress
spec:
  ingressClassName: nginx
  rules:
  - host: grafana.xxx.net # hostname for Grafana
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 3000
  - host: prometheus.xxx.net # hostname for Prometheus
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090
  - host: alertmanager.xxx.net # hostname for Alertmanager
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: alertmanager-main
            port:
              number: 9093
EOF

kubectl apply -f prometheus-ingress.yaml

III. Access

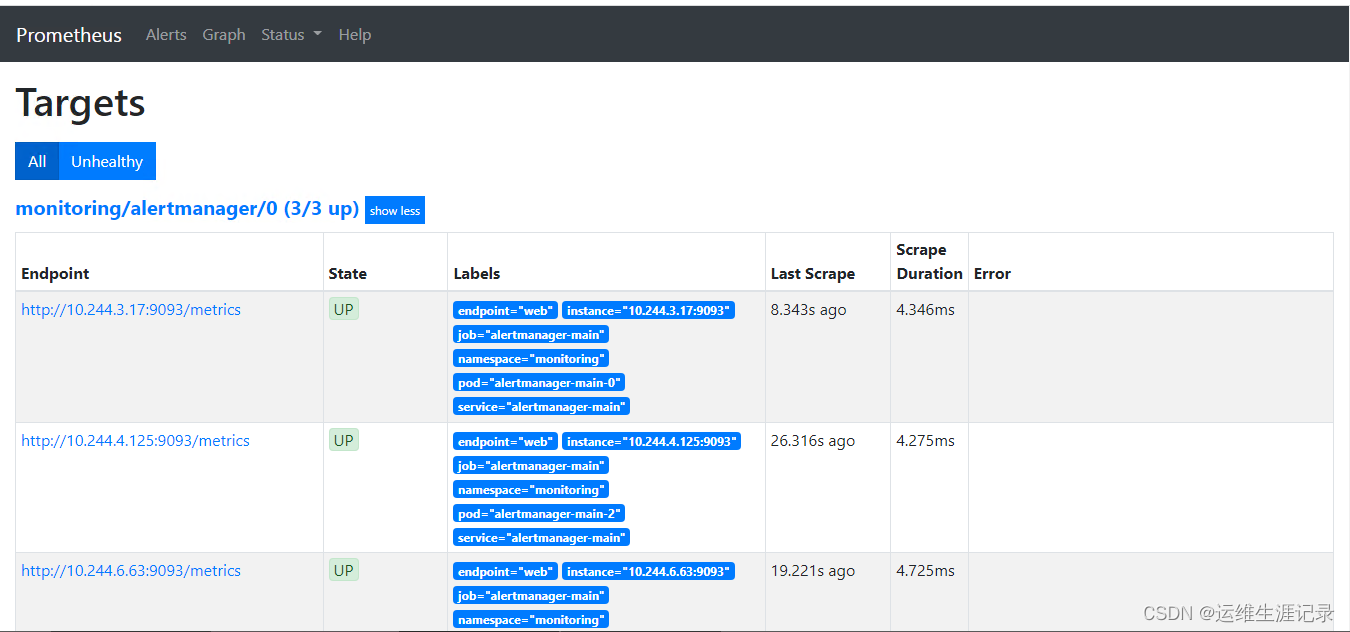

Access Prometheus:

Open in a browser: http://prometheus.xxx.net

On the targets page, make sure the State of every target is UP.
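The same check can be scripted against Prometheus' HTTP API, whose `/api/v1/targets` endpoint reports a `health` value for each target. The sketch below counts the targets that are not up. The helper name `count_unhealthy_targets` is hypothetical, and it assumes the compact JSON that Prometheus returns, with no spaces around the colons.

```shell
#!/usr/bin/env bash
# Read the JSON returned by /api/v1/targets on stdin and print the number of
# targets whose health is anything other than "up".
# Hypothetical helper; assumes compact JSON output.
count_unhealthy_targets() {
  # grep -c exits non-zero when the count is 0, hence the `|| true`.
  grep -o '"health":"[a-z]*"' | grep -vc '"health":"up"' || true
}
```

For example: `curl -s http://prometheus.xxx.net/api/v1/targets | count_unhealthy_targets`. A result of 0 corresponds to every target showing UP in the web UI.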

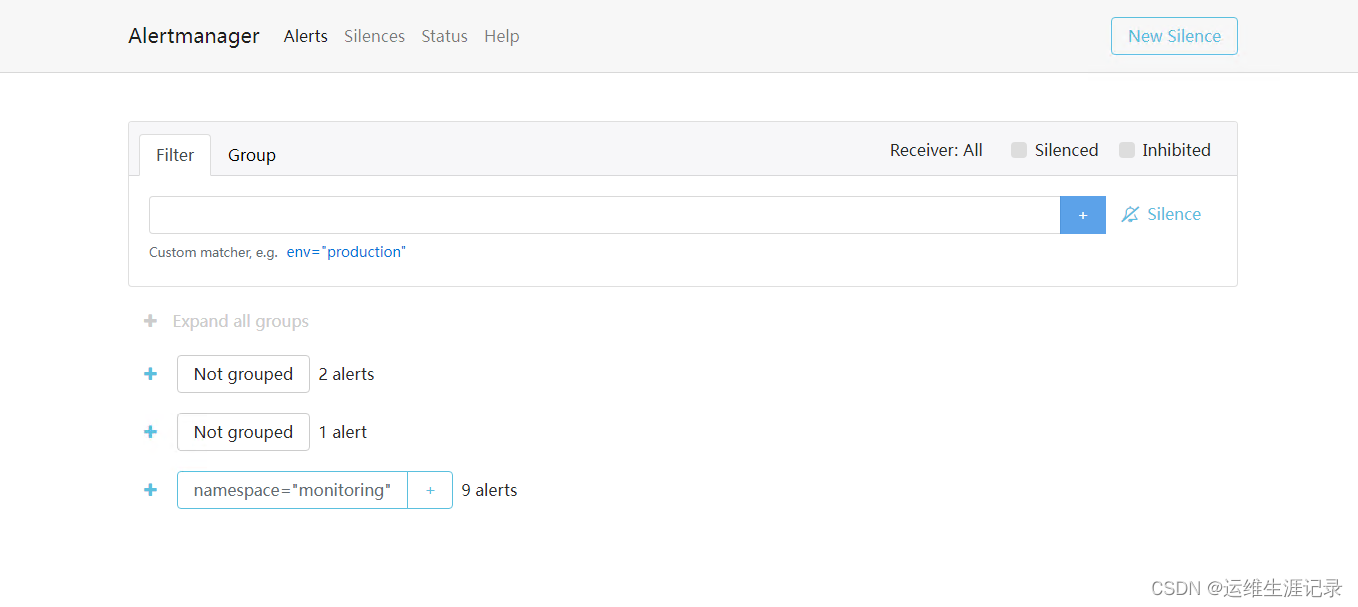

Access Alertmanager:

Open in a browser: http://alertmanager.xxx.net

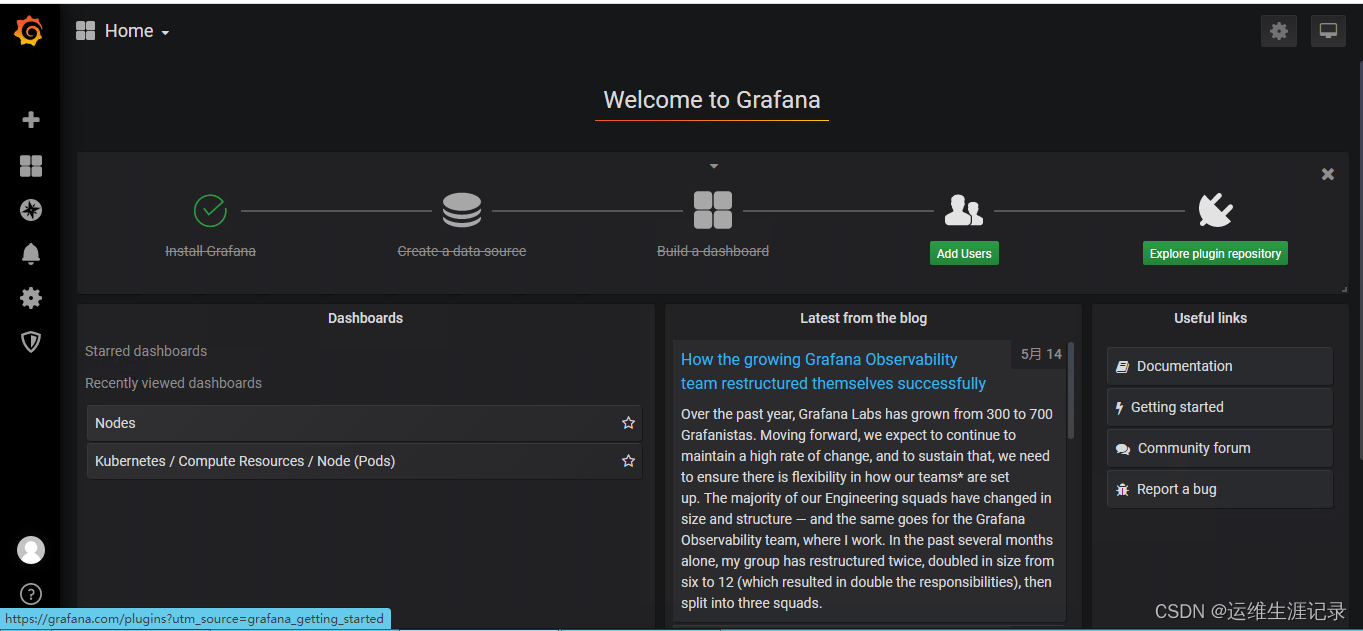

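Access Grafana:

Open in a browser: http://grafana.xxx.net

On first login, the username and password are both admin. After logging in you are prompted to change the password; you can choose Skip.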

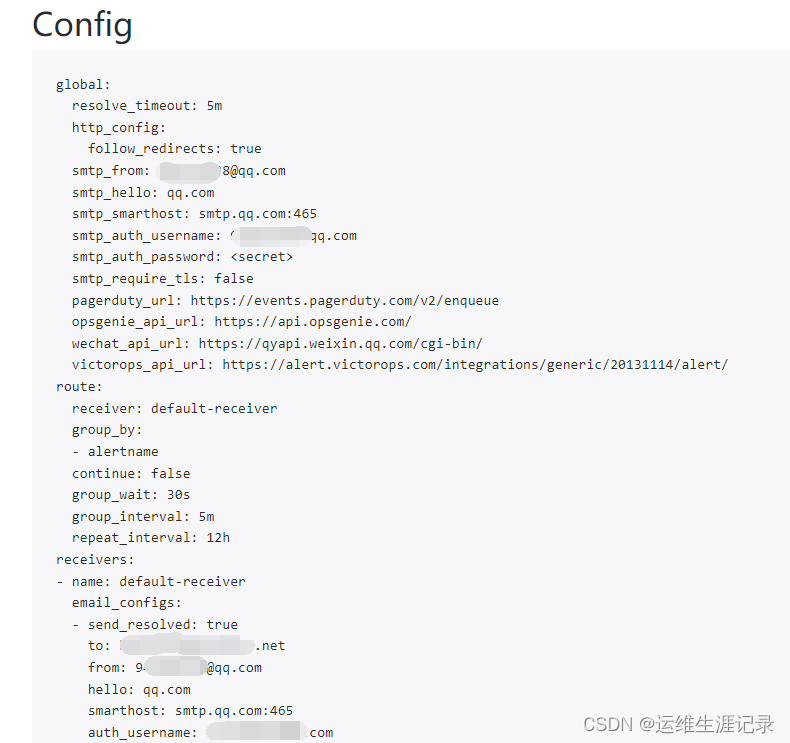

IV. Alertmanager email alerts

cat > alertmanager-secret.yaml <<'EOF'
apiVersion: v1
data: {}
kind: Secret
metadata:
  name: alertmanager-main
  namespace: monitoring
stringData:
  alertmanager.yaml: |-
    global:
      resolve_timeout: 5m
      smtp_smarthost: 'smtp.qq.com:465'
      smtp_from: '[email protected]' # sender address
      smtp_auth_username: '[email protected]' # sender address
      smtp_auth_password: 'lkjtrbnmqfesbdhj' # QQ Mail authorization code
      smtp_hello: 'qq.com'
      smtp_require_tls: false
    route:
      group_by: ['alertname']
      group_interval: 5m
      group_wait: 30s
      receiver: default-receiver
      repeat_interval: 12h
      routes:
      - receiver: 'lms-saas'
        match_re:
          namespace: ^(lms-saas|lms-standard).*$ # route alerts by namespace
    receivers:
    - name: 'default-receiver'
      email_configs:
      - to: '[email protected]' # recipient address; add one entry per recipient
        send_resolved: true
      - to: '[email protected]'
        send_resolved: true
    - name: 'lms-saas' # must match the receiver name used in the route above
      email_configs:
      - to: '[email protected]' # recipient address; multiple entries are allowed here too
        send_resolved: true
      - to: '[email protected]'
        send_resolved: true
type: Opaque
EOF

kubectl delete -f alertmanager-secret.yaml

kubectl apply -f alertmanager-secret.yaml
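After re-creating the Secret, it can help to confirm that the cluster holds the configuration you expect. A minimal sketch, with a hypothetical function name, that decodes the `alertmanager.yaml` key of the Secret:

```shell
#!/usr/bin/env bash
# Print the Alertmanager configuration currently stored in the
# alertmanager-main Secret. Hypothetical helper name.
show_alertmanager_config() {
  kubectl -n monitoring get secret alertmanager-main \
    -o 'jsonpath={.data.alertmanager\.yaml}' | base64 --decode
}
```

Calling `show_alertmanager_config` should print the same YAML that was written under `stringData` above. Alertmanager may take a short while to pick up the new configuration.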

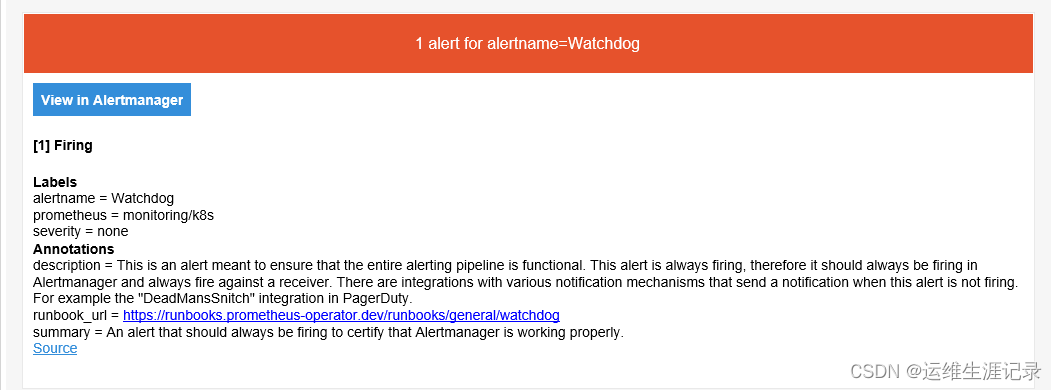

Check that the email alert configuration has taken effect.

Check that the alert emails actually arrive.

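V. Alertmanager DingTalk alerts

1. Register a DingTalk account -> create an alert group -> open robot management

2. Choose Custom (integrate a custom service through a webhook)

- Copy the generated Webhook URL

3. Write the DingTalk YAML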

cat > dingtalk-webhook.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    run: dingtalk
  name: webhook-dingtalk
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      run: dingtalk
  template:
    metadata:
      labels:
        run: dingtalk
    spec:
      containers:
      - name: dingtalk
        image: timonwong/prometheus-webhook-dingtalk:v1.4.0
        imagePullPolicy: IfNotPresent
        # After creating the custom robot in the DingTalk group, replace <your-token> below with its real access_token
        args:
        - --ding.profile=webhook1=https://oapi.dingtalk.com/robot/send?access_token=<your-token>
        ports:
        - containerPort: 8060
          protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    run: dingtalk
  name: webhook-dingtalk
  namespace: monitoring
spec:
  ports:
  - port: 8060
    protocol: TCP
    targetPort: 8060
  selector:
    run: dingtalk
  sessionAffinity: None
EOF

kubectl apply -f dingtalk-webhook.yaml # once applied, the pod and Service come up; the Service listens on port 8060

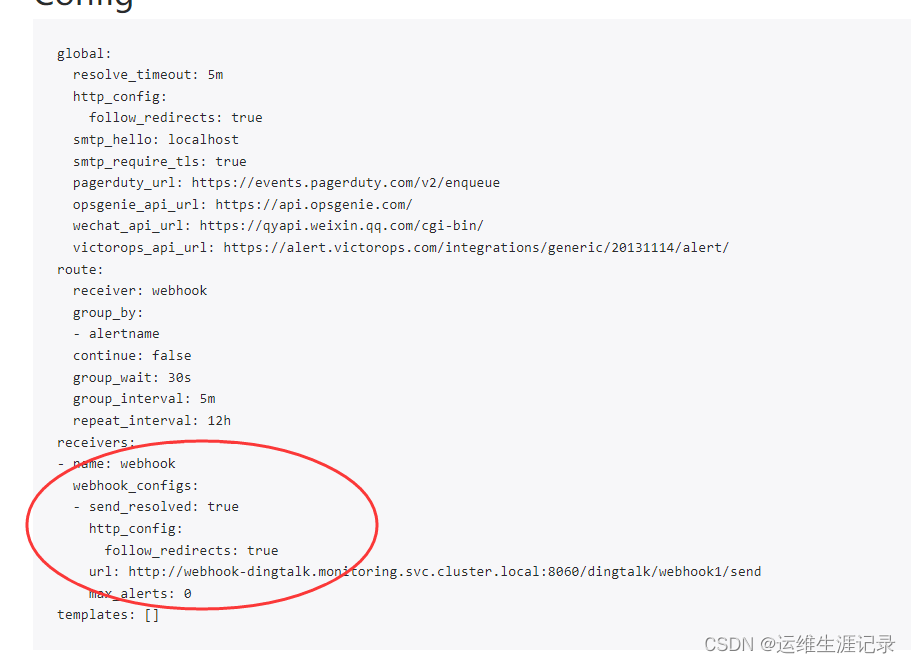

4. Configure alert notifications in Alertmanager

cat > alertmanager-secret.yaml <<'EOF'
apiVersion: v1
data: {}
kind: Secret
metadata:
  name: alertmanager-main
  namespace: monitoring
stringData:
  alertmanager.yaml: |-
    global:
      resolve_timeout: 5m
    route:
      group_by: ['alertname']
      group_interval: 5m
      group_wait: 30s
      receiver: "webhook"
      repeat_interval: 12h
    receivers:
    # webhook receiver for DingTalk alerts
    - name: 'webhook'
      webhook_configs:
      - url: 'http://webhook-dingtalk.monitoring.svc.cluster.local:8060/dingtalk/webhook1/send'
        send_resolved: true
type: Opaque
EOF

kubectl apply -f alertmanager-secret.yaml

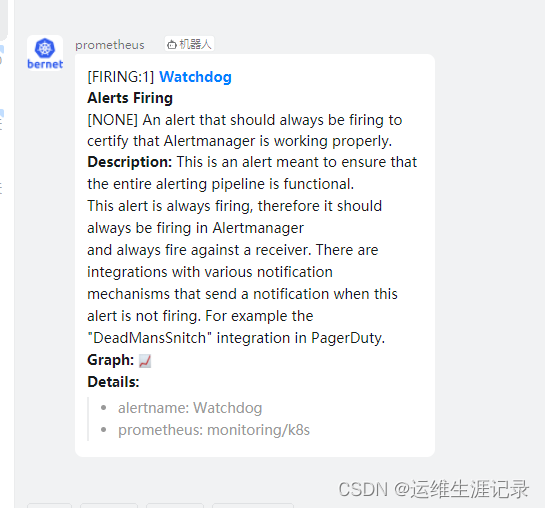

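5. Check that the DingTalk alert configuration has taken effect

6. Check that the DingTalk group receives alerts

kubectl run busybox --image=busybox # start a container that fails, to trigger an alert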
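Waiting for a failing pod to trip an alerting rule can take several minutes. As an alternative sketch, a test alert can be posted straight to Alertmanager's v2 HTTP API (`POST /api/v2/alerts`), which should then flow through the configured route to email or DingTalk. The helper names, the alert name and the label values below are hypothetical.

```shell
#!/usr/bin/env bash
# Build a one-alert JSON payload for Alertmanager's POST /api/v2/alerts.
# Hypothetical helper; label and annotation values are illustrative.
test_alert_payload() {
  local name="${1:-TestAlert}" namespace="${2:-default}"
  printf '[{"labels":{"alertname":"%s","namespace":"%s","severity":"warning"},"annotations":{"summary":"manual test alert"}}]' \
    "$name" "$namespace"
}

# Post the payload to an Alertmanager base URL.
send_test_alert() {
  local base_url="$1"
  shift
  curl -sS -X POST -H 'Content-Type: application/json' \
    -d "$(test_alert_payload "$@")" "$base_url/api/v2/alerts"
}
```

For example, `send_test_alert http://alertmanager.xxx.net TestAlert lms-saas` would exercise the namespace-based route from the email configuration. The notification goes out after the route's `group_wait` has elapsed.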