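CPU: at least 2 cores

Memory: at least 4 GB

- A Linux host running a Debian- or Red Hat-based distribution
- 2 GB of RAM or more; with less than 2 GB, applications may fail to run
- 2 or more CPU cores
- Network connectivity: every host in the cluster must be able to reach the others, and ideally the Internet as well
- A unique hostname, MAC address, and product UUID on every host in the cluster
- Swap disabled: the swap device must be turned off for Kubernetes to work properly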

| Software | Version |
| --- | --- |
| Operating system (CentOS) | 7.3.1611 |
| Kernel | 5.18.0 |
| ipvsadm | 1.27 |
| ipset | 7.1 |
| docker | 20.10.16 |
| docker-compose | 1.26.1 |
| Kubernetes | 1.21.0 |
| bash-completion | 2.1 |
| kuboard | 3.3.0 |

| IP | Hostname | Role |
| --- | --- | --- |
| 192.168.80.130 | master | master, etcd |
| 192.168.80.131 | node1 | node1 |
| 192.168.80.132 | node2 | node2 |

I. Environment Preparation

1. Time synchronization

Start the chronyd service:

systemctl start chronyd

Enable chronyd to start at boot:

systemctl enable chronyd

Check the current time on each host with the date command:

date
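`date` only shows the local clock; it does not tell you whether chronyd has actually locked onto a time source. A minimal sketch of a stricter check, assuming chrony's `chronyc tracking` output contains a `Leap status` line that reads `Not synchronised` while no source is usable; the function name `chrony_is_synced` is hypothetical:

```shell
# Hypothetical helper: succeed only if `chronyc tracking` output (read from
# stdin) reports a synchronized clock.
# Assumption: chrony prints "Leap status     : Not synchronised" while it has
# no usable time source, and another value such as "Normal" once it does.
chrony_is_synced() {
  local leap
  # Keep only the "Leap status" line; no such line means unexpected input.
  leap=$(grep -E '^Leap status' || true)
  [ -n "$leap" ] || return 1
  # Treat the clock as synchronized unless the line says "Not synchronised".
  ! printf '%s\n' "$leap" | grep -q 'Not synchronised'
}
```

Usage on each host: `chronyc tracking | chrony_is_synced && echo synchronized`.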

2. Kernel upgrade (all four hosts)

(1) Check the CentOS version

[root@server1 ~]# cat /etc/centos-release

CentOS Linux release 7.3.1611 (Core)

(2) Check the kernel version

[root@server1 ~]# uname -sr

Linux 3.10.0-514.el7.x86_64

(3) Enable the ELRepo repository on CentOS 7

[root@server1 ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

[root@server1 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

Retrieving http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

Retrieving http://elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm

Preparing... ################################# [100%]

Updating / installing...

1:elrepo-release-7.0-4.el7.elrepo ################################# [100%]

(4) When that finishes, list the available kernel packages

[root@harbor ~]# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Loaded plugins: fastestmirror, langpacks

Repository 'yum' is missing name in configuration, using id

elrepo-kernel | 3.0 kB 00:00:00

elrepo-kernel/primary_db | 2.1 MB 00:00:08

Loading mirror speeds from cached hostfile

* elrepo-kernel: hkg.mirror.rackspace.com

Available Packages

elrepo-release.noarch 7.0-5.el7.elrepo elrepo-kernel

kernel-lt.x86_64 5.4.196-1.el7.elrepo elrepo-kernel

kernel-lt-devel.x86_64 5.4.196-1.el7.elrepo elrepo-kernel

kernel-lt-doc.noarch 5.4.196-1.el7.elrepo elrepo-kernel

kernel-lt-headers.x86_64 5.4.196-1.el7.elrepo elrepo-kernel

kernel-lt-tools.x86_64 5.4.196-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs.x86_64 5.4.196-1.el7.elrepo elrepo-kernel

kernel-lt-tools-libs-devel.x86_64 5.4.196-1.el7.elrepo elrepo-kernel

kernel-ml.x86_64 5.18.0-1.el7.elrepo elrepo-kernel

kernel-ml-devel.x86_64 5.18.0-1.el7.elrepo elrepo-kernel

kernel-ml-doc.noarch 5.18.0-1.el7.elrepo elrepo-kernel

kernel-ml-headers.x86_64 5.18.0-1.el7.elrepo elrepo-kernel

kernel-ml-tools.x86_64 5.18.0-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs.x86_64 5.18.0-1.el7.elrepo elrepo-kernel

kernel-ml-tools-libs-devel.x86_64 5.18.0-1.el7.elrepo elrepo-kernel

perf.x86_64 5.18.0-1.el7.elrepo elrepo-kernel

python-perf.x86_64 5.18.0-1.el7.elrepo elrepo-kernel

(5) Install the latest mainline kernel (kernel-ml)

[root@harbor ~]# yum --enablerepo=elrepo-kernel install kernel-ml

Loaded plugins: fastestmirror, langpacks

Repository 'yum' is missing name in configuration, using id

base | 3.6 kB 00:00:00

elrepo | 3.0 kB 00:00:00

elrepo-kernel | 3.0 kB 00:00:00

extras | 2.9 kB 00:00:00

updates | 2.9 kB 00:00:00

file:///media/repodata/repomd.xml: [Errno 14] curl#37 - "Couldn't open file /media/repodata/repomd.xml"

Trying other mirror.

elrepo/primary_db | 547 kB 00:00:51

Loading mirror speeds from cached hostfile

* elrepo: mirrors.tuna.tsinghua.edu.cn

* elrepo-kernel: mirrors.tuna.tsinghua.edu.cn

Resolving Dependencies

--> Running transaction check

---> Package kernel-ml.x86_64 0:5.18.0-1.el7.elrepo will be installed

--> Finished Dependency Resolution

Dependencies Resolved

======================================================================================================

Package Arch Version Repository Size

======================================================================================================

Installing:

kernel-ml x86_64 5.18.0-1.el7.elrepo elrepo-kernel 56 M

Transaction Summary

======================================================================================================

Install 1 Package

Total download size: 56 M

Installed size: 257 M

Is this ok [y/d/N]: y

Downloading packages:

kernel-ml-5.18.0-1.el7.elrepo. FAILED 22 MB 9668:25:40 ETA

http://elrepo.reloumirrors.net/kernel/el7/x86_64/RPMS/kernel-ml-5.18.0-1.el7.elrepo.x86_64.rpm: [Errno 12] Timeout on http://elrepo.reloumirrors.net/kernel/el7/x86_64/RPMS/kernel-ml-5.18.0-1.el7.elrepo.x86_64.rpm: (28, 'Operation too slow. Less than 1000 bytes/sec transferred the last 30 seconds')

Trying other mirror.

kernel-ml-5.18.0-1.el7.elrepo.x86_64.rpm | 56 MB 00:18:33

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Warning: RPMDB altered outside of yum.

** Found 3 pre-existing rpmdb problem(s), 'yum check' output follows:

ipa-client-4.4.0-12.el7.centos.x86_64 has installed conflicts freeipa-client: ipa-client-4.4.0-12.el7.centos.x86_64

ipa-client-common-4.4.0-12.el7.centos.noarch has installed conflicts freeipa-client-common: ipa-client-common-4.4.0-12.el7.centos.noarch

ipa-common-4.4.0-12.el7.centos.noarch has installed conflicts freeipa-common: ipa-common-4.4.0-12.el7.centos.noarch

Installing : kernel-ml-5.18.0-1.el7.elrepo.x86_64 1/1

Verifying : kernel-ml-5.18.0-1.el7.elrepo.x86_64 1/1

Installed:

kernel-ml.x86_64 0:5.18.0-1.el7.elrepo

Complete!

(6) Make the new kernel the default boot entry by editing GRUB

[root@harbor ~]# vim /etc/default/grub

GRUB_DEFAULT=0

# Boot the first kernel listed on the GRUB menu by default

(7) Regenerate the GRUB configuration

[root@harbor ~]# grub2-mkconfig -o /boot/grub2/grub.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-5.18.0-1.el7.elrepo.x86_64

Found initrd image: /boot/initramfs-5.18.0-1.el7.elrepo.x86_64.img

Found linux image: /boot/vmlinuz-3.10.0-514.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-514.el7.x86_64.img

Found linux image: /boot/vmlinuz-0-rescue-37d488903aad4a53a78ffddf50376cba

Found initrd image: /boot/initramfs-0-rescue-37d488903aad4a53a78ffddf50376cba.img

done

(8) Reboot the system, then verify the running kernel with uname -sr

reboot
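After the reboot, `uname -sr` should report the 5.18.0 kernel instead of 3.10.0. The comparison can be scripted; this is a sketch that assumes GNU coreutils `sort -V` (version sort), and `version_ge` / `kernel_at_least` are hypothetical names:

```shell
# Hypothetical helper: succeed if version string $1 >= version string $2.
# Relies on GNU `sort -V`, which orders strings by their embedded version numbers.
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical check: is the running kernel at least version $1?
kernel_at_least() {
  version_ge "$(uname -r)" "$1"
}
```

For example, `kernel_at_least 4.19 || echo 'still running the old 3.10 kernel'`; any threshold above 3.10 distinguishes the two kernels.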

3. Set the hostname (all four hosts)

[root@harbor ~]# vim /etc/hostname

master

4. Add all four hosts to /etc/hosts

vim /etc/hosts

192.168.80.130 master

192.168.80.131 node1

192.168.80.132 node2

192.168.80.133 harbor
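Editing the file by hand on four machines makes it easy to add a duplicate entry when the step is repeated. A minimal sketch of an idempotent alternative, assuming plain hostnames made of letters, digits and `-` (no regex metacharacters); `add_host_entry` is a hypothetical name:

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless HOSTNAME
# is already mapped on some non-comment line, so re-running is harmless.
# Assumes HOSTNAME contains only letters, digits and "-"
# (true for master, node1, node2 and harbor).
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Match the name as a whole word after whitespace, on a line with no "#" before it.
  if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Usage: `add_host_entry /etc/hosts 192.168.80.130 master`, and likewise for node1, node2 and harbor.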

5. Disable swap (all four hosts)

Temporarily (until the next reboot):

swapoff -a

Permanently (delete or comment out the swap line, then reboot):

vim /etc/fstab

#/dev/mapper/cl-swap swap swap defaults 0 0
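The fstab edit can also be done without an editor. A sketch that comments out every active entry whose filesystem type (third field) is `swap` and keeps a backup; `disable_swap_in_fstab` is a hypothetical name, and the `.bak` suffix is an arbitrary choice:

```shell
# Hypothetical helper: comment out every active fstab entry whose third
# field (filesystem type) is "swap", keeping a copy in FILE.bak.
disable_swap_in_fstab() {
  local fstab=${1:-/etc/fstab} tmp rc=0
  tmp=$(mktemp) || return 1
  # Lines already starting with "#" (optionally after blanks) are left alone.
  awk '!/^[ \t]*#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab" > "$tmp" &&
    cp "$fstab" "$fstab.bak" &&
    cat "$tmp" > "$fstab" || rc=1
  rm -f "$tmp"
  return "$rc"
}
```

Usage: `swapoff -a && disable_swap_in_fstab /etc/fstab`; afterwards `free -m` should show 0 swap.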

6. Disable the firewall

systemctl disable firewalld.service && systemctl stop firewalld.service

7. Disable SELinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

cat /etc/selinux/config

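Reboot for the SELinux change to take effect (in the meantime, setenforce 0 switches SELinux to permissive mode immediately).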
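The `sed` above only rewrites `SELINUX=enforcing`, so a host that is already set to `permissive` is left unchanged. A sketch that sets the mode to `disabled` whatever its current value, touching only the `SELINUX=` line; `disable_selinux_config` is a hypothetical name:

```shell
# Hypothetical helper: force SELINUX=disabled in an SELinux config file,
# whatever the current mode (enforcing or permissive) is.
# "^SELINUX=" does not match the SELINUXTYPE= line, which is left as-is.
disable_selinux_config() {
  local conf=${1:-/etc/selinux/config}
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}
```

Usage: `disable_selinux_config /etc/selinux/config && setenforce 0`; `getenforce` then prints `Permissive` until the reboot and `Disabled` after it.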

8. Flush iptables rules

iptables -F

9. Pass bridged IPv4 traffic to the iptables chains and apply the settings (all four hosts)

vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness = 0

sysctl -p
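The two `net.bridge.*` keys only exist while the `br_netfilter` kernel module is loaded; without it, `sysctl -p` reports "No such file or directory" for them. A sketch that persists both the module and the settings as drop-in files, assuming systemd's `/etc/modules-load.d` and the `/etc/sysctl.d` directory; the file name `k8s.conf` and the function name `write_k8s_net_config` are illustrative choices, not something this setup requires:

```shell
# Sketch: persist br_netfilter loading and the bridge/forwarding sysctls.
# ROOT defaults to "/" but can point at a scratch directory for a dry run.
write_k8s_net_config() {
  local root=${1:-/}
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # systemd-modules-load reads this file at boot and loads br_netfilter.
  printf 'br_netfilter\n' > "$root/etc/modules-load.d/k8s.conf"
  # Same four settings as in /etc/sysctl.conf above.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness = 0
EOF
}
```

Usage: `write_k8s_net_config / && modprobe br_netfilter && sysctl --system`. Keep the settings either here or in /etc/sysctl.conf, not in both.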

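10. Prerequisites for IPVS mode in kube-proxy (all four hosts)

IPVS is already part of the mainline kernel, so enabling IPVS for kube-proxy only requires loading the following kernel modules. Run the following script on every Kubernetes node (master, node1, and node2):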

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

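chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

The script creates /etc/sysconfig/modules/ipvs.modules so that the required modules are loaded automatically after a node reboots. Use lsmod | grep -e ip_vs -e nf_conntrack to check that the required kernel modules are loaded. (Since kernel 4.19, nf_conntrack_ipv4 has been merged into nf_conntrack, so grepping for nf_conntrack_ipv4 finds nothing on the 5.18 kernel installed above.)

Install the ipset package on all nodes; to make IPVS rules easier to inspect, also install ipvsadm (optional):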

yum install ipset ipvsadm

reboot
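After the reboot, the `lsmod | grep` check can be turned into an explicit list of what is missing. A sketch that reads `lsmod` output and prints each module from the ipvs.modules script that is not loaded; `missing_ipvs_modules` is a hypothetical name:

```shell
# Hypothetical helper: read `lsmod` output on stdin and print each required
# IPVS-related module that is not loaded (no output = all present).
# The module list mirrors /etc/sysconfig/modules/ipvs.modules above.
missing_ipvs_modules() {
  local loaded m
  # Column 1 of lsmod is the module name; line 1 is the "Module" header.
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
  done
}
```

Usage: `lsmod | missing_ipvs_modules` prints nothing when every module is loaded.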

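II. Install the container runtime: Docker CE (all four hosts)

1. Install Docker dependencies and add the Docker CE repository (switched to the Aliyun mirror)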

[root@master ~]# yum install -y yum-utils

[root@master ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

[root@node2 ~]# sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

2. Install Docker CE

[root@master ~]# sudo yum makecache fast

[root@master ~]# sudo yum -y install docker-ce

3. Start Docker

[root@master ~]# systemctl start docker

4. Run a test container

[root@master ~]# docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

2db29710123e: Pull complete

Digest: sha256:80f31da1ac7b312ba29d65080fddf797dd76acfb870e677f390d5acba9741b17

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

5. Configure the Aliyun registry mirror (accelerator)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://hg8sb1a6.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

6. Enable Docker to start at boot

systemctl enable docker

III. Install docker-compose

1. Download the docker-compose binary

curl -L https://github.com/docker/compose/releases/download/1.26.1/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

systemctl restart docker

docker info

2. Check the docker-compose version

docker-compose --version

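IV. Install Kubernetes with a deployment tool

The official documentation describes three deployment tools for Kubernetes:

- kubeadm, which deploys a self-bootstrapped cluster (used in this guide)
- kops, which installs a Kubernetes cluster on AWS
- Kubespray, which deploys Kubernetes on GCE (Google Cloud), Azure (Microsoft Azure), OpenStack (private cloud), AWS (Amazon Web Services), vSphere (VMware vSphere), Packet (bare-metal servers), and Oracle Cloud Infrastructure (experimental)

This guide uses kubeadm to install the cluster directly in our own data center.

1. Configure the yum repository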

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2. Install kubeadm, kubelet, and kubectl (on every node, including workers; kubectl is optional on workers)

List the available versions, then install the 1.21.0 packages:

yum --disablerepo="*" --enablerepo="kubernetes" list available --showduplicates

yum install --enablerepo="kubernetes" kubelet-1.21.0-0.x86_64 kubeadm-1.21.0-0.x86_64 kubectl-1.21.0-0.x86_64 -y

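3. Enable kubelet to start at boot

systemctl enable kubelet && systemctl start kubelet

Until kubeadm init (or kubeadm join on a worker) has run, kubelet restarts every few seconds because it has no configuration yet; this is expected.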

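4. Install bash-completion

yum install bash-completion

Enable completion in the current shell:

source <(kubectl completion bash)

source <(kubeadm completion bash)

To enable it in every new shell, add the same two lines to ~/.bashrc:

vim ~/.bashrc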

source <(kubectl completion bash)

source <(kubeadm completion bash)
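Editing ~/.bashrc by hand adds the lines again each time the step is repeated. A sketch of an idempotent append; `append_once` is a hypothetical name:

```shell
# Hypothetical helper: append LINE to FILE only if FILE does not already
# contain exactly that line, so the setup can be re-run without duplicates.
append_once() {
  local file line
  file=$1
  line=$2
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), --: end of options
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: `append_once ~/.bashrc 'source <(kubectl completion bash)'` and `append_once ~/.bashrc 'source <(kubeadm completion bash)'`.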

5. Initialize the cluster (master node only)

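kubeadm init --apiserver-advertise-address=192.168.80.130 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.0 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.2.0.0/16 --ignore-preflight-errors=all --upload-certs

| Option (example value) | Meaning |
| --- | --- |
| --kubernetes-version=1.21.2 | Kubernetes version to initialize; the default is stable-1 |
| --apiserver-advertise-address=172.16.133.56 | IP address on which the master's API server advertises it is listening; on servers with several IPs, set it explicitly |
| --control-plane-endpoint=cluster-endpoint.microservice.for-best.cn | Used for a highly available control plane (multiple masters); covered in detail in a separate post |
| --service-cidr=10.1.0.0/16 | IP address range for Services |
| --pod-network-cidr=10.2.0.0/16 | IP address range for Pods |
| --service-dns-domain=microservice.for-best.cn | DNS domain for Services; the default is cluster.local, and enterprises often change it internally |
| --upload-certs | Uploads the control-plane certificates so that masters can share them directly when a multi-master cluster is built; without it, the certificate files must be copied by hand later |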

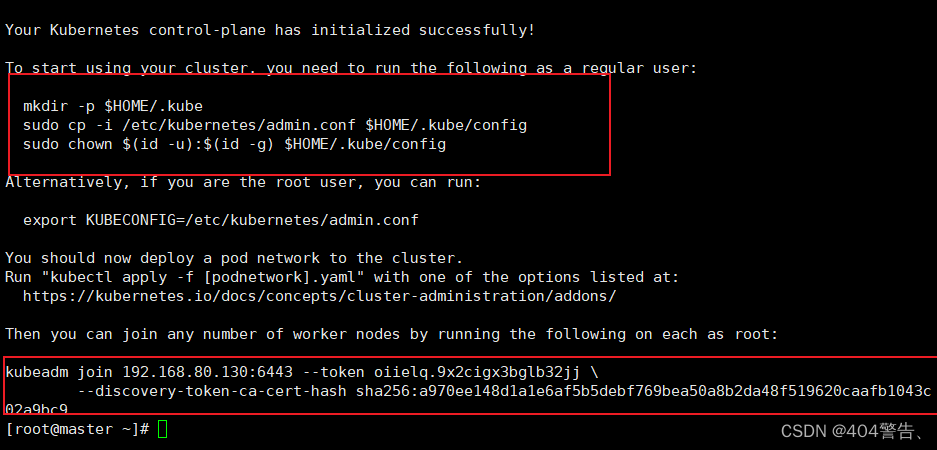

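The message "Your Kubernetes control-plane has initialized successfully!" indicates that the master has been initialized. At the end of the output, kubeadm also prints the commands for joining additional masters and for joining worker nodes.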
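Loading the IPVS modules in step 10 of section I does not by itself switch kube-proxy to IPVS; with the flags above, kube-proxy keeps its default iptables mode. A sketch of an equivalent kubeadm configuration file that also sets `mode: ipvs`, assuming kubeadm v1.21 (which reads the `kubeadm.k8s.io/v1beta2` API) and the same address and CIDRs as the command above; `write_kubeadm_config` is a hypothetical name:

```shell
# Sketch: write a kubeadm config equivalent to the `kubeadm init` flags above,
# plus a KubeProxyConfiguration that switches kube-proxy to IPVS mode.
# Assumes kubeadm v1.21 (kubeadm.k8s.io/v1beta2 API).
write_kubeadm_config() {
  local out=$1
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.80.130
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.1.0.0/16
  podSubnet: 10.2.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}
```

Usage: `write_kubeadm_config kubeadm-config.yaml && kubeadm init --config kubeadm-config.yaml --upload-certs`. On a cluster that is already initialized, the same setting lives in the `kube-proxy` ConfigMap in `kube-system` (key `config.conf`): set `mode: "ipvs"` there and delete the kube-proxy Pods so the DaemonSet recreates them; `ipvsadm -Ln` should then list virtual servers.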

6. Configure kubectl access on the master node

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

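7. Join the workers to the cluster as nodes that run Pods

On each worker that has completed the installation steps above, run the join command printed at the end of the master's initialization output:

kubeadm join 192.168.80.130:6443 --token oiielq.9x2cigx3bglb32jj \
    --discovery-token-ca-cert-hash sha256:a970ee148d1a1e6af5b5debf769bea50a8b2da48f519620caafb1043c02a9bc9

Note: install the network plugin first, and only then join the cluster.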

8. Install the Calico network add-on

# Download

curl https://projectcalico.docs.tigera.io/manifests/calico.yaml -O -k

vim calico.yaml

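Remove the leading # from the following two lines and set the value to the Pod network CIDR (the --pod-network-cidr passed to kubeadm init):

- name: CALICO_IPV4POOL_CIDR
  value: "10.2.0.0/16"

# Apply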

kubectl apply -f calico.yaml
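The manual edit of calico.yaml can also be scripted before applying it. A sketch assuming the downloaded manifest contains the two commented lines `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"` in exactly that form (check with `grep -n CALICO_IPV4POOL_CIDR calico.yaml` first, since manifests change between Calico releases); it requires GNU sed, and `set_calico_pool_cidr` is a hypothetical name:

```shell
# Hypothetical helper: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set
# its value to CIDR. Assumes the manifest contains these two commented lines:
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
# Requires GNU sed (-i and the { ...; n; ... } block syntax used here).
set_calico_pool_cidr() {
  local file=$1 cidr=$2
  # On the "name" line drop "# "; "n" then moves to the following "value"
  # line, where "# " is dropped too and the CIDR is substituted. Removing the
  # same two characters from both lines keeps their YAML indentation aligned.
  sed -i "/# - name: CALICO_IPV4POOL_CIDR/{s|# - name|- name|;n;s|#   value: \".*\"|  value: \"${cidr}\"|}" "$file"
}
```

Usage: run `set_calico_pool_cidr calico.yaml 10.2.0.0/16` before `kubectl apply -f calico.yaml`.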

9. Check the cluster from the master

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 40m v1.21.0

node1 NotReady <none> 34s v1.21.0

node2 NotReady <none> 31s v1.21.0

[root@master ~]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-685b65ddf9-9w6cw 1/1 Running 0 18m

kube-system calico-node-khpms 1/1 Running 0 17m

kube-system calico-node-p4t78 1/1 Running 0 18m

kube-system calico-node-qs7m4 1/1 Running 0 17m

kube-system coredns-545d6fc579-46vrn 1/1 Running 0 57m

kube-system coredns-545d6fc579-zknxv 1/1 Running 0 57m

kube-system etcd-master 1/1 Running 0 57m

kube-system kube-apiserver-master 1/1 Running 0 57m

kube-system kube-controller-manager-master 1/1 Running 0 57m

kube-system kube-proxy-4mjsq 1/1 Running 0 17m

kube-system kube-proxy-bgqrt 1/1 Running 0 57m

kube-system kube-proxy-r9z2j 1/1 Running 0 17m

kube-system kube-scheduler-master 1/1 Running 0 57m
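node1 and node2 show NotReady right after joining and normally turn Ready once their calico-node Pods are running. A sketch of a filter for `kubectl get nodes --no-headers` output that lists the nodes that are not Ready yet; `not_ready_nodes` is a hypothetical name:

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the name of every node whose STATUS column is not exactly "Ready"
# (so a cordoned "Ready,SchedulingDisabled" node is reported as well).
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes --no-headers | not_ready_nodes`. Alternatively, `kubectl wait --for=condition=Ready node --all --timeout=300s` blocks until every node is Ready.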

V. Install the Kuboard web UI

1. Create kuboard-pv-pvc.yaml

mkdir -p /home/yaml/kuboard

cd /home/yaml/kuboard

cat > kuboard-pv-pvc.yaml <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-kuboard1
spec:
  storageClassName: data-kuboard
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data-kuboard1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-kuboard2
spec:
  storageClassName: data-kuboard
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data-kuboard2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-kuboard3
spec:
  storageClassName: data-kuboard
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data-kuboard3
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: data-kuboard
provisioner: fuseim.pri/ifs

EOF
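The three PersistentVolumes differ only in their index, so they can also be generated with a loop instead of being copied by hand. A sketch that emits the same PVs (names, paths, size, access mode and StorageClass as above); `gen_kuboard_pvs` is a hypothetical name:

```shell
# Hypothetical helper: print COUNT hostPath PersistentVolumes named
# data-kuboard1..COUNT, identical to the hand-written ones in
# kuboard-pv-pvc.yaml (same size, access mode and StorageClass).
gen_kuboard_pvs() {
  local count=${1:-3} i=1
  while [ "$i" -le "$count" ]; do
    # YAML documents in one file are separated by "---".
    if [ "$i" -gt 1 ]; then
      echo '---'
    fi
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: data-kuboard${i}
spec:
  storageClassName: data-kuboard
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: /data-kuboard${i}
EOF
    i=$((i + 1))
  done
}
```

Usage: `gen_kuboard_pvs 3 > kuboard-pvs.yaml && kubectl apply -f kuboard-pvs.yaml`; the StorageClass still has to be created separately.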

2. Apply kuboard-pv-pvc.yaml, then download kuboard-v3.yaml, point it at this cluster, and apply it

kubectl apply -f kuboard-pv-pvc.yaml

curl -o kuboard-v3.yaml https://addons.kuboard.cn/kuboard/kuboard-v3.yaml

sed -i "s#KUBOARD_ENDPOINT.*#KUBOARD_ENDPOINT: 'http://192.168.80.130:30080'#g" kuboard-v3.yaml

sed -i 's#storageClassName.*#storageClassName: data-kuboard#g' kuboard-v3.yaml

kubectl apply -f kuboard-v3.yaml

3. Access Kuboard

http://192.168.80.130:30080

4. Import the cluster with kuboard-agent

curl -k 'http://192.168.80.130:30080/kuboard-api/cluster/default/kind/KubernetesCluster/default/resource/installAgentToKubernetes?token=rgwDI52lcI9ymuNxgoVZyoJJRV66EJdS' > kuboard-agent.yaml

kubectl apply -f ./kuboard-agent.yaml