Step 1: Create the directories for the containers

```shell
mkdir -p /my_elk/es1/data /my_elk/es2/data /my_elk/es3/data
mkdir -p /my_elk/kibana/conf
mkdir -p /my_elk/logstash/config
mkdir -p /my_elk/plugins/ik
```

Step 2: Create the configuration files

kibana.yml

Create it in the /my_elk/kibana/conf directory:

```yaml
server:
  host: "0.0.0.0"
  shutdownTimeout: "5s"
elasticsearch:
  hosts: ["http://es1:9200","http://es2:9200","http://es3:9200"]
  # Elasticsearch username and password
  username: "elastic"
  password: "elastic"
monitoring:
  ui:
    container:
      elasticsearch:
        enabled: true
i18n:
  locale: "zh-CN"
```

logstash.yml

Create it in the /my_elk/logstash/config directory:

```yaml
http.host: "0.0.0.0"
xpack.monitoring.enabled: true
# Elasticsearch addresses
xpack.monitoring.elasticsearch.hosts: ["http://es1:9200", "http://es2:9200", "http://es3:9200"]
# Elasticsearch X-Pack username and password
xpack.monitoring.elasticsearch.username: "elastic"
xpack.monitoring.elasticsearch.password: "elastic"
# same directory that es.yml mounts logstash.conf into
path.config: /etc/logstash/conf.d/*.conf
path.logs: /usr/share/logstash/logs
```

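logstash.conf

This is a default placeholder pipeline that reads events from stdin and prints them to stdout. Create it in /my_elk/logstash/config as well, since es.yml mounts ./logstash/config/logstash.conf: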

```
input {
  stdin {}
}

filter {
  # Add filter plugin configuration here if needed
}

output {
  stdout {}
}
```

Step 3: Download the IK analyzer into the plugins directory

Manual download

Extract the downloaded elasticsearch-analysis-ik-7.14.0.zip on your physical machine, then upload the extracted files and directories to the /my_elk/plugins/ik folder.

Online download

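```shell
cd /my_elk/plugins
wget https://release.infinilabs.com/analysis-ik/stable/elasticsearch-analysis-ik-7.14.0.zip
unzip elasticsearch-analysis-ik-7.14.0.zip -d ./ik
```

After extracting, delete elasticsearch-analysis-ik-7.14.0.zip. Elasticsearch treats every entry in the plugins directory as a plugin, so a leftover zip file there prevents the nodes from starting:

```shell
rm elasticsearch-analysis-ik-7.14.0.zip
```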
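A quick pre-flight check can catch a stray zip or an extraction that ended up one level too deep before the cluster is started. The Python sketch below assumes that each entry directly under /my_elk/plugins must be a directory containing plugin-descriptor.properties, which is what the unzipped IK package is expected to provide; `check_entries` and `find_plugin_problems` are hypothetical helper names, not part of any Elasticsearch tooling.

```python
from pathlib import Path

# Assumption: every plugin directory contains this descriptor file.
DESCRIPTOR = "plugin-descriptor.properties"


def check_entries(entries):
    """Return problems for (name, is_dir, has_descriptor) tuples."""
    problems = []
    for name, is_dir, has_descriptor in entries:
        if not is_dir:
            # e.g. a leftover elasticsearch-analysis-ik-7.14.0.zip
            problems.append(f"{name}: not a directory, remove it")
        elif not has_descriptor:
            # e.g. the zip was extracted one level too deep
            problems.append(f"{name}: missing {DESCRIPTOR}")
    return problems


def find_plugin_problems(plugins_dir):
    """Scan a real plugins directory such as /my_elk/plugins."""
    root = Path(plugins_dir)
    return check_entries(
        (p.name, p.is_dir(), (p / DESCRIPTOR).is_file())
        for p in sorted(root.iterdir())
    )


# Usage on the host:
# print(find_plugin_problems("/my_elk/plugins"))
```

An empty list means the layout matches the assumption above; a message ending in `not a directory, remove it` points at a loose file such as the archive.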

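Step 4: Grant permissions on the folders and files (this step is critical)

Without these permissions, the containers will almost always fail at startup with permission-denied errors.

Be sure to include -R; without it only the top-level directories change, and the files and subdirectories beneath them still cause errors.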

```shell
chmod -R 777 /my_elk/es1/data /my_elk/es2/data /my_elk/es3/data
chmod -R 777 /my_elk/kibana/conf
chmod -R 777 /my_elk/logstash/config
chmod -R 777 /my_elk/plugins/ik
```
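To see what a non-recursive `chmod` leaves behind, the Python sketch below walks a directory tree and lists every path that still lacks the required permission bits. The required mode of 0o777 mirrors the commands above; `missing_bits` and `paths_missing_bits` are hypothetical helper names used only for illustration.

```python
import os
import stat


def missing_bits(mode, required=0o777):
    """Return the permission bits from `required` that `mode` does not have."""
    return required & ~stat.S_IMODE(mode)


def paths_missing_bits(root, required=0o777):
    """Yield (path, missing_bits) for root and every file/dir below it.

    This roughly covers the paths `chmod -R` would change. Directories
    os.walk cannot enter are skipped silently, and symlinked directories
    are not followed.
    """
    for dirpath, _dirnames, filenames in os.walk(root):
        for path in [dirpath, *(os.path.join(dirpath, f) for f in filenames)]:
            missing = missing_bits(os.lstat(path).st_mode, required)
            if missing:
                yield path, missing


# Usage on the host:
# for path, missing in paths_missing_bits("/my_elk/es1/data"):
#     print(path, oct(missing))
```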

Step 5: Write the docker-compose file es.yml (in /my_elk, since its volume paths are relative to that directory)

```yaml
services:
  es1:
    restart: always
    image: elasticsearch:7.14.0
    container_name: es1
    environment:
      - node.name=es1
      - cluster.name=es-cluster
      - discovery.seed_hosts=es2,es3
      - cluster.initial_master_nodes=es1,es2,es3
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xmx512m -Xms512m"
      # the password is the same as the username (elastic)
      - ELASTIC_PASSWORD=elastic
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./es1/data:/usr/share/elasticsearch/data
      - ./plugins:/usr/share/elasticsearch/plugins
    ports:
      - 9200:9200
    networks:
      - elk_net

  es2:
    restart: always
    image: elasticsearch:7.14.0
    container_name: es2
    environment:
      - node.name=es2
      - cluster.name=es-cluster
      - discovery.seed_hosts=es1,es3
      - cluster.initial_master_nodes=es1,es2,es3
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xmx512m -Xms512m"
      - ELASTIC_PASSWORD=elastic
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./es2/data:/usr/share/elasticsearch/data
      - ./plugins:/usr/share/elasticsearch/plugins
    ports:
      - 9201:9200
    networks:
      - elk_net

  es3:
    restart: always
    image: elasticsearch:7.14.0
    container_name: es3
    environment:
      - node.name=es3
      - cluster.name=es-cluster
      - discovery.seed_hosts=es1,es2
      - cluster.initial_master_nodes=es1,es2,es3
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xmx512m -Xms512m"
      - ELASTIC_PASSWORD=elastic
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - ./es3/data:/usr/share/elasticsearch/data
      - ./plugins:/usr/share/elasticsearch/plugins
    ports:
      - 9202:9200
    networks:
      - elk_net

  kibana:
    restart: always
    image: kibana:7.14.0
    container_name: kibana
    ports:
      - 5601:5601
    volumes:
      - ./kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml
    # environment:
    #   ELASTICSEARCH_HOSTS: http://es1:9200,http://es2:9200,http://es3:9200
    networks:
      - elk_net

  cerebro:
    restart: always
    image: lmenezes/cerebro:0.9.4
    container_name: cerebro
    ports:
      - "9800:9000"
    command:
      - -Dhosts.0.host=http://es1:9200
      - -Dhosts.1.host=http://es2:9200
      - -Dhosts.2.host=http://es3:9200
    networks:
      - elk_net

  logstash:
    image: logstash:7.14.0
    container_name: logstash
    restart: always
    environment:
      - LS_JAVA_OPTS=-Xmx512m -Xms512m
    volumes:
      - ./logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml
      - ./logstash/config/logstash.conf:/etc/logstash/conf.d/logstash.conf
    networks:
      - elk_net
    depends_on:
      - es1
      - es2
      - es3
    # Uncomment to run this pipeline file explicitly
    # entrypoint:
    #   - logstash
    #   - -f
    #   - /etc/logstash/conf.d/logstash.conf
    logging:
      driver: "json-file"
      options:
        max-size: "200m"
        max-file: "3"
    ports:
      - 9600:9600

networks:
  elk_net:
    # created beforehand with `docker network create elk_net`, so no driver is set here
    external: true
```
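The three Elasticsearch services follow one pattern: each node lists the other two in discovery.seed_hosts, all three appear in cluster.initial_master_nodes, and node i publishes host port 9200 + i. The Python sketch below derives those per-node values from a list of names, which can help when adding or renaming nodes. `es_node_settings` is a hypothetical helper; it returns plain dicts covering only the per-node fields, not a complete compose file.

```python
def es_node_settings(names, base_port=9200):
    """Return {name: settings} mirroring the per-node values in es.yml.

    Shared fields (memlock, ES_JAVA_OPTS, ELASTIC_PASSWORD, networks)
    are intentionally left out.
    """
    nodes = {}
    for i, name in enumerate(names):
        others = [n for n in names if n != name]
        nodes[name] = {
            "environment": [
                f"node.name={name}",
                "cluster.name=es-cluster",
                # seed hosts: every node except this one
                f"discovery.seed_hosts={','.join(others)}",
                # all nodes take part in the first master election
                f"cluster.initial_master_nodes={','.join(names)}",
            ],
            "volumes": [
                f"./{name}/data:/usr/share/elasticsearch/data",
                "./plugins:/usr/share/elasticsearch/plugins",
            ],
            # host port -> container port 9200
            "ports": [f"{base_port + i}:9200"],
        }
    return nodes


# Usage:
# import json
# print(json.dumps(es_node_settings(["es1", "es2", "es3"]), indent=2))
```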

Step 6: Adjust a kernel setting to avoid Elasticsearch startup errors

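```shell
vim /etc/sysctl.conf
```

Append the following line at the end of the file (Elasticsearch requires vm.max_map_count to be at least 262144):

```
vm.max_map_count = 262144
```

Then reload the settings:

```shell
sysctl -p
```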
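To confirm the new value took effect, the Python sketch below reads /proc/sys/vm/max_map_count and compares it with 262144. Containers share the host kernel, so the host value is the one Elasticsearch sees. This is a minimal, Linux-only sketch; `max_map_count_ok` and `read_max_map_count` are hypothetical helper names.

```python
from pathlib import Path

# Minimum value Elasticsearch asks for (matches the sysctl.conf line above).
REQUIRED_MAX_MAP_COUNT = 262144


def max_map_count_ok(value, required=REQUIRED_MAX_MAP_COUNT):
    """True if a vm.max_map_count value (int or text from /proc) is high enough."""
    return int(str(value).strip()) >= required


def read_max_map_count(path="/proc/sys/vm/max_map_count"):
    """Read the current kernel value on a Linux host."""
    return int(Path(path).read_text().strip())


# Usage on the Docker host (Linux only):
# value = read_max_map_count()
# print(value, max_map_count_ok(value))
```

A result of 65530, the usual Linux default, means `sysctl -p` has not been applied yet.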

Step 7: Start the containers

```shell
docker network create elk_net
cd /my_elk
docker-compose -f es.yml up -d
```

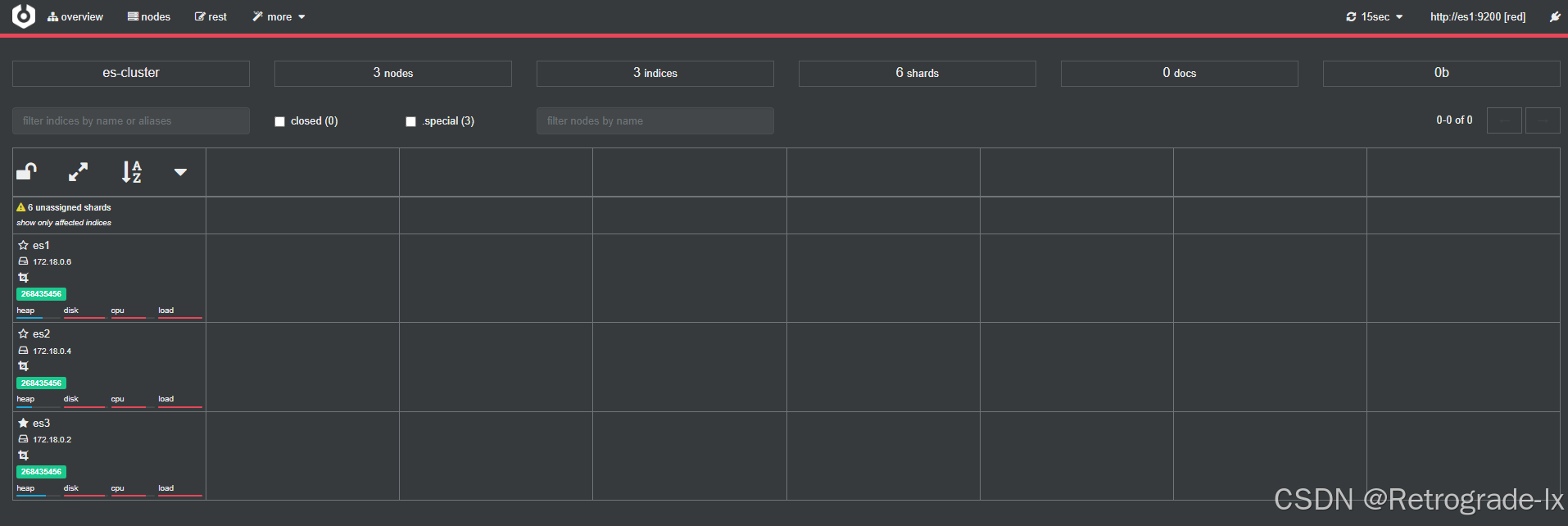

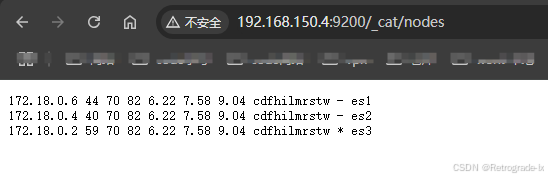

Check cerebro, which es.yml publishes on host port 9800

Check the status of the ES cluster

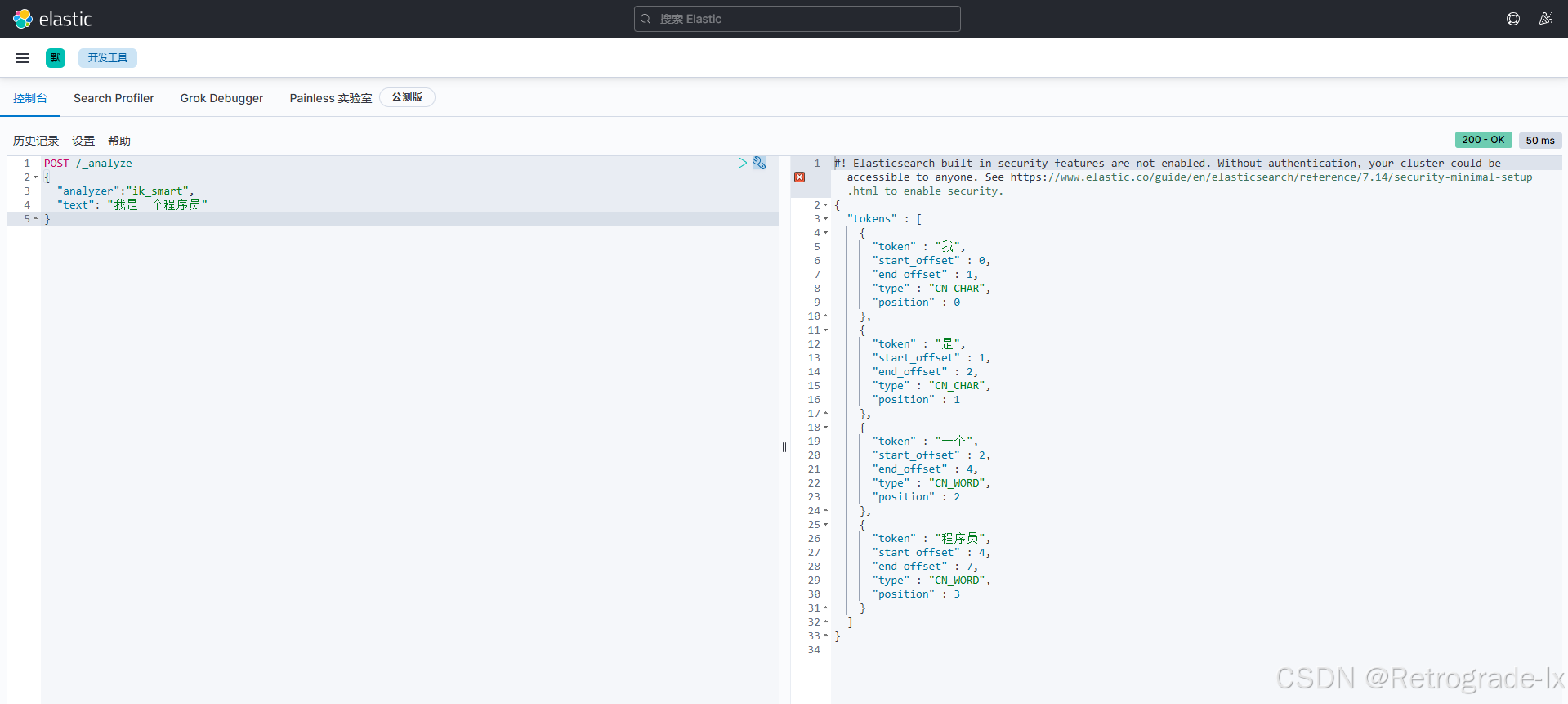
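The cluster state that cerebro displays can also be read from the `_cluster/health` API, whose response includes `status` (green, yellow or red) and `number_of_nodes`. The Python sketch below uses only the standard library and treats the cluster as ready when the status is green and all three nodes have joined. The URL assumes you run it on the Docker host against es1's published port 9200; `fetch_json` and `cluster_ready` are hypothetical helper names.

```python
import json
from urllib.request import urlopen


def fetch_json(url, timeout=5):
    """GET `url` and decode the JSON body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def cluster_ready(health, expected_nodes=3):
    """True if a _cluster/health response is green with all nodes joined."""
    # green: all primary and replica shards are assigned
    return (
        health.get("status") == "green"
        and health.get("number_of_nodes") == expected_nodes
    )


# Usage on the Docker host (requires the cluster to be running):
# print(cluster_ready(fetch_json("http://localhost:9200/_cluster/health")))
```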

Check that Kibana and the IK analyzer were installed successfully

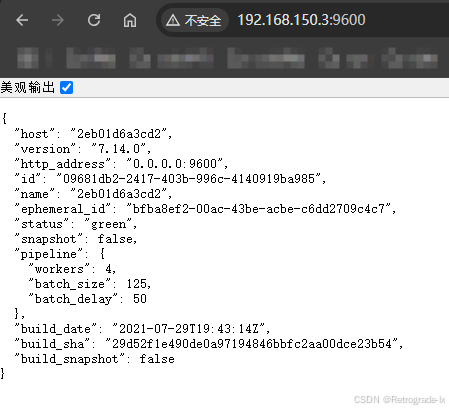
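The IK plugin registers the `ik_smart` and `ik_max_word` analyzers, so one way to confirm it loaded is to send text to the `_analyze` API (in Kibana Dev Tools this is `POST _analyze` with an `analyzer` and a `text` field). The Python sketch below does the same over HTTP from the host; the URL and the helper names `analyze` and `token_list` are assumptions. If the plugin did not load, Elasticsearch answers with an HTTP error instead of a token list.

```python
import json
from urllib.request import Request, urlopen


def analyze(text, analyzer="ik_smart", es_url="http://localhost:9200"):
    """POST text to the _analyze API and return the decoded JSON response."""
    body = json.dumps({"analyzer": analyzer, "text": text}).encode("utf-8")
    req = Request(
        f"{es_url}/_analyze",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Raises urllib.error.HTTPError if the analyzer is unknown (plugin not loaded)
    with urlopen(req, timeout=5) as resp:
        return json.load(resp)


def token_list(response):
    """Extract the token strings from an _analyze response."""
    return [t["token"] for t in response.get("tokens", [])]


# Usage on the Docker host (assumes es1 is published on port 9200):
# print(token_list(analyze("我是中国人")))
# print(token_list(analyze("我是中国人", analyzer="ik_max_word")))
```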

Check that Logstash was installed successfully

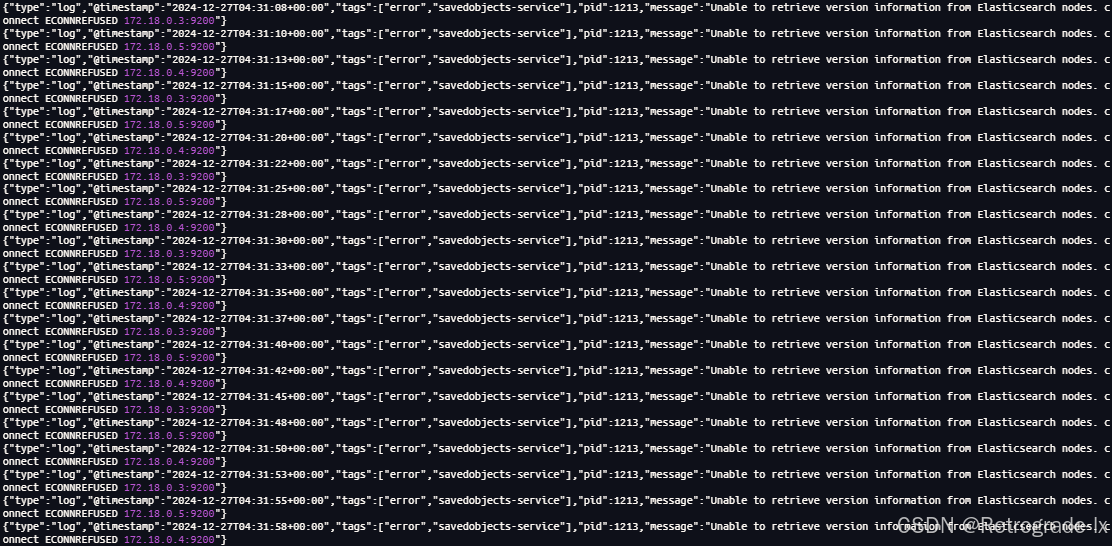
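Logstash serves a monitoring API on port 9600 (published in es.yml and bound to all interfaces by `http.host: "0.0.0.0"` in logstash.yml), and its root endpoint returns node information that includes a `version` field. The Python sketch below checks that field against 7.14.0; the URL assumes you run it on the Docker host, and `fetch_json` and `logstash_version_ok` are hypothetical helper names.

```python
import json
from urllib.request import urlopen


def fetch_json(url, timeout=5):
    """GET `url` and decode the JSON body."""
    with urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def logstash_version_ok(node_info, expected="7.14.0"):
    """True if the Logstash node-info response reports the expected version."""
    return node_info.get("version") == expected


# Usage on the Docker host (assumes port 9600 is published as in es.yml):
# print(logstash_version_ok(fetch_json("http://localhost:9600/")))
```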

Finally, if the Kibana logs show the following error

try restarting Kibana; the error means Kibana could not connect to Elasticsearch inside Docker.
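Since this error means Kibana could not reach Elasticsearch, one option is to wait until Elasticsearch answers before restarting Kibana. The Python sketch below polls a URL and then runs `docker restart kibana`. The attempt count, the 5-second delay, and checking es1's published port on the host are assumptions; a response on the host only shows that es1 is up, not that Kibana can reach it over elk_net. `es_reachable`, `wait_until` and `restart_container` are hypothetical helper names.

```python
import subprocess
import time
from urllib.error import HTTPError
from urllib.request import urlopen


def es_reachable(url="http://localhost:9200", timeout=3):
    """True if something answers HTTP at `url` (any status code counts)."""
    try:
        with urlopen(url, timeout=timeout):
            return True
    except HTTPError:
        # The server answered, just not with a 2xx status
        return True
    except OSError:
        # Connection refused, DNS failure, timeout, ...
        return False


def wait_until(check, attempts=30, delay=5.0, sleep=time.sleep):
    """Call check() up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(attempts):
        if check():
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False


def restart_container(name="kibana"):
    """Equivalent to running `docker restart <name>` in a shell."""
    subprocess.run(["docker", "restart", name], check=True)


# Usage on the Docker host:
# if wait_until(es_reachable):
#     restart_container("kibana")
```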

In the next article, I will walk through a case of using Logstash to sync 1 million MySQL rows into ES.