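Code flow:

The front end opens the camera, takes a photo, and stores the image in the database. The back end reads that captured image from the database together with the portrait saved at registration, then calls the ArcSoft API to compare the two. The similarity score is returned to the front end, which checks whether it is greater than 0.8 (the threshold is adjustable): if so, the comparison succeeds; otherwise it fails.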
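The final decision in this flow is a single threshold comparison. A minimal sketch of that check follows; the class name `FaceMatchDecision`, the method `isMatch`, and the NaN handling are illustrative assumptions, not part of the ArcSoft SDK or the project code.

```java
// Hypothetical helper: decides whether a similarity score counts as a match.
final class FaceMatchDecision {

    // Default threshold taken from the flow described above (adjustable).
    static final float DEFAULT_THRESHOLD = 0.8f;

    // Returns true only when the score is strictly greater than the threshold.
    static boolean isMatch(float score, float threshold) {
        if (Float.isNaN(score)) {
            return false; // treat an undefined score as "no match"
        }
        return score > threshold;
    }

    public static void main(String[] args) {
        System.out.println(isMatch(0.85f, DEFAULT_THRESHOLD));
        System.out.println(isMatch(0.42f, DEFAULT_THRESHOLD));
    }
}
```

The article performs this check in the browser; the same comparison could also run on the server so that the client only receives a yes/no result.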

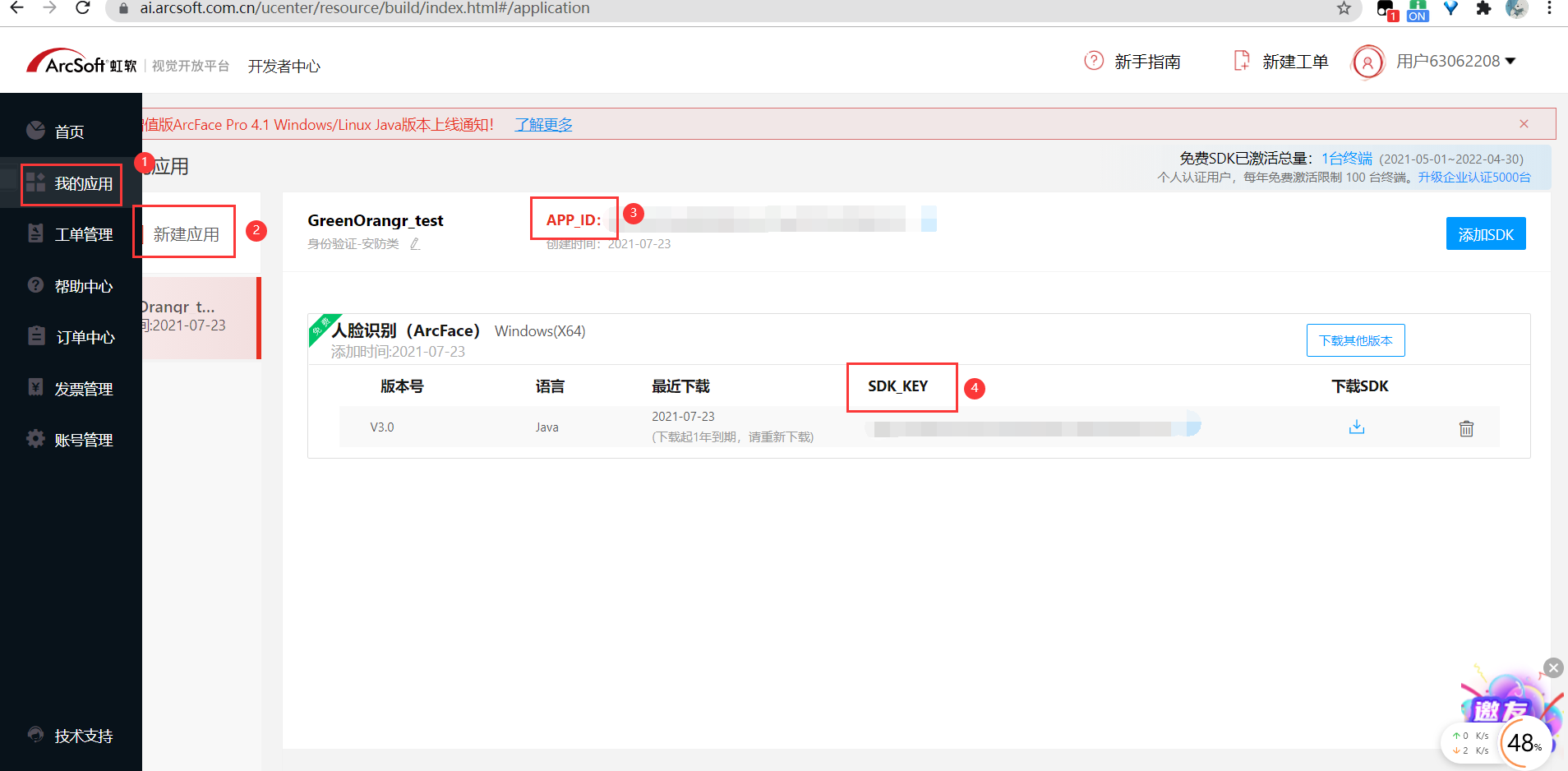

1. Register an ArcSoft account, create an application, and find its APP_ID and SDK_KEY

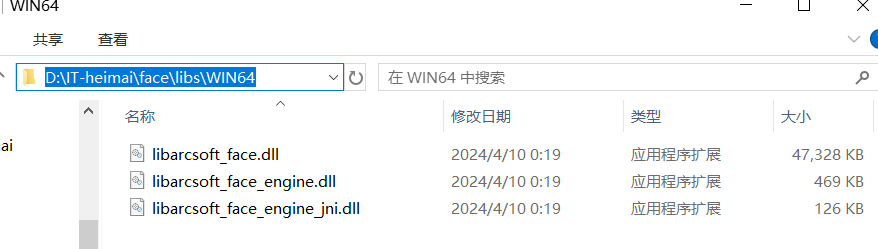

2. Download the SDK, find the directory shown below, and copy its path into the environment variables

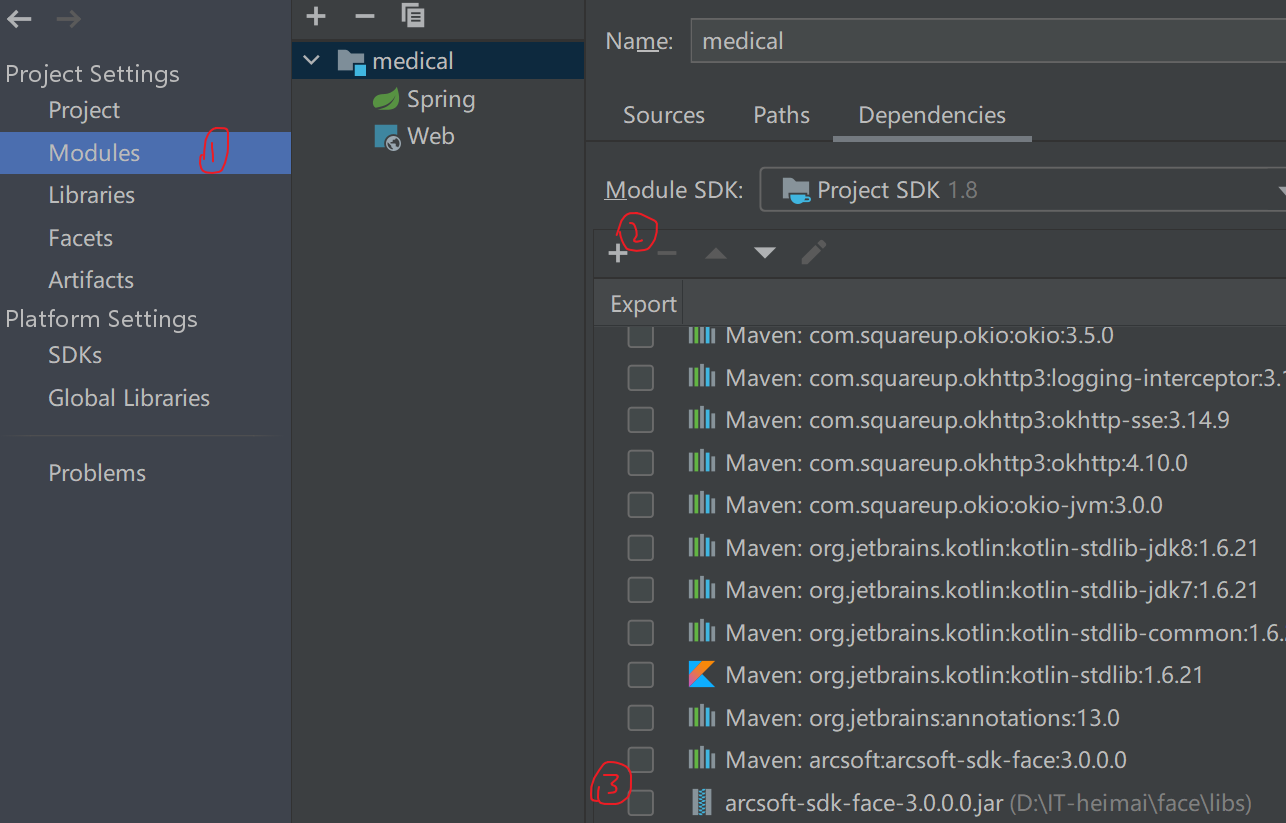

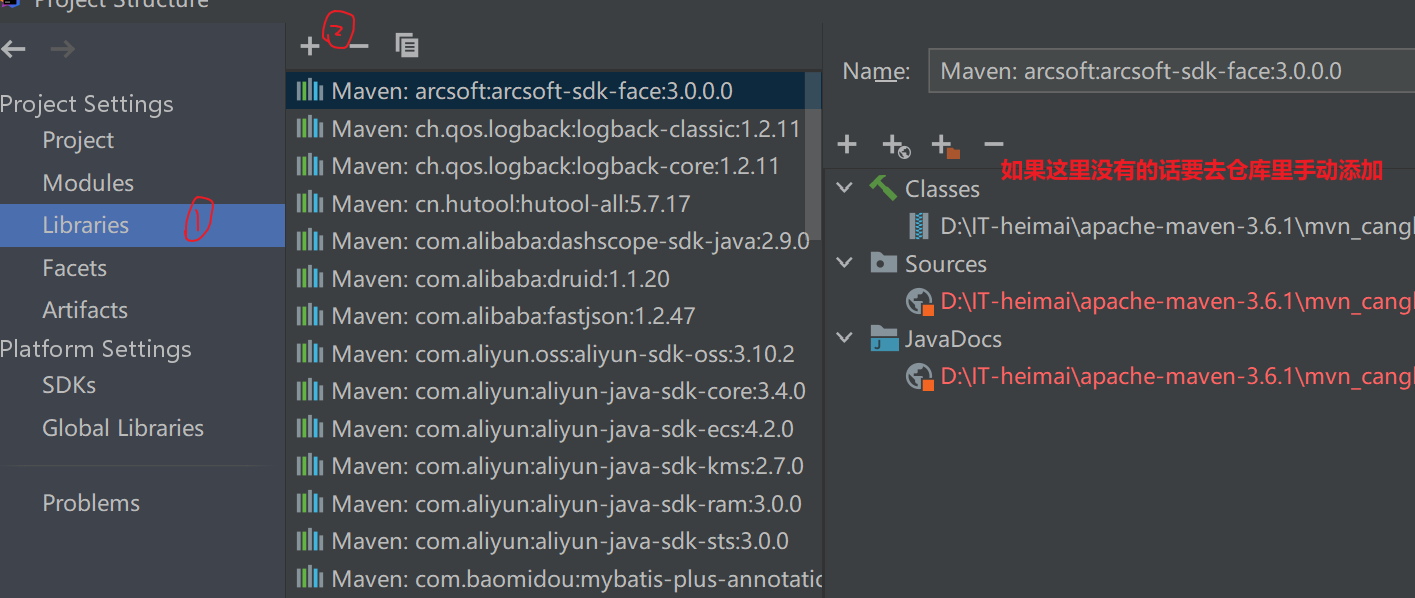

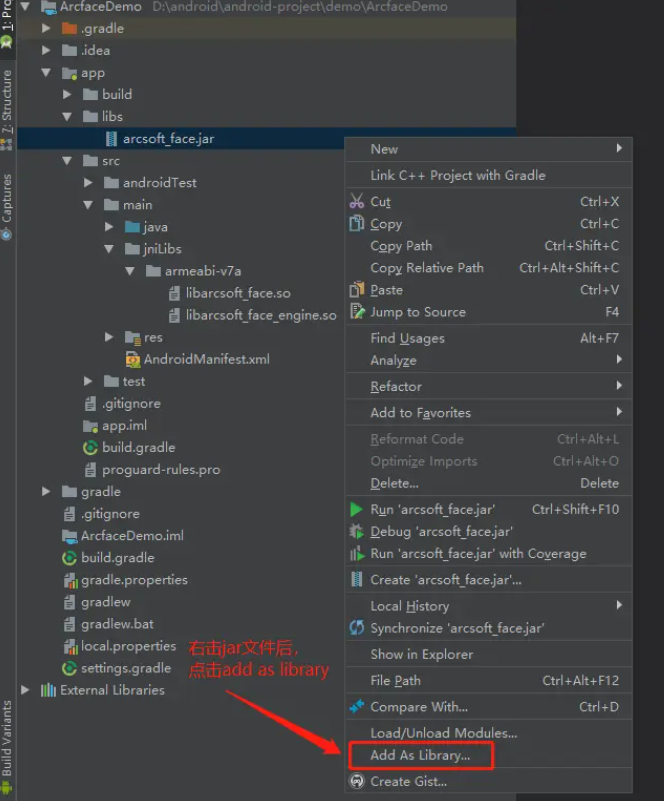
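To double-check that the SDK directory really ended up in the environment variable, a small check like the following can help. This is a sketch under assumptions: it assumes the variable being edited is `PATH` (the screenshots, not the text, identify the variable), and `SdkPathCheck` and `containsDir` are hypothetical names.

```java
import java.io.File;
import java.util.Arrays;
import java.util.regex.Pattern;

// Hypothetical helper: checks whether a directory appears in a PATH-style list.
final class SdkPathCheck {

    // Splits pathList on the separator and compares each entry with dir,
    // ignoring surrounding whitespace and trailing slashes.
    static boolean containsDir(String pathList, String dir, String separator) {
        if (pathList == null || dir == null) {
            return false;
        }
        String wanted = normalize(dir);
        return Arrays.stream(pathList.split(Pattern.quote(separator)))
                .map(SdkPathCheck::normalize)
                .anyMatch(wanted::equals);
    }

    private static String normalize(String path) {
        String trimmed = path.trim();
        while (trimmed.length() > 1
                && (trimmed.endsWith("/") || trimmed.endsWith("\\"))) {
            trimmed = trimmed.substring(0, trimmed.length() - 1);
        }
        return trimmed;
    }

    public static void main(String[] args) {
        // Pass the SDK library directory as the first argument.
        String sdkDir = args.length > 0 ? args[0] : "";
        boolean found = containsDir(System.getenv("PATH"), sdkDir, File.pathSeparator);
        System.out.println("SDK directory on PATH: " + found);
    }
}
```

Environment variable changes are only visible to processes started afterwards, so the IDE or terminal usually needs a restart before the check reports the new entry.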

3. Add the JAR to the project structure

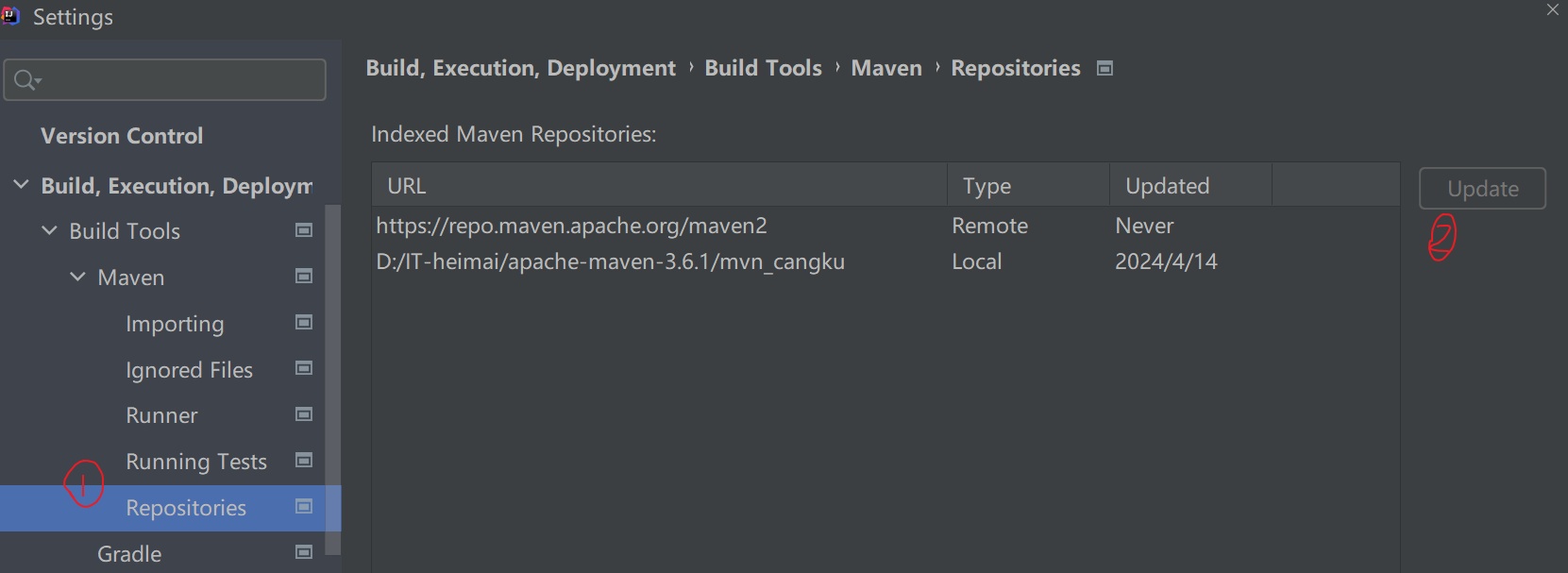

Note: if it still does not work, try updating the repository.

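4. Add the ArcSoft face SDK dependency to the pom

```xml
<dependency>
    <groupId>arcsoft</groupId>
    <artifactId>arcsoft-sdk-face</artifactId>
    <version>3.0.0.0</version>
</dependency>
```

If the dependency is still highlighted in red, try the following: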

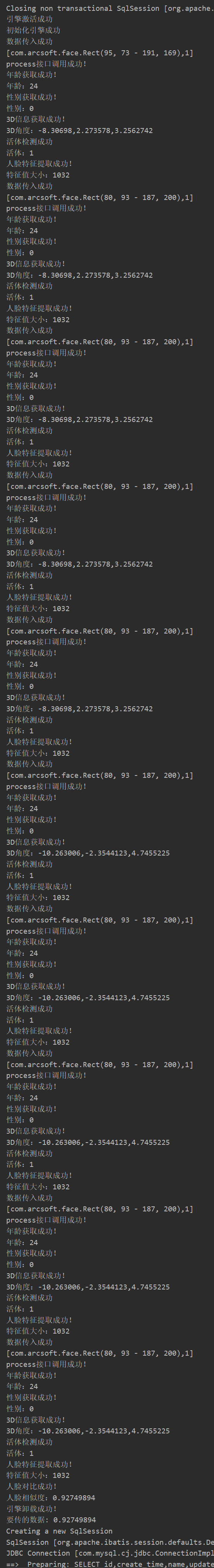
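Once the dependency resolves, one quick way to confirm that the SDK classes are actually visible to the application is to look one up by name. The sketch below uses only the standard `Class.forName`; the class name `com.arcsoft.face.FaceEngine` is the one imported by the service code later in this article, while `ArcsoftClasspathCheck` and `isOnClasspath` are hypothetical names.

```java
// Hypothetical helper: reports whether a class can be found on the classpath.
final class ArcsoftClasspathCheck {

    // Looks the class up without initializing it, so no static
    // initializers (such as native library loading) are triggered.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false,
                    ArcsoftClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("FaceEngine on classpath: "
                + isOnClasspath("com.arcsoft.face.FaceEngine"));
    }
}
```

A `false` result here points at the JAR or pom setup rather than at the native libraries from step 2.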

5. Now the code can be written, starting with the controller layer

```java
import com.baomidou.mybatisplus.core.conditions.query.LambdaQueryWrapper;
import com.medical.dao.UserDao;
import com.medical.entity.User;
import com.medical.service.FaceService;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

/**
 * @author HY
 * @date 2024/4/14 19:12
 */
@RestController
@RequestMapping("/face")
public class FaceController {

    @Autowired
    private FaceService faceService;

    @Autowired
    private UserDao userDao;

    @PostMapping("/photo")
    public float photo() {
        // Look up the account whose two stored photos will be compared
        LambdaQueryWrapper<User> queryWrapper = new LambdaQueryWrapper<>();
        queryWrapper.eq(User::getUserAccount, "admin");
        User user = userDao.selectOne(queryWrapper);
        if (user == null) {
            // No such account: return the lowest score instead of failing with a NullPointerException
            return 0f;
        }
        // Compare the two image URLs stored on the user record
        float facegoal = faceService.engineTest(user.getImgPath(), user.getAdminPath());
        System.out.println("Score to return: " + facegoal);
        return facegoal;
    }
}
```

6. Service-layer code
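The service reads its credentials from the placeholders `${arcface.appId}` and `${arcface.sdkKey}`, so both keys have to exist in the application's configuration before the engine can be activated. The sketch below checks a properties text for the two keys; it assumes they are kept in a properties-style file such as `application.properties`, and `ArcfaceConfigCheck` and its methods are hypothetical names.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper: verifies that both ArcSoft credentials are configured.
final class ArcfaceConfigCheck {

    // Parses properties-format text (key=value lines).
    static Properties parse(String text) {
        Properties properties = new Properties();
        try {
            properties.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return properties;
    }

    // True when both keys used by the service are present and non-blank.
    static boolean hasCredentials(Properties properties) {
        return notBlank(properties.getProperty("arcface.appId"))
                && notBlank(properties.getProperty("arcface.sdkKey"));
    }

    private static boolean notBlank(String value) {
        return value != null && !value.trim().isEmpty();
    }

    public static void main(String[] args) {
        // Placeholder values; use the APP_ID and SDK_KEY from step 1.
        String sample = "arcface.appId=your-app-id\narcface.sdkKey=your-sdk-key\n";
        System.out.println("Credentials present: " + hasCredentials(parse(sample)));
    }
}
```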

```java
import com.arcsoft.face.*;
import com.arcsoft.face.enums.DetectMode;
import com.arcsoft.face.enums.DetectOrient;
import com.arcsoft.face.enums.ErrorInfo;
import com.arcsoft.face.toolkit.ImageInfo;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.stereotype.Service;

import javax.imageio.ImageIO;
import java.awt.image.BufferedImage;
import java.io.IOException;
import java.net.URL;
import java.util.ArrayList;
import java.util.List;

import static com.arcsoft.face.toolkit.ImageFactory.bufferedImage2ImageInfo;

/**
 * @program: medical
 * @ClassName: FaceService
 * @description: Face recognition
 * @author: HY
 * @create: 2024-04-10 19:39
 */
@Service
public class FaceService {

    @Value("${arcface.appId}")
    private String appId1;

    @Value("${arcface.sdkKey}")
    private String sdkKey1;

    public float engineTest(String url1, String url2) {
        String appId = appId1;
        String sdkKey = sdkKey1;
        FaceEngine faceEngine = new FaceEngine();
        int errorCode = faceEngine.activeOnline(appId, sdkKey);
        if (errorCode != ErrorInfo.MOK.getValue() &&
                errorCode != ErrorInfo.MERR_ASF_ALREADY_ACTIVATED.getValue()) {
            System.out.println("Engine activation failed: " + errorCode);
        } else {
            System.out.println("Engine activated");
        }

        // Engine configuration
        EngineConfiguration engineConfiguration = new EngineConfiguration();
        // Single-image, high-accuracy detection mode
        engineConfiguration.setDetectMode(DetectMode.ASF_DETECT_MODE_IMAGE);
        // Faces are not rotated: 0 degrees only
        engineConfiguration.setDetectFaceOrientPriority(DetectOrient.ASF_OP_0_ONLY);
        // Minimum detectable face scale = image long side / face box long side
        engineConfiguration.setDetectFaceScaleVal(16);
        // Maximum number of faces to detect
        engineConfiguration.setDetectFaceMaxNum(10);

        // Function configuration
        final FunctionConfiguration functionConfiguration = new FunctionConfiguration();
        // Age detection
        functionConfiguration.setSupportAge(true);
        // Face detection
        functionConfiguration.setSupportFaceDetect(true);
        // Face recognition
        functionConfiguration.setSupportFaceRecognition(true);
        // Gender detection
        functionConfiguration.setSupportGender(true);
        // 3D angle detection
        functionConfiguration.setSupportFace3dAngle(true);
        // RGB liveness detection enabled
        functionConfiguration.setSupportLiveness(true);
        // IR liveness detection disabled
        functionConfiguration.setSupportIRLiveness(false);
        // To guard against paper or screen attacks, enable supportLiveness and
        // supportIRLiveness (RGB and IR liveness detection)

        // Attach the function settings to the engine configuration
        engineConfiguration.setFunctionConfiguration(functionConfiguration);
        int errorCode2 = faceEngine.init(engineConfiguration);
        if (errorCode2 != ErrorInfo.MOK.getValue()) {
            System.out.println("Engine initialization failed");
        } else {
            System.out.println("Engine initialized");
        }

        FaceFeature faceFeature1 = getFaceFeature(url1, faceEngine);
        FaceFeature faceFeature2 = getFaceFeature(url2, faceEngine);

        // Face similarity
        FaceSimilar faceSimilar = new FaceSimilar();
        int errorCode5 = faceEngine.compareFaceFeature(faceFeature1, faceFeature2, faceSimilar);
        if (errorCode5 != ErrorInfo.MOK.getValue()) {
            System.out.println("Face comparison failed!");
        } else {
            System.out.println("Face comparison succeeded!");
            System.out.println("Face similarity: " + faceSimilar.getScore());
        }

        int errorCode11 = faceEngine.unInit();
        if (errorCode11 != ErrorInfo.MOK.getValue()) {
            // Fixed: print the unInit error code, not the activation code
            System.out.println("Engine uninit failed, error code: " + errorCode11);
        } else {
            System.out.println("Engine uninitialized!");
        }
        return faceSimilar.getScore();
    }

    /**
     * @param imgPath    URL of the image
     * @param faceEngine the initialized engine
     * @return faceFeature the extracted face feature
     */
    public FaceFeature getFaceFeature(String imgPath, FaceEngine faceEngine) {
        BufferedImage image = loadImage(imgPath);
        // bufferedImage2ImageInfo is the method to use for an image loaded from a network URL
        // (found by reading the SDK source); internally it calls several java.awt methods
        // to obtain the image data
        ImageInfo imageInfo = bufferedImage2ImageInfo(image);
        // Detected faces are stored in this list
        List<FaceInfo> faceInfoList = new ArrayList<>();
        // Pass the image data to the engine
        int errorCode3 = faceEngine.detectFaces(imageInfo.getImageData(), imageInfo.getWidth(), imageInfo.getHeight(),
                imageInfo.getImageFormat(), faceInfoList);
        if (errorCode3 != ErrorInfo.MOK.getValue()) {
            System.out.println("Face detection failed");
        } else {
            System.out.println("Face detection succeeded");
            System.out.println(faceInfoList);
        }

        // Attribute extraction: each attribute requires its function to be enabled
        FunctionConfiguration functionConfiguration = new FunctionConfiguration();
        functionConfiguration.setSupportAge(true);
        functionConfiguration.setSupportFace3dAngle(true);
        functionConfiguration.setSupportGender(true);
        functionConfiguration.setSupportLiveness(true);
        // process() must be called before reading the attributes
        // (the original called it twice in a row; one call is enough)
        int errorCode6 = faceEngine.process(imageInfo.getImageData(), imageInfo.getWidth(),
                imageInfo.getHeight(), imageInfo.getImageFormat(), faceInfoList, functionConfiguration);
        if (errorCode6 != ErrorInfo.MOK.getValue()) {
            System.out.println("process call failed, error code: " + errorCode6);
        } else {
            System.out.println("process call succeeded!");
        }

        // Age detection
        List<AgeInfo> ageInfoList = new ArrayList<>();
        int errorCode7 = faceEngine.getAge(ageInfoList);
        if (errorCode7 != ErrorInfo.MOK.getValue()) {
            System.out.println("Failed to get age, error code: " + errorCode7);
        } else {
            System.out.println("Age retrieved!");
            // May fail if the image does not contain a person
            System.out.println("Age: " + ageInfoList.get(0).getAge());
        }

        // Gender detection
        List<GenderInfo> genderInfoList = new ArrayList<>();
        int errorCode8 = faceEngine.getGender(genderInfoList);
        if (errorCode8 != ErrorInfo.MOK.getValue()) {
            System.out.println("Failed to get gender, error code: " + errorCode8);
        } else {
            System.out.println("Gender retrieved!");
            System.out.println("Gender: " + genderInfoList.get(0).getGender());
        }

        // 3D angle detection
        List<Face3DAngle> face3DAngleList = new ArrayList<>();
        int errorCode9 = faceEngine.getFace3DAngle(face3DAngleList);
        if (errorCode9 != ErrorInfo.MOK.getValue()) {
            System.out.println("3D angle detection failed, error code: " + errorCode9);
        } else {
            System.out.println("3D angle retrieved!");
            System.out.println("3D angle: " + face3DAngleList.get(0).getPitch() + "," +
                    face3DAngleList.get(0).getRoll() + "," + face3DAngleList.get(0).getYaw());
        }

        // Liveness detection
        List<LivenessInfo> livenessInfoList = new ArrayList<>();
        int errorCode10 = faceEngine.getLiveness(livenessInfoList);
        if (errorCode10 != ErrorInfo.MOK.getValue()) {
            System.out.println("Liveness detection failed, error code: " + errorCode10);
        } else {
            System.out.println("Liveness detection succeeded");
            System.out.println("Liveness: " + livenessInfoList.get(0).getLiveness());
        }

        // Extract the face feature
        FaceFeature faceFeature = new FaceFeature();
        int errorCode4 = faceEngine.extractFaceFeature(imageInfo.getImageData(),
                imageInfo.getWidth(), imageInfo.getHeight(), imageInfo.getImageFormat(),
                faceInfoList.get(0), faceFeature);
        if (errorCode4 != ErrorInfo.MOK.getValue()) {
            System.out.println("Face feature extraction failed!");
        } else {
            System.out.println("Face feature extracted!");
            System.out.println("Feature size: " + faceFeature.getFeatureData().length);
        }
        return faceFeature;
    }

    // Load a non-local image from a URL
    public static BufferedImage loadImage(String imgUrl) {
        try {
            URL url = new URL(imgUrl);
            return ImageIO.read(url);
        } catch (IOException e) {
            e.printStackTrace();
            return null;
        }
    }
}
```

7. Remember to write your own file upload and download feature
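The controller in this step delegates storage to the project's own `OssUtil`, which is not shown in the article. As a rough idea of what such a helper has to do, here is a minimal local-disk stand-in: it writes the uploaded bytes under a generated file name and returns the path. `LocalFaceStore` and `save` are hypothetical names, and a real deployment would upload to object storage and return a URL instead.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Locale;
import java.util.UUID;

// Hypothetical stand-in for an upload helper: stores bytes on local disk.
final class LocalFaceStore {

    // Saves content under dir using a random name that keeps the
    // original file extension (lower-cased), and returns the new path.
    static Path save(byte[] content, String originalName, Path dir) {
        String extension = "";
        int dot = originalName == null ? -1 : originalName.lastIndexOf('.');
        if (dot >= 0) {
            extension = originalName.substring(dot).toLowerCase(Locale.ROOT);
        }
        try {
            Files.createDirectories(dir);
            Path target = dir.resolve(UUID.randomUUID() + extension);
            return Files.write(target, content);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Path dir = Paths.get(System.getProperty("java.io.tmpdir"), "face-uploads");
        Path saved = save(new byte[] {1, 2, 3}, "image.png", dir);
        System.out.println("Saved to " + saved);
    }
}
```

Generating the file name on the server avoids collisions between captures and keeps client-supplied names out of the storage path.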

```java
import com.medical.dto.RespResult;
import com.medical.entity.User;
import com.medical.service.UserService;
import com.medical.utils.Assert;
import com.medical.utils.OssUtil;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.multipart.MultipartFile;

import java.io.IOException;

/**
 * @apiNote
 * @author HY
 */
@RestController
@RequestMapping("/file")
public class FileController extends BaseController<User> {

    @Autowired
    private UserService userService;

    /**
     * Upload a file
     */
    @PostMapping("/upload")
    public RespResult upload(@RequestParam("file") MultipartFile file) throws IOException {
        String url = OssUtil.upload(file, loginUser.getId() + "");
        if (Assert.isEmpty(url)) {
            return RespResult.fail("Upload failed", url);
        }
        return RespResult.success("Upload succeeded", url);
    }

    /**
     * Upload a captured face image and save its URL on the user record
     */
    @PostMapping("/uploadface")
    public RespResult uploadFace(@RequestParam("file") MultipartFile file) throws IOException {
        String url = OssUtil.uploadface(file);
        if (Assert.isEmpty(url)) {
            return RespResult.fail("Upload failed", url);
        }
        int i = userService.update(url);
        return RespResult.success("Upload succeeded", i);
    }
}
```

8. Front-end code (for reference)

```html
<!-- Face recognition login for the back office -->
<div id="myCard" class="card" style="position: fixed;
    display: none;
    top: 50%;
    left: 50%;
    transform: translate(-50%, -50%);
    background-color: #fff;
    border: 1px solid #ccc;
    padding: 20px;
    z-index: 1000;">
    <span class="close" style="position: absolute;
        top: 10px;
        right: 10px;
        font-size: 24px;
        font-weight: bold;
        cursor: pointer;">×</span> <!-- close icon -->
    <div style="float: left">
        <div style="width: 245px; height: 185px; border: 1px solid red; margin-top: 41px">
            <video id="video" autoplay style="width: 100%; height: 100%"></video>
            <canvas id="canvas" width="245" height="185" style="display: none"></canvas>
        </div>
        <button id="captureButton" style="margin-top: 35px; margin-left: 26px; width: 65px; height: 32px;background-color:rgb(9, 150, 248); color: white; border: none; border-radius: 5px;">Admin 1</button>
        <button style="margin-top: 35px; margin-left: 50px; width: 65px; height: 32px;background-color:rgb(9, 150, 248); color: white; border: none; border-radius: 5px;">Admin 2</button>
    </div>
    <div style="float: left;">
        <img src="assets/images/top.png" style="width: 400px; margin-top: -10px;margin-left: 28px;">
    </div>
    <div>
        <img src="assets/images/bottom.png" style="width: 400px;margin-left: 28px;">
    </div>
</div>
<script>
    // The camera stream starts out as null
    var cameraStream = null;
    document.getElementById('triggerText').addEventListener('click', function() {
        var card = document.getElementById('myCard');
        card.style.display = 'block'; // show the card
        // Get the video element
        var video = document.getElementById('video');
        // Check whether the browser supports getUserMedia
        // (fixed: the original dereferenced navigator.mediaDevices before checking that it exists)
        if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
            // Ask for camera permission and open the camera
            navigator.mediaDevices.getUserMedia({ video: true })
                .then(function (stream) {
                    video.srcObject = stream;
                    cameraStream = stream;
                })
                .catch(function (error) {
                    console.error('Error accessing the camera:', error);
                });
        } else {
            alert('Sorry, your browser does not support getUserMedia');
        }
        // Initialize face tracking
        var canvas = document.getElementById('canvas');
        var context = canvas.getContext('2d');
        var tracker = new tracking.ObjectTracker('face');
        tracker.setInitialScale(4);
        tracker.setStepSize(2);
        tracker.setEdgesDensity(0.1);
        tracking.track('#video', tracker, { camera: true });
        tracker.on('track', function (event) {
            context.clearRect(0, 0, canvas.width, canvas.height);
            event.data.forEach(function (rect) {
                context.strokeStyle = '#a64ceb';
                context.strokeRect(rect.x, rect.y, rect.width, rect.height);
                context.font = '11px Helvetica';
                context.fillStyle = "#fff";
                context.fillText('x: ' + rect.x + 'px', rect.x + rect.width + 5, rect.y + 11);
                context.fillText('y: ' + rect.y + 'px', rect.x + rect.width + 5, rect.y + 22);
            });
        });
        // Click handler for the capture button
        var captureButton = document.getElementById('captureButton');
        captureButton.addEventListener('click', function () {
            // Draw the current video frame onto the canvas
            context.drawImage(video, 0, 0, canvas.width, canvas.height);
            // Convert the canvas image to a Blob
            canvas.toBlob(function (blob) {
                // Save the file with FileSaver.js
                // saveAs(blob, 'face_capture.png');
                var formdata = new FormData();
                formdata.append('file', blob, 'image.png');
                $.ajax({
                    async: false,
                    type: "POST",
                    url: "file/uploadface",
                    dataType: "json",
                    data: formdata,
                    contentType: false, // required when uploading an image via ajax
                    processData: false, // required when uploading an image via ajax
                    success: function (data) {
                        console.log(data);
                        layer.msg(data['message']);
                        $("#img-preview").attr('src', data['data']);
                        $("#img").attr('value', data['data']);
                        callPhotoEndpoint(function (facegoal) {
                            if (facegoal > 0.8) {
                                window.location.href = 'doctor';
                            }
                        });
                    }
                })
            });
        });
        // Helper that calls the @PostMapping("/photo") endpoint
        function callPhotoEndpoint(callback) {
            $.ajax({
                type: "POST",
                url: "face/photo", // make sure this is the correct URL
                dataType: "json",
                success: function (response) {
                    if (typeof callback === 'function') {
                        callback(response);
                    }
                },
                error: function (xhr, status, error) {
                    // Handle the error case
                    console.error("An error occurred: " + error);
                }
            });
        }
    });
    // Click listener for the close icon
    document.querySelector('.card .close').addEventListener('click', function() {
        var card = document.getElementById('myCard');
        card.style.display = 'none'; // hide the card
        if (cameraStream) {
            cameraStream.getTracks().forEach(function (track) {
                track.stop();
            });
            cameraStream = null;
        }
    });
</script>
```