1 Viewing storage pool information

List the names of the storage pools:

[root@ceph141 ceph]# ceph osd pool ls

.mgr

List the storage pools together with their pool IDs:

[root@ceph141 ceph]# ceph osd lspools

1 .mgr

View detailed information about the storage pools:

[root@ceph141 ceph]# ceph osd pool ls detail

pool 1 '.mgr' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 1 pgp_num 1 autoscale_mode on last_change 21 flags hashpspool stripe_width 0 pg_num_max 32 pg_num_min 1 application mgr read_balance_score 6.98
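Each pool is printed as one line of space-separated keys and values, so the fields of interest can be extracted with standard text tools. A minimal sketch, assuming bash and awk and the field layout shown above; the function name `pool_summary` is hypothetical:

```shell
# Hypothetical helper: summarise `ceph osd pool ls detail` output read from stdin.
# Prints "<id> <name> size=<n> pg_num=<n>" for every line that starts with "pool".
pool_summary() {
  awk -v q="'" '$1 == "pool" {
    id = $2
    name = $3
    gsub(q, "", name)               # drop the quotes around the pool name
    size = ""; pg = ""
    for (i = 4; i < NF; i++) {      # look for the wanted keys; each value follows its key
      if ($i == "size")   size = $(i + 1)
      if ($i == "pg_num") pg   = $(i + 1)
    }
    printf "%s %s size=%s pg_num=%s\n", id, name, size, pg
  }'
}

# Usage on a live cluster:
#   ceph osd pool ls detail | pool_summary
```

Exact key matching keeps `min_size`, `pg_num_max` and `pg_num_target` from being confused with `size` and `pg_num`.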

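2 Creating a storage pool

Note: in version 18.2.4, if no PG count is specified when a pool is created, the pool gets 32 PGs by default.

1. Create a pool

[root@ceph141 ceph]# ceph osd pool create wzy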

pool 'wzy' created

[root@ceph141 ceph]# ceph osd pool ls

.mgr

wzy

[root@ceph141 ceph]# ceph osd pool ls detail

pool 1 '.mgr' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 1 pgp_num 1 autoscale_mode on last_change 21 flags hashpspool stripe_width 0 pg_num_max 32 pg_num_min 1 application mgr read_balance_score 6.98

pool 2 'wzy' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 78 lfor 0/0/76 flags hashpspool stripe_width 0 read_balance_score 1.7

2. You can also specify the PG count when creating a pool; normally pg_num and pgp_num are set to the same value.

[root@ceph141 ceph]# ceph osd pool create zhiyong 128 128

pool 'zhiyong' created

[root@ceph141 ceph]# ceph osd pool ls detail

pool 1 '.mgr' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 1 pgp_num 1 autoscale_mode on last_change 21 flags hashpspool stripe_width 0 pg_num_max 32 pg_num_min 1 application mgr read_balance_score 6.98

pool 2 'wzy' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 32 pgp_num 32 autoscale_mode on last_change 78 lfor 0/0/76 flags hashpspool stripe_width 0 read_balance_score 1.75

pool 3 'zhiyong' replicated size 3 min_size 2 crush_rule 0 object_hash rjenkins pg_num 115 pgp_num 112 pg_num_target 32 pgp_num_target 32 autoscale_mode on last_change 141 lfor 0/141/139 flags hashpspool stripe_width 0 read_balance_score 1.95

Although the pool was created with 128 PGs, the output shows pg_num 115, pgp_num 112 and pg_num_target 32: because autoscale_mode is on, the PG autoscaler has chosen a target of 32 and is gradually merging the PGs down toward it.
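If you choose pg_num by hand, a commonly cited rule of thumb from older Ceph documentation is to aim for roughly 100 PGs per OSD: total PGs ≈ (number of OSDs × 100) / replica size, rounded up to the next power of two. That budget is for the whole cluster, so it is shared when there are several pools. The sketch below only does this arithmetic; the function name `suggest_pg_num` and the 100-PGs-per-OSD target are assumptions, and while autoscale_mode is on the autoscaler may still change the value, as happened with the zhiyong pool.

```shell
# Hypothetical helper: rule-of-thumb PG count.
# Assumes a target of ~100 PGs per OSD; the result is rounded up to a power of two.
suggest_pg_num() {
  local osds=$1 size=$2
  local target=$(( osds * 100 / size ))
  local pg=1
  while (( pg < target )); do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}

# Example: 3 OSDs, replica size 3 -> target 100 -> 128
#   suggest_pg_num 3 3
# To keep a manually chosen pg_num, turn the autoscaler off for that pool:
#   ceph osd pool set zhiyong pg_autoscale_mode off
```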

3 Viewing and modifying pool properties

All of the following operations can also be performed in the web dashboard:

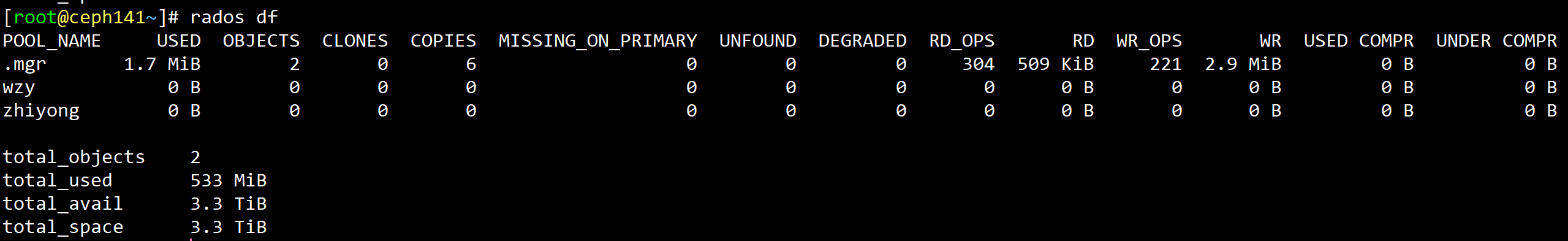

1. View the space used by each pool

[root@ceph141 ~]# rados df

POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

.mgr 1.7 MiB 2 0 6 0 0 0 304 509 KiB 221 2.9 MiB 0 B 0 B

wzy 0 B 0 0 0 0 0 0 0 0 B 0 0 B 0 B 0 B

zhiyong 0 B 0 0 0 0 0 0 0 0 B 0 0 B 0 B 0 B

total_objects 2

total_used 533 MiB

total_avail 3.3 TiB

total_space 3.3 TiB
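To show only the pool name, used space and object count, the plain-text table can be filtered with awk. A minimal sketch, assuming the column order shown above; USED is printed as a number plus a unit, so it occupies two whitespace-separated fields. The function name `pool_usage` is hypothetical.

```shell
# Hypothetical helper: reduce `rados df` output (read from stdin) to
# "<pool> <used value> <used unit> <objects>" for each pool row.
pool_usage() {
  # NR > 1 skips the header; NF > 3 drops blank lines and the total_* summary lines.
  awk 'NR > 1 && NF > 3 { print $1, $2, $3, $4 }'
}

# Usage on a live cluster:
#   rados df | pool_usage
```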

2. View I/O statistics for a specific pool; "nothing is going on" means the pool currently has no client read or write activity.

[root@ceph141 ~]# ceph osd pool stats zhiyong

pool zhiyong id 3

nothing is going on
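This message is handy for a quick check before doing maintenance on a pool. A minimal sketch that loops over all pools and prints those that are currently idle; it assumes your Ceph version reports an idle pool with the phrase "nothing is going on", as in the output above, and the function name `idle_pools` is hypothetical.

```shell
# Hypothetical helper: print the names of pools that currently report no I/O.
# Relies on `ceph osd pool stats <pool>` printing "nothing is going on" when idle.
idle_pools() {
  local pool
  for pool in $(ceph osd pool ls); do
    if ceph osd pool stats "$pool" | grep -q 'nothing is going on'; then
      echo "$pool"
    fi
  done
}
```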

3. View the pool's replica count (the size property); here it is 3.

[root@ceph141 ~]# ceph osd pool get zhiyong size

size: 3

4. Change the replica count of the zhiyong pool to 2, and then to 4

[root@ceph141 ~]# ceph osd pool set zhiyong size 2

set pool 3 size to 2

[root@ceph141 ~]# ceph osd pool set zhiyong size 4

set pool 3 size to 4
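The replica count directly determines usable capacity: every object is stored size times, so the usable space is roughly the raw space divided by size (rados df above reports 3.3 TiB raw). A rough sketch, assuming a plain replicated pool and ignoring the nearfull/full ratios and other overhead; the function name `usable_capacity` is hypothetical.

```shell
# Hypothetical helper: approximate usable capacity of a replicated pool.
# usable ~= raw / size (ignores full ratios, metadata and data imbalance).
usable_capacity() {
  local raw=$1 size=$2
  awk -v raw="$raw" -v size="$size" 'BEGIN { printf "%.2f\n", raw / size }'
}

# Example with the 3.3 TiB raw space reported by rados df:
#   usable_capacity 3.3 3   # about 1.10 (TiB) with size 3
#   usable_capacity 3.3 2   # about 1.65 (TiB) with size 2
```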

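4 Deleting a storage pool

By default, pool deletion is protected, so a pool cannot be removed casually.

A direct delete attempt only returns a warning:

[root@ceph141 ~]# ceph osd pool delete zhiyong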

Error EPERM: WARNING: this will *PERMANENTLY DESTROY* all data stored in pool zhiyong. If you are *ABSOLUTELY CERTAIN* that is what you want, pass the pool name *twice*, followed by --yes-i-really-really-mean-it.

Even when you follow that instruction, the deletion is still refused; the monitors' mon_allow_pool_delete option has to be enabled first:

[root@ceph141 ~]# ceph osd pool delete zhiyong zhiyong --yes-i-really-really-mean-it

Error EPERM: pool deletion is disabled; you must first set the mon_allow_pool_delete config option to true before you can destroy a pool

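The pool's own nodelete property is false, meaning the pool itself is not protected against deletion:

[root@ceph141 ~]# ceph osd pool get zhiyong nodelete

nodelete: false

To delete a pool, its nodelete property must be false and mon_allow_pool_delete must be true.

# Tell the mon daemons that pools may be deleted

[root@ceph141 ~]# ceph tell mon.* injectargs --mon_allow_pool_delete=true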

mon.ceph141: {}

mon.ceph141: mon_allow_pool_delete = ''

mon.ceph142: {}

mon.ceph142: mon_allow_pool_delete = ''

mon.ceph143: {}

mon.ceph143: mon_allow_pool_delete = ''

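[root@ceph141 ~]# ceph osd pool delete zhiyong zhiyong --yes-i-really-really-mean-it

pool 'zhiyong' removed

Conversely, you can protect a pool from deletion by setting its nodelete property to true:

[root@ceph141 ~]# ceph osd pool ls

.mgr

wzy

[root@ceph141 ~]# ceph osd pool set wzy nodelete true

set pool 2 nodelete to true

**Summary:** a pool can be deleted only when both conditions hold at the same time: its nodelete property is false and mon_allow_pool_delete is true.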
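Note that ceph tell ... injectargs changes the value only in the running monitors, so it is lost when they restart, and leaving mon_allow_pool_delete enabled removes the protection for every pool. A minimal sketch of a more careful routine that uses the persistent ceph config set instead and switches the option back off afterwards; the function name `delete_pool` is hypothetical, and resetting to false assumes the option was at its default (false) beforehand.

```shell
# Hypothetical helper: delete one pool while keeping mon_allow_pool_delete
# off the rest of the time. Assumes the option's normal value is false.
delete_pool() {
  local pool=$1 rc=0
  # Respect the per-pool protection flag.
  if ceph osd pool get "$pool" nodelete | grep -q 'nodelete: true'; then
    echo "pool $pool has nodelete=true, refusing to delete" >&2
    return 1
  fi
  # Stored in the mon config database, unlike injectargs.
  ceph config set mon mon_allow_pool_delete true
  ceph osd pool delete "$pool" "$pool" --yes-i-really-really-mean-it || rc=$?
  # Turn the cluster-wide safety back on whether or not the delete succeeded.
  ceph config set mon mon_allow_pool_delete false
  return "$rc"
}

# Usage:
#   delete_pool zhiyong
```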

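5 Storage pool quotas in a Ceph cluster

Ceph officially supports two kinds of pool quotas: a limit on the number of objects and a limit on the amount of data stored (in bytes); see the official documentation for details.

1. Create a pool

[root@ceph141 ~]# ceph osd pool ls

.mgr

zhiyong-rbd

zhiyong18-rbd

[root@ceph141 ~]# ceph osd pool create zhiyong 16 16

pool 'zhiyong' created

[root@ceph141 ~]# ceph osd pool ls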

.mgr

zhiyong-rbd

zhiyong18-rbd

zhiyong

2. Check the quotas; none are set yet

[root@ceph141 ~]# ceph osd pool get-quota zhiyong

quotas for pool 'zhiyong':

max objects: N/A

max bytes : N/A

3. Limit the number of objects to 1000

[root@ceph141 ~]# ceph osd pool set-quota zhiyong max_objects 1000

[root@ceph141 ~]# ceph osd pool get-quota zhiyong

quotas for pool 'zhiyong':

max objects: 1k objects (current num objects: 0 objects)

max bytes : N/A

4. Limit the stored data to 10 GiB (10 × 1024³ bytes)

[root@ceph141 ~]# echo 10*1024*1024*1024 | bc

10737418240
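bc is not strictly needed here: on common 64-bit platforms bash integer arithmetic is 64-bit, which is plenty for byte counts. A small sketch; the helper name `gib_to_bytes` is hypothetical.

```shell
# Hypothetical helper: convert GiB to bytes with bash integer arithmetic.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Usage:
#   ceph osd pool set-quota zhiyong max_bytes "$(gib_to_bytes 10)"
```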

[root@ceph141 ~]# ceph osd pool set-quota zhiyong max_bytes 10737418240

set-quota max_bytes = 10737418240 for pool zhiyong

Check the quotas again:

[root@ceph141 ~]# ceph osd pool get-quota zhiyong

quotas for pool 'zhiyong':

max objects: 1k objects (current num objects: 0 objects)

max bytes : 10 GiB (current num bytes: 0 bytes)

5. Remove the quotas: setting a limit to 0 clears it, and the pool goes back to having no quota

[root@ceph141 ~]# ceph osd pool set-quota zhiyong max_objects 0

set-quota max_objects = 0 for pool zhiyong

[root@ceph141 ~]# ceph osd pool set-quota zhiyong max_bytes 0

set-quota max_bytes = 0 for pool zhiyong

[root@ceph141 ~]# ceph osd pool get-quota zhiyong

quotas for pool 'zhiyong':

max objects: N/A

max bytes : N/A
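Because both limits are set with the same command and 0 means "no limit", setting and clearing quotas can be wrapped in one helper. A minimal sketch; the function name `set_pool_quota` and its GiB-based third argument are hypothetical conveniences, not part of the ceph CLI.

```shell
# Hypothetical helper: set both quotas on a pool in one call.
# Pass 0 for a limit to remove it, e.g. `set_pool_quota zhiyong 0 0` clears both.
set_pool_quota() {
  local pool=$1 max_objects=$2 max_gib=$3
  ceph osd pool set-quota "$pool" max_objects "$max_objects" &&
    ceph osd pool set-quota "$pool" max_bytes $(( max_gib * 1024 * 1024 * 1024 ))
}

# Usage:
#   set_pool_quota zhiyong 1000 10   # 1000 objects, 10 GiB
#   set_pool_quota zhiyong 0 0       # remove both limits
```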
