TensorRT C++ version

This article implements the YOLO inference pipeline in C++ with TensorRT.

Installing TensorRT

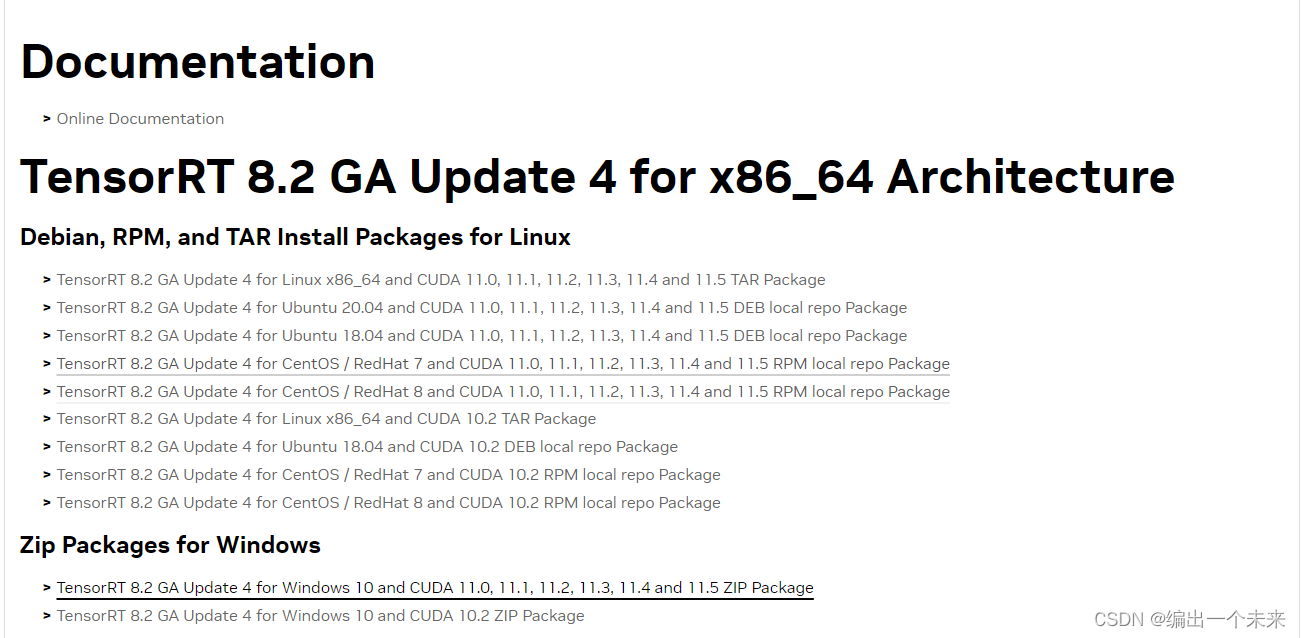

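TensorRT download link: choose the version that matches the CUDA version installed on your machine. After extracting the archive, complete the following steps.

2. Copy all .lib files from TensorRT-8.2.2.1\lib to C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\lib\x64

3. Copy all .dll files from TensorRT-8.2.2.1\lib to C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.2\bin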

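Verifying the installation

Go to TensorRT-8.2.2.1/samples/sampleMNIST and open sample_mnist.sln in VS2017. In the project properties, set the include directories (D:\tensorrt\include) and the library directories (D:\tensorrt\lib).

Project Properties -> Linker -> Input -> Additional Dependencies: add the names of the .lib files found in the TensorRT lib directory.

In sample_mnist.sln, rebuild the solution and run it. If the output shows a pattern that looks like a digit, TensorRT is configured correctly.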

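Installing OpenCV

Detailed installation tutorial 1

Detailed installation tutorial 2

Both tutorials above require you to build OpenCV yourself with CMake.

This one is a prebuilt version from GitHub.

You only need to download it, extract it, and add it to the system environment variables.

This is the installation method for Ubuntu.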

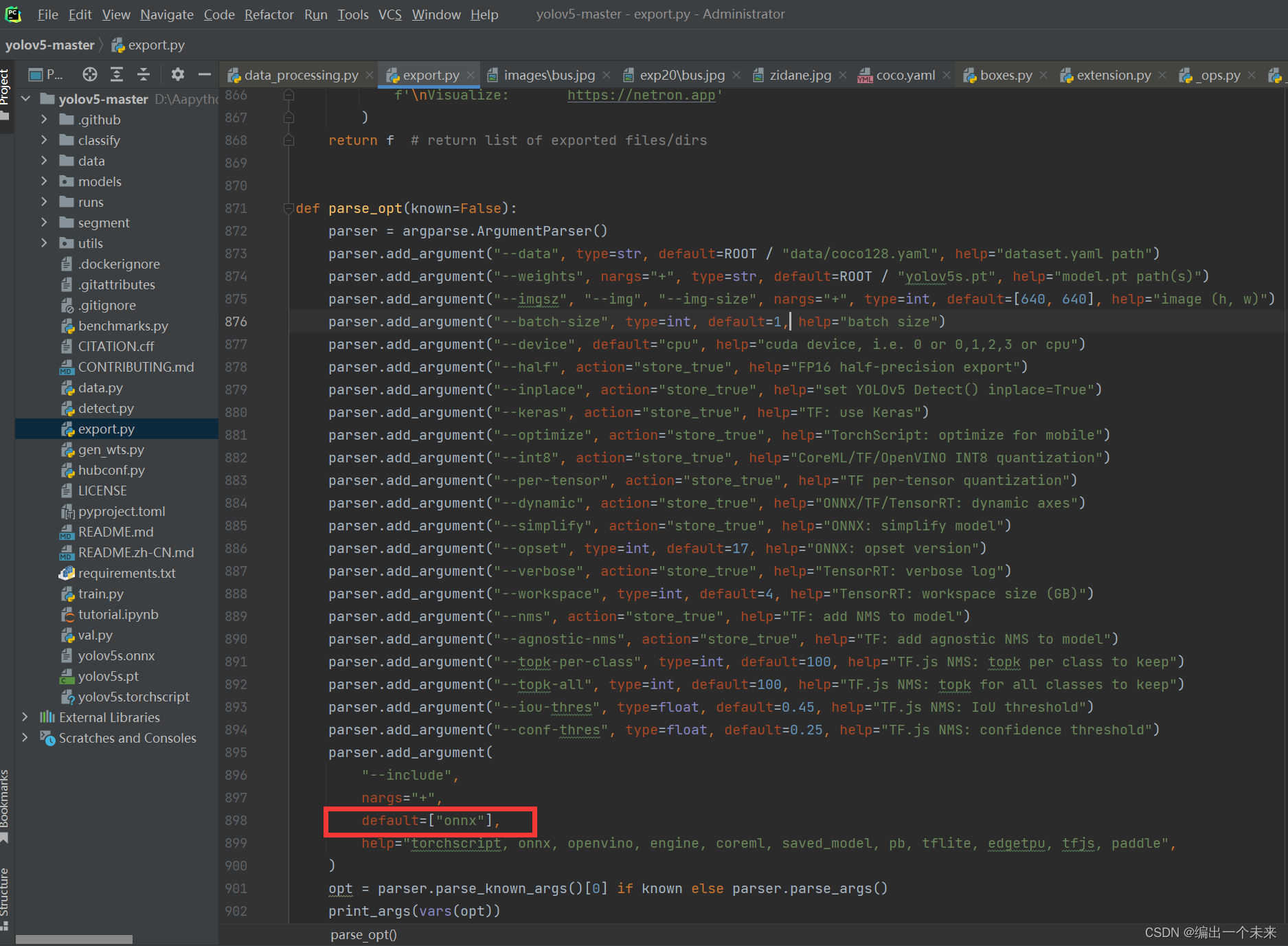

Converting pt to ONNX and ONNX to engine

# YOLOv8 export code

from ultralytics import YOLO

model = YOLO('yolov8n.pt')

model.export(format='onnx')

For YOLOv5, just follow the official export procedure.

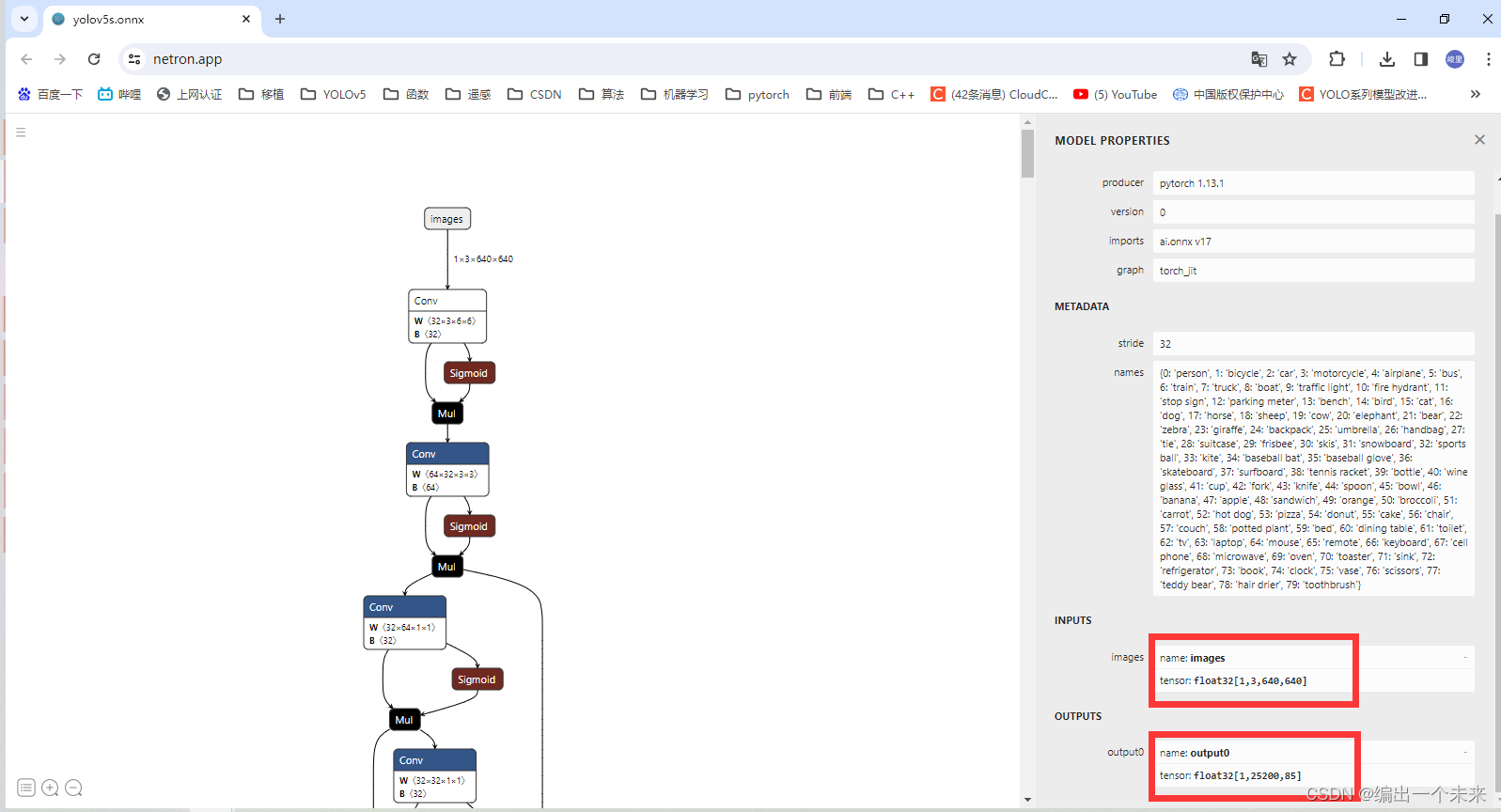

Open the ONNX file in Netron to inspect the model's inputs and outputs.

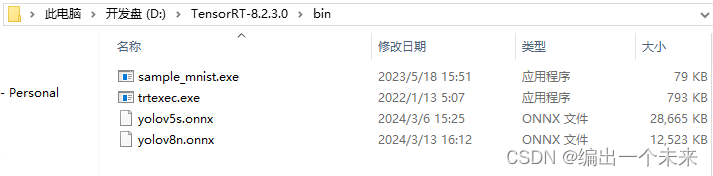

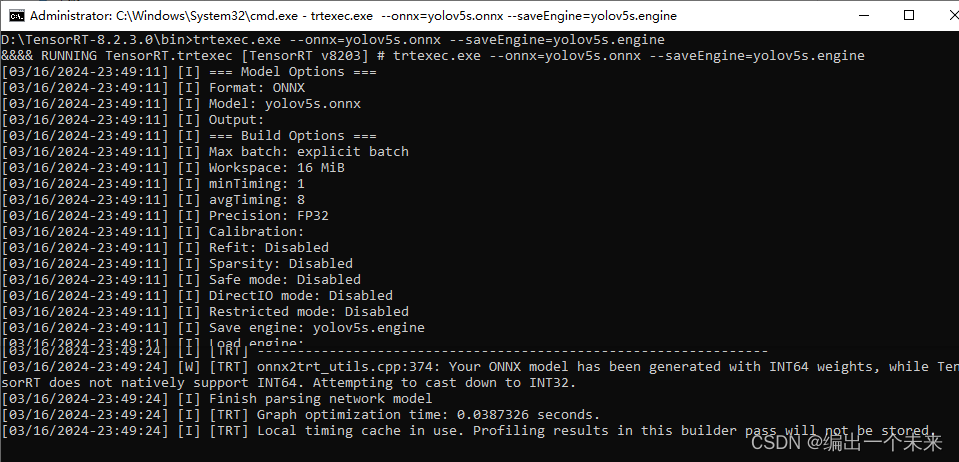

Use the trtexec tool that ships with TensorRT to convert the ONNX model to an engine.

Look up the other options yourself as needed.

trtexec.exe --onnx=yolov5s.onnx --saveEngine=yolov5s.engine

![image](https://img-blog.csdnimg.cn/direct/88ccd92c8cfc4856849e275b19570d29.png)

CMakeLists.txt

cmake_minimum_required (VERSION 3.8)

project (test)

#opencv

set(OpenCV_DIR "D:/opencv4.7/build")

find_package(OpenCV REQUIRED)

#tensor

include_directories("D:/TensorRT-8.2.3.0/include")

link_directories("D:/TensorRT-8.2.3.0/lib")

include_directories("D:/TensorRT-8.2.3.0/samples/common")

#cuda

include_directories("D:/CUDA/NVIDIA GPU Computing Toolkit/CUDA/v11.3/include")

# On Windows the CUDA import libraries (e.g. cudart.lib) are in lib/x64

link_directories("D:/CUDA/NVIDIA GPU Computing Toolkit/CUDA/v11.3/lib/x64")

# executable

add_executable(test "main.cpp" "main.h" "D:/TensorRT-8.2.3.0/samples/common/logger.cpp")

# libraries to link (samples/common is an include directory, not a library, so it is not linked)

target_link_libraries(test ${OpenCV_LIBS})

target_link_libraries(test nvinfer)

target_link_libraries(test cudart)

Main function (yolo.cpp)

int main() {

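// filename (the path to the .engine file) is assumed to be defined elsewhere, e.g. in main.h

int DLACore = -1; // -1 skips setDLACore in load_engine_file2; DLA is only available on some embedded platforms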

nvinfer1::ICudaEngine* engine = load_engine_file2(filename, DLACore);

if (!engine){return -1;}

nvinfer1::IExecutionContext* context = engine->createExecutionContext();

std::vector<void*> buffers;

buffers.resize(2);

CHECK(cudaMalloc(&buffers[0], BATCH_SIZE * 3 * INPUT_H * INPUT_W * sizeof(float)));

CHECK(cudaMalloc(&buffers[1], BATCH_SIZE * OUTPUT_SIZE * sizeof(float)));

cudaStream_t stream;

CHECK(cudaStreamCreate(&stream));

float* data_model = new float[BATCH_SIZE * 3 * INPUT_H * INPUT_W];

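// image_path is assumed to be defined elsewhere; proprecess takes the decoded image, not a file name

cv::Mat img = cv::imread(image_path);

if (img.empty()) { return -1; }

proprecess(img, INPUT_H, INPUT_W, data_model);

CHECK(cudaMemcpyAsync(buffers[0], data_model, BATCH_SIZE * 3 * INPUT_H * INPUT_W * sizeof(float), cudaMemcpyHostToDevice, stream));

context->setBindingDimensions(0, nvinfer1::Dims4(BATCH_SIZE, 3, INPUT_H, INPUT_W));

// enqueueV2 runs inference on the same stream as the two copies, so the three operations execute in order

context->enqueueV2(buffers.data(), stream, nullptr);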

float* prob_model = new float[BATCH_SIZE * OUTPUT_SIZE];

CHECK(cudaMemcpyAsync(prob_model, buffers[1], BATCH_SIZE * OUTPUT_SIZE * sizeof(float), cudaMemcpyDeviceToHost, stream));

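cudaStreamSynchronize(stream);

// the post-processing shown later runs here, before the buffers are released

cudaStreamDestroy(stream);

CHECK(cudaFree(buffers[0]));

CHECK(cudaFree(buffers[1]));

delete[] data_model;

delete[] prob_model;

delete context; // TensorRT 8 objects can be released with delete

delete engine;

return 0;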

}
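The byte counts passed to cudaMalloc and cudaMemcpyAsync above are all products of the tensor dimensions and sizeof(float). As a minimal sketch of that arithmetic, assuming a 1 x 3 x 640 x 640 input and the 25200 x 85 output used in the post-processing section (the helper names element_count and float_tensor_bytes are hypothetical and do not come from TensorRT):

```cpp
#include <cstddef>
#include <functional>
#include <numeric>
#include <vector>

// Number of elements in a tensor with the given dimensions, e.g. {1, 3, 640, 640}.
std::size_t element_count(const std::vector<std::size_t>& dims) {
    return std::accumulate(dims.begin(), dims.end(), std::size_t{1},
                           std::multiplies<std::size_t>());
}

// Size in bytes of a float32 tensor with the given dimensions.
// This is the value that would be passed to cudaMalloc / cudaMemcpyAsync.
std::size_t float_tensor_bytes(const std::vector<std::size_t>& dims) {
    return element_count(dims) * sizeof(float);
}
```

Under these assumptions, float_tensor_bytes({1, 3, 640, 640}) is the input buffer size and float_tensor_bytes({1, 25200, 85}) the output buffer size. Computing both from one list of dimensions keeps the allocation and the copy from drifting apart.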

Image preprocessing

cv::Mat letterboxs(cv::Mat image) {

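int x = 0, y = 0, w = 0, h = 0;

// Compute the scale factor for each axis; the smaller one decides whether the height or the width limits the resize.

float rh = INPUT_H / (image.rows * 1.0);

float rw = INPUT_W / (image.cols * 1.0);

// Compute the resized width w and height h, and the position (x, y) of the resized image inside the target image.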

if (rh > rw) {

w = INPUT_W;

h = image.rows * rw;

x = 0;

y = (INPUT_H - h) / 2;

}

else {

w = image.cols * rh;

h = INPUT_H;

y = 0;

x = (INPUT_W - w) / 2;

}

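// Create a gray target image of size INPUT_W x INPUT_H (the letterbox). cv::Mat takes rows (height) first, then cols (width).

cv::Mat letterbox(INPUT_H, INPUT_W, image.type(), cv::Scalar(128, 128, 128));

// Resize the original image to (w, h) and write the result into the region (x, y, w, h) of letterbox.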

cv::resize(image, letterbox(cv::Rect(x, y, w, h)), cv::Size(w, h));

return letterbox;

}
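The geometry inside letterboxs can be checked without OpenCV. The following is a minimal sketch of the same calculation in plain C++; the struct and function names are hypothetical, and the sizes are truncated to int in the same way as in the function above.

```cpp
#include <algorithm>

// Placement of the resized image inside the padded canvas.
struct LetterboxGeometry {
    float scale;  // resize factor applied to both axes
    int w, h;     // size of the resized image
    int x, y;     // top-left offset of the resized image inside the canvas
};

// Scale the source so that it fits inside dst_w x dst_h while keeping its
// aspect ratio, then center it; the remaining border is padding.
LetterboxGeometry letterbox_geometry(int src_w, int src_h, int dst_w, int dst_h) {
    const float scale = std::min(dst_w / static_cast<float>(src_w),
                                 dst_h / static_cast<float>(src_h));
    LetterboxGeometry g;
    g.scale = scale;
    g.w = static_cast<int>(src_w * scale);
    g.h = static_cast<int>(src_h * scale);
    g.x = (dst_w - g.w) / 2;
    g.y = (dst_h - g.h) / 2;
    return g;
}
```

For a 1280 x 720 image and a 640 x 640 network input this gives a scale of 0.5, a resized image of 640 x 360, and 140 rows of padding above and below it.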

void proprecess(cv::Mat img, int h, int w, float* data) {

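cv::Mat pr_img = letterboxs(img); // letterboxs takes only the image and uses INPUT_W / INPUT_H

for (int i = 0; i < h * w; i++) {

// OpenCV stores pixels as BGR; YOLO models are normally trained on RGB, so the channels are swapped here

data[i] = pr_img.at<cv::Vec3b>(i)[2] / 255.0;

data[i + h * w] = pr_img.at<cv::Vec3b>(i)[1] / 255.0;

data[i + 2 * h * w] = pr_img.at<cv::Vec3b>(i)[0] / 255.0;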

}

}
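The loop in proprecess converts interleaved pixels (HWC) into three separate planes (CHW) and scales them to [0, 1]. Below is a minimal sketch of that layout change on a raw byte buffer, without OpenCV. It assumes the input is 8-bit BGR, as OpenCV produces, and that the model expects RGB; the function name is hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert an interleaved 8-bit BGR image (HWC) into planar float RGB (CHW)
// with values scaled to [0, 1].
std::vector<float> hwc_bgr_to_chw_rgb(const std::vector<std::uint8_t>& bgr, int h, int w) {
    const std::size_t plane = static_cast<std::size_t>(h) * w;
    std::vector<float> chw(3 * plane);
    for (std::size_t i = 0; i < plane; ++i) {
        chw[i]             = bgr[3 * i + 2] / 255.0f;  // R plane
        chw[i + plane]     = bgr[3 * i + 1] / 255.0f;  // G plane
        chw[i + 2 * plane] = bgr[3 * i + 0] / 255.0f;  // B plane
    }
    return chw;
}
```

The returned vector has the same layout as data_model, so its data() pointer could be copied to the input binding directly.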

Building the TensorRT engine

Loading the engine file

nvinfer1::ICudaEngine* load_engine_file2(const std::string& filename, int DLACore) {

int fsize;

std::vector<unsigned char> engine_data;

std::ifstream engine_file(filename, std::ios::binary);

if (!engine_file) { return nullptr; } // the engine file could not be opened

engine_file.seekg(0, engine_file.end);

fsize = engine_file.tellg();

engine_data.resize(fsize);

engine_file.seekg(0, engine_file.beg);

engine_file.read(reinterpret_cast<char*>(engine_data.data()), fsize);

static Logger gLogger;

nvinfer1::IRuntime* runtime = nvinfer1::createInferRuntime(gLogger.getTRTLogger());

if (DLACore != -1)

{

runtime->setDLACore(DLACore);

}

return runtime->deserializeCudaEngine(engine_data.data(), fsize, nullptr);

}
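The file-reading half of load_engine_file2 does not depend on TensorRT and can be isolated and tested on its own. Here is a minimal sketch of it with error handling; read_binary_file is a hypothetical helper name, and returning an empty vector on failure is just one possible convention.

```cpp
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

// Read a whole binary file into memory.
// Returns an empty vector if the file cannot be opened, is empty, or cannot be read.
std::vector<char> read_binary_file(const std::string& path) {
    // std::ios::ate opens the file positioned at the end, so tellg() gives its size.
    std::ifstream file(path, std::ios::binary | std::ios::ate);
    if (!file) return {};
    const std::streamsize size = file.tellg();
    if (size <= 0) return {};
    std::vector<char> data(static_cast<std::size_t>(size));
    file.seekg(0, std::ios::beg);
    if (!file.read(data.data(), size)) return {};
    return data;
}
```

The caller can then test the result with empty() before passing data() and size() to deserializeCudaEngine.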

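Post-processing

// ========== 8. Fetch the inference results =========

// prob_model holds 25200 predictions of 85 floats each; COLS and ROWS are assumed to be 25200 and 85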

std::vector<std::vector<float>> prediction(25200, std::vector<float>(85));

int index = 0;

for (int i = 0; i < COLS; ++i) {

for (int j = 0; j < ROWS; ++j) {

prediction[i][j] = prob_model[index++];

}

}
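The 25200 in the code above follows from the input size. As a sketch of the arithmetic, assuming the usual YOLOv5 head with detection grids at strides 8, 16 and 32 and three anchors per grid cell (the function name is hypothetical):

```cpp
#include <vector>

// Number of prediction rows for a square input, assuming one detection grid
// per stride and a fixed number of anchors per grid cell.
int num_predictions(int input_size, const std::vector<int>& strides, int anchors_per_cell) {
    int total = 0;
    for (int s : strides) {
        const int cells = input_size / s;  // the grid is cells x cells
        total += anchors_per_cell * cells * cells;
    }
    return total;
}
```

For a 640 x 640 input this is 3 * (80 * 80 + 40 * 40 + 20 * 20) = 25200. Each row has 85 values: 4 box coordinates, 1 objectness score, and 80 class scores for a COCO-trained model. A YOLOv8 export uses a different output layout, so check the shape in Netron rather than reusing these numbers.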

// ========== 9. Keep the rows whose objectness exceeds conf_thres in xc =========

std::vector<std::vector<float>> xc;

for (const auto& row : prediction) {

if (row[4] > conf_thres) {

xc.push_back(row);

}

}

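// ========== 10. confidence = obj_conf * cls_conf =========

if (!xc.empty()) { std::cout << xc[0].size() << std::endl; } // xc[0] is only valid when something passed the threshold

for (auto& row : xc) {

for (std::size_t i = 5; i < row.size(); i++) {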

row[i] *= row[4];

}

}

// ========== 11. Slice out xywh and convert it to xyxy =========

std::vector<std::vector<float>> xywh;

for (const auto& row : xc) {

std::vector<float> sliced_row(row.begin(), row.begin() + 4);

xywh.push_back(sliced_row);

}

std::vector<std::vector<float>> box(xywh.size(), std::vector<float>(4, 0.0));

xywhtoxxyy(xywh,box);

void xywhtoxxyy(const std::vector<std::vector<float>>& xywh, std::vector<std::vector<float>>& box) {

for (std::size_t i = 0; i < xywh.size(); ++i) {

box[i][0] = xywh[i][0] - xywh[i][2] / 2; // x1 = cx - w / 2

box[i][1] = xywh[i][1] - xywh[i][3] / 2; // y1 = cy - h / 2

box[i][2] = xywh[i][0] + xywh[i][2] / 2; // x2 = cx + w / 2

box[i][3] = xywh[i][1] + xywh[i][3] / 2; // y2 = cy + h / 2

}

}

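// ========== 12. Get the highest class confidence and its index =========

std::size_t mi = xc.empty() ? 0 : xc[0].size(); // avoid reading xc[0] when nothing passed the threshold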

std::vector<float> conf(xc.size(), 0.0);

std::vector<float> j(xc.size(), 0.0);

for (std::size_t i = 0; i < xc.size(); ++i) {

// emulate the slice x[:, 5:mi]

auto sliced_x = std::vector<float>(xc[i].begin() + 5, xc[i].begin() + mi);

// find the maximum

auto max_it = std::max_element(sliced_x.begin(), sliced_x.end());

// index of the maximum

std::size_t max_index = std::distance(sliced_x.begin(), max_it);

// store the maximum value and its index in the corresponding vectors

conf[i] = *max_it;

j[i] = max_index; // index within the slice, which is the class id

}

// ========== 13. concat x1, y1, x2, y2, score, index =========

for (int i = 0; i < xc.size(); i++) {

box[i].push_back(conf[i]);

box[i].push_back(j[i]);

}

std::vector<std::vector<float>> output;

for (int i = 0; i < xc.size(); i++) {

output.push_back(box[i]); // copy the [x1, y1, x2, y2, score, index] row

}
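Steps 8 to 13 copy the output several times into nested vectors. The same result can be produced in one pass over the flat buffer. The following is a sketch of that alternative, not the code used in this article; it assumes each row is laid out as [cx, cy, w, h, obj_conf, class scores...] with at least one class score, and the Detection struct and decode function are hypothetical names.

```cpp
#include <cstddef>
#include <vector>

struct Detection {
    float x1, y1, x2, y2, score;
    int class_id;
};

// Decode `rows` predictions of `row_len` floats each from a flat buffer.
// Rows whose objectness is not above conf_thres are skipped.
std::vector<Detection> decode(const float* out, std::size_t rows,
                              std::size_t row_len, float conf_thres) {
    std::vector<Detection> dets;
    for (std::size_t r = 0; r < rows; ++r) {
        const float* p = out + r * row_len;
        if (p[4] <= conf_thres) continue;          // step 9: objectness filter
        std::size_t best = 5;                      // step 12: best class score
        for (std::size_t c = 6; c < row_len; ++c) {
            if (p[c] > p[best]) best = c;
        }
        Detection d;
        d.x1 = p[0] - p[2] / 2;                    // step 11: xywh -> xyxy
        d.y1 = p[1] - p[3] / 2;
        d.x2 = p[0] + p[2] / 2;
        d.y2 = p[1] + p[3] / 2;
        d.score = p[4] * p[best];                  // step 10: obj_conf * cls_conf
        d.class_id = static_cast<int>(best - 5);
        dets.push_back(d);
    }
    return dets;
}
```

With the buffers from the main function, the call would look like decode(prob_model, 25200, 85, conf_thres). Because obj_conf is positive for every kept row, taking the maximum class score before multiplying selects the same class as multiplying first.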

// ========== 14. Apply non-maximum suppression ==========

std::vector<BoundingBox> result = nonMaximumSuppression(output, overlapThreshold);

struct BoundingBox {

float x1, y1, x2, y2, score, index;

};

float iou(const BoundingBox& box1, const BoundingBox& box2) {

float max_x = std::max(box1.x1, box2.x1); // left edge of the intersection

float min_x = std::min(box1.x2, box2.x2); // right edge of the intersection

float max_y = std::max(box1.y1, box2.y1);

float min_y = std::min(box1.y2, box2.y2);

if (min_x <= max_x || min_y <= max_y) // no overlap

return 0;

float over_area = (min_x - max_x) * (min_y - max_y); // intersection area

float area_a = (box1.x2 - box1.x1) * (box1.y2 - box1.y1);

float area_b = (box2.x2 - box2.x1) * (box2.y2 - box2.y1);

float iou = over_area / (area_a + area_b - over_area);

return iou;

}

std::vector<BoundingBox> nonMaximumSuppression(std::vector<std::vector<float>>& boxes, float overlapThreshold) {

std::vector<BoundingBox> convertedBoxes;

// convert the rows into BoundingBox structs

for (const auto& box : boxes) {

if (box.size() == 6) { // expects [x1, y1, x2, y2, score, index]

BoundingBox bbox;

bbox.x1 = box[0];

bbox.y1 = box[1];

bbox.x2 = box[2];

bbox.y2 = box[3];

bbox.score = box[4];

bbox.index = box[5];

convertedBoxes.push_back(bbox);

}

else {

std::cerr << "Invalid box format!" << std::endl;

}

}

// sort the boxes by score in descending order

std::sort(convertedBoxes.begin(), convertedBoxes.end(), [](const BoundingBox& a, const BoundingBox& b) {

return a.score > b.score;

});

// non-maximum suppression

std::vector<BoundingBox> result;

std::vector<bool> isSuppressed(convertedBoxes.size(), false);

for (size_t i = 0; i < convertedBoxes.size(); ++i) {

if (!isSuppressed[i]) {

result.push_back(convertedBoxes[i]);

for (size_t j = i + 1; j < convertedBoxes.size(); ++j) {

if (!isSuppressed[j]) {

float overlap = iou(convertedBoxes[i], convertedBoxes[j]);

if (overlap > overlapThreshold) {

isSuppressed[j] = true;

}

}

}

}

}

// print the result

std::cout << "NMS Result:" << std::endl;

for (const auto& box : result) {

std::cout << "x1: " << box.x1 << ", y1: " << box.y1

<< ", x2: " << box.x2 << ", y2: " << box.y2

<< ", score: " << box.score << ",index:" << box.index << std::endl;

}

return result;

}
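The function above suppresses overlapping boxes regardless of their class, so a high-scoring box of one class can remove an overlapping box of another. A common variant only compares boxes of the same class. Below is a minimal sketch of that per-class variant; it is an alternative to the version above, and the Box struct and function names are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct Box {
    float x1, y1, x2, y2, score;
    int class_id;
};

// Intersection over union of two axis-aligned boxes.
float box_iou(const Box& a, const Box& b) {
    const float iw = std::min(a.x2, b.x2) - std::max(a.x1, b.x1);
    const float ih = std::min(a.y2, b.y2) - std::max(a.y1, b.y1);
    if (iw <= 0.0f || ih <= 0.0f) return 0.0f;  // no overlap
    const float inter = iw * ih;
    const float uni = (a.x2 - a.x1) * (a.y2 - a.y1) +
                      (b.x2 - b.x1) * (b.y2 - b.y1) - inter;
    return inter / uni;
}

// Greedy NMS in which a box can only suppress boxes of its own class.
std::vector<Box> nms_per_class(std::vector<Box> boxes, float iou_thres) {
    std::sort(boxes.begin(), boxes.end(),
              [](const Box& a, const Box& b) { return a.score > b.score; });
    std::vector<bool> suppressed(boxes.size(), false);
    std::vector<Box> kept;
    for (std::size_t i = 0; i < boxes.size(); ++i) {
        if (suppressed[i]) continue;
        kept.push_back(boxes[i]);
        for (std::size_t j = i + 1; j < boxes.size(); ++j) {
            if (!suppressed[j] && boxes[j].class_id == boxes[i].class_id &&
                box_iou(boxes[i], boxes[j]) > iou_thres) {
                suppressed[j] = true;
            }
        }
    }
    return kept;
}
```

Whether the class-agnostic or the per-class behavior is preferable depends on the scene: per-class NMS keeps overlapping objects of different classes, at the cost of sometimes reporting two labels for the same object.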

// ========== 15. Draw the boxes ==========

for (auto& row:result){

cv::Rect r = get_rect(r_image, row);

double rounded_score = round(row.score * 100) / 100;

std::string score_str = cv::format("%.2f", rounded_score); // format the float as a string with two decimal places

cv::rectangle(r_image, r, cv::Scalar(0x27, 0xC1, 0x36), 2);

cv::putText(r_image, class_names[(int)row.index], cv::Point(r.x, r.y-10), cv::FONT_HERSHEY_PLAIN, 1.2, cv::Scalar(0x27, 0xC1, 0x36), 1);

cv::putText(r_image, score_str, cv::Point(r.x+80, r.y-10), cv::FONT_HERSHEY_PLAIN, 1.2, cv::Scalar(0x27, 0xC1, 0x36), 1);

}

cv::imshow("image", r_image);

cv::waitKey(0);
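The get_rect function used above is not shown in this article. If r_image is the original (not letterboxed) image, the box coordinates have to be mapped back from network coordinates by undoing the padding and the scaling. Here is a minimal sketch of that mapping under this assumption; RectI and to_original are hypothetical names, and the result can differ from the integer letterbox offsets by up to a pixel.

```cpp
#include <algorithm>

struct RectI {
    int x, y, w, h;
};

// Map a box given in letterboxed network coordinates (net_w x net_h)
// back to the coordinates of the original image (img_w x img_h).
RectI to_original(float x1, float y1, float x2, float y2,
                  int img_w, int img_h, int net_w, int net_h) {
    const float scale = std::min(net_w / static_cast<float>(img_w),
                                 net_h / static_cast<float>(img_h));
    const float pad_x = (net_w - img_w * scale) / 2.0f;
    const float pad_y = (net_h - img_h * scale) / 2.0f;
    auto clampf = [](float v, float lo, float hi) {
        return std::max(lo, std::min(v, hi));
    };
    // Remove the padding, undo the resize, and clamp to the image bounds.
    const float l = clampf((x1 - pad_x) / scale, 0.0f, static_cast<float>(img_w));
    const float t = clampf((y1 - pad_y) / scale, 0.0f, static_cast<float>(img_h));
    const float r = clampf((x2 - pad_x) / scale, 0.0f, static_cast<float>(img_w));
    const float b = clampf((y2 - pad_y) / scale, 0.0f, static_cast<float>(img_h));
    RectI rect;
    rect.x = static_cast<int>(l);
    rect.y = static_cast<int>(t);
    rect.w = static_cast<int>(r - l);
    rect.h = static_cast<int>(b - t);
    return rect;
}
```

The four fields correspond to the arguments of cv::Rect, so the result could be passed to cv::rectangle after constructing cv::Rect(rect.x, rect.y, rect.w, rect.h).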

Send me a private message for the complete project code.

Test results