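Without further ado, here is the source code. You only need to install the lxml library.

This program completely frees up your hands, and there are no pop-up pages or similar annoyances to deal with.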

__author__ = 'JianqingJiang'

# -*- coding: utf-8 -*-

import urllib2

from lxml import etree

import os

pre_url ='http://torrentkitty/search/tokyohot/'

os.chdir('/Users/JianqingJiang/Downloads/')

def steve(page_num,file_name):

url = pre_url + str(page_num)

print url

ht = urllib2.urlopen(url).read()

content = etree.HTML(ht.lower().decode('utf-8'))

mags = content.xpath("//a[@rel='magnet']")

with open(file_name,'a') as p: # '''Note''':Append mode, run only once!

for mag in mags:

p.write("%s \n \n"%(mag.attrib['href'])+"\n") ##!!encode here to utf-8 to avoid encoding

print "%s \n \n"%(mag.attrib['href'])

for page_num in range(0,10):

print (page_num)

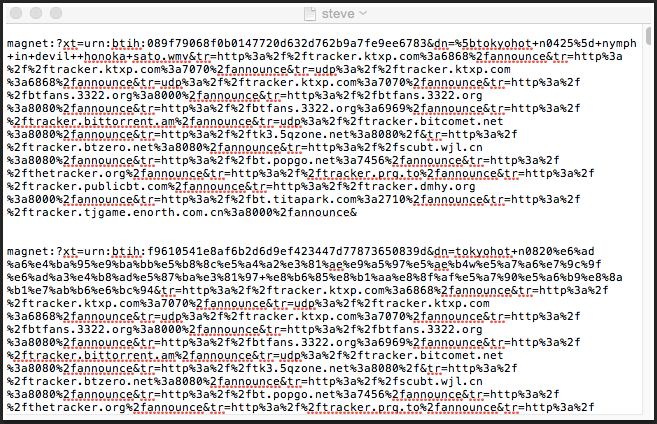

steve(page_num, 'steve.txt')
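To try the link-extraction step without hitting the network, here is a minimal sketch. It is not the script's own method: it assumes Python 3 and swaps lxml's XPath query for the standard library's `html.parser`, so it runs with no extra install. The names `MagnetLinkParser`, `extract_magnets` and `SAMPLE_HTML` are made up for illustration, and the sample markup is hypothetical rather than copied from the real site.

```python
from html.parser import HTMLParser


class MagnetLinkParser(HTMLParser):
    """Collect the href of every <a rel="magnet"> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; names arrive lowercased.
        attr_map = dict(attrs)
        if tag == "a" and attr_map.get("rel") == "magnet":
            href = attr_map.get("href")
            if href:
                self.links.append(href)


def extract_magnets(html_text):
    """Return all magnet hrefs found in html_text, in document order."""
    parser = MagnetLinkParser()
    parser.feed(html_text)
    return parser.links


# Hypothetical sample markup (an assumption, not the real page layout).
SAMPLE_HTML = """
<table>
  <tr><td><a href="/information/abc">Detail</a></td>
      <td><a href="magnet:?xt=urn:btih:0123456789abcdef" rel="magnet">Open</a></td></tr>
  <tr><td><a href="magnet:?xt=urn:btih:fedcba9876543210" rel="magnet">Open</a></td></tr>
</table>
"""

if __name__ == "__main__":
    for link in extract_magnets(SAMPLE_HTML):
        print(link)
```

The filter does the same job as the XPath expression `//a[@rel='magnet']` in the script: only anchors whose `rel` attribute is exactly `magnet` are kept, so the ordinary "Detail" link is skipped.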

It scraped about 10 pages, and that's about it.
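Because the script opens `steve.txt` in append mode, running it a second time writes every link again. One possible way around that, sketched here in Python 3 as an assumption rather than as part of the original script, is to read the links already in the file and append only the new ones. The helper name `append_new_links` is hypothetical.

```python
import os


def append_new_links(path, links):
    """Append only links not already in the file; return how many were added."""
    seen = set()
    if os.path.exists(path):
        with open(path, "r", encoding="utf-8") as f:
            # Strip whitespace so trailing spaces or blank lines do not matter.
            seen = {line.strip() for line in f if line.strip()}

    added = 0
    with open(path, "a", encoding="utf-8") as f:
        for link in links:
            if link not in seen:
                f.write(link + "\n")
                seen.add(link)  # also guards against duplicates within one batch
                added += 1
    return added
```

With this in place, calling `append_new_links('steve.txt', links)` once per page should be safe to repeat: a rerun over the same pages adds nothing, and only links that were not seen before end up in the file.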