前面配合我司自然拼读phonics产品做了52个字母的定位识别,后面看vuforia AR里有根据图片制作特征图,试了下,用生成特征矩阵的方式更简单高效(无需生成训练图集)。当然能使用特征矩阵的图片是有限制的就是特征足够多,像我们这种色块/字母等的就不行了。

回忆起之前可以根据绘本封面图片找绘本的应用,觉得值得研究下opencv特征提取的方法,和机器学习的方法互为补充。

用了3种方法:

第一种是Hessian Affine detector with SIFT descriptor(https://github.com/perdoch/hesaff) 这个用的opencv太老了,一个开发者(https://github.com/ray0809/Hessian_sift)更新了下,可以在opencv 3.x上跑了,可以直接用后者提供的代码(demo_python.py)做对比图实验。

第二种方法用opencv自带的surf方法:

import numpy as np

import cv2 as cv

import time

path1 = '../Hessian_sift-master/test_pics/204.jpg'

path2 = '../Hessian_sift-master/test_pics/205.jpg'

img1 = cv.imread(path1,0)

img2 = cv.imread(path2,0)

minHessian = 5000

detector = cv.xfeatures2d_SURF.create(hessianThreshold=minHessian)

start_time = time.time()

keypoints1, descriptors1 = detector.detectAndCompute(img1, None)

count = len(keypoints1)

print("Detected {:.5f} points, in {:.5f} sec.".format(count, time.time() - start_time))

keypoints2, descriptors2 = detector.detectAndCompute(img2, None)

count = len(keypoints2)

print("Detected {:.5f} points, in {:.5f} sec.".format(count, time.time() - start_time))

FLANN_INDEX_KDTREE = 0

index_params = dict(algorithm = FLANN_INDEX_KDTREE, trees = 5)

search_params = dict(checks = 20) #指定递归次数

begin = time.time()

flann = cv.FlannBasedMatcher(index_params, search_params)

matches = flann.knnMatch(descriptors1, descriptors2, k=2)

# Need to draw only good matches, so create a mask

matchesMask = [[0, 0] for i in range(len(matches))]

del_same = []

mat = 0

for i, (m, n) in enumerate(matches):

# ratio test as per Lowe's paper

if m.distance < 0.5 * n.distance:

mat += 1

matchesMask[i] = [1, 0]

del_same.append(m.trainIdx)

count = len(set(del_same))

print('匹配耗时:{:.5f}, 匹配到的点个数:{}'.format(time.time() - begin, count))

draw_params = dict(#matchColor = (0, 255, 0),

#singlePointColor = (255, 0, 0),

matchesMask = matchesMask,

flags = cv.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS #0

)

img3 = cv.drawMatchesKnn(img1, keypoints1, img2, keypoints2, matches, None, **draw_params)

cv.namedWindow('img',cv.WINDOW_AUTOSIZE)

cv.imshow('img',img3)

cv.waitKey(0)

cv.destroyAllWindows()

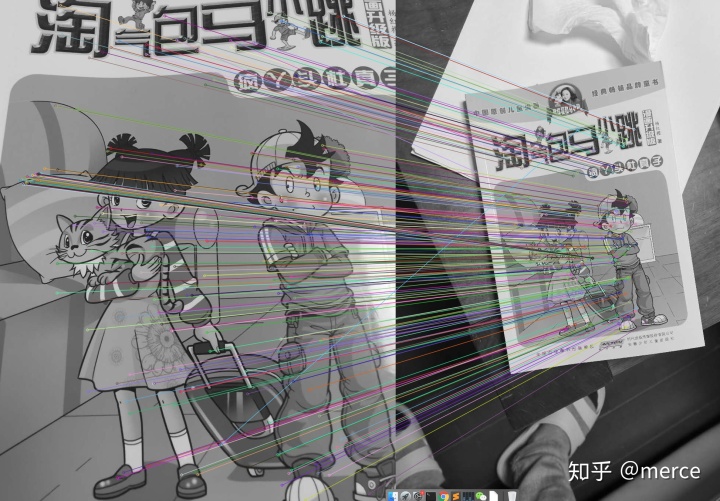

运行:minHessian = 5000的结果:

(base) panyongengde:orb_demo panyongfeng$ python demo_surf.py

Detected 1482.00000 points, in 0.30609 sec.

Detected 794.00000 points, in 0.52564 sec.

匹配耗时:0.05584, 匹配到的点个数:60看性能和结果,和第一种方法基本一致(minHessian取400),特征提取大概在100-200毫秒级,

第三种方法 orb的方法,特征提取快一个量级:

import numpy as np

import cv2 as cv

import time

path1 = '../Hessian_sift-master/test_pics/204.jpg'

path2 = '../Hessian_sift-master/test_pics/205.jpg'

img1 = cv.imread(path1,1)

img2 = cv.imread(path2,1)

# Initiate ORB detector

orb = cv.ORB_create()

# find the keypoints with ORB

start_time = time.time()

kp1, des1 = orb.detectAndCompute(img1,None)

count = len(kp1)

print("Detected {:.5f} points, in {:.5f} sec.".format(count, time.time() - start_time))

start_time = time.time()

kp2, des2 = orb.detectAndCompute(img2,None)

count = len(kp2)

print("Detected {:.5f} points, in {:.5f} sec.".format(count, time.time() - start_time))

start_time = time.time()

bf = cv.BFMatcher(cv.NORM_HAMMING, crossCheck=True)

matches = bf.match(des1,des2)

print("Matches in {:.5f} sec.".format(time.time() - start_time))

matches = sorted(matches, key = lambda x:x.distance)

good = []

for match in matches:

if match.distance < 40:

good.append(match)

print(len(good))

#img3 = cv.drawMatches(img1,kp1,img2,kp2,good,None,flags=cv.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS)

img3 = cv.drawMatches(img1,kp1,img2,kp2,good,None,flags=0)

cv.namedWindow('img',cv.WINDOW_AUTOSIZE)

cv.imshow('img',img3)

cv.waitKey(0)

cv.destroyAllWindows()

运行:

(base) panyongengde:orb_demo panyongfeng$ python demo_orb.py

Detected 500.00000 points, in 0.05798 sec.

Detected 500.00000 points, in 0.02815 sec.

Matches in 0.00221 sec.

43

感觉都达到了要求,orb速度高,端内可完成实时运算。