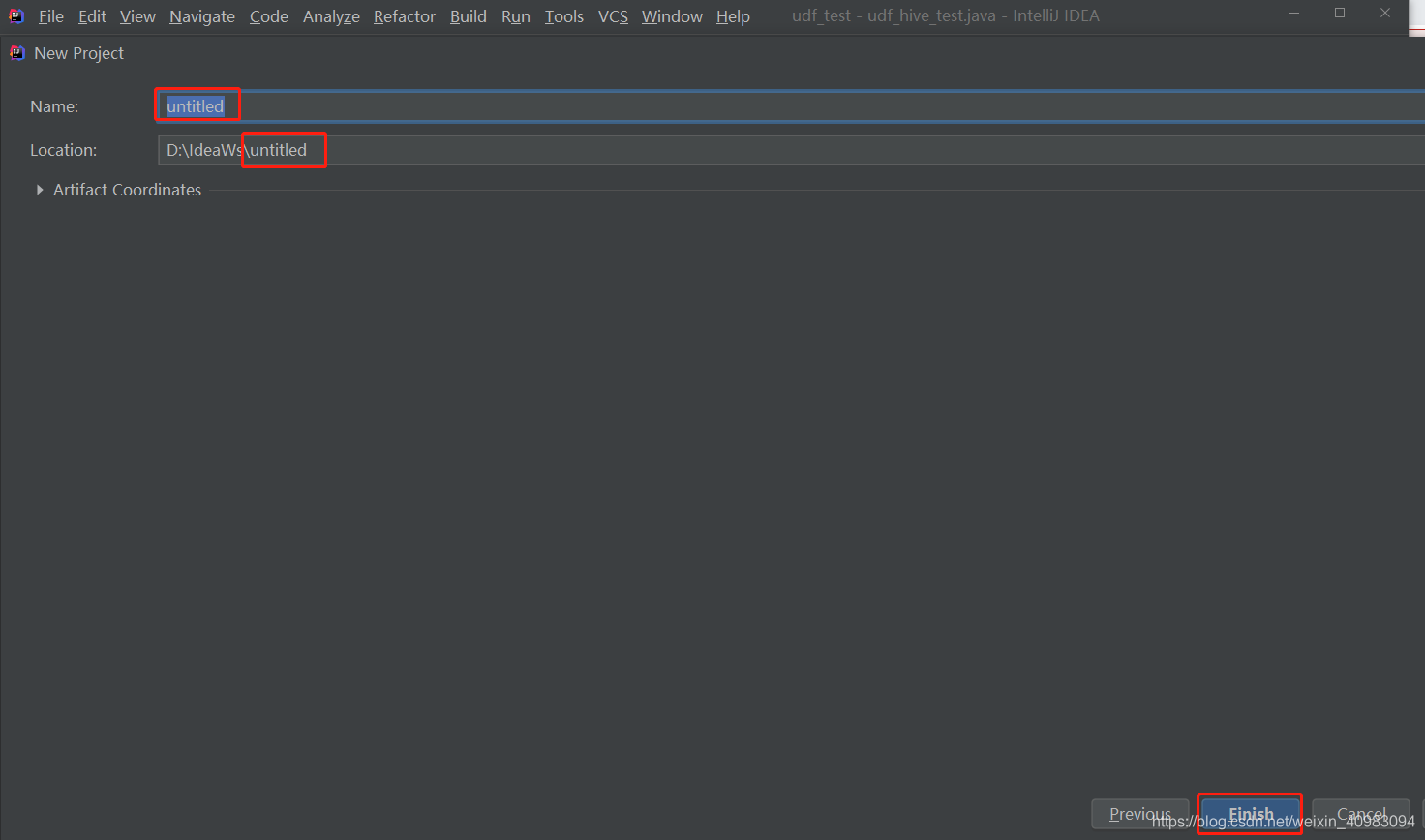

1. Create a Maven project

In IntelliJ IDEA, choose File -> New -> Project and select Maven.

Enter the project name.

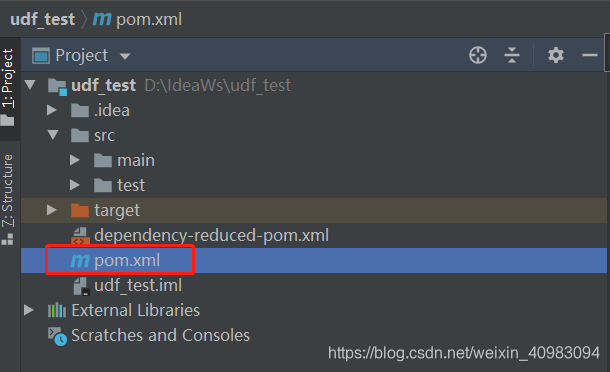

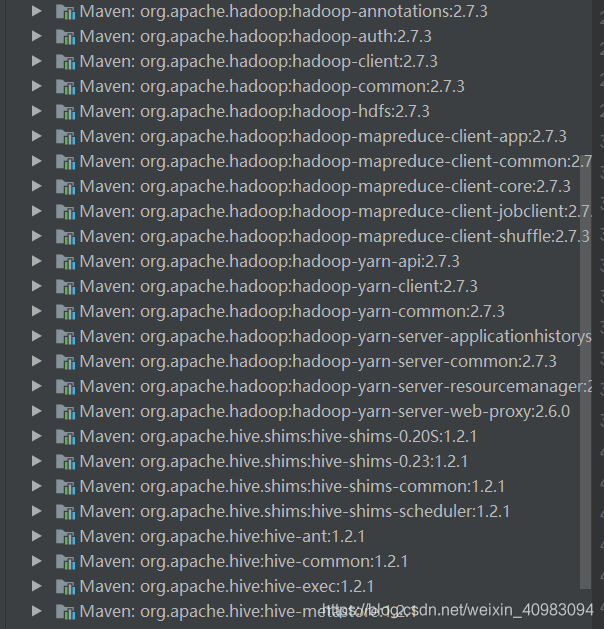

2. Add the dependency jars to the pom file

The pom.xml file is as follows:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.example</groupId>
    <artifactId>udf_test</artifactId>
    <version>1.0-SNAPSHOT</version>

    <dependencies>
        <!-- Versions should generally match the Hadoop/Hive versions on the cluster.
             Consider <scope>provided</scope> for both, since the cluster already ships these jars. -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.7.3</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-exec</artifactId>
            <version>1.2.1</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-shade-plugin</artifactId>
                <version>2.4.3</version>
                <executions>
                    <execution>
                        <phase>package</phase>
                        <goals>
                            <goal>shade</goal>
                        </goals>
                        <configuration>
                            <filters>
                                <filter>
                                    <!-- Drop signature files of signed dependencies; left in a merged jar they break signature verification -->
                                    <artifact>*:*</artifact>
                                    <excludes>
                                        <exclude>META-INF/*.SF</exclude>
                                        <exclude>META-INF/*.DSA</exclude>
                                        <exclude>META-INF/*.RSA</exclude>
                                    </excludes>
                                </filter>
                            </filters>
                            <transformers>
                                <transformer implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">
                                    <resource>META-INF/spring.handlers</resource>
                                </transformer>
                                <transformer implementation="org.apache.maven.plugins.shade.resource.ManifestResourceTransformer">
                                    <!-- Main-Class of the jar: the UDF class below, which has a main() for local testing -->
                                    <mainClass>com.test.udf.Udf_Hive_Test</mainClass>
                                </transformer>
                                <transformer implementation="org.apache.maven.plugins.shade.resource.AppendingTransformer">
                                    <resource>META-INF/spring.schemas</resource>
                                </transformer>
                            </transformers>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>
```

This assumes the Maven environment is already configured. Once the import finishes, many jar packages appear in the panel on the left, and the Maven sidebar on the right shows no red errors.
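In the pom, the ManifestResourceTransformer only writes a Main-Class attribute into the jar's manifest. That matters for `java -jar`, not for Hive, which loads the UDF by the class name given in CREATE FUNCTION. A minimal JDK-only sketch to read the attribute back from the built jar, assuming the default Maven output path target/udf_test-1.0-SNAPSHOT.jar (pass another path as the first argument); the class name ManifestMainClass is hypothetical:

```java
import java.io.IOException;
import java.util.jar.Attributes;
import java.util.jar.JarFile;
import java.util.jar.Manifest;

// Sketch: print the Main-Class attribute written by ManifestResourceTransformer.
// The default jar path is an assumption (Maven's target/<artifactId>-<version>.jar).
class ManifestMainClass {

    // Returns the Main-Class value, or null if the jar has no manifest or no such attribute.
    static String mainClass(String jarPath) throws IOException {
        try (JarFile jar = new JarFile(jarPath)) {
            Manifest mf = jar.getManifest();
            return mf == null ? null : mf.getMainAttributes().getValue(Attributes.Name.MAIN_CLASS);
        }
    }

    public static void main(String[] args) throws IOException {
        String path = args.length > 0 ? args[0] : "target/udf_test-1.0-SNAPSHOT.jar";
        System.out.println("Main-Class: " + mainClass(path));
    }
}
```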

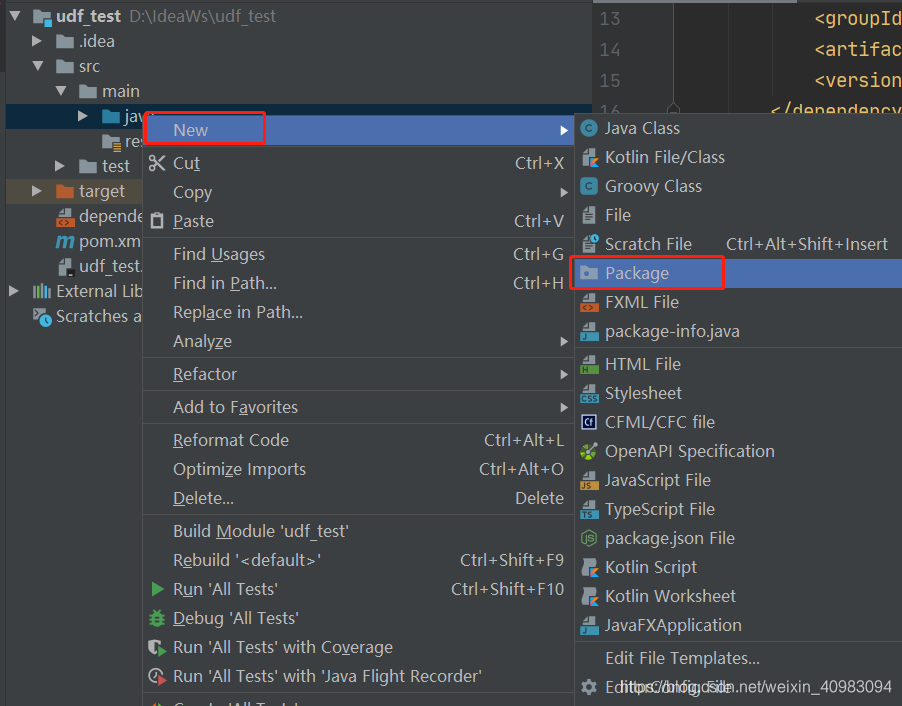

3. UDF development

Under the java folder, choose New -> Package to create the package, then New -> Java Class to create the class.

The code is as follows:

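```java
package com.test.udf;

import org.apache.hadoop.hive.ql.exec.UDF;
import org.apache.hadoop.io.Text;

/**
 * @Author: Lens
 * @Date: 2021/6/18 11:13
 */
public class Udf_Hive_Test extends UDF {

    // Hive finds evaluate() by reflection and calls it once per row
    public Text evaluate(Text input) {
        // Keep SQL NULL as NULL instead of producing "hello:null"
        if (input == null) {
            return null;
        }
        return new Text("hello:" + input);
    }

    // Local test: run from the IDE without a Hive cluster
    public static void main(String[] args) {
        Udf_Hive_Test udf = new Udf_Hive_Test();
        Text result = udf.evaluate(new Text("lens"));
        System.out.println(result.toString());
    }
}
```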

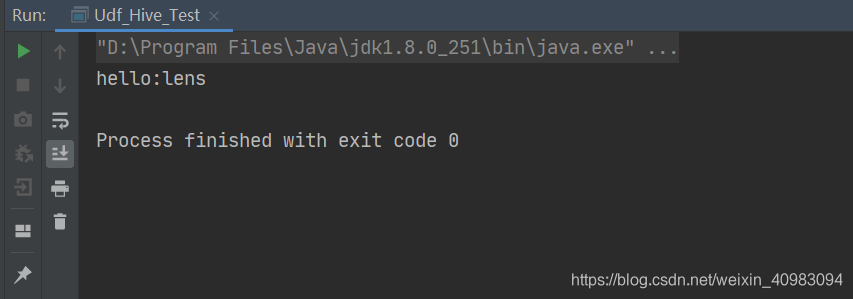

Right-click and run it. It runs successfully with the following output:

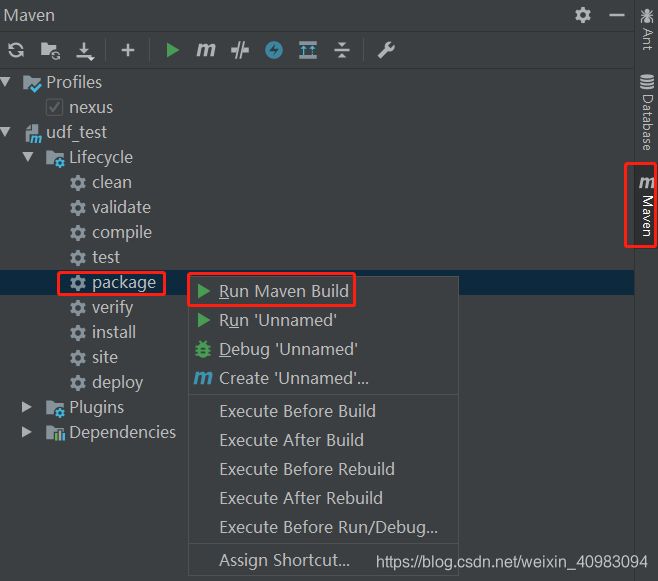

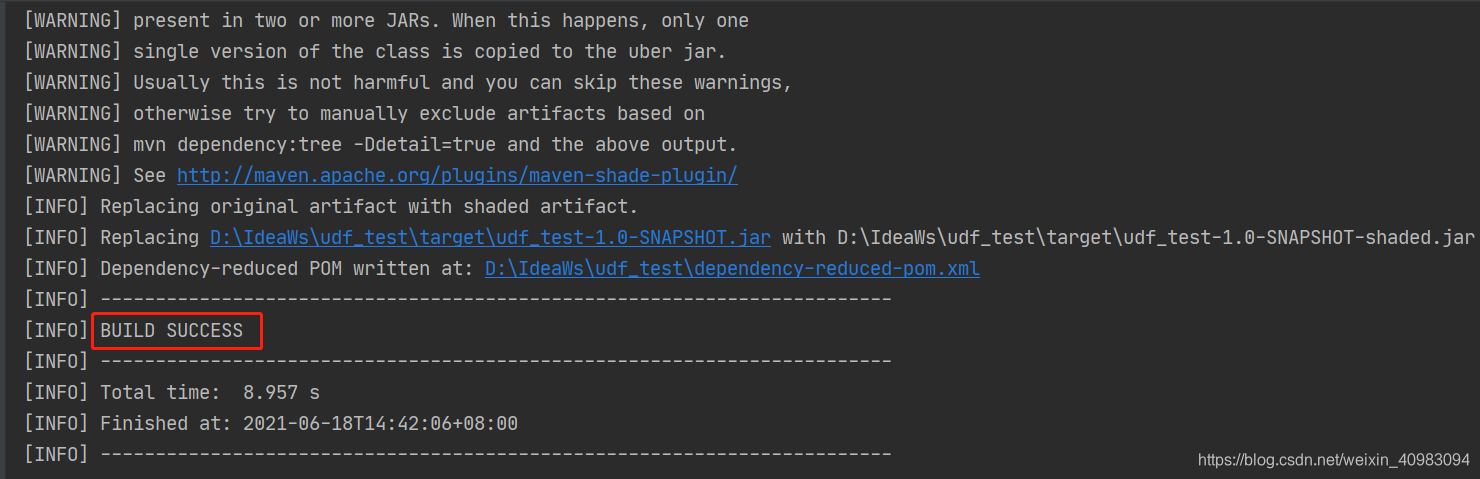
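With the old-style UDF API, Hive looks up evaluate() and calls it once for each row the query touches. The sketch below simulates that outside Hive: String stands in for org.apache.hadoop.io.Text so it runs with only the JDK, and the NULL handling (a null input returns null, so SQL NULL stays NULL) is an assumption about the desired behavior rather than something Hive enforces. The class name UdfRowSimulation is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// JDK-only simulation of how a simple UDF is applied row by row.
// String replaces Hadoop's Text so no hive-exec dependency is needed.
class UdfRowSimulation {

    // Mirrors the UDF's evaluate logic; null in -> null out (assumed behavior).
    static String evaluate(String input) {
        if (input == null) {
            return null;
        }
        return "hello:" + input;
    }

    // Roughly what "select test_udf(name) from ..." does: one evaluate call per row.
    static List<String> selectUdf(List<String> nameColumn) {
        List<String> out = new ArrayList<>();
        for (String name : nameColumn) {
            out.add(evaluate(name));
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("lens", null, "hive");
        System.out.println(selectUdf(names)); // prints [hello:lens, null, hello:hive]
    }
}
```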

4. Export the jar
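Since the maven-shade-plugin is bound to the package phase, running the package lifecycle (for example `mvn clean package`, or Lifecycle > package in the Maven sidebar) should produce target/udf_test-1.0-SNAPSHOT.jar, the file name used when loading the jar on the server. Before uploading, a sketch like the following can check that the UDF class is inside the jar and that no signature files survived the shade filters. The class name JarCheck and the default path are assumptions, and the check treats the exclude globs as matching files directly under META-INF.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

// Sketch: sanity-check a built UDF jar before uploading it.
class JarCheck {

    // True for entries matching META-INF/*.SF, META-INF/*.DSA or META-INF/*.RSA
    static boolean isSignatureFile(String name) {
        if (!name.startsWith("META-INF/")) {
            return false;
        }
        String rest = name.substring("META-INF/".length());
        if (rest.contains("/")) {
            return false; // only files directly under META-INF
        }
        return rest.endsWith(".SF") || rest.endsWith(".DSA") || rest.endsWith(".RSA");
    }

    // Returns a list of problems; an empty list means the jar looks usable.
    static List<String> check(String jarPath, String className) throws IOException {
        List<String> problems = new ArrayList<>();
        String classEntry = className.replace('.', '/') + ".class";
        try (JarFile jar = new JarFile(jarPath)) {
            if (jar.getJarEntry(classEntry) == null) {
                problems.add("missing " + classEntry);
            }
            Enumeration<JarEntry> entries = jar.entries();
            while (entries.hasMoreElements()) {
                String name = entries.nextElement().getName();
                if (isSignatureFile(name)) {
                    problems.add("signature file left in jar: " + name);
                }
            }
        }
        return problems;
    }

    public static void main(String[] args) throws IOException {
        String path = args.length > 0 ? args[0] : "target/udf_test-1.0-SNAPSHOT.jar";
        List<String> problems = check(path, "com.test.udf.Udf_Hive_Test");
        System.out.println(problems.isEmpty() ? "OK" : String.join("\n", problems));
    }
}
```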

5. Upload the jar to the server, load it, and create a temporary function

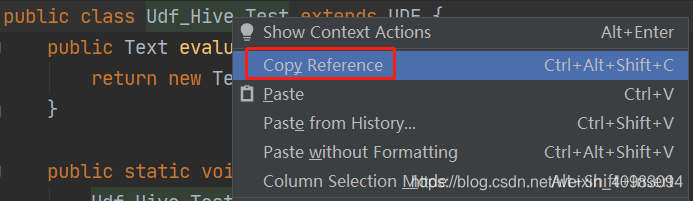

Find the class, right-click it and choose Copy Reference to get its fully qualified name: com.test.udf.Udf_Hive_Test
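The string after AS in CREATE FUNCTION is loaded by Hive as a Java class name through a class loader, so it must be the binary name (package + class). For a top-level class like Udf_Hive_Test, the copied reference and the binary name are the same; for a nested class they differ (`Outer$Inner` versus `Outer.Inner`), and only the `$` form can be loaded. A JDK-only sketch showing the difference, using a hypothetical nested class and the hypothetical helper name ClassNameCheck:

```java
// Sketch: only the binary name (getName) of a nested class can be loaded by name;
// the dotted canonical name cannot.
class ClassNameCheck {

    static class Nested {} // hypothetical nested class, for illustration only

    // True if the current class loader can load a class with this name.
    static boolean resolvable(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String binary = Nested.class.getName();             // uses '$' before Nested
        String canonical = Nested.class.getCanonicalName(); // uses '.' before Nested
        System.out.println(binary + " -> " + resolvable(binary));
        System.out.println(canonical + " -> " + resolvable(canonical));
    }
}
```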

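```sql
-- Register the jar (a path on the machine where the Hive client runs)
add jar /root/hadoop/lib/udf_test-1.0-SNAPSHOT.jar;

-- Create a temporary function: package name + class name
-- (a temporary function only exists in the current session)
create temporary function test_udf as 'com.test.udf.Udf_Hive_Test';

-- Test the function
select test_udf(name) from dw.test_table;

-- Create a permanent function
-- Note: since Hive 0.13 you can append USING JAR 'hdfs://...' (placeholder path) so other
-- sessions can load the class; otherwise the jar must already be on Hive's classpath.
CREATE FUNCTION test_udf2 AS 'com.test.udf.Udf_Hive_Test';
```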
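The same registration can be scripted from Java over JDBC instead of the Hive CLI. A minimal sketch, assuming a reachable HiveServer2 endpoint and the Hive JDBC driver (hive-jdbc, which is not in the pom above) on the runtime classpath; the class name RegisterUdf is hypothetical. Over HiveServer2, ADD JAR refers to a path on the HiveServer2 host, and the temporary function is only visible within the same connection.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Arrays;
import java.util.List;

// Sketch: register the UDF through JDBC. Requires hive-jdbc at runtime (assumption).
class RegisterUdf {

    // Builds the registration statements without trailing semicolons,
    // which Hive's JDBC driver may reject.
    static List<String> statements(String jarPath, String functionName, String className) {
        return Arrays.asList(
                "ADD JAR " + jarPath,
                "CREATE TEMPORARY FUNCTION " + functionName + " AS '" + className + "'");
    }

    // Executes each statement on one connection; the temporary function lives in this session.
    static void run(Connection conn, List<String> sqls) throws SQLException {
        try (Statement st = conn.createStatement()) {
            for (String sql : sqls) {
                st.execute(sql);
            }
        }
    }

    public static void main(String[] args) throws SQLException {
        List<String> sqls = statements(
                "/root/hadoop/lib/udf_test-1.0-SNAPSHOT.jar", "test_udf", "com.test.udf.Udf_Hive_Test");
        if (args.length == 0) {
            sqls.forEach(System.out::println); // dry run: just print the statements
            return;
        }
        try (Connection conn = DriverManager.getConnection(args[0])) {
            run(conn, sqls);
        }
    }
}
```

Run it without arguments to print the statements, or pass a URL such as jdbc:hive2://host:10000/default (host is a placeholder) to execute them.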