Preface

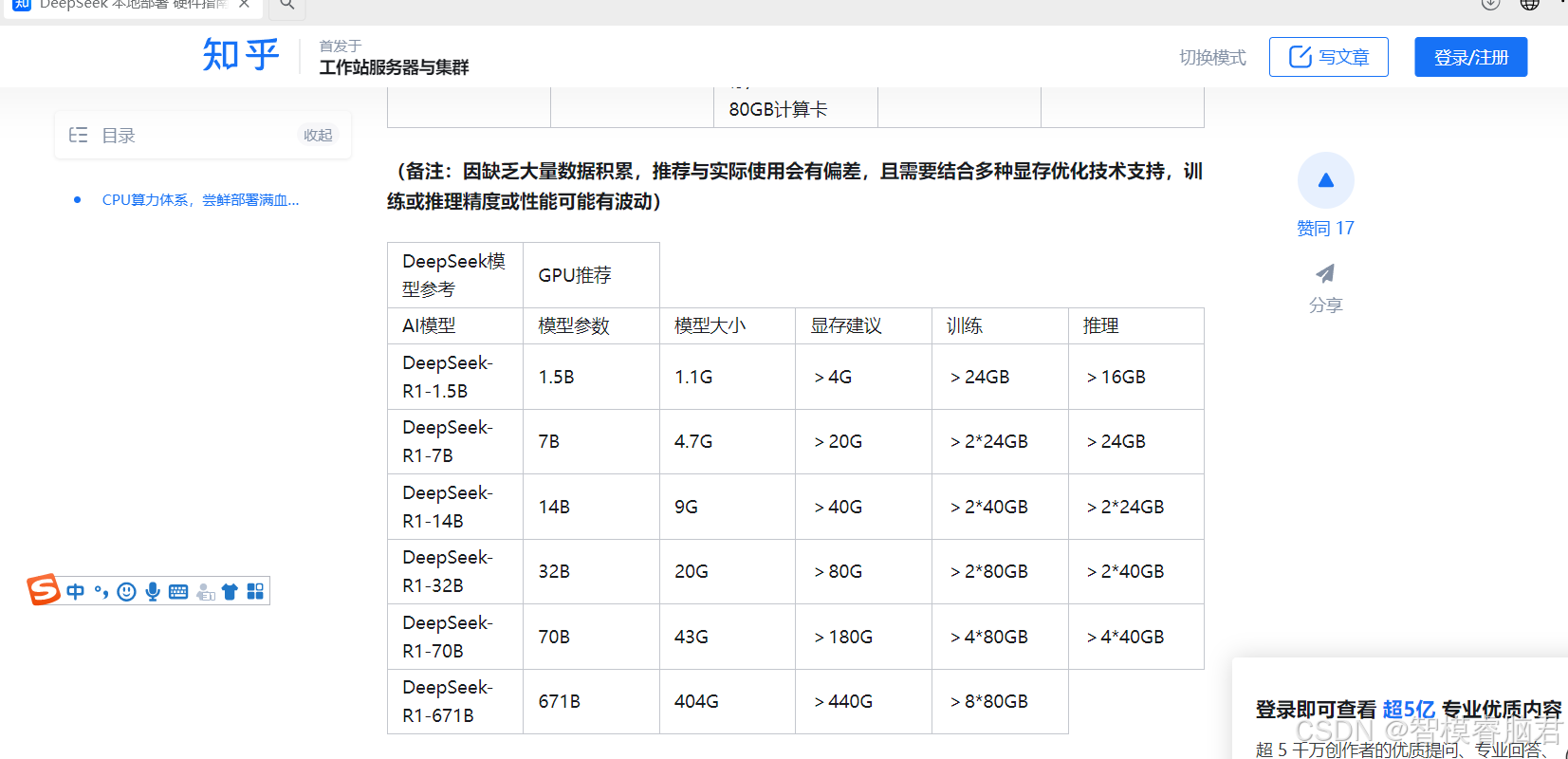

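Search online for the full-strength version of DeepSeek-R1 and you will find plenty of tables like this one, but I haven't seen anyone actually try it out to verify them. Below I test the two most common ways of loading a large model locally.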

I. Loading with Hugging Face

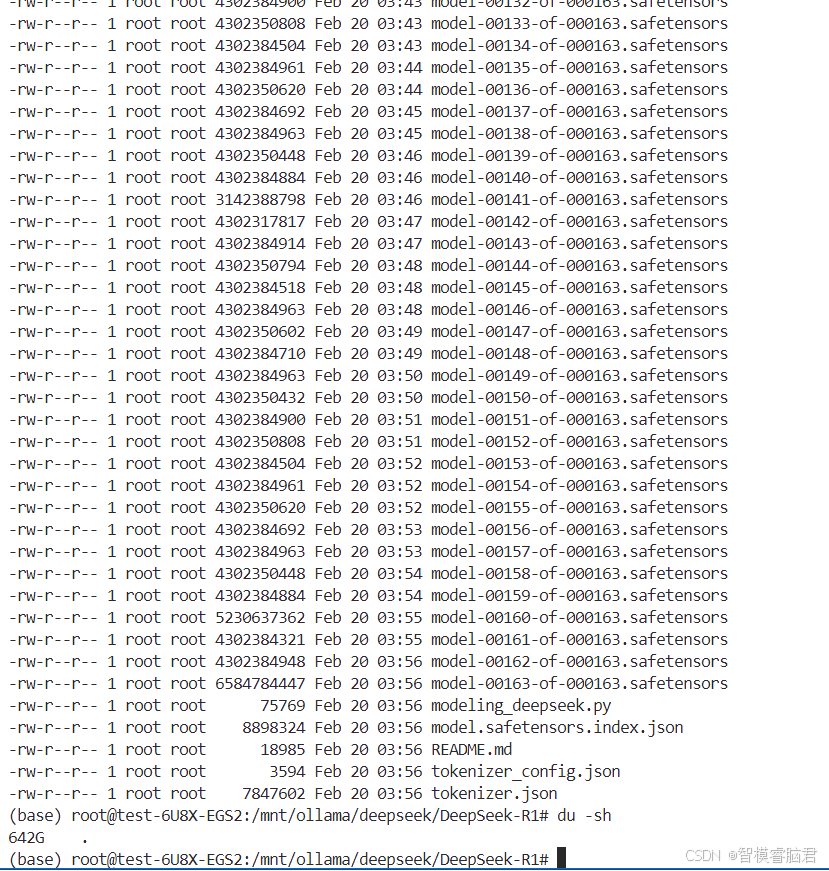

1. Downloading the model

Model source: ModelScope. The finished download comes to 642G.

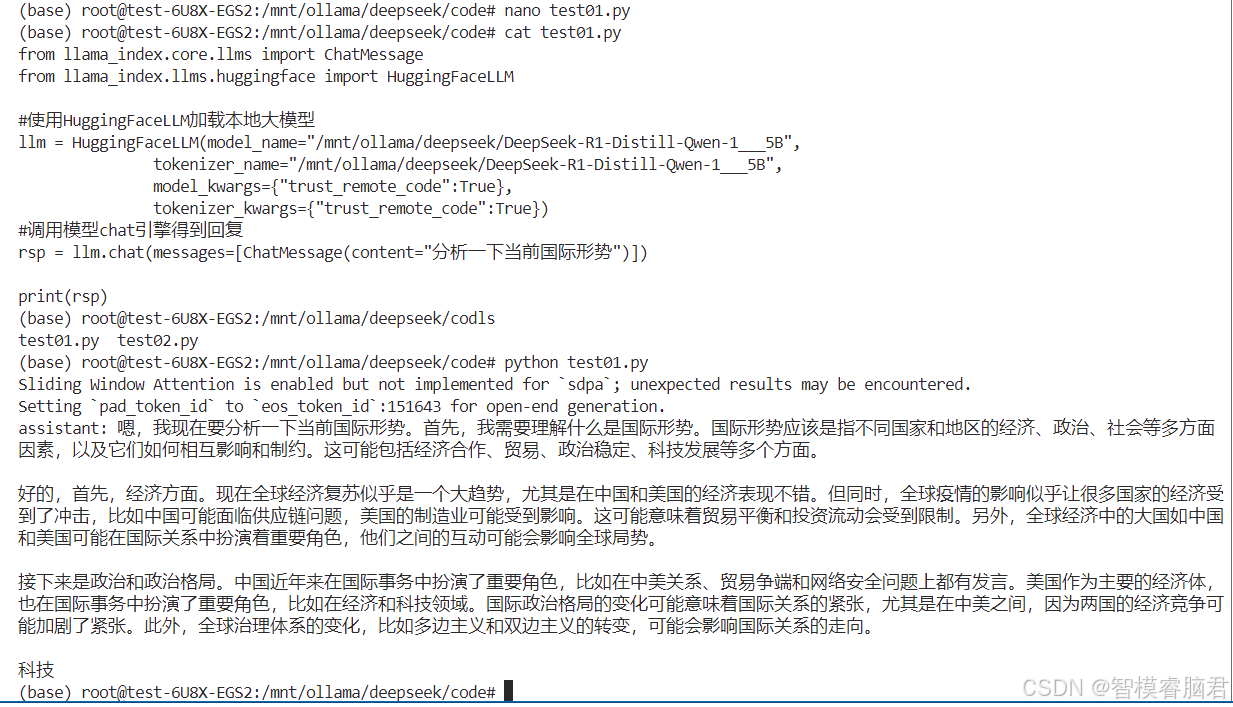
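A minimal sketch of the download step and of checking the result's size on disk. It assumes the `modelscope` package (it appears in the pip list later in this post) and the ModelScope model ID `deepseek-ai/DeepSeek-R1`; the ID, the target directory, and the helper names are assumptions rather than the setup actually used here. `snapshot_download` is imported lazily so the size helper also works without `modelscope` installed.

```python
import os
from pathlib import Path


def download_from_modelscope(model_id: str, cache_dir: str) -> str:
    """Download a model snapshot from ModelScope and return its local path."""
    # Lazy import: only needed when actually downloading (model ID is an assumption).
    from modelscope import snapshot_download
    return snapshot_download(model_id, cache_dir=cache_dir)


def dir_size_bytes(path: str) -> int:
    """Sum the sizes of all regular files under `path`; symlinks are skipped."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = Path(root) / name
            if not file_path.is_symlink():
                total += file_path.stat().st_size
    return total


def to_gib(n_bytes: int) -> float:
    """Convert bytes to GiB (1024**3 bytes)."""
    return n_bytes / 1024**3
```

Usage, with a hypothetical target directory: `local = download_from_modelscope("deepseek-ai/DeepSeek-R1", "/mnt/ollama/deepseek")`, then `print(f"{to_gib(dir_size_bytes(local)):.0f} GiB")`. The post does not say whether "642G" means GB or GiB; this helper reports GiB.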

2. Small-model test

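First, a DeepSeek distilled model: the 1.5B one with Qwen as the student and DeepSeek as the teacher (DeepSeek-R1-Distill-Qwen-1.5B), just to make sure the whole call path works.

The call was very fast and returned a result within seconds, so the environment is configured correctly.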
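The post does not show the Hugging Face call itself (it mentions llama_index later, but not how it was used). As a stand-in, here is a minimal smoke-test sketch with plain `transformers`, assuming the distilled model sits at the local path used in the vLLM test code further down and that `accelerate` is installed for `device_map="auto"`. The function name and sampling values are illustrative, not the author's settings.

```python
def hf_generate(model_path: str, prompt: str, max_new_tokens: int = 256) -> str:
    """Load a causal LM with transformers and return the completion for one chat prompt."""
    # Lazy imports: transformers/torch are only needed when the function is called.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_path,
        torch_dtype="auto",   # use the dtype recorded in the checkpoint config
        device_map="auto",    # place on GPU(s) if present, else CPU (requires accelerate)
        trust_remote_code=True,
    )
    messages = [{"role": "user", "content": prompt}]
    input_ids = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    output_ids = model.generate(
        input_ids, max_new_tokens=max_new_tokens, do_sample=True, temperature=0.6, top_p=0.95
    )
    # Decode only the newly generated tokens, not the echoed prompt.
    return tokenizer.decode(output_ids[0][input_ids.shape[-1]:], skip_special_tokens=True)
```

Usage: `print(hf_generate("/mnt/ollama/deepseek/DeepSeek-R1-Distill-Qwen-1___5B", "描述一下北京的秋天"))`. Because `device_map="auto"` falls back to CPU when no GPU is visible, the same call also works on a CPU-only machine for a model this small, only slower.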

3. Now for the big model

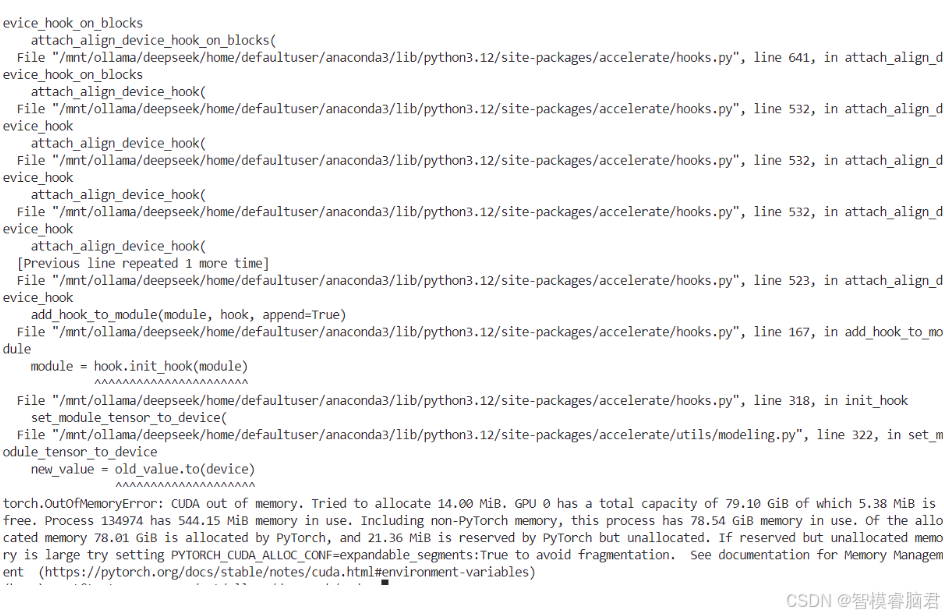

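Probably because the model is so large, loading did not go through in one smooth run the way the small model did.

After a long wait there was no pleasant surprise, though it wasn't unexpected either.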

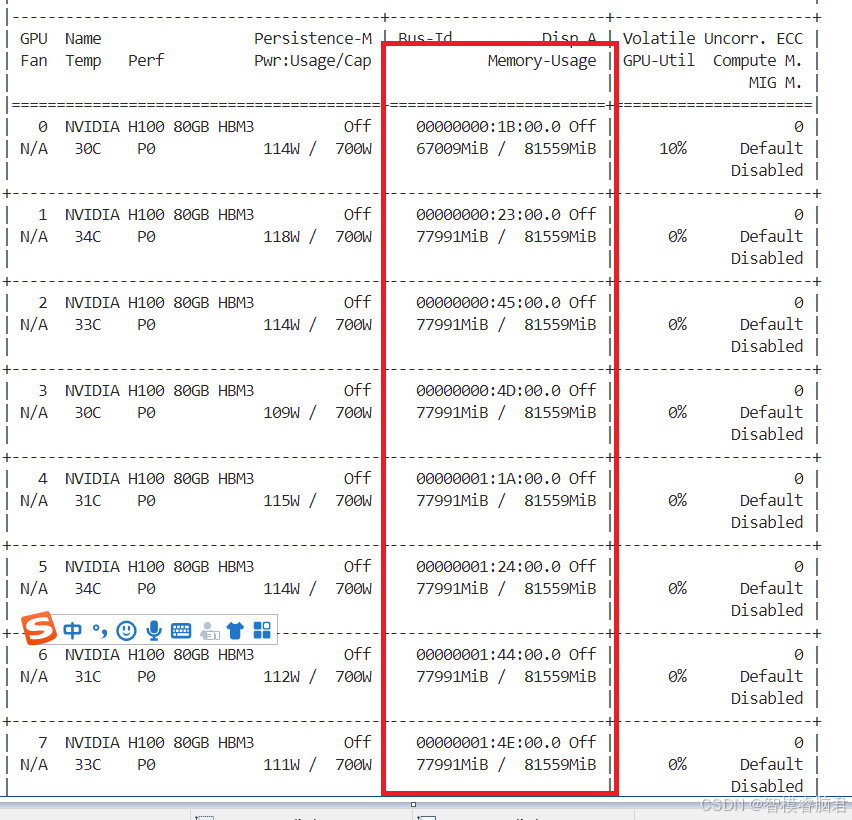

Partway through I checked GPU memory usage: all 8 H100s were at around 95%, so memory probably ran out abruptly at some point.
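The post does not say how usage was checked. One way to log it while the model loads is to poll `nvidia-smi` with its CSV query flags, which report memory in MiB. A minimal sketch; the function names are hypothetical.

```python
import subprocess


def parse_gpu_memory(csv_text: str) -> list:
    """Parse `nvidia-smi --query-gpu=index,memory.used,memory.total --format=csv,noheader,nounits`.

    Returns one (index, used_MiB, total_MiB, percent_used) tuple per GPU.
    """
    rows = []
    for line in csv_text.strip().splitlines():
        index, used, total = (int(field.strip()) for field in line.split(","))
        rows.append((index, used, total, 100.0 * used / total))
    return rows


def query_gpu_memory() -> list:
    """Run nvidia-smi once and return the parsed per-GPU memory usage."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_memory(out)
```

Calling `query_gpu_memory()` in a loop (for example every few seconds from a second terminal) shows which card fills up first; `nvidia-smi -l 5` gives a similar rolling view without any code.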

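After asking around, the general consensus was that the failure was caused by insufficient GPU memory.

So I switched to the second approach.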
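A back-of-envelope check supports that diagnosis. Assuming the 642G download is essentially all weights and each H100 has 80 GB, the eight cards together (640 GB) cannot even hold the raw checkpoint, before the KV cache, activations, and CUDA context are counted; the GB/GiB distinction does not change that picture. The official checkpoint is stored in FP8; if a loader upcasts it to BF16 (2 bytes per parameter), DeepSeek-R1's 671B parameters would need roughly 1.34 TB. The 90% usable fraction below is an assumption, and the helper names are hypothetical.

```python
def weight_bytes(n_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights."""
    return n_params * bytes_per_param


def fits_on_gpus(needed_gb: float, n_gpus: int, gb_per_gpu: float,
                 usable_fraction: float = 0.9) -> bool:
    """True if `needed_gb` fits in the usable share of all GPUs' memory combined.

    usable_fraction is an assumed headroom for KV cache, activations and CUDA context.
    """
    return needed_gb <= n_gpus * gb_per_gpu * usable_fraction


total_vram_gb = 8 * 80                        # 8 x H100 80GB = 640 GB
checkpoint_gb = 642                           # size of the downloaded checkpoint
bf16_gb = weight_bytes(671e9, 2) / 1e9        # ~1342 GB if upcast to BF16

print(total_vram_gb, fits_on_gpus(checkpoint_gb, 8, 80), round(bf16_gb))  # prints: 640 False 1342
```

Even with `usable_fraction=1.0`, 642 > 640, so the weights alone overflow the cards; no loader setting can make that fit without offloading or further quantization.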

II. Loading with vLLM

1. Installing the packages

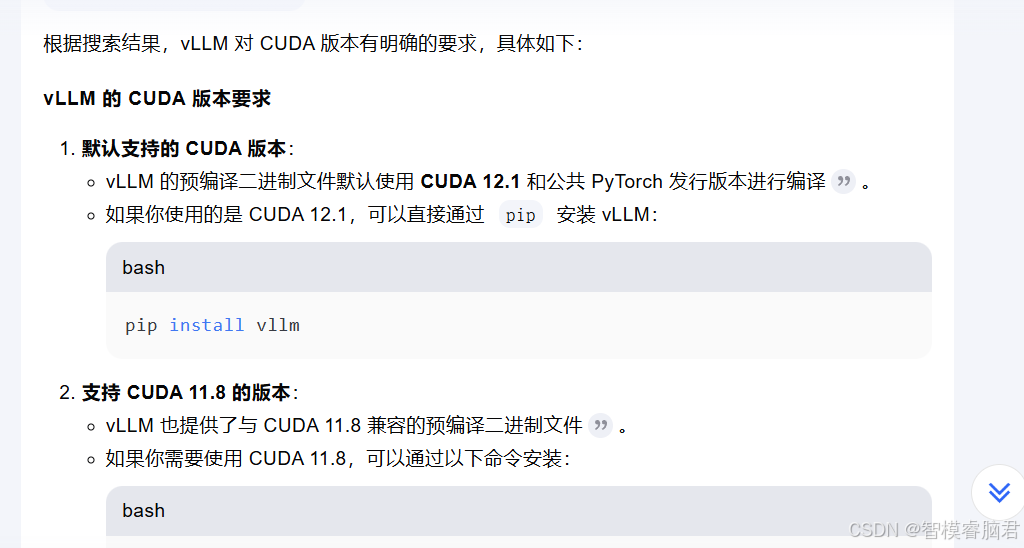

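There are two reasons loading local models with vLLM is less popular than with Hugging Face. First, vLLM is picky: its prebuilt wheels only target CUDA 11.8 and 12.1. Second, it needs many more packages, unlike the Hugging Face route, where llama_index takes care of everything.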

```
pip install torch torchvision transformers huggingface-hub sentencepiece jinja2 pydantic timm tiktoken accelerate sentence_transformers gradio openai einops pillow sse-starlette bitsandbytes modelscope vllm -i https://pypi.tuna.tsinghua.edu.cn/simple
```

Expect all kinds of conflicts during installation; just work through them one at a time. If you're lucky, it may all install in one go.

```
WARNING: Ignoring invalid distribution ~riton (/home/defaultuser/anaconda3/lib/python3.12/site-packages)
WARNING: Ignoring invalid distribution ~orch (/home/defaultuser/anaconda3/lib/python3.12/site-packages)
Installing collected packages: triton, opencv-python, torch
WARNING: Ignoring invalid distribution ~riton (/home/defaultuser/anaconda3/lib/python3.12/site-packages)
WARNING: Ignoring invalid distribution ~riton (/home/defaultuser/anaconda3/lib/python3.12/site-packages)
ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.
vllm 0.7.3 requires torch==2.5.1, but you have torch 2.6.0 which is incompatible.
torchaudio 2.5.1 requires torch==2.5.1, but you have torch 2.6.0 which is incompatible.
torchvision 0.20.1 requires torch==2.5.1, but you have torch 2.6.0 which is incompatible.
xformers 0.0.28.post3 requires torch==2.5.1, but you have torch 2.6.0 which is incompatible.
Successfully installed opencv-python-4.11.0.86 torch-2.6.0 triton
```

Fix: pin torch and its companions back to the versions vLLM 0.7.3 expects.

```
pip uninstall torch torchvision torchaudio
pip install torch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1
```
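After the reinstall it is worth confirming that the pins actually took, since pip only reports conflicts at install time. A minimal sketch using the standard library's `importlib.metadata`; the pin table is copied from the conflict messages above, and the helper names are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

# Pins taken from the conflict messages above (vllm 0.7.3 requires torch==2.5.1).
REQUIRED = {"torch": "2.5.1", "torchvision": "0.20.1", "torchaudio": "2.5.1"}


def installed_versions(packages) -> dict:
    """Return {package: installed version, or None if it is not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


def mismatches(installed: dict, required: dict) -> dict:
    """Return {package: (installed, required)} for every package not at its pinned version.

    Local build tags such as '+cu121' are ignored when comparing.
    """
    bad = {}
    for name, want in required.items():
        have = installed.get(name)
        base = have.split("+", 1)[0] if have else None
        if base != want:
            bad[name] = (have, want)
    return bad


if __name__ == "__main__":
    print(mismatches(installed_versions(REQUIRED), REQUIRED) or "all pins satisfied")
```

From the command line, `pip check` performs a similar consistency check across everything installed in the environment.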

2. Small-model test

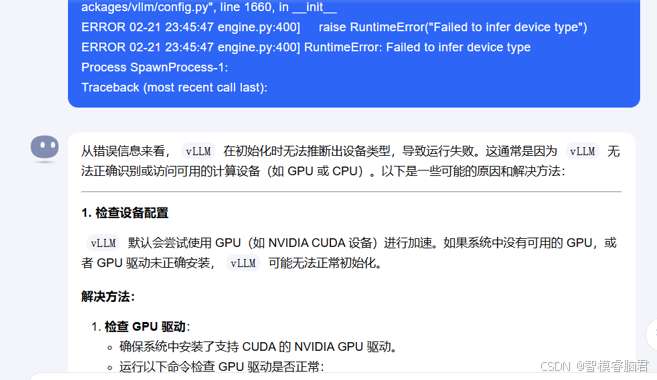

First I tested on a machine without a GPU:

```
ERROR 02-21 23:45:47 engine.py:400]   File "/home/defaultuser/anaconda3/lib/python3.12/site-packages/vllm/engine/multiprocessing/engine.py", line 119, in from_engine_args
ERROR 02-21 23:45:47 engine.py:400]     engine_config = engine_args.create_engine_config(usage_context)
ERROR 02-21 23:45:47 engine.py:400]                     ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
ERROR 02-21 23:45:47 engine.py:400]   File "/home/defaultuser/anaconda3/lib/python3.12/site-packages/vllm/engine/arg_utils.py", line 1126, in create_engine_config
ERROR 02-21 23:45:47 engine.py:400]     device_config = DeviceConfig(device=self.device)
ERROR 02-21 23:45:47 engine.py:400]                     ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
ERROR 02-21 23:45:47 engine.py:400]   File "/home/defaultuser/anaconda3/lib/python3.12/site-packages/vllm/config.py", line 1660, in __init__
ERROR 02-21 23:45:47 engine.py:400]     raise RuntimeError("Failed to infer device type")
ERROR 02-21 23:45:47 engine.py:400] RuntimeError: Failed to infer device type
Process SpawnProcess-1:
Traceback (most recent call last):
```

According to Kimi, the cause is that vLLM requires a GPU. With Hugging Face, however, I have run small models on CPU without problems.

Test code:
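That matches the traceback, which fails while vLLM tries to infer a device type. A small pre-flight check avoids starting the engine on a GPU-less machine. A minimal sketch, assuming only that `torch` may or may not be installed; `pick_backend` is a hypothetical helper, and falling back to transformers on CPU is an illustrative choice, not something the post did.

```python
def describe_torch_env() -> dict:
    """Report the torch version, the CUDA version torch was built with, and visible GPUs."""
    try:
        import torch
    except ImportError:
        return {"torch": None, "cuda_build": None, "gpus": 0}
    gpus = torch.cuda.device_count() if torch.cuda.is_available() else 0
    # torch.version.cuda is None for CPU-only builds of torch.
    return {"torch": torch.__version__, "cuda_build": torch.version.cuda, "gpus": gpus}


def pick_backend(env: dict) -> str:
    """The default vLLM pip wheel targets CUDA GPUs; otherwise fall back to transformers on CPU."""
    return "vllm" if env.get("gpus", 0) > 0 else "transformers (CPU)"


if __name__ == "__main__":
    env = describe_torch_env()
    print(env, "->", pick_backend(env))
```

The `cuda_build` field is also a quick way to see which CUDA runtime the installed torch wheel was compiled against, which is the version that matters for the wheel-compatibility issue discussed above.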

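```python
# Don't save this file as vllm.py: Python would then import the script itself
# instead of the installed vllm package.
import vllm

if __name__ == '__main__':
    llm = vllm.LLM(
        model="/mnt/ollama/deepseek/DeepSeek-R1-Distill-Qwen-1___5B",
        quantization=None,            # Python None, not the string "None"
        tensor_parallel_size=1,       # 1.5B fits on one GPU; 8 does not divide its 12 attention heads
        gpu_memory_utilization=0.95,
        trust_remote_code=True,
        dtype="half",
        max_model_len=512,
    )

    sampling_params = vllm.SamplingParams(  # the class is SamplingParams (capital S)
        max_tokens=512,
        temperature=0.7,
        top_p=0.9,
    )

    # Prompt: "Describe autumn in Beijing"
    outputs = llm.generate("描述一下北京的秋天", sampling_params=sampling_params)
    # generate() returns one RequestOutput per prompt; print the generated text
    print(outputs[0].outputs[0].text)
```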

```
  File "/mnt/ollama/deepseek/code/vllm.py", line 3, in <module>
    llm = vllm.LLM(
          ^^^^^^^^
AttributeError: module 'vllm' has no attribute 'LLM'
```
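One cause worth ruling out before touching CUDA: the traceback shows the script itself is named `vllm.py`. Python puts the script's directory at the front of `sys.path`, so `import vllm` can pick up the script instead of the installed package, and that module has no `LLM`. A minimal sketch that reports where a module name actually resolves; both helper names are hypothetical.

```python
import importlib.util
from pathlib import Path
from typing import Optional


def resolve_module(name: str) -> Optional[str]:
    """Return the file Python would import for `name`, or None if it cannot be found."""
    spec = importlib.util.find_spec(name)
    return spec.origin if spec else None


def looks_shadowed(origin: Optional[str], script_dir: str) -> bool:
    """True if the module resolves to a file sitting directly in the script's own directory."""
    return origin is not None and Path(origin).resolve().parent == Path(script_dir).resolve()
```

Run from `/mnt/ollama/deepseek/code`, `resolve_module("vllm")` should point into `site-packages/vllm/__init__.py`; if it returns `/mnt/ollama/deepseek/code/vllm.py` instead, renaming the script (to something like `run_vllm.py`) is the fix.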

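vLLM is installed, yet `LLM` is never found. Searching online, some people attribute this to a version problem, specifically the CUDA version.

So the only option left was to reinstall CUDA.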

```
defaultuser@qin-h100-jumper-server:/mnt/ollama/deepseek/vllm$ sudo wget https://developer.download.nvidia.com/compute/cuda/12.1.0/local_installers/cuda_12.1.0_530.30.02_linux.run
--2025-02-22 02:51:37-- https://developer.download.nvidia.com/compute/cuda/12.1.0/local_installers/cuda_12.1.0_530.30.02_linux.run
Resolving developer.download.nvidia.com (developer.download.nvidia.com)... 104.115.38.34, 104.115.38.74
Connecting to developer.download.nvidia.com (developer.download.nvidia.com)|104.115.38.34|:443... connected.
HTTP request sent, awaiting response... 301 Moved Permanently
Location: https://developer.download.nvidia.cn/compute/cuda/12.1.0/local_installers/cuda_12.1.0_530.30.02_linux.run [following]
--2025-02-22 02:51:39-- https://developer.download.nvidia.cn/compute/cuda/12.1.0/local_installers/cuda_12.1.0_530.30.02_linux.run
Resolving developer.download.nvidia.cn (developer.download.nvidia.cn)... 175.4.58.179, 125.64.2.195, 125.64.2.194, ...
Connecting to developer.download.nvidia.cn (developer.download.nvidia.cn)|175.4.58.179|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 4245586997 (4.0G) [application/octet-stream]
Saving to: ‘cuda_12.1.0_530.30.02_linux.run’

cuda_12.1.0_530.30.02_linux.run 100%[=====================================================>] 3.95G 52.6MB/s in 71s

2025-02-22 02:52:50 (56.8 MB/s) - ‘cuda_12.1.0_530.30.02_linux.run’ saved [4245586997/4245586997]
```

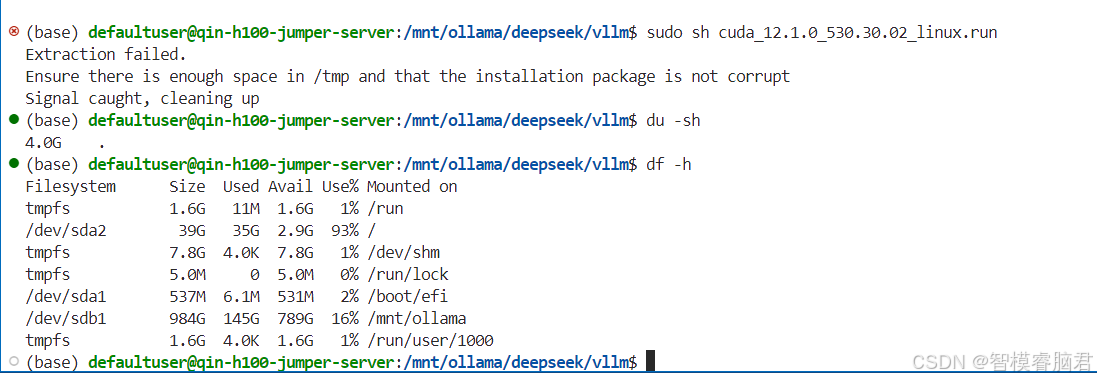

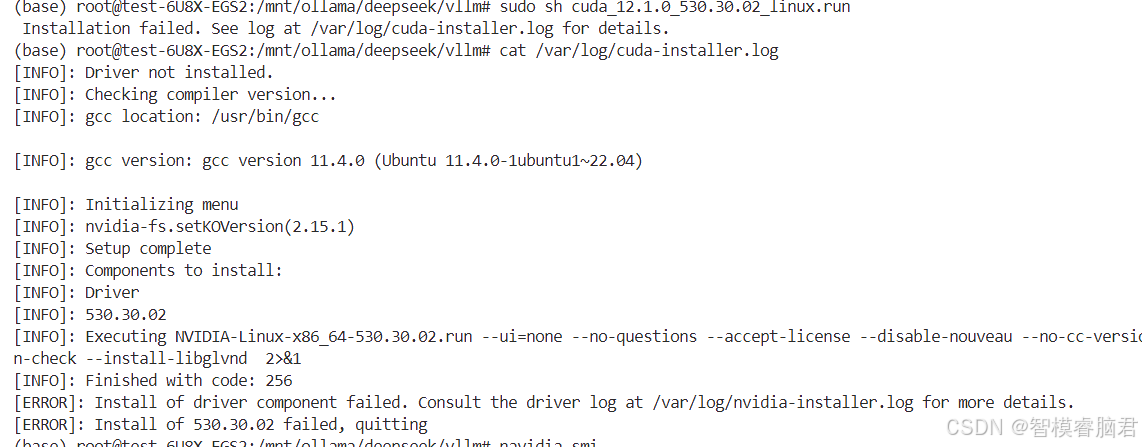

The CUDA install failed. This road has reached a dead end, and I'm out of ideas.

```
(base) root@test-6U8X-EGS2:/mnt/ollama/deepseek/vllm# sudo sh cuda_12.1.0_530.30.02_linux.run
Installation failed. See log at /var/log/cuda-installer.log for details.
(base) root@test-6U8X-EGS2:/mnt/ollama/deepseek/vllm# cat /var/log/cuda-installer.log
[INFO]: Driver not installed.
[INFO]: Checking compiler version...
[INFO]: gcc location: /usr/bin/gcc
[INFO]: gcc version: gcc version 11.4.0 (Ubuntu 11.4.0-1ubuntu1~22.04)
[INFO]: Initializing menu
[INFO]: nvidia-fs.setKOVersion(2.15.1)
[INFO]: Setup complete
[INFO]: Components to install:
[INFO]: Driver
[INFO]: 530.30.02
[INFO]: Executing NVIDIA-Linux-x86_64-530.30.02.run --ui=none --no-questions --accept-license --disable-nouveau --no-cc-version-check --install-libglvnd 2>&1
[INFO]: Finished with code: 256
[ERROR]: Install of driver component failed. Consult the driver log at /var/log/nvidia-installer.log for more details.
[ERROR]: Install of 530.30.02 failed, quitting
(base) root@test-6U8X-EGS2:/mnt/ollama/deepseek/vllm#
```
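The log shows the runfile also tried to install its bundled 530.30.02 driver, and that is the step that failed. The CUDA runfile has documented options to install only the toolkit (`--silent --toolkit`), to choose the toolkit location (`--toolkitpath=`), and to use a different scratch directory (`--tmpdir=`), which would sidestep both the driver step and a nearly full root disk. A minimal sketch that assembles such a command; the temp directory in the example is hypothetical, and whether a toolkit-only install resolves the vLLM error was not tested in this post.

```python
import shlex
from typing import List, Optional


def cuda_toolkit_only_cmd(runfile: str, toolkit_path: Optional[str] = None,
                          tmpdir: Optional[str] = None) -> List[str]:
    """Build a CUDA runfile command that installs the toolkit but skips the bundled driver."""
    cmd = ["sudo", "sh", runfile, "--silent", "--toolkit"]
    if toolkit_path:
        cmd.append(f"--toolkitpath={toolkit_path}")  # instead of the default /usr/local/cuda-12.1
    if tmpdir:
        cmd.append(f"--tmpdir={tmpdir}")             # scratch space off the root filesystem
    return cmd


# Hypothetical temp directory on the larger /mnt volume.
print(shlex.join(cuda_toolkit_only_cmd("cuda_12.1.0_530.30.02_linux.run", tmpdir="/mnt/ollama/tmp")))
# prints: sudo sh cuda_12.1.0_530.30.02_linux.run --silent --toolkit --tmpdir=/mnt/ollama/tmp
```

Building the argument list in one place keeps the driver-skipping flags from being forgotten when the command is retyped on another machine.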

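III. Results

Hugging Face: in the end, even 8×H100 was not enough.

vLLM: it requires CUDA 12.1 and otherwise does not recognize the call at all. This machine had 12.4; I tried switching to 12.1, but the installation failed. That ended the test.

As for the CUDA version mismatch, my guess is that the installer needs an internet connection, but I'm not sure; it may be something else.

Server

I also tried on the jump server, but it had no space. That machine is CPU-only with no GPU, so installing there would not have worked anyway. The temp directory should be on the root filesystem, sda2, which is 93% full.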
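Another angle on the mismatch: NVIDIA drivers are backward compatible with older CUDA runtimes, so a driver that reports CUDA 12.4 in the `nvidia-smi` banner can normally run software built for 12.1, and the pip-installed torch and vLLM wheels bring their own CUDA runtime libraries. A system-wide 12.1 toolkit may therefore not be required at all. This is a hypothesis, not something verified in this test; the sketch below only encodes the version comparison, and the helper names are hypothetical.

```python
import re


def driver_cuda_version(nvidia_smi_banner: str) -> tuple:
    """Extract the 'CUDA Version: X.Y' the driver supports from nvidia-smi's banner."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", nvidia_smi_banner)
    if not match:
        raise ValueError("no 'CUDA Version' field found")
    return int(match.group(1)), int(match.group(2))


def driver_can_run(build_cuda: str, driver_cuda: tuple) -> bool:
    """Backward compatibility: a driver runs CUDA runtimes up to the version it reports."""
    major, minor = (int(part) for part in build_cuda.split(".")[:2])
    return (major, minor) <= driver_cuda
```

For example, `driver_can_run("12.1", driver_cuda_version(banner))` with a banner reporting 12.4 returns `True`, which suggests checking the wheel's own CUDA build (`torch.version.cuda`) before replacing the system toolkit.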
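Before running a 4 GB runfile, which also unpacks into its temp directory, a quick free-space check avoids that surprise. A minimal sketch with the standard library's `shutil.disk_usage`; the candidate paths and the 10 GiB threshold in the example are assumptions, not documented requirements.

```python
import shutil


def free_gib(path: str) -> float:
    """Free space, in GiB, on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free / 1024**3


def enough_space(path: str, need_gib: float) -> bool:
    """True if the filesystem holding `path` has at least `need_gib` GiB free."""
    return free_gib(path) >= need_gib


if __name__ == "__main__":
    # Hypothetical candidates: the default temp dir and the larger data volume.
    for candidate in ("/tmp", "/mnt/ollama"):
        try:
            print(candidate, f"{free_gib(candidate):.1f} GiB free", enough_space(candidate, 10))
        except FileNotFoundError:
            print(candidate, "does not exist")
```

If `/tmp` sits on the nearly full root partition, pointing the installer's temp directory at a roomier volume is usually simpler than freeing space on `/`.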