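In the previous post, we used the OpenPose model to estimate the pose of a single person. In this post, we discuss how to perform multi-person pose estimation.

When an image contains several people, pose estimation produces many independent keypoints, and we need to work out which keypoints belong to the same person.

In this post we use the 18-point model trained on the COCO dataset. The keypoints used by the COCO dataset and their indices are listed below: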

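COCO output format: Nose - 0, Neck - 1, Right Shoulder - 2, Right Elbow - 3, Right Wrist - 4, Left Shoulder - 5, Left Elbow - 6, Left Wrist - 7, Right Hip - 8, Right Knee - 9, Right Ankle - 10, Left Hip - 11, Left Knee - 12, Left Ankle - 13, Right Eye - 14, Left Eye - 15, Right Ear - 16, Left Ear - 17, Background - 18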

1. Multi-person pose estimation model

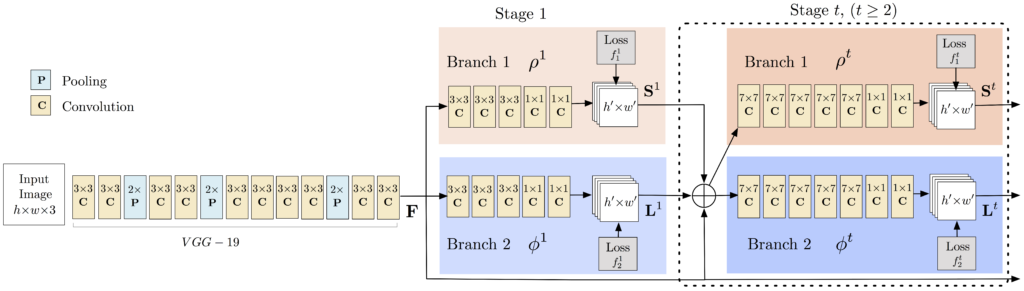

The figure shows the OpenPose architecture.

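1.1 Architecture overview

The model takes a color image of size w × h as input and outputs the 2D locations of the keypoints of every person in the image. Detection takes place in three stages:

- Stage 0: The first 10 layers of VGGNet create feature maps for the input image.

- Stage 1: A two-branch, multi-stage CNN is used. The first branch predicts a set of 2D confidence maps (S) of body part locations (elbow, knee, and so on). A confidence map for the left shoulder keypoint is given below. The second branch predicts a set of 2D vector fields (L) of part affinities, which encode the degree of association between parts. The figure below shows the part affinity between the neck and the left shoulder.

- Stage 2: The confidence and affinity maps are parsed by greedy inference to produce the 2D keypoints of everyone in the image.

Confidence maps are used to find the keypoints, and affinity maps are used to obtain the valid connections between keypoints.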
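To make the two kinds of output concrete, the sketch below classifies a channel index of the network output as a keypoint confidence map, the background map, or one component of a part affinity field. It assumes the 57-channel layout implied by the keypoint list above and by the `mapIdx` table used in the code later in this post (channels 0 to 17 for keypoints, 18 for background, 19 to 56 for PAFs). `describe_channel` is a hypothetical helper written for this illustration, not part of OpenCV or OpenPose.

```python
# Keypoint names in COCO output order (list index = output channel).
KEYPOINTS = ['Nose', 'Neck', 'R-Sho', 'R-Elb', 'R-Wr', 'L-Sho', 'L-Elb', 'L-Wr',
             'R-Hip', 'R-Knee', 'R-Ank', 'L-Hip', 'L-Knee', 'L-Ank',
             'R-Eye', 'L-Eye', 'R-Ear', 'L-Ear']

# Limb definitions and their PAF channel pairs, copied from the post's code.
POSE_PAIRS = [[1, 2], [1, 5], [2, 3], [3, 4], [5, 6], [6, 7],
              [1, 8], [8, 9], [9, 10], [1, 11], [11, 12], [12, 13],
              [1, 0], [0, 14], [14, 16], [0, 15], [15, 17],
              [2, 17], [5, 16]]
MAP_IDX = [[31, 32], [39, 40], [33, 34], [35, 36], [41, 42], [43, 44],
           [19, 20], [21, 22], [23, 24], [25, 26], [27, 28], [29, 30],
           [47, 48], [49, 50], [53, 54], [51, 52], [55, 56],
           [37, 38], [45, 46]]


def describe_channel(c):
    """Say what output channel c holds, under the layout assumed above."""
    if 0 <= c < len(KEYPOINTS):
        return "confidence map: " + KEYPOINTS[c]
    if c == len(KEYPOINTS):
        return "confidence map: background"
    for (a, b), (ix, iy) in zip(POSE_PAIRS, MAP_IDX):
        if c in (ix, iy):
            # The first channel of a pair is dotted with dx, the second with dy.
            axis = "x" if c == ix else "y"
            return "PAF {}: {} -> {}".format(axis, KEYPOINTS[a], KEYPOINTS[b])
    raise ValueError("channel index out of range: {}".format(c))


print(describe_channel(5))    # confidence map: L-Sho
print(describe_channel(39))   # PAF x: Neck -> L-Sho
```

Every index from 0 to 56 resolves to exactly one description, which is a quick way to check that the `mapIdx` table covers all PAF channels.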

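2. Multi-person pose estimation code in OpenCV

In this section we load the trained model in OpenCV and inspect its output, then walk through the code for multi-person pose estimation. The output consists of confidence maps and affinity maps, which can be used to find the pose of each person in a frame when several people are present.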

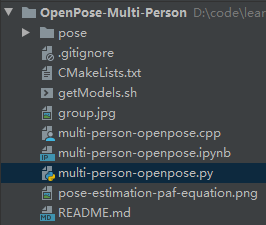

2.1 Directory structure

2.2 Code listing

Link: https://pan.baidu.com/s/1Z5mRhYcKEJO5KkUE-yKCzw

Extraction code: 123a

(1)Python

import cv2
import time
import numpy as np
import argparse

parser = argparse.ArgumentParser(description='Run keypoint detection')
parser.add_argument("--device", default="cpu", help="Device to inference on")
parser.add_argument("--image_file", default="group.jpg", help="Input image")
args = parser.parse_args()

image1 = cv2.imread(args.image_file)

protoFile = "pose/coco/pose_deploy_linevec.prototxt"
weightsFile = "pose/coco/pose_iter_440000.caffemodel"
nPoints = 18

# COCO output format
keypointsMapping = ['Nose', 'Neck', 'R-Sho', 'R-Elb', 'R-Wr', 'L-Sho', 'L-Elb', 'L-Wr', 'R-Hip', 'R-Knee', 'R-Ank', 'L-Hip', 'L-Knee', 'L-Ank', 'R-Eye', 'L-Eye', 'R-Ear', 'L-Ear']

POSE_PAIRS = [[1,2], [1,5], [2,3], [3,4], [5,6], [6,7],
              [1,8], [8,9], [9,10], [1,11], [11,12], [12,13],
              [1,0], [0,14], [14,16], [0,15], [15,17],
              [2,17], [5,16] ]

# Indices of the PAFs in the network output that correspond to POSE_PAIRS,
# e.g. for POSE_PAIR (1,2) the PAFs are at output indices (31,32); similarly (1,5) -> (39,40), and so on.
mapIdx = [[31,32], [39,40], [33,34], [35,36], [41,42], [43,44],
          [19,20], [21,22], [23,24], [25,26], [27,28], [29,30],
          [47,48], [49,50], [53,54], [51,52], [55,56],
          [37,38], [45,46]]

colors = [ [0,100,255], [0,100,255], [0,255,255], [0,100,255], [0,255,255], [0,100,255],
           [0,255,0], [255,200,100], [255,0,255], [0,255,0], [255,200,100], [255,0,255],
           [0,0,255], [255,0,0], [200,200,0], [255,0,0], [200,200,0], [0,0,0]]


def getKeypoints(probMap, threshold=0.1):
    mapSmooth = cv2.GaussianBlur(probMap,(3,3),0,0)
    mapMask = np.uint8(mapSmooth>threshold)
    keypoints = []

    # Find the blobs
    contours, _ = cv2.findContours(mapMask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

    # For each blob, find the maximum
    for cnt in contours:
        blobMask = np.zeros(mapMask.shape)
        blobMask = cv2.fillConvexPoly(blobMask, cnt, 1)
        maskedProbMap = mapSmooth * blobMask
        _, maxVal, _, maxLoc = cv2.minMaxLoc(maskedProbMap)
        keypoints.append(maxLoc + (probMap[maxLoc[1], maxLoc[0]],))

    return keypoints


# Find valid connections between the different joints of all persons present
def getValidPairs(output):
    valid_pairs = []
    invalid_pairs = []
    n_interp_samples = 10
    paf_score_th = 0.1
    conf_th = 0.7

    # Loop over every POSE_PAIR
    for k in range(len(mapIdx)):
        # A->B constitute a limb
        pafA = output[0, mapIdx[k][0], :, :]
        pafB = output[0, mapIdx[k][1], :, :]
        pafA = cv2.resize(pafA, (frameWidth, frameHeight))
        pafB = cv2.resize(pafB, (frameWidth, frameHeight))

        # Find the keypoints for the first and second limb
        candA = detected_keypoints[POSE_PAIRS[k][0]]
        candB = detected_keypoints[POSE_PAIRS[k][1]]
        nA = len(candA)
        nB = len(candB)

        # If keypoints for the joint pair are detected, check every joint in candA
        # against every joint in candB: compute the distance vector between the two
        # joints, find the PAF values at a set of interpolated points between them,
        # and compute a score from those values to decide whether the connection is valid
        if nA != 0 and nB != 0:
            valid_pair = np.zeros((0,3))
            for i in range(nA):
                max_j = -1
                maxScore = -1
                found = 0
                for j in range(nB):
                    # Find d_ij
                    d_ij = np.subtract(candB[j][:2], candA[i][:2])
                    norm = np.linalg.norm(d_ij)
                    if norm:
                        d_ij = d_ij / norm
                    else:
                        continue

                    # Find p(u)
                    interp_coord = list(zip(np.linspace(candA[i][0], candB[j][0], num=n_interp_samples),
                                            np.linspace(candA[i][1], candB[j][1], num=n_interp_samples)))

                    # Find L(p(u)); the index is named m so it does not shadow the outer k
                    paf_interp = []
                    for m in range(len(interp_coord)):
                        paf_interp.append([pafA[int(round(interp_coord[m][1])), int(round(interp_coord[m][0]))],
                                           pafB[int(round(interp_coord[m][1])), int(round(interp_coord[m][0]))] ])

                    # Find E
                    paf_scores = np.dot(paf_interp, d_ij)
                    avg_paf_score = sum(paf_scores)/len(paf_scores)

                    # Check if the connection is valid
                    # If the fraction of interpolated vectors aligned with the PAF is higher than the threshold -> valid pair
                    if ( len(np.where(paf_scores > paf_score_th)[0]) / n_interp_samples ) > conf_th :
                        if avg_paf_score > maxScore:
                            max_j = j
                            maxScore = avg_paf_score
                            found = 1

                # Append the connection to the list
                if found:
                    valid_pair = np.append(valid_pair, [[candA[i][3], candB[max_j][3], maxScore]], axis=0)

            # Append the detected connections to the global list
            valid_pairs.append(valid_pair)
        else: # If no keypoints are detected
            print("No Connection : k = {}".format(k))
            invalid_pairs.append(k)
            valid_pairs.append([])

    return valid_pairs, invalid_pairs


# This function creates a list of keypoints belonging to each person
# For each detected valid pair, it assigns the joints to a person
def getPersonwiseKeypoints(valid_pairs, invalid_pairs):
    # The last number in each row is the overall score
    personwiseKeypoints = -1 * np.ones((0, 19))

    for k in range(len(mapIdx)):
        if k not in invalid_pairs:
            partAs = valid_pairs[k][:,0]
            partBs = valid_pairs[k][:,1]
            indexA, indexB = np.array(POSE_PAIRS[k])

            for i in range(len(valid_pairs[k])):
                found = 0
                person_idx = -1
                for j in range(len(personwiseKeypoints)):
                    if personwiseKeypoints[j][indexA] == partAs[i]:
                        person_idx = j
                        found = 1
                        break

                if found:
                    personwiseKeypoints[person_idx][indexB] = partBs[i]
                    personwiseKeypoints[person_idx][-1] += keypoints_list[partBs[i].astype(int), 2] + valid_pairs[k][i][2]

                # If partA is not found in the subset, create a new subset
                elif not found and k < 17:
                    row = -1 * np.ones(19)
                    row[indexA] = partAs[i]
                    row[indexB] = partBs[i]
                    # Add the keypoint scores of the two keypoints and the paf score
                    row[-1] = sum(keypoints_list[valid_pairs[k][i,:2].astype(int), 2]) + valid_pairs[k][i][2]
                    personwiseKeypoints = np.vstack([personwiseKeypoints, row])

    return personwiseKeypoints


frameWidth = image1.shape[1]
frameHeight = image1.shape[0]

t = time.time()
net = cv2.dnn.readNetFromCaffe(protoFile, weightsFile)
if args.device == "cpu":
    # DNN_TARGET_CPU is a target constant, so it is passed to setPreferableTarget
    net.setPreferableTarget(cv2.dnn.DNN_TARGET_CPU)
    print("Using CPU device")
elif args.device == "gpu":
    net.setPreferableBackend(cv2.dnn.DNN_BACKEND_CUDA)
    net.setPreferableTarget(cv2.dnn.DNN_TARGET_CUDA)
    print("Using GPU device")

# Fix the input height and get the width according to the aspect ratio
inHeight = 368
inWidth = int((inHeight/frameHeight)*frameWidth)

inpBlob = cv2.dnn.blobFromImage(image1, 1.0 / 255, (inWidth, inHeight),
                                (0, 0, 0), swapRB=False, crop=False)

net.setInput(inpBlob)
output = net.forward()
print("Time Taken in forward pass = {}".format(time.time() - t))

detected_keypoints = []
keypoints_list = np.zeros((0,3))
keypoint_id = 0
threshold = 0.1

for part in range(nPoints):
    probMap = output[0,part,:,:]
    probMap = cv2.resize(probMap, (image1.shape[1], image1.shape[0]))
    keypoints = getKeypoints(probMap, threshold)
    print("Keypoints - {} : {}".format(keypointsMapping[part], keypoints))
    keypoints_with_id = []
    for i in range(len(keypoints)):
        keypoints_with_id.append(keypoints[i] + (keypoint_id,))
        keypoints_list = np.vstack([keypoints_list, keypoints[i]])
        keypoint_id += 1

    detected_keypoints.append(keypoints_with_id)

frameClone = image1.copy()
for i in range(nPoints):
    for j in range(len(detected_keypoints[i])):
        cv2.circle(frameClone, detected_keypoints[i][j][0:2], 5, colors[i], -1, cv2.LINE_AA)
cv2.imshow("Keypoints",frameClone)

valid_pairs, invalid_pairs = getValidPairs(output)
personwiseKeypoints = getPersonwiseKeypoints(valid_pairs, invalid_pairs)

for i in range(17):
    for n in range(len(personwiseKeypoints)):
        index = personwiseKeypoints[n][np.array(POSE_PAIRS[i])]
        if -1 in index:
            continue
        B = np.int32(keypoints_list[index.astype(int), 0])
        A = np.int32(keypoints_list[index.astype(int), 1])
        # Plain Python ints keep cv2.line happy across OpenCV versions
        cv2.line(frameClone, (int(B[0]), int(A[0])), (int(B[1]), int(A[1])), colors[i], 3, cv2.LINE_AA)

cv2.imshow("Detected Pose" , frameClone)
cv2.waitKey(0)

(2)C++

#include<opencv2/dnn.hpp>

#include<opencv2/imgproc.hpp>

#include<opencv2/highgui.hpp>

#include<iostream>

#include<chrono>

#include<random>

#include<set>

#include<cmath>

struct KeyPoint{

KeyPoint(cv::Point point,float probability){

this->id = -1;

this->point = point;

this->probability = probability;

}

int id;

cv::Point point;

float probability;

};

std::ostream& operator << (std::ostream& os, const KeyPoint& kp)

{

os << "Id:" << kp.id << ", Point:" << kp.point << ", Prob:" << kp.probability << std::endl;

return os;

}

struct ValidPair{

ValidPair(int aId,int bId,float score){

this->aId = aId;

this->bId = bId;

this->score = score;

}

int aId;

int bId;

float score;

};

std::ostream& operator << (std::ostream& os, const ValidPair& vp)

{

os << "A:" << vp.aId << ", B:" << vp.bId << ", score:" << vp.score << std::endl;

return os;

}

template < class T > std::ostream& operator << (std::ostream& os, const std::vector<T>& v)

{

os << "[";

bool first = true;

for (typename std::vector<T>::const_iterator ii = v.begin(); ii != v.end(); ++ii, first = false)

{

if(!first) os << ",";

os << " " << *ii;

}

os << "]";

return os;

}

template < class T > std::ostream& operator << (std::ostream& os, const std::set<T>& v)

{

os << "[";

bool first = true;

for (typename std::set<T>::const_iterator ii = v.begin(); ii != v.end(); ++ii, first = false)

{

if(!first) os << ",";

os << " " << *ii;

}

os << "]";

return os;

}

const int nPoints = 18;

const std::string keypointsMapping[] = {

"Nose", "Neck",

"R-Sho", "R-Elb", "R-Wr",

"L-Sho", "L-Elb", "L-Wr",

"R-Hip", "R-Knee", "R-Ank",

"L-Hip", "L-Knee", "L-Ank",

"R-Eye", "L-Eye", "R-Ear", "L-Ear"

};

const std::vector<std::pair<int,int>> mapIdx = {

{31,32}, {39,40}, {33,34}, {35,36}, {41,42}, {43,44},

{19,20}, {21,22}, {23,24}, {25,26}, {27,28}, {29,30},

{47,48}, {49,50}, {53,54}, {51,52}, {55,56}, {37,38},

{45,46}

};

const std::vector<std::pair<int,int>> posePairs = {

{1,2}, {1,5}, {2,3}, {3,4}, {5,6}, {6,7},

{1,8}, {8,9}, {9,10}, {1,11}, {11,12}, {12,13},

{1,0}, {0,14}, {14,16}, {0,15}, {15,17}, {2,17},

{5,16}

};

void getKeyPoints(cv::Mat& probMap,double threshold,std::vector<KeyPoint>& keyPoints){

cv::Mat smoothProbMap;

cv::GaussianBlur( probMap, smoothProbMap, cv::Size( 3, 3 ), 0, 0 );

cv::Mat maskedProbMap;

cv::threshold(smoothProbMap,maskedProbMap,threshold,255,cv::THRESH_BINARY);

maskedProbMap.convertTo(maskedProbMap,CV_8U,1);

std::vector<std::vector<cv::Point> > contours;

cv::findContours(maskedProbMap,contours,cv::RETR_TREE,cv::CHAIN_APPROX_SIMPLE);

for(int i = 0; i < contours.size();++i){

cv::Mat blobMask = cv::Mat::zeros(smoothProbMap.rows,smoothProbMap.cols,smoothProbMap.type());

cv::fillConvexPoly(blobMask,contours[i],cv::Scalar(1));

double maxVal;

cv::Point maxLoc;

cv::minMaxLoc(smoothProbMap.mul(blobMask),0,&maxVal,0,&maxLoc);

keyPoints.push_back(KeyPoint(maxLoc, probMap.at<float>(maxLoc.y,maxLoc.x)));

}

}

void populateColorPalette(std::vector<cv::Scalar>& colors,int nColors){

std::random_device rd;

std::mt19937 gen(rd());

std::uniform_int_distribution<> dis1(64, 200);

std::uniform_int_distribution<> dis2(100, 255);

std::uniform_int_distribution<> dis3(100, 255);

for(int i = 0; i < nColors;++i){

colors.push_back(cv::Scalar(dis1(gen),dis2(gen),dis3(gen)));

}

}

void splitNetOutputBlobToParts(cv::Mat& netOutputBlob,const cv::Size& targetSize,std::vector<cv::Mat>& netOutputParts){

int nParts = netOutputBlob.size[1];

int h = netOutputBlob.size[2];

int w = netOutputBlob.size[3];

for(int i = 0; i< nParts;++i){

cv::Mat part(h, w, CV_32F, netOutputBlob.ptr(0,i));

cv::Mat resizedPart;

cv::resize(part,resizedPart,targetSize);

netOutputParts.push_back(resizedPart);

}

}

void populateInterpPoints(const cv::Point& a,const cv::Point& b,int numPoints,std::vector<cv::Point>& interpCoords){

float xStep = ((float)(b.x - a.x))/(float)(numPoints-1);

float yStep = ((float)(b.y - a.y))/(float)(numPoints-1);

interpCoords.push_back(a);

for(int i = 1; i< numPoints-1;++i){

interpCoords.push_back(cv::Point(a.x + xStep*i,a.y + yStep*i));

}

interpCoords.push_back(b);

}

void getValidPairs(const std::vector<cv::Mat>& netOutputParts,

const std::vector<std::vector<KeyPoint>>& detectedKeypoints,

std::vector<std::vector<ValidPair>>& validPairs,

std::set<int>& invalidPairs) {

int nInterpSamples = 10;

float pafScoreTh = 0.1;

float confTh = 0.7;

for(int k = 0; k < mapIdx.size();++k ){

//A->B constitute a limb

cv::Mat pafA = netOutputParts[mapIdx[k].first];

cv::Mat pafB = netOutputParts[mapIdx[k].second];

//Find the keypoints for the first and second limb

const std::vector<KeyPoint>& candA = detectedKeypoints[posePairs[k].first];

const std::vector<KeyPoint>& candB = detectedKeypoints[posePairs[k].second];

int nA = candA.size();

int nB = candB.size();

/*

# If keypoints for the joint-pair is detected

# check every joint in candA with every joint in candB

# Calculate the distance vector between the two joints

# Find the PAF values at a set of interpolated points between the joints

# Use the above formula to compute a score to mark the connection valid

*/

if(nA != 0 && nB != 0){

std::vector<ValidPair> localValidPairs;

for(int i = 0; i< nA;++i){

int maxJ = -1;

float maxScore = -1;

bool found = false;

for(int j = 0; j < nB;++j){

std::pair<float,float> distance(candB[j].point.x - candA[i].point.x,candB[j].point.y - candA[i].point.y);

float norm = std::sqrt(distance.first*distance.first + distance.second*distance.second);

if(!norm){

continue;

}

distance.first /= norm;

distance.second /= norm;

//Find p(u)

std::vector<cv::Point> interpCoords;

populateInterpPoints(candA[i].point,candB[j].point,nInterpSamples,interpCoords);

//Find L(p(u))

std::vector<std::pair<float,float>> pafInterp;

for(int l = 0; l < interpCoords.size();++l){

pafInterp.push_back(

std::pair<float,float>(

pafA.at<float>(interpCoords[l].y,interpCoords[l].x),

pafB.at<float>(interpCoords[l].y,interpCoords[l].x)

));

}

std::vector<float> pafScores;

float sumOfPafScores = 0;

int numOverTh = 0;

for(int l = 0; l< pafInterp.size();++l){

float score = pafInterp[l].first*distance.first + pafInterp[l].second*distance.second;

sumOfPafScores += score;

if(score > pafScoreTh){

++numOverTh;

}

pafScores.push_back(score);

}

float avgPafScore = sumOfPafScores/((float)pafInterp.size());

if(((float)numOverTh)/((float)nInterpSamples) > confTh){

if(avgPafScore > maxScore){

maxJ = j;

maxScore = avgPafScore;

found = true;

}

}

}/* j */

if(found){

localValidPairs.push_back(ValidPair(candA[i].id,candB[maxJ].id,maxScore));

}

}/* i */

validPairs.push_back(localValidPairs);

} else {

invalidPairs.insert(k);

validPairs.push_back(std::vector<ValidPair>());

}

}/* k */

}

void getPersonwiseKeypoints(const std::vector<std::vector<ValidPair>>& validPairs,

const std::set<int>& invalidPairs,

std::vector<std::vector<int>>& personwiseKeypoints) {

for(int k = 0; k < mapIdx.size();++k){

if(invalidPairs.find(k) != invalidPairs.end()){

continue;

}

const std::vector<ValidPair>& localValidPairs(validPairs[k]);

int indexA(posePairs[k].first);

int indexB(posePairs[k].second);

for(int i = 0; i< localValidPairs.size();++i){

bool found = false;

int personIdx = -1;

for(int j = 0; !found && j < personwiseKeypoints.size();++j){

if(indexA < personwiseKeypoints[j].size() &&

personwiseKeypoints[j][indexA] == localValidPairs[i].aId){

personIdx = j;

found = true;

}

}/* j */

if(found){

personwiseKeypoints[personIdx].at(indexB) = localValidPairs[i].bId;

} else if(k < 17){

std::vector<int> lpkp(std::vector<int>(18,-1));

lpkp.at(indexA) = localValidPairs[i].aId;

lpkp.at(indexB) = localValidPairs[i].bId;

personwiseKeypoints.push_back(lpkp);

}

}/* i */

}/* k */

}

int main(int argc,char** argv) {

std::string inputFile = "./group.jpg";

std::string device = "cpu";

std::cout << "USAGE : ./multi-person-openpose <inputFile> <device>" << std::endl;

if (argc == 2){

if((std::string)argv[1] == "gpu")

device = "gpu";

else

inputFile = argv[1];

}

else if (argc == 3){

inputFile = argv[1];

if((std::string)argv[2] == "gpu")

device = "gpu";

}

cv::Mat input = cv::imread(inputFile, cv::IMREAD_COLOR);

std::chrono::time_point<std::chrono::system_clock> startTP = std::chrono::system_clock::now();

cv::dnn::Net inputNet = cv::dnn::readNetFromCaffe("./pose/coco/pose_deploy_linevec.prototxt","./pose/coco/pose_iter_440000.caffemodel");

if (device == "cpu"){

std::cout << "Using CPU device" << std::endl;

inputNet.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);

}

else if (device == "gpu"){

std::cout << "Using GPU device" << std::endl;

inputNet.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);

inputNet.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);

}

cv::Mat inputBlob = cv::dnn::blobFromImage(input,1.0/255.0,cv::Size((int)((368*input.cols)/input.rows),368),cv::Scalar(0,0,0),false,false);

inputNet.setInput(inputBlob);

cv::Mat netOutputBlob = inputNet.forward();

std::vector<cv::Mat> netOutputParts;

splitNetOutputBlobToParts(netOutputBlob,cv::Size(input.cols,input.rows),netOutputParts);

std::chrono::time_point<std::chrono::system_clock> finishTP = std::chrono::system_clock::now();

std::cout << "Time Taken in forward pass = " << std::chrono::duration_cast<std::chrono::milliseconds>(finishTP - startTP).count() << " ms" << std::endl;

int keyPointId = 0;

std::vector<std::vector<KeyPoint>> detectedKeypoints;

std::vector<KeyPoint> keyPointsList;

for(int i = 0; i < nPoints;++i){

std::vector<KeyPoint> keyPoints;

getKeyPoints(netOutputParts[i],0.1,keyPoints);

std::cout << "Keypoints - " << keypointsMapping[i] << " : " << keyPoints << std::endl;

for(int j = 0; j< keyPoints.size();++j,++keyPointId){

keyPoints[j].id = keyPointId;

}

detectedKeypoints.push_back(keyPoints);

keyPointsList.insert(keyPointsList.end(),keyPoints.begin(),keyPoints.end());

}

std::vector<cv::Scalar> colors;

populateColorPalette(colors,nPoints);

cv::Mat outputFrame = input.clone();

for(int i = 0; i < nPoints;++i){

for(int j = 0; j < detectedKeypoints[i].size();++j){

cv::circle(outputFrame,detectedKeypoints[i][j].point,5,colors[i],-1,cv::LINE_AA);

}

}

std::vector<std::vector<ValidPair>> validPairs;

std::set<int> invalidPairs;

getValidPairs(netOutputParts,detectedKeypoints,validPairs,invalidPairs);

std::vector<std::vector<int>> personwiseKeypoints;

getPersonwiseKeypoints(validPairs,invalidPairs,personwiseKeypoints);

for(int i = 0; i< nPoints-1;++i){

for(int n = 0; n < personwiseKeypoints.size();++n){

const std::pair<int,int>& posePair = posePairs[i];

int indexA = personwiseKeypoints[n][posePair.first];

int indexB = personwiseKeypoints[n][posePair.second];

if(indexA == -1 || indexB == -1){

continue;

}

const KeyPoint& kpA = keyPointsList[indexA];

const KeyPoint& kpB = keyPointsList[indexB];

cv::line(outputFrame,kpA.point,kpB.point,colors[i],3,cv::LINE_AA);

}

}

cv::imshow("Detected Pose",outputFrame);

cv::waitKey(0);

return 0;

}

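3. Code walkthrough

3.1 Download the model weights

Use the getModels.sh script provided with the code to download the model weights file. Note that the configuration files are already present in the folder.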

sudo chmod a+x getModels.sh

./getModels.sh

Check the folder to make sure the model binary (the .caffemodel file) has been downloaded.

3.2 Load the model

(1)Python

protoFile = "pose/coco/pose_deploy_linevec.prototxt"

weightsFile = "pose/coco/pose_iter_440000.caffemodel"

net = cv2.dnn.readNetFromCaffe(protoFile, weightsFile)

(2)C++

cv::dnn::Net inputNet = cv::dnn::readNetFromCaffe("./pose/coco/pose_deploy_linevec.prototxt","./pose/coco/pose_iter_440000.caffemodel");

3.3 Load the image and create the input blob

(1)Python

image1 = cv2.imread("group.jpg")
frameWidth = image1.shape[1]
frameHeight = image1.shape[0]

# Fix the input height and get the width according to the aspect ratio
inHeight = 368
inWidth = int((inHeight/frameHeight)*frameWidth)
inpBlob = cv2.dnn.blobFromImage(image1, 1.0 / 255, (inWidth, inHeight),
                                (0, 0, 0), swapRB=False, crop=False)

(2)C++

std::string inputFile = "./group.jpg";

if(argc > 1){

inputFile = std::string(argv[1]);

}

cv::Mat input = cv::imread(inputFile, cv::IMREAD_COLOR);

cv::Mat inputBlob = cv::dnn::blobFromImage(input,1.0/255.0,

cv::Size((int)((368*input.cols)/input.rows),368),

cv::Scalar(0,0,0),false,false);
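The input size is obtained by fixing the height at 368 and scaling the width so that the aspect ratio of the image is preserved. A small sketch of that arithmetic follows; the helper name and the 1280 × 720 example frame are assumptions chosen only for illustration.

```python
def network_input_size(frame_width, frame_height, in_height=368):
    """Return (inWidth, inHeight): height fixed, width scaled to keep the aspect ratio."""
    in_width = int((in_height / frame_height) * frame_width)
    return in_width, in_height


print(network_input_size(1280, 720))  # (654, 368)
```

The pair returned here is what the snippets above pass as the size argument of blobFromImage.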

3.4 Inference

(1)Python

net.setInput(inpBlob)

output = net.forward()

(2)C++

inputNet.setInput(inputBlob);

cv::Mat netOutputBlob = inputNet.forward();

3.5 Sample output

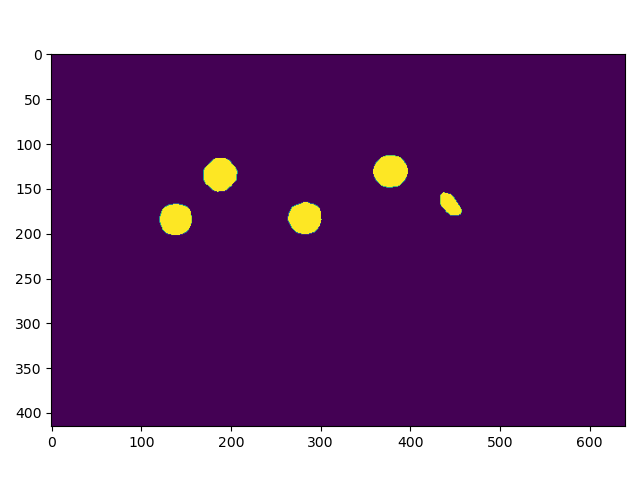

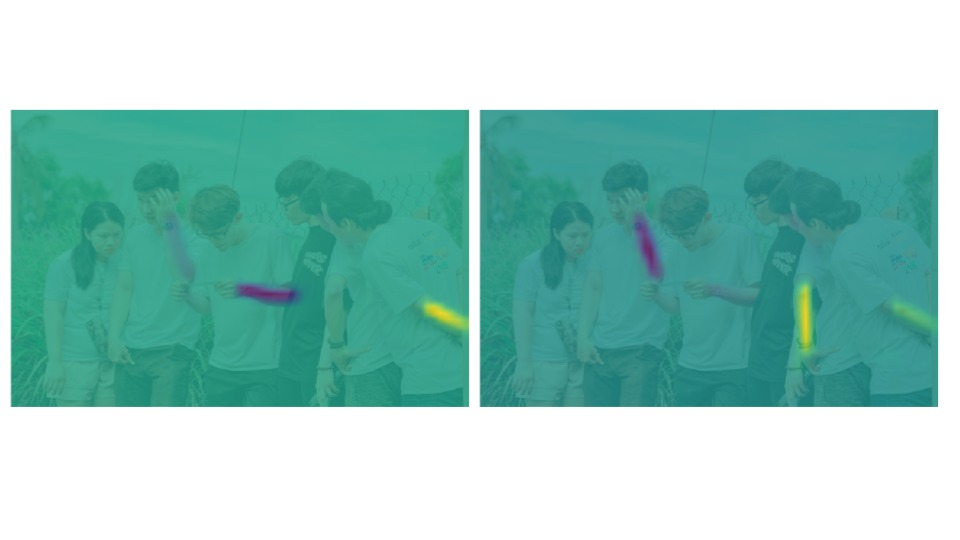

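We first resize the output to the size of the input, and then inspect the confidence map that corresponds to the nose keypoint. You can also use the cv2.addWeighted() function to alpha-blend the probMap onto the image.

import matplotlib.pyplot as plt

i = 0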

probMap = output[0, i, :, :]

probMap = cv2.resize(probMap, (frameWidth, frameHeight))

plt.imshow(cv2.cvtColor(image1, cv2.COLOR_BGR2RGB))

plt.imshow(probMap, alpha=0.6)

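3.6 Detect keypoints

As the figure above shows, channel 0 of the output is the confidence map for the nose; likewise, channel 1 corresponds to the neck, and so on. As discussed in the previous post, for a single person it is easy to locate each keypoint by taking the maximum of its confidence map. For multiple people, that no longer works.

Note: the explanations and code snippets in this section belong to the getKeypoints() function.

For each keypoint, we apply a threshold (0.1 in this case) to the confidence map.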

(1)Python

mapSmooth = cv2.GaussianBlur(probMap,(3,3),0,0)

mapMask = np.uint8(mapSmooth>threshold)

plt.imshow(mapMask)

plt.show()

(2)C++

cv::Mat smoothProbMap;

cv::GaussianBlur( probMap, smoothProbMap, cv::Size( 3, 3 ), 0, 0 );

cv::Mat maskedProbMap;

cv::threshold(smoothProbMap,maskedProbMap,threshold,255,cv::THRESH_BINARY);

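This gives a matrix containing blobs in the regions that correspond to the keypoints.

To find the exact location of each keypoint, we need the maximum of each blob. We do the following:

- 1. First, find all the contours of the regions that correspond to the keypoints.

- 2. Create a mask for the region.

- 3. Extract the probMap for the region by multiplying the probMap with this mask.

- 4. Find the local maximum of the region. This is done for each contour (keypoint region).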
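The four steps can be tried without OpenCV on a tiny confidence map. The sketch below is not the getKeypoints() implementation: it swaps cv2.findContours for a plain 4-connected flood fill and skips the Gaussian blur, and the map values are made up for illustration. It only shows the idea of keeping one peak per above-threshold blob.

```python
def find_blob_peaks(prob_map, threshold=0.1):
    """Return one (x, y, score) peak per connected above-threshold region."""
    rows, cols = len(prob_map), len(prob_map[0])
    seen = [[False] * cols for _ in range(rows)]
    peaks = []
    for y in range(rows):
        for x in range(cols):
            if seen[y][x] or prob_map[y][x] <= threshold:
                continue
            # Flood-fill one blob and remember its highest-scoring pixel.
            stack = [(x, y)]
            seen[y][x] = True
            best = (x, y, prob_map[y][x])
            while stack:
                cx, cy = stack.pop()
                if prob_map[cy][cx] > best[2]:
                    best = (cx, cy, prob_map[cy][cx])
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < cols and 0 <= ny < rows
                            and not seen[ny][nx]
                            and prob_map[ny][nx] > threshold):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            peaks.append(best)
    return peaks


# A toy 4 x 6 confidence map with two separate blobs (values are invented).
prob_map = [
    [0.0, 0.2, 0.0, 0.0, 0.0, 0.0],
    [0.2, 0.9, 0.3, 0.0, 0.0, 0.0],
    [0.0, 0.2, 0.0, 0.0, 0.4, 0.7],
    [0.0, 0.0, 0.0, 0.0, 0.3, 0.5],
]
print(find_blob_peaks(prob_map))  # [(1, 1, 0.9), (5, 2, 0.7)]
```

Each blob yields exactly one candidate, which is why two people standing apart produce two nose candidates instead of a single global maximum.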

(1)Python

# Find the blobs (OpenCV 4 returns two values here; OpenCV 3 returned three)
contours, _ = cv2.findContours(mapMask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

# For each blob, find the maximum
for cnt in contours:
    blobMask = np.zeros(mapMask.shape)
    blobMask = cv2.fillConvexPoly(blobMask, cnt, 1)
    maskedProbMap = mapSmooth * blobMask
    _, maxVal, _, maxLoc = cv2.minMaxLoc(maskedProbMap)
    keypoints.append(maxLoc + (probMap[maxLoc[1], maxLoc[0]],))

(2)C++

std::vector<std::vector<cv::Point> > contours;

cv::findContours(maskedProbMap,contours,cv::RETR_TREE,cv::CHAIN_APPROX_SIMPLE);

for(int i = 0; i < contours.size();++i){

cv::Mat blobMask = cv::Mat::zeros(smoothProbMap.rows,smoothProbMap.cols,smoothProbMap.type());

cv::fillConvexPoly(blobMask,contours[i],cv::Scalar(1));

double maxVal;

cv::Point maxLoc;

cv::minMaxLoc(smoothProbMap.mul(blobMask),0,&maxVal,0,&maxLoc);

keyPoints.push_back(KeyPoint(maxLoc, probMap.at<float>(maxLoc.y,maxLoc.x)));

}

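We store the x, y coordinates and the probability score of each keypoint. We also assign an ID to every keypoint found; the IDs are used later when keypoints are connected into pairs (limbs).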
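A sketch of how the per-part keypoint lists receive globally unique IDs, mirroring the bookkeeping in the main script (detected_keypoints holds the tuples with IDs for each body part, and keypoints_list is a flat table indexed by ID). The function name and the coordinates are invented for illustration.

```python
def assign_ids(keypoints_per_part):
    """Attach a running ID to every (x, y, score) tuple."""
    detected_keypoints = []   # one list per body part, entries are (x, y, score, id)
    keypoints_list = []       # flat list; the position of an entry is its ID
    keypoint_id = 0
    for keypoints in keypoints_per_part:
        with_id = []
        for kp in keypoints:
            with_id.append(kp + (keypoint_id,))
            keypoints_list.append(kp)
            keypoint_id += 1
        detected_keypoints.append(with_id)
    return detected_keypoints, keypoints_list


noses = [(120, 80, 0.91), (340, 95, 0.88)]     # two nose candidates
necks = [(125, 130, 0.85), (338, 150, 0.80)]   # two neck candidates
detected, flat = assign_ids([noses, necks])
print(detected[1])  # [(125, 130, 0.85, 2), (338, 150, 0.8, 3)]
```

Because the ID doubles as the index into the flat list, later stages can pass around bare IDs and still recover the coordinates.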

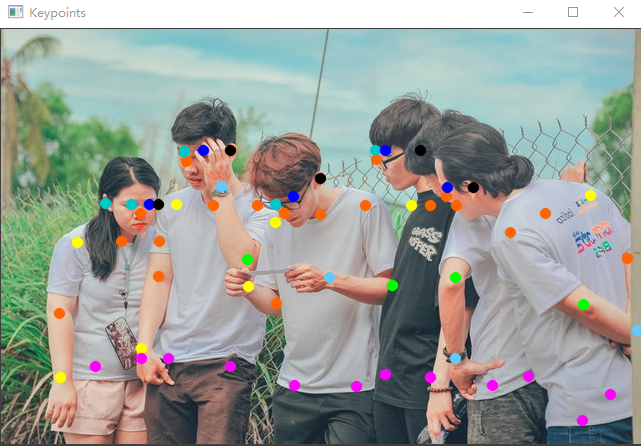

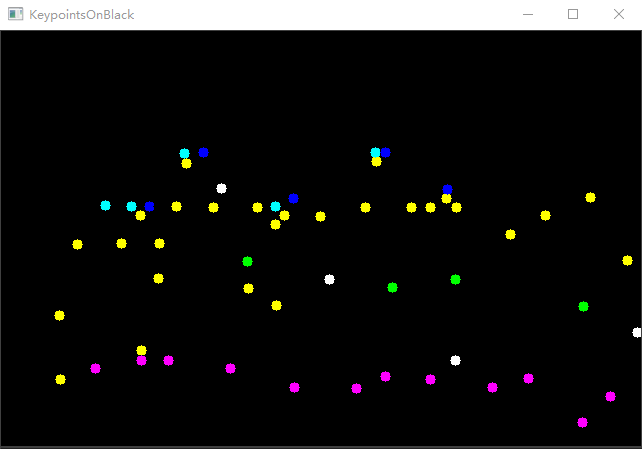

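The figures show the keypoints detected for the input image. The model does a good job even for people who are only partially visible and for people facing away from the camera.

From the first figure you can see that all the keypoints have been found. But when the keypoints are not overlaid on the image, we cannot tell which body part belongs to which person. We have to map each keypoint to exactly one person. This step is not trivial, and done incorrectly it can introduce many errors. To do it, we find the valid connections (valid pairs) between keypoints and then assemble those connections into a skeleton for each person.

3.7 Find valid pairs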

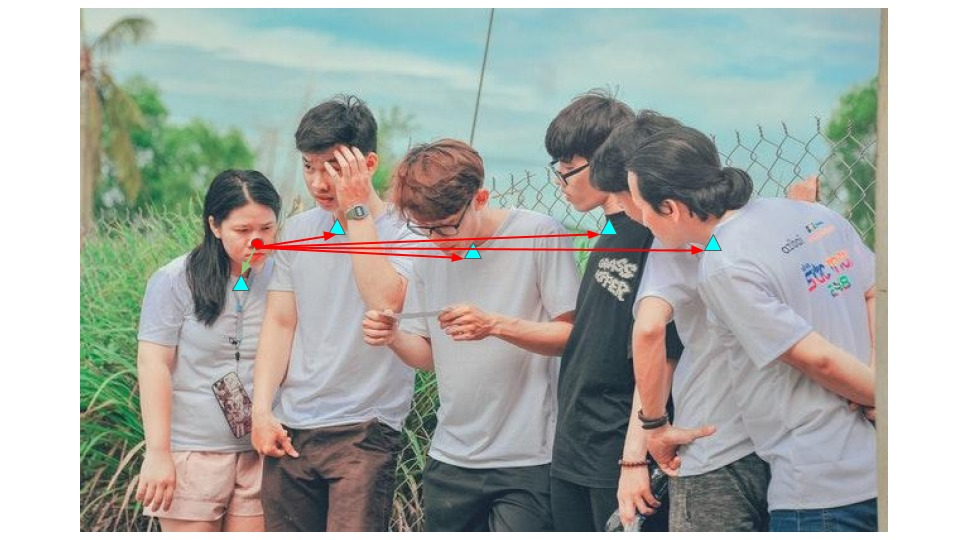

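A valid pair is a body part that joins two keypoints belonging to the same person. A simple way to find valid pairs is to take the minimum distance between one joint and all candidate partner joints. For example, in the figure below, we can compute the distance between the marked nose and every neck. The pair with the minimum distance should be the one that belongs to the same person.

This approach may not work for every pair, especially when the image contains many people or when parts are occluded. For example, for the pair left elbow -> left wrist, the wrist of the third person is closer to the second person's elbow than the second person's own wrist is. The distance rule therefore does not yield a valid pair.

Using only the distance between keypoints can fail in some cases.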
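The failure mode is easy to reproduce with made-up coordinates. In the sketch below (hypothetical points, not taken from the image), the wrist nearest to person 2's elbow belongs to person 3, so a distance-only rule picks the wrong partner.

```python
import math


def nearest_candidate(point, candidates):
    """Index of the candidate closest to `point` (Euclidean distance)."""
    return min(range(len(candidates)),
               key=lambda i: math.dist(point, candidates[i]))


elbow_person2 = (200, 300)
wrists = [(260, 380),   # index 0: person 2's own wrist, arm stretched out (distance 100)
          (215, 320)]   # index 1: person 3's wrist, which happens to be nearby (distance 25)
print(nearest_candidate(elbow_person2, wrists))  # 1
```

The rule returns index 1, the other person's wrist, which is the mistake the part affinity fields are meant to prevent.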

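This is where Part Affinity Maps come in. They give the direction along with the affinity between the two joints of a pair. So a pair should not only have the minimum distance; its direction should also agree with the direction of the PAF heatmap.

The heatmap for the left elbow -> left wrist connection is given below.

Thus, in the case above, even where the distance measure identifies the pair wrongly, OpenPose gives the correct result, because the PAF agrees only with the unit vector joining the elbow and the wrist of the second person.

The approach taken in the paper is as follows:

- Divide the line joining the two points of the pair, finding n points on this line.

- Check whether the PAF at these points has the same direction as the line joining the two points of the pair.

- If the directions match to a sufficient extent, the pair is valid.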
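In symbols, these three steps approximate the line integral of the PAF along the candidate limb. Writing a and b for the two candidate keypoints and L for the part affinity field of the pair, and borrowing the names d_ij, p(u) and E from the code comments, a sketch of the score is given below. The discretization into n evenly spaced samples reflects the code in this post, where n = 10.

```latex
d_{ij} = \frac{b - a}{\lVert b - a \rVert}, \qquad
p(u) = (1 - u)\,a + u\,b, \quad u \in [0, 1]

E = \int_{0}^{1} L\bigl(p(u)\bigr) \cdot d_{ij} \, du
  \;\approx\; \frac{1}{n} \sum_{m=0}^{n-1} L\bigl(p(u_m)\bigr) \cdot d_{ij},
\qquad u_m = \frac{m}{n - 1}
```

The code accepts a pair only if the fraction of samples whose dot product with d_ij exceeds 0.1 is greater than 0.7, and among the accepted candidates it keeps the one with the largest average E.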

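Let us see how this is done in code; the snippets belong to the getValidPairs() function in the provided code.

For each body part pair, we do the following:

- 1. Take the keypoints belonging to the pair and put them in separate lists (candA and candB). Each point in candA will be connected to some point in candB. The figure below shows the points in candA and candB for the pair neck -> right shoulder.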

(1)Python

pafA = output[0, mapIdx[k][0], :, :]

pafB = output[0, mapIdx[k][1], :, :]

pafA = cv2.resize(pafA, (frameWidth, frameHeight))

pafB = cv2.resize(pafB, (frameWidth, frameHeight))

# Find the keypoints for the first and second limb

candA = detected_keypoints[POSE_PAIRS[k][0]]

candB = detected_keypoints[POSE_PAIRS[k][1]]

(2)C++

//A->B constitute a limb

cv::Mat pafA = netOutputParts[mapIdx[k].first];

cv::Mat pafB = netOutputParts[mapIdx[k].second];

//Find the keypoints for the first and second limb

const std::vector<KeyPoint>& candA = detectedKeypoints[posePairs[k].first];

const std::vector<KeyPoint>& candB = detectedKeypoints[posePairs[k].second];

- 2. Compute the unit vector joining the two candidate keypoints. This gives the direction of the line joining them.

(1)Python

d_ij = np.subtract(candB[j][:2], candA[i][:2])
norm = np.linalg.norm(d_ij)
if norm:
    d_ij = d_ij / norm

(2)C++

std::pair<float,float> distance(candB[j].point.x - candA[i].point.x,candB[j].point.y - candA[i].point.y);

float norm = std::sqrt(distance.first*distance.first + distance.second*distance.second);

if(!norm){

continue;

}

distance.first /= norm;

distance.second /= norm;

- 3. Create an array of 10 interpolated points on the line joining the two points.

(1)Python

# Find p(u)
interp_coord = list(zip(np.linspace(candA[i][0], candB[j][0], num=n_interp_samples),
                        np.linspace(candA[i][1], candB[j][1], num=n_interp_samples)))

# Find L(p(u)); the index is named m so it does not shadow the pair index k
paf_interp = []
for m in range(len(interp_coord)):
    paf_interp.append([pafA[int(round(interp_coord[m][1])), int(round(interp_coord[m][0]))],
                       pafB[int(round(interp_coord[m][1])), int(round(interp_coord[m][0]))] ])

(2)C++

//Find p(u)

std::vector<cv::Point> interpCoords;

populateInterpPoints(candA[i].point,candB[j].point,nInterpSamples,interpCoords);

//Find L(p(u))

std::vector<std::pair<float,float>> pafInterp;

for(int l = 0; l < interpCoords.size();++l){

pafInterp.push_back(

std::pair<float,float>(

pafA.at<float>(interpCoords[l].y,interpCoords[l].x),

pafB.at<float>(interpCoords[l].y,interpCoords[l].x)

));

}
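A dependency-free sketch of the sampling step, equivalent in spirit to the np.linspace call in the Python snippet: it returns n evenly spaced points from A to B, endpoints included, rounded to pixel coordinates as the Python code does. The helper name and the coordinates are illustrative.

```python
def interpolate_points(a, b, n=10):
    """n evenly spaced integer points from a to b, both endpoints included (n >= 2)."""
    points = []
    for m in range(n):
        u = m / (n - 1)                      # u runs from 0.0 to 1.0
        x = a[0] + u * (b[0] - a[0])
        y = a[1] + u * (b[1] - a[1])
        points.append((int(round(x)), int(round(y))))
    return points


samples = interpolate_points((0, 0), (9, 18))
print(samples[:3])   # [(0, 0), (1, 2), (2, 4)]
print(len(samples))  # 10
```

One detail worth knowing when comparing outputs: the C++ helper populateInterpPoints appears to truncate rather than round when it builds each cv::Point from float values, so the two versions can differ by one pixel at some samples.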

- 4. Take the dot product between the PAF at these points and the unit vector d_ij.

(1)Python

# Find E

paf_scores = np.dot(paf_interp, d_ij)

avg_paf_score = sum(paf_scores)/len(paf_scores)

(2)C++

std::vector<float> pafScores;

float sumOfPafScores = 0;

int numOverTh = 0;

for(int l = 0; l< pafInterp.size();++l){

float score = pafInterp[l].first*distance.first + pafInterp[l].second*distance.second;

sumOfPafScores += score;

if(score > pafScoreTh){

++numOverTh;

}

pafScores.push_back(score);

}

float avgPafScore = sumOfPafScores/((float)pafInterp.size());

- 5. If more than 70% of the points have a score above the PAF threshold (0.1 here), the pair is considered valid.

(1)Python

# Check if the connection is valid
# If the fraction of interpolated vectors aligned with the PAF is higher than the threshold -> valid pair
if ( len(np.where(paf_scores > paf_score_th)[0]) / n_interp_samples ) > conf_th :
    if avg_paf_score > maxScore:
        max_j = j
        maxScore = avg_paf_score
        found = 1

(2)C++

if(((float)numOverTh)/((float)nInterpSamples) > confTh){

if(avgPafScore > maxScore){

maxJ = j;

maxScore = avgPafScore;

found = true;

}

}
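Steps 4 and 5 combine into a short test. The sketch below uses plain Python and synthetic PAF samples (values invented for illustration); score_connection is a hypothetical name, and the two thresholds are the ones used in this post.

```python
def score_connection(paf_vectors, d_ij, paf_score_th=0.1, conf_th=0.7):
    """Return (is_valid, average_score) for one candidate pair.

    paf_vectors: list of (Lx, Ly) PAF samples along the segment A -> B
    d_ij:        unit vector (dx, dy) pointing from A to B
    """
    # Dot product of each PAF sample with the limb direction.
    scores = [lx * d_ij[0] + ly * d_ij[1] for lx, ly in paf_vectors]
    avg_score = sum(scores) / len(scores)
    # Count the samples that agree with the limb direction strongly enough.
    aligned = sum(1 for s in scores if s > paf_score_th)
    return aligned / len(scores) > conf_th, avg_score


d_ij = (1.0, 0.0)              # candidate limb points along +x
along = [(0.8, 0.0)] * 10      # PAF agrees with the limb direction
across = [(0.0, 0.8)] * 10     # PAF is perpendicular to it
print(score_connection(along, d_ij)[0])    # True
print(score_connection(across, d_ij)[0])   # False
```

The comparison is strict, so a candidate with exactly 7 of 10 aligned samples is rejected, matching the `> conf_th` test in the code above.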

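3.8 Assemble person-wise keypoints

Now that all the keypoints have been joined into pairs, we can assemble the pairs into full-body poses for multiple people.

Let us see how this is done in code; the snippets in this section belong to the getPersonwiseKeypoints() function in the provided code:

- 1. We first create empty lists to store the keypoints of each person. Then we go through each pair and check whether partA of the pair is already present in any of the lists. If it is, that keypoint belongs to this list, and partB of the pair should belong to the same person as well. So we add partB of the pair to the list where partA was found.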

(1)Python

for j in range(len(personwiseKeypoints)):
    if personwiseKeypoints[j][indexA] == partAs[i]:
        person_idx = j
        found = 1
        break

if found:
    personwiseKeypoints[person_idx][indexB] = partBs[i]

(2)C++

for(int j = 0; !found && j < personwiseKeypoints.size();++j){

if(indexA < personwiseKeypoints[j].size() &&

personwiseKeypoints[j][indexA] == localValidPairs[i].aId){

personIdx = j;

found = true;

}

}/* j */

if(found){

personwiseKeypoints[personIdx].at(indexB) = localValidPairs[i].bId;

}

- 2. If partA is not present in any of the lists, the pair belongs to a new person who is not in the list yet, so a new list is created.

(1)Python

elif not found and k < 17:
    row = -1 * np.ones(19)
    row[indexA] = partAs[i]
    row[indexB] = partBs[i]

(2)C++

else if(k < 17){

std::vector<int> lpkp(std::vector<int>(18,-1));

lpkp.at(indexA) = localValidPairs[i].aId;

lpkp.at(indexB) = localValidPairs[i].bId;

personwiseKeypoints.push_back(lpkp);

}
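Both cases fit in a compact pure-Python sketch. It follows the logic of getPersonwiseKeypoints() but leaves out the running score column and the k < 17 guard, and it runs on invented keypoint IDs for two people and two limb types, so treat it as an illustration and not as a drop-in replacement.

```python
def assemble_people(valid_pairs, pose_pairs, n_points=18):
    """Group keypoint IDs into one row per person (-1 marks a missing part)."""
    people = []
    for (index_a, index_b), pairs in zip(pose_pairs, valid_pairs):
        for id_a, id_b in pairs:
            for person in people:
                if person[index_a] == id_a:
                    person[index_b] = id_b     # partA already known: attach partB
                    break
            else:
                row = [-1] * n_points          # partA unseen: start a new person
                row[index_a] = id_a
                row[index_b] = id_b
                people.append(row)
    return people


pose_pairs = [(1, 2), (2, 3)]                  # Neck -> R-Sho, R-Sho -> R-Elb
valid_pairs = [[(10, 20), (11, 21)],           # two necks joined to two shoulders
               [(20, 30), (21, 31)]]           # the same shoulders joined to elbows
people = assemble_people(valid_pairs, pose_pairs)
for person in people:
    print(person[1:4])
# [10, 20, 30]
# [11, 21, 31]
```

The order of the pairs matters: a limb can only be attached once its partA has been placed, which is why the pair list starts at the neck and works outward.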

3.9 Results

We go through each person and draw the skeleton on the input image.

(1)Python

for i in range(17):
    for n in range(len(personwiseKeypoints)):
        index = personwiseKeypoints[n][np.array(POSE_PAIRS[i])]
        if -1 in index:
            continue
        B = np.int32(keypoints_list[index.astype(int), 0])
        A = np.int32(keypoints_list[index.astype(int), 1])
        # Plain Python ints keep cv2.line happy across OpenCV versions
        cv2.line(frameClone, (int(B[0]), int(A[0])), (int(B[1]), int(A[1])), colors[i], 3, cv2.LINE_AA)

cv2.imshow("Detected Pose" , frameClone)
cv2.waitKey(0)

(2)C++

for(int i = 0; i< nPoints-1;++i){

for(int n = 0; n < personwiseKeypoints.size();++n){

const std::pair<int,int>& posePair = posePairs[i];

int indexA = personwiseKeypoints[n][posePair.first];

int indexB = personwiseKeypoints[n][posePair.second];

if(indexA == -1 || indexB == -1){

continue;

}

const KeyPoint& kpA = keyPointsList[indexA];

const KeyPoint& kpB = keyPointsList[indexB];

cv::line(outputFrame,kpA.point,kpB.point,colors[i],3,cv::LINE_AA);

}

}

cv::imshow("Detected Pose",outputFrame);

cv::waitKey(0);
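Before drawing, each person row has to be turned into pixel segments. A sketch of that lookup without OpenCV follows (hypothetical helper and made-up coordinates); the resulting endpoints are what would be passed to cv2.line or cv::line.

```python
def limb_segments(person, keypoints_list, pose_pairs):
    """Return ((xA, yA), (xB, yB)) for every limb whose two ends were found."""
    segments = []
    for index_a, index_b in pose_pairs:
        id_a, id_b = person[index_a], person[index_b]
        if id_a == -1 or id_b == -1:
            continue                       # one end missing: skip this limb
        xa, ya = keypoints_list[id_a][:2]
        xb, yb = keypoints_list[id_b][:2]
        segments.append(((xa, ya), (xb, yb)))
    return segments


keypoints_list = [(125, 130, 0.85), (90, 135, 0.80)]   # ID 0: neck, ID 1: right shoulder
person = [-1] * 18
person[1], person[2] = 0, 1                             # neck and right shoulder were found
print(limb_segments(person, keypoints_list, [(1, 2), (2, 3)]))
# [((125, 130), (90, 135))]
```

The second pair is skipped because the right elbow is missing, which corresponds to the `-1` check in the drawing loops above.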

References

https://learnopencv.com/multi-person-pose-estimation-in-opencv-using-openpose/