Android inter-process communication: IPC, RPC, and the Binder system, called from the C application layer

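1. Concepts

IPC (inter-process communication): process A sends data to process B across the process boundary.

RPC (remote procedure call): suppose process A wants to turn on an LED by calling led_open. It sends the request to process B over IPC; B unpacks the data and calls its own led_open. To A it looks like a direct call to led_open, but in reality A only sends data to B, and B is the one that drives the hardware.

The server program in process B must first register its service with servicemanager. Process A then queries servicemanager for the led service and gets back a handle that refers to process B.

The data usually lives in a buffer such as char buf[1024]; processes A and B exchange data in both directions through it.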
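The idea of "A packs a request into a buffer, B unpacks it and calls the real function" can be sketched in a single process. This is only an illustration under stated assumptions: the codes LED_OPEN and LED_CLOSE, the variable led_state, and the helpers rpc_pack and rpc_dispatch are hypothetical names that do not exist in Binder, and the buffer is handed over by a plain function call instead of a kernel driver.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical function codes for this sketch (not Binder constants). */
enum { LED_OPEN = 1, LED_CLOSE = 2 };

static int led_state = 0;   /* stands in for the real hardware */

/* "Process B": the functions that actually touch the device. */
static int led_open(void)  { led_state = 1; return 0; }
static int led_close(void) { led_state = 0; return 0; }

/* "Process A": pack the function code into the buffer; returns bytes used. */
static size_t rpc_pack(char *buf, size_t cap, uint32_t code)
{
    assert(cap >= sizeof(code));
    memcpy(buf, &code, sizeof(code));
    return sizeof(code);
}

/* "Process B": unpack the code and call the matching local function. */
static int rpc_dispatch(const char *buf, size_t len)
{
    uint32_t code;

    if (len < sizeof(code))
        return -1;              /* request too short */
    memcpy(&code, buf, sizeof(code));

    switch (code) {
    case LED_OPEN:  return led_open();
    case LED_CLOSE: return led_close();
    default:        return -1;  /* unknown function code */
    }
}
```

In the real system the buffer travels through the binder driver, and the code plays the same role as the code argument of binder_call.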

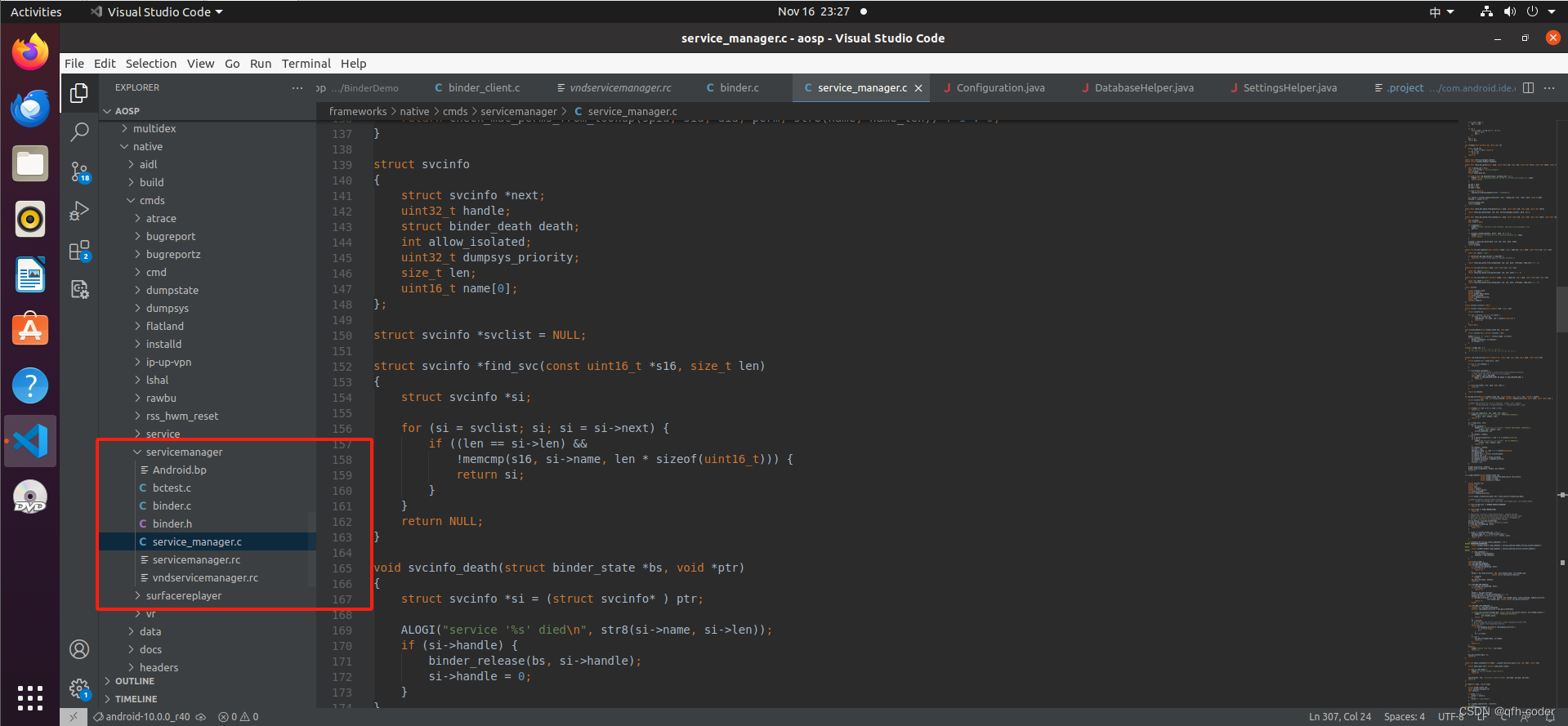

Google's reference source directory

binder.c (C helper functions that Google wrote to wrap the driver interface)

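2. Flow

The system starts servicemanager first. It then does the following:

- Opens the binder driver.

- Tells the driver that it is the servicemanager.

- Loops forever (while(1)): reads from the driver, sleeps when there is no data, and parses the data when it arrives.

- When a server registers a service, records the service name in a linked list.

- When a client requests a service, looks it up in the list and returns a handle for the server process.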
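The last two steps amount to a small name-to-handle registry. The sketch below shows one way such a linked list could look; it is not the servicemanager source. The names svc_entry, svc_add and svc_lookup are hypothetical, names are plain ASCII strings here, and handle 0 is treated as "not found" because 0 is reserved for servicemanager itself.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define SVC_NAME_MAX 64   /* hypothetical limit for this sketch */

struct svc_entry {
    struct svc_entry *next;
    uint32_t handle;
    char name[SVC_NAME_MAX];
};

static struct svc_entry *svc_list = NULL;

static struct svc_entry *svc_find(const char *name)
{
    for (struct svc_entry *e = svc_list; e; e = e->next)
        if (strcmp(e->name, name) == 0)
            return e;
    return NULL;
}

/* Register a service, or update its handle if the name already exists. */
static int svc_add(const char *name, uint32_t handle)
{
    assert(name != NULL);
    if (handle == 0 || strlen(name) >= SVC_NAME_MAX)
        return -1;

    struct svc_entry *e = svc_find(name);
    if (e) {
        e->handle = handle;
        return 0;
    }

    e = malloc(sizeof(*e));
    if (!e)
        return -1;
    strcpy(e->name, name);      /* length was checked above */
    e->handle = handle;
    e->next = svc_list;         /* push onto the front of the list */
    svc_list = e;
    return 0;
}

/* Look a service up by name; 0 means "no such service". */
static uint32_t svc_lookup(const char *name)
{
    struct svc_entry *e = svc_find(name);
    return e ? e->handle : 0;
}
```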

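Server program:

- Opens the driver.

- Registers its service by sending the service name to servicemanager.

- Loops forever (while(1)) reading from the driver: sleeps when there is no data, otherwise parses the data and calls the matching low-level function.

Client program:

- Opens the driver.

- Gets the service by querying servicemanager, which returns a handle.

- Sends data to that handle.

Open the driver

Tell the driver that this process is servicemanager

Read data in a loop

binder_loop reads the data

Parse the data

Process the request and send the reply to the client

Client gets a service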

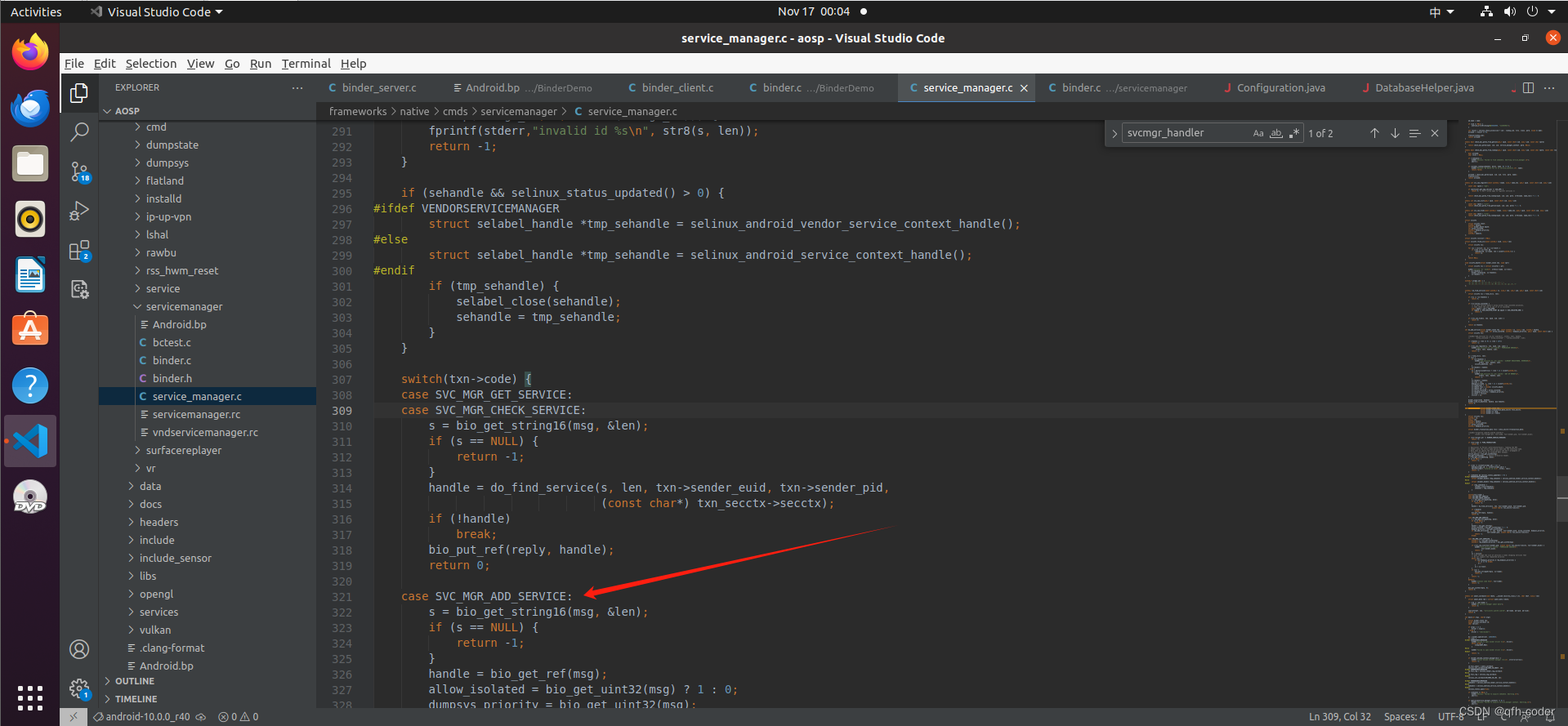

Registering a service

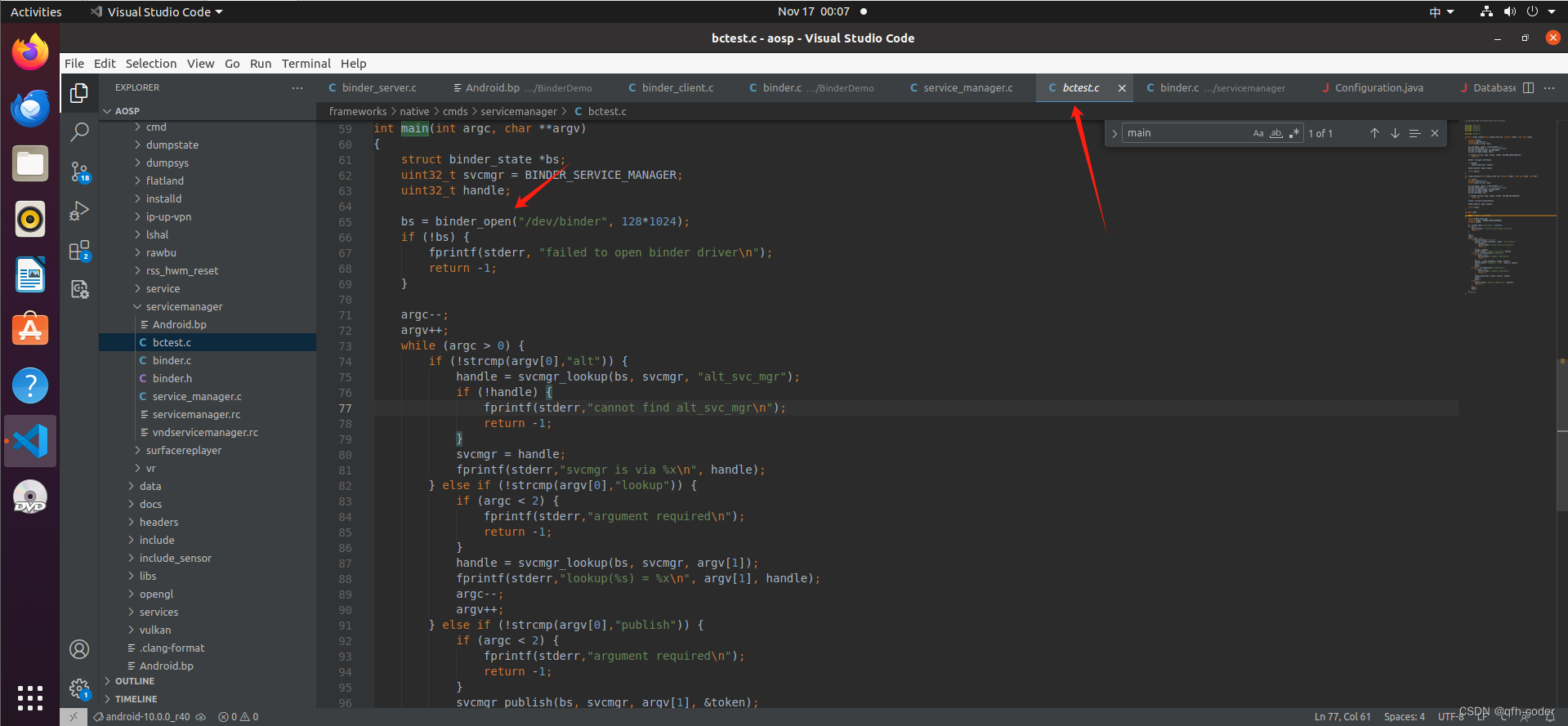

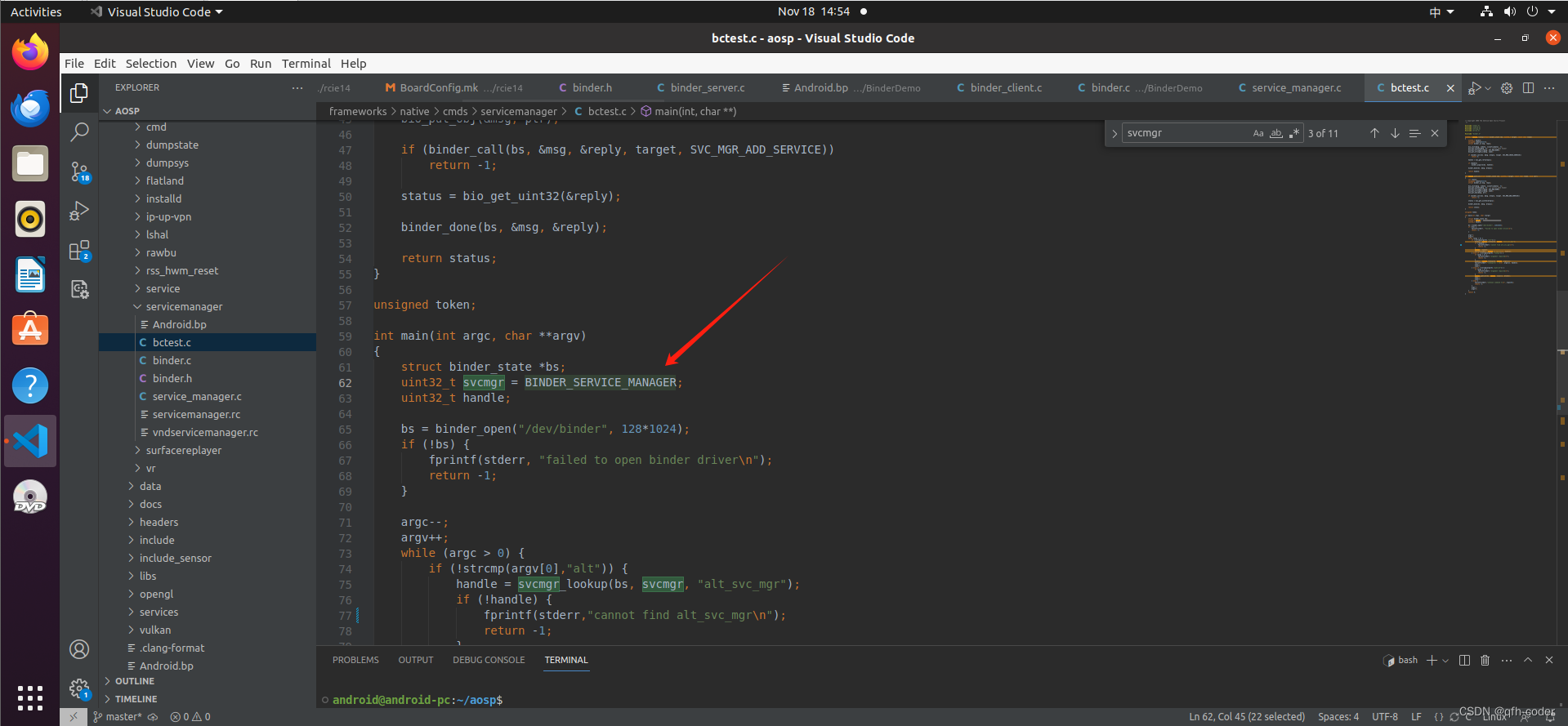

3. bctest.c

3.1 Registering a service: open the binder driver

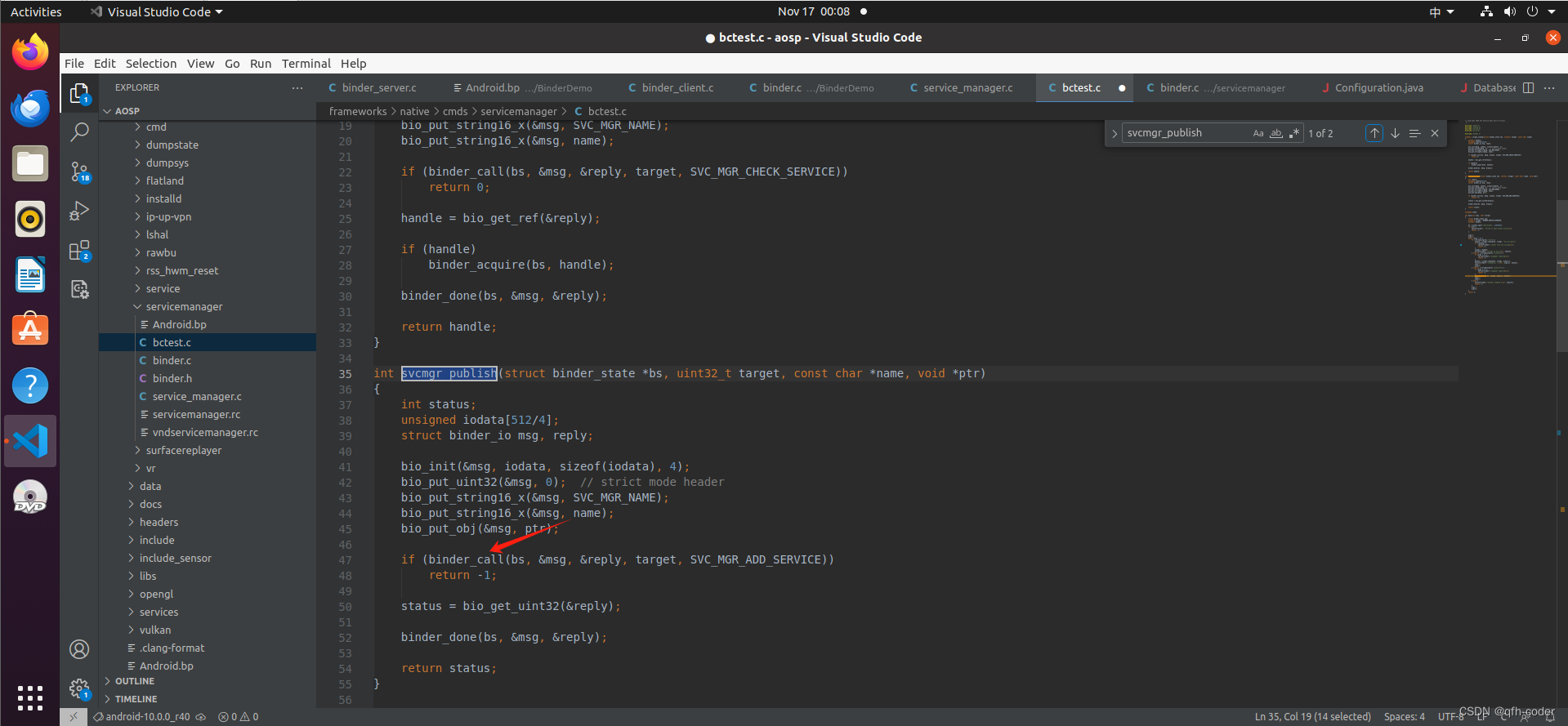

To register a service, build the message data

and send it to the target.

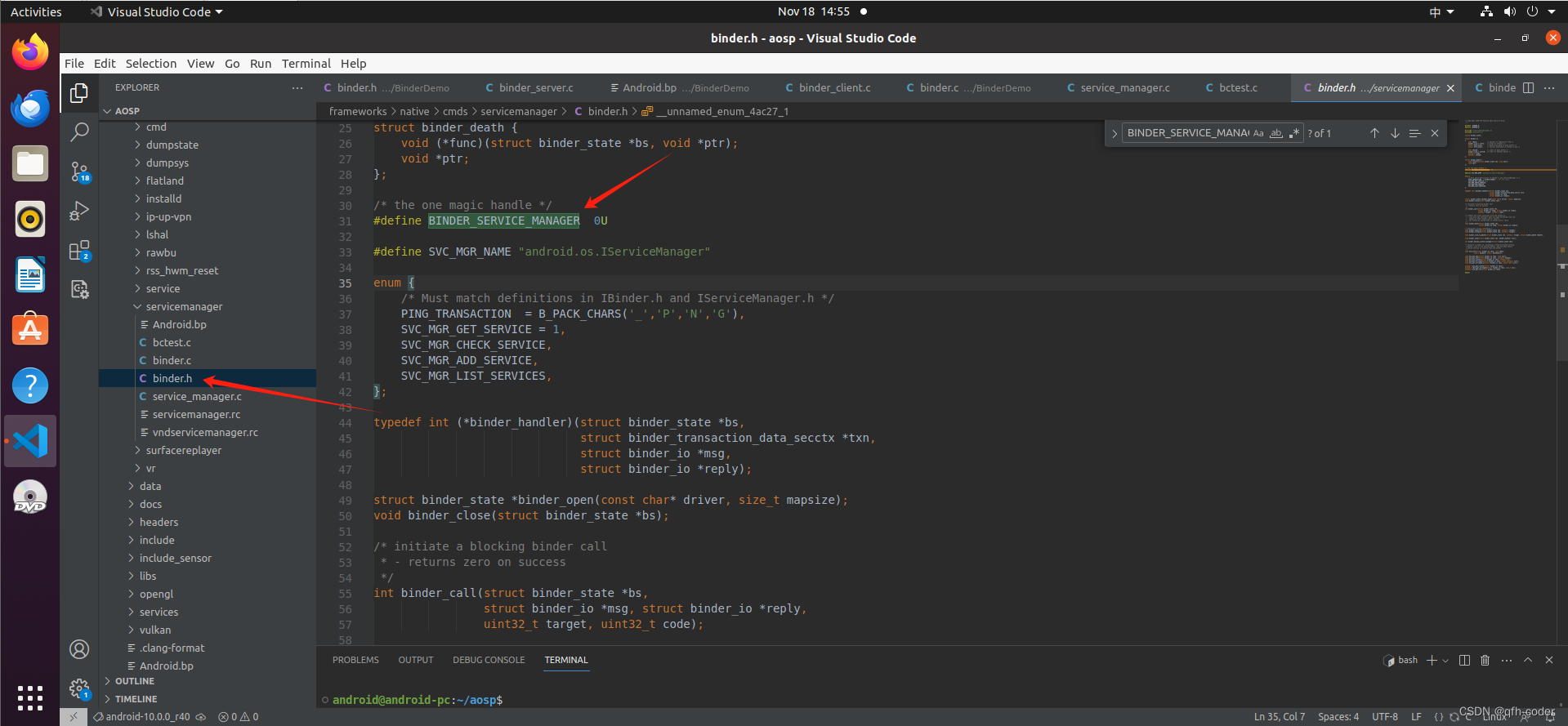

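Let's look at this value.

It is defined in the header file. The handle is 0; in Binder IPC, handle 0 always refers to the servicemanager process.

The request goes through binder_call; code selects which servicemanager function to invoke, here the "add service" one.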

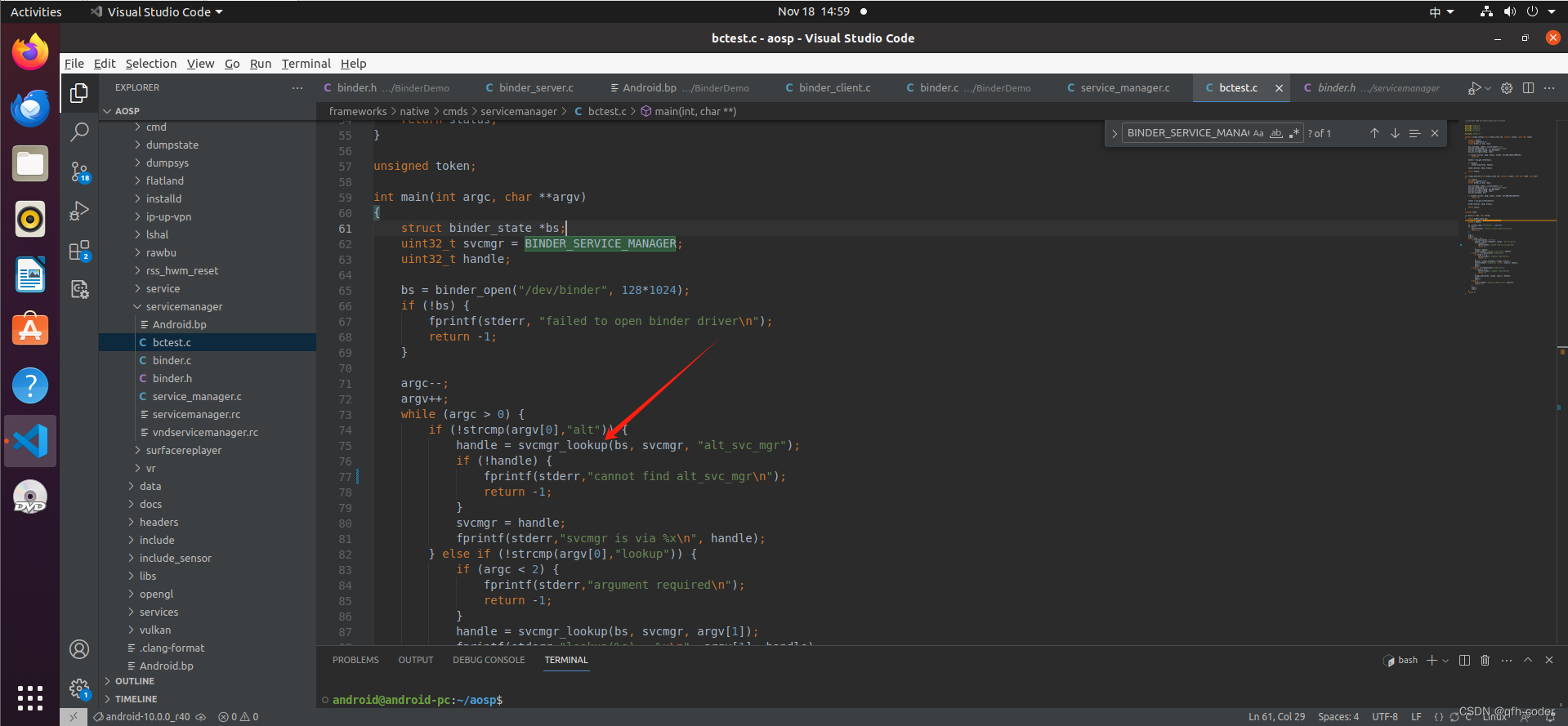

3.2 Getting a service

Open the driver, then query servicemanager's service list.

if (binder_call(bs, &msg, &reply, target, SVC_MGR_CHECK_SERVICE))

return 0;

This again goes through binder_call: msg carries the name of the service you want, and reply carries the data that servicemanager sends back.

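4. binder_call

binder_call implements the remote call. Its parameters say where the data goes and what to invoke:

target: the destination process

code: the function to call

msg: the arguments

reply: the return value

The data to send has been placed in a buffer managed through binder_io; binder_call wraps it in a transaction

and calls ioctl to send it.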

int binder_call(struct binder_state *bs,

struct binder_io *msg, struct binder_io *reply,

uint32_t target, uint32_t code)

{

int res;

struct binder_write_read bwr;

struct {

uint32_t cmd;

struct binder_transaction_data txn;

} __attribute__((packed)) writebuf;

unsigned readbuf[32];

if (msg->flags & BIO_F_OVERFLOW) {

fprintf(stderr,"binder: txn buffer overflow\n");

goto fail;

}

writebuf.cmd = BC_TRANSACTION;

writebuf.txn.target.handle = target;

writebuf.txn.code = code;

writebuf.txn.flags = 0;

writebuf.txn.data_size = msg->data - msg->data0;

writebuf.txn.offsets_size = ((char*) msg->offs) - ((char*) msg->offs0);

writebuf.txn.data.ptr.buffer = (uintptr_t)msg->data0;

writebuf.txn.data.ptr.offsets = (uintptr_t)msg->offs0;

bwr.write_size = sizeof(writebuf);

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) &writebuf;

hexdump(msg->data0, msg->data - msg->data0);

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

// send the data with ioctl; bwr describes both the write buffer and the read buffer

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder: ioctl failed (%s)\n", strerror(errno));

goto fail;

}

res = binder_parse(bs, reply, (uintptr_t) readbuf, bwr.read_consumed, 0);

if (res == 0) return 0;

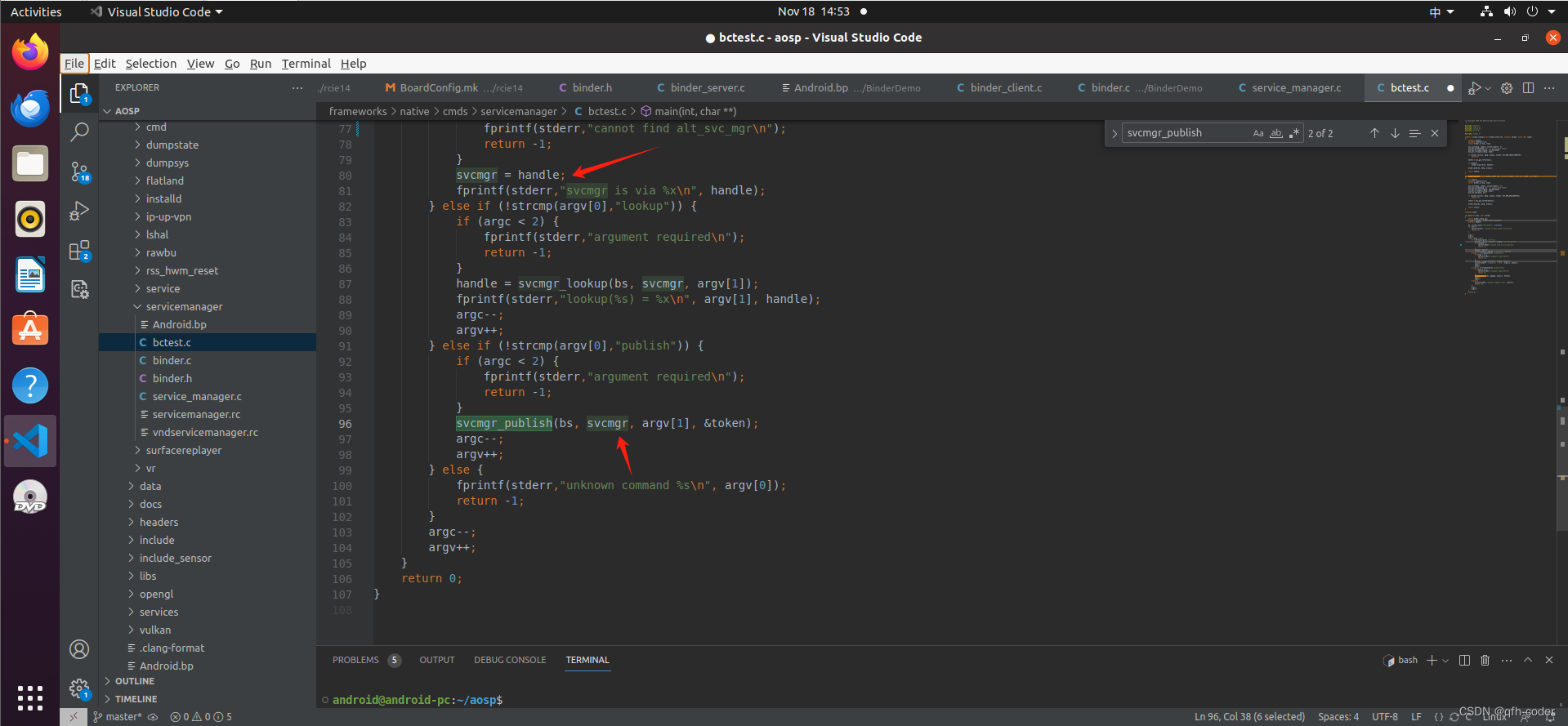

if (res < 0) goto fail;

}

fail:

memset(reply, 0, sizeof(*reply));

reply->flags |= BIO_F_IOERROR;

return -1;

}

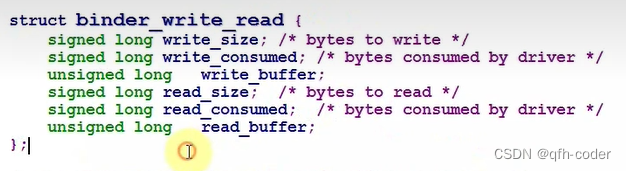

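The data has to be converted: binder_call takes its arguments as binder_io, but the kernel driver, reached through res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);, expects bwr to be the binder_write_read type shown in the figure above.

Receiving works the other way round: ioctl fills in a binder_write_read, which is then converted back into a binder_io.

For svcmgr_lookup, see the source below: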

uint32_t svcmgr_lookup(struct binder_state *bs, uint32_t target, const char *name)

{

uint32_t handle;

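// backing buffer that binder_io manages

unsigned iodata[512/4];

// the binder_io structures for the request and the reply

struct binder_io msg, reply;

// once msg is initialized, data can be put into the buffer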

bio_init(&msg, iodata, sizeof(iodata), 4);

bio_put_uint32(&msg, 0); // strict mode header

bio_put_string16_x(&msg, SVC_MGR_NAME);

bio_put_string16_x(&msg, name);

// send the binder_io to the driver

if (binder_call(bs, &msg, &reply, target, SVC_MGR_CHECK_SERVICE))

return 0;

handle = bio_get_ref(&reply);

if (handle)

binder_acquire(bs, handle);

binder_done(bs, &msg, &reply);

return handle;

}

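Supplementary demo analysis notes

A function exposed by the remote server is called a binder service. ServiceManager is a process started when Android boots. RPC is implemented through the Binder driver, which is a character device driver.

Define the service: its functions and methods

Register the service: through ServiceManager

Get the service: done by the client

Once the client has the service it issues the remote call; that is the principle of RPC.

At the C level, the binder driver is operated through system calls.

binder.c wraps those system calls.

Server side

The value is 0: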

uint32_t svcmgr = BINDER_SERVICE_MANAGER;

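Initialize the driver and map memory. bs is the binder_state returned by binder_open; it holds the file descriptor of the binder driver.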

bs = binder_open("/dev/binder", 128*1024);

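Register the hello service with ServiceManager. svcmgr = 0 is the handle that addresses the ServiceManager process. hellobinder_service_handler is the callback: a function pointer that is passed to the binder driver, which records it against the hello service. When a client later calls the hello service, the pointer comes back in txn->target.ptr.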

ret = svcmgr_publish(bs, svcmgr, "hello", hellobinder_service_handler);

Loop reading and parsing data from the binder driver; this also keeps the server process from exiting.

binder_loop(bs, test_server_handler);

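The binder driver lives in the kernel, and the kernel delivers the client's data to the server. Just as the ServiceManager process does, the server receives data with binder_loop, which parses each incoming packet and passes the result to our callback test_server_handler. That is how the server gets the data; the callback then works out which service the client's request is meant for.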

int test_server_handler(struct binder_state *bs,

struct binder_transaction_data_secctx *txn_secctx,

struct binder_io *msg,

struct binder_io *reply)

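bs: the binder driver state (it holds the file descriptor)

txn_secctx: the parsed transaction data, of type binder_transaction_data_secctx

msg: the arguments for the server-side function

reply: the data returned to the client

Take out the data sent by the client: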

struct binder_transaction_data *txn = &txn_secctx->transaction_data;

Declare a handler function pointer:

int (*handler)(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply);

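txn->target.ptr is the server's callback pointer that the binder driver recorded for this service. Casting it back to the handler type yields exactly the callback for the requested service: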

handler = (int (*)(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply))txn->target.ptr;

Inside the callback, code identifies which function to call. A 0 is written into reply and returned to the client:

switch(txn->code) {

case HELLO_BINDER:

hellobinder();

bio_put_uint32(reply, 0); /* no exception */

return 0;

// handle a function that takes an argument

case HELLO_BINDER_TO:

s = bio_get_string16(msg, &len); //"IHelloService"

s = bio_get_string16(msg, &len); // name

if (s == NULL) {

return -1;

}

for (i = 0; i < len; i++)

name[i] = s[i];

name[i] = '\0';

// then call the target function and pass the argument to it

i = hellobinder_to(name);

bio_put_uint32(reply, 0); /* no exception */

bio_put_uint32(reply, i);

break;

Client

The client also initializes the driver first:

bs = binder_open("/dev/binder", 128*1024);

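Look up the service. bs is the binder state, svcmgr says the target process is servicemanager, "hello" is the service name, and g_handle is the returned handle that identifies the service's process.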

g_handle = svcmgr_lookup(bs, svcmgr, "hello");

Then issue the remote call (RPC).

First build the data that the client sends to the server: declare a binder_io structure and initialize it with bio_init.

struct binder_io msg, reply;

bio_init(&msg, iodata, sizeof(iodata), 4);

Put the parameters into the structure: one integer and one string.

bio_put_uint32(&msg, 0); // strict mode header

bio_put_string16_x(&msg, "IHelloService");

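Issue the RPC. g_bs is the binder state that holds the driver's file descriptor; msg carries all the arguments sent to the server; reply receives the data sent back to the client; g_handle is the handle of the server's service, through which the server process is found; HELLO_BINDER is the code of the target function on the server.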

if (binder_call(g_bs, &msg, &reply, g_handle, HELLO_BINDER))

return ;

After reading the returned reply, finish the call with binder_done:

binder_done(g_bs, &msg, &reply);

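Android cross-process calls: supplementary Binder analysis

File system call interface

mmap, open and ioctl are system calls that end up in the driver's own handler functions.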

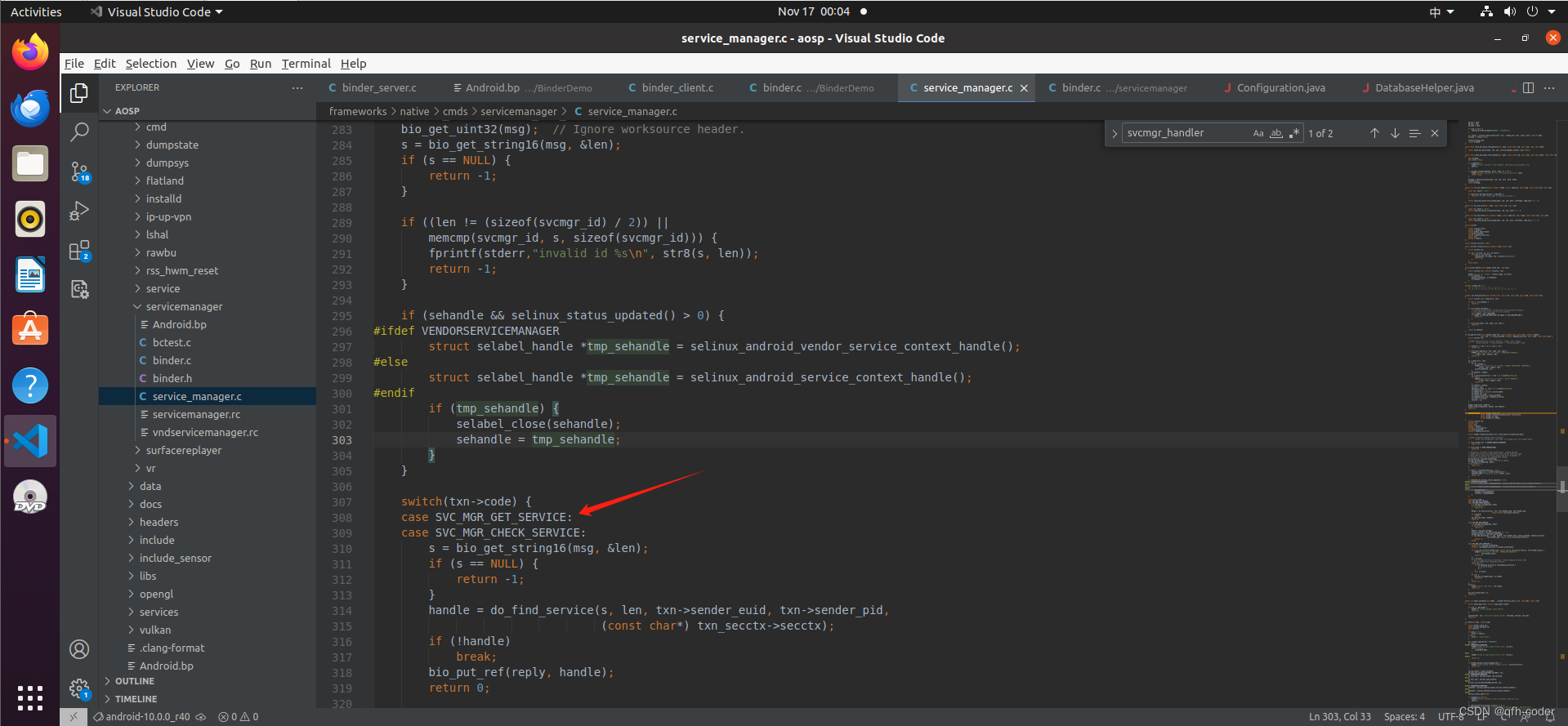

Service registration

/home/android/aosp/frameworks/native/cmds/servicemanager/service_manager.c

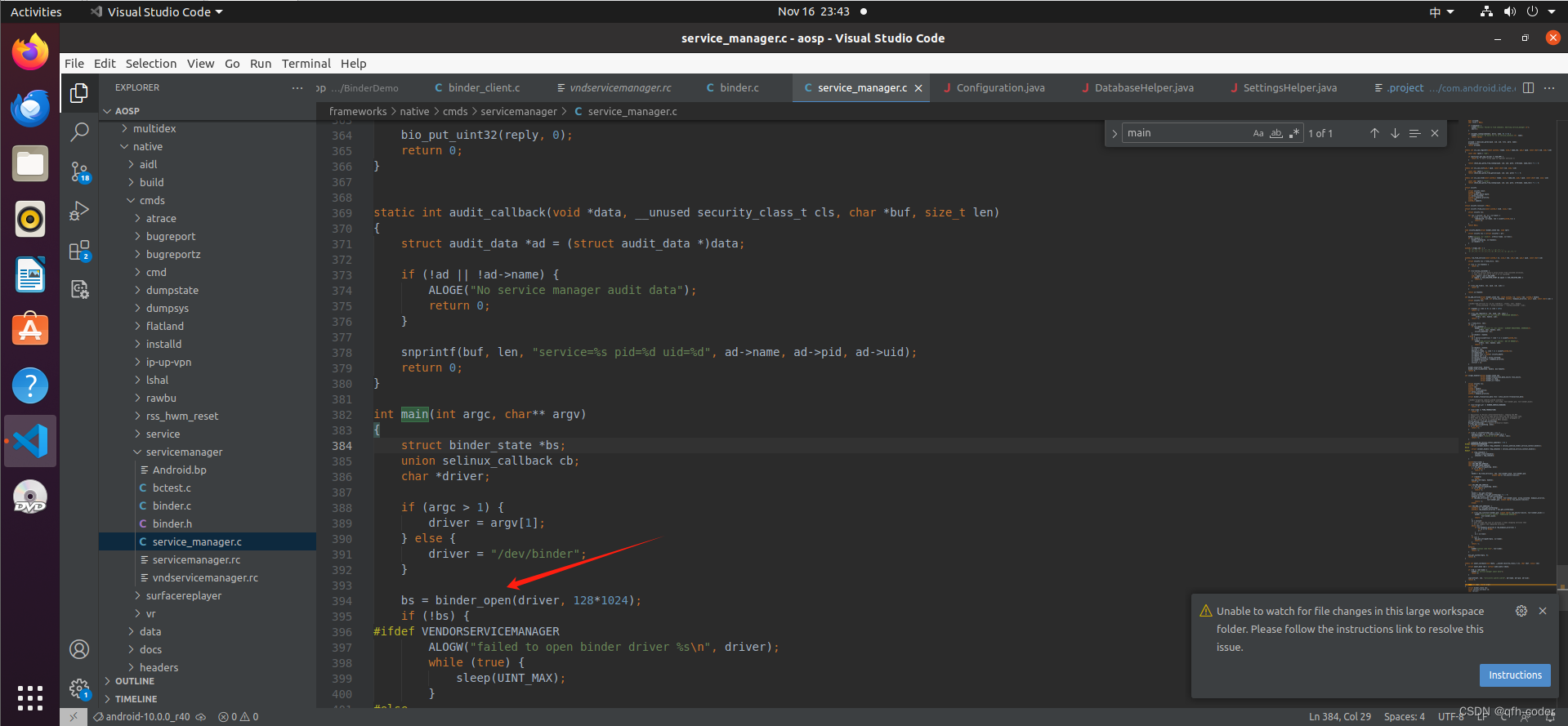

Initialize the binder driver; the mmap size is 128 KB.

bs = binder_open(driver, 128*1024);

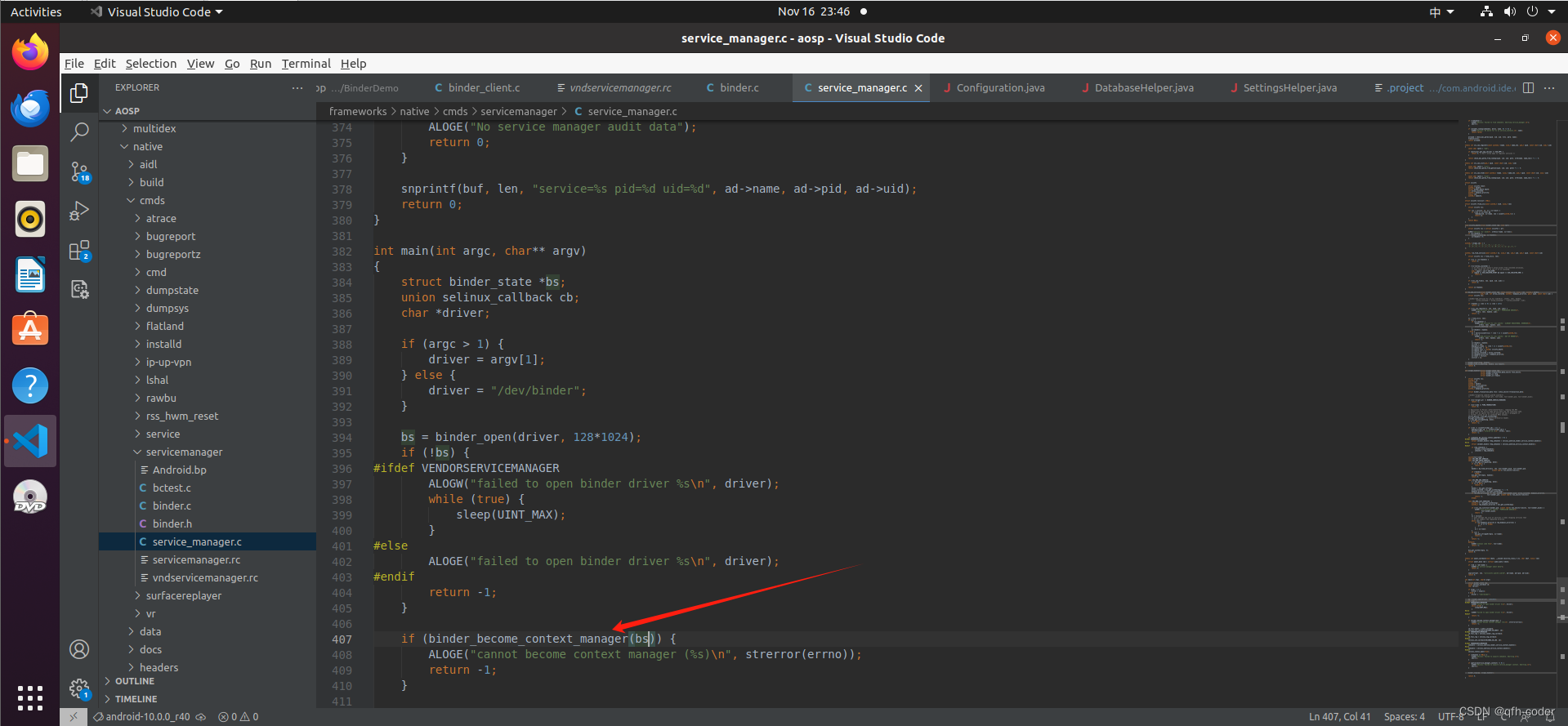

Register the current process as servicemanager; it becomes the manager of system services.

if (binder_become_context_manager(bs)) {

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

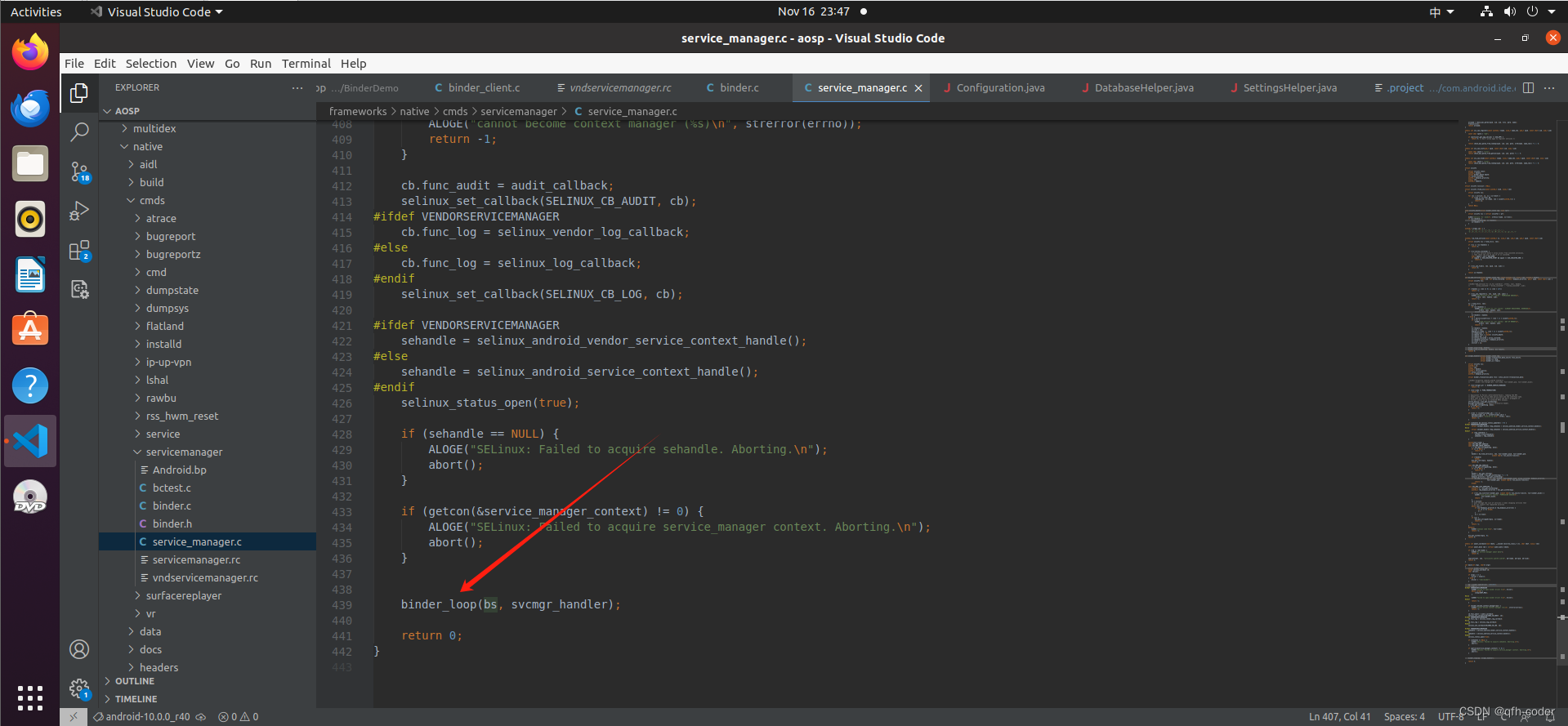

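Enter the loop and wait for remote calls from other processes: read data from the binder driver, parse it, and pass it to svcmgr_handler, which is the process's callback function. Before looping, an ioctl sends a command to the binder driver to tell it that the loop is starting.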

binder_loop(bs, svcmgr_handler);

binder_open

driver is the path of the driver's device node; the size of the mapping is 128*1024.

if (argc > 1) {

driver = argv[1];

} else {

driver = "/dev/binder";

}

bs = binder_open(driver, 128*1024);

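In the source, bs stores the value returned by binder_open. The open system call opens /dev/binder and yields a file descriptor; mmap sets up the mapping between user space and kernel space. mapsize is the size of the mapped region.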

struct binder_state *binder_open(const char* driver, size_t mapsize)

{

struct binder_state *bs;

// allocate memory for the state structure

bs = malloc(sizeof(*bs));

bs->fd = open(driver, O_RDWR | O_CLOEXEC);

bs->mapsize = mapsize;

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

if (bs->mapped == MAP_FAILED) {

fprintf(stderr,"binder: cannot map device (%s)\n",

strerror(errno));

goto fail_map;

}

}

binder_become_context_manager

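This makes the process the manager of binder services. It builds the flat_binder_object to send and clears it with memset; ioctl then sends it from the application layer to the binder driver. BINDER_SET_CONTEXT_MGR_EXT is a driver command that tells the driver to register this process as servicemanager.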

int binder_become_context_manager(struct binder_state *bs)

{

struct flat_binder_object obj;

memset(&obj, 0, sizeof(obj));

// set the flags

obj.flags = FLAT_BINDER_FLAG_TXN_SECURITY_CTX;

int result = ioctl(bs->fd, BINDER_SET_CONTEXT_MGR_EXT, &obj);

// on failure, register again with the older command

// fallback to original method

if (result != 0) {

android_errorWriteLog(0x534e4554, "121035042");

result = ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);

}

return result;

}

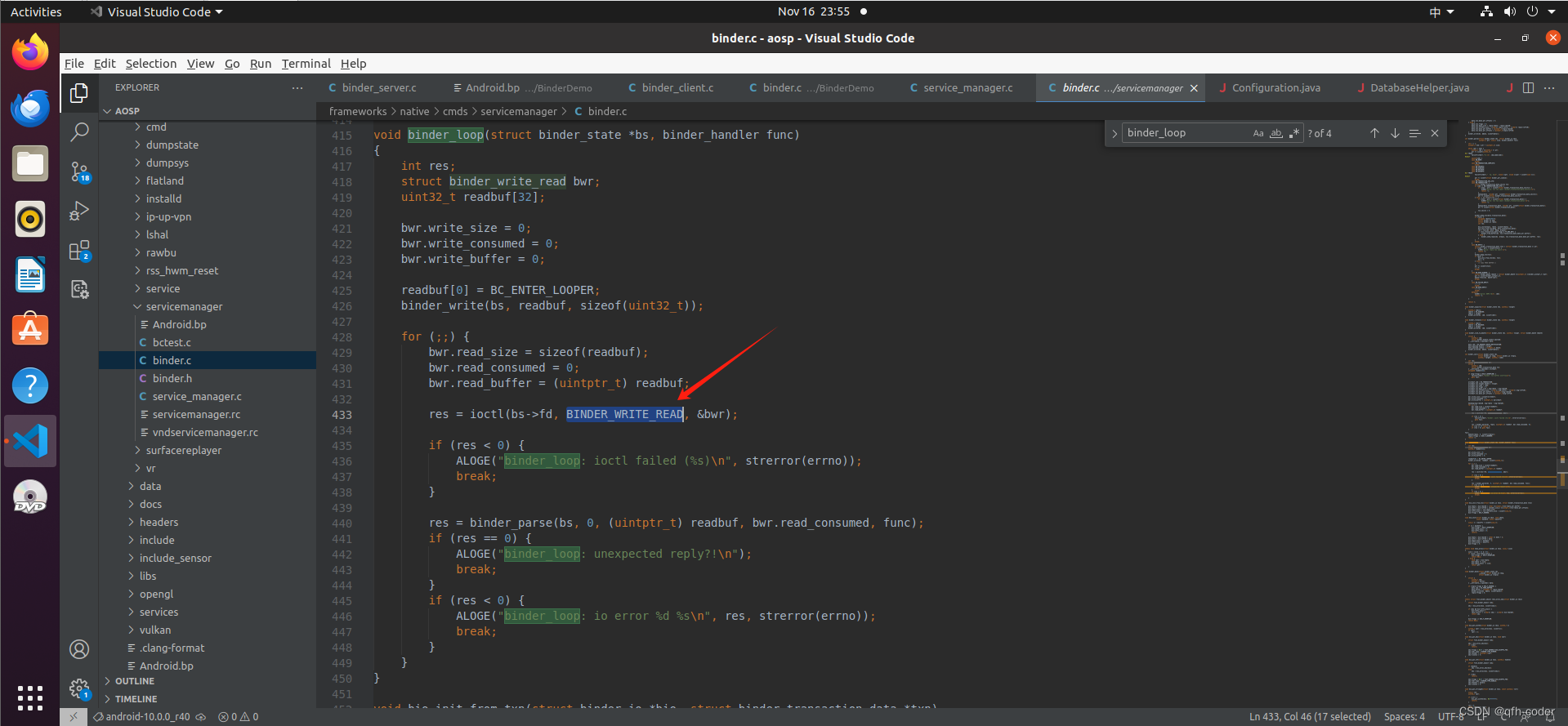

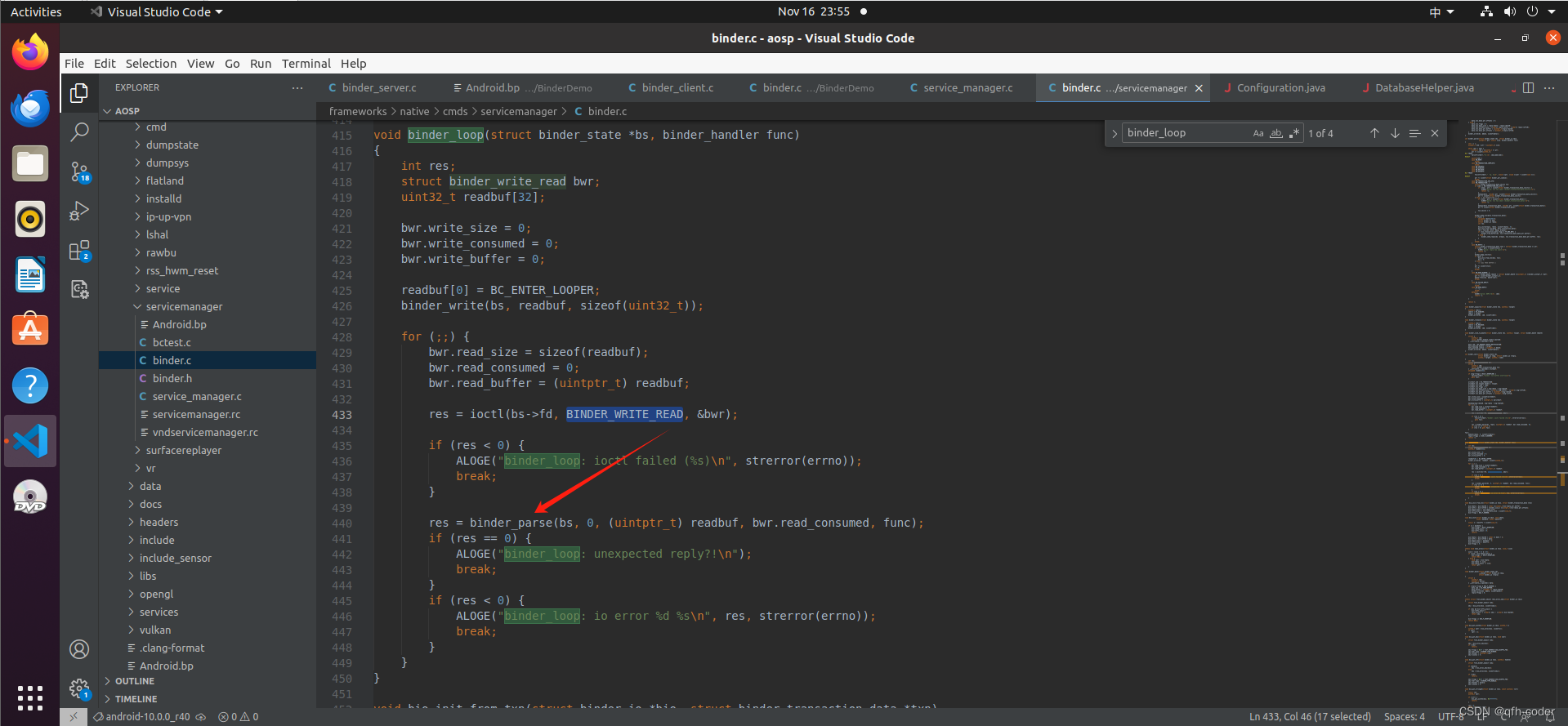

binder_loop

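It reads data, parses it, and hands it to the process's callback so that the application layer can get at it.

BC_ENTER_LOOPER tells the driver that the application layer is about to enter its loop.

binder_write wraps ioctl to write (that is, send) data to the driver.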

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(uint32_t));

// loop forever

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

// read data: the driver fills readbuf and updates bwr

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

// parse the data read from the driver and hand it to the callback func

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

int binder_write(struct binder_state *bs, void *data, size_t len)

{

struct binder_write_read bwr;

int res;

// data to write

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) data;

// nothing to read

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

// issue the request: write the data into the kernel

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder_write: ioctl failed (%s)\n",

strerror(errno));

}

return res;

}

struct binder_write_read {

binder_size_t write_size; /* bytes to write */

binder_size_t write_consumed; /* bytes consumed by driver */

binder_uintptr_t write_buffer;

binder_size_t read_size; /* bytes to read */

binder_size_t read_consumed; /* bytes consumed by driver */

binder_uintptr_t read_buffer;

};

struct {

// command

uint32_t cmd;

// transaction data to pack or unpack

struct binder_transaction_data txn;

} __attribute__((packed)) writebuf;

target says which process the data goes to, code says which server function to call remotely, and data points to the memory held by binder_io.

struct binder_transaction_data {

/* The first two are only used for bcTRANSACTION and brTRANSACTION,

* identifying the target and contents of the transaction.

*/

union {

/* target descriptor of command transaction */

__u32 handle;

/* target descriptor of return transaction */

binder_uintptr_t ptr;

} target;

binder_uintptr_t cookie; /* target object cookie */

__u32 code; /* transaction command */

/* General information about the transaction. */

__u32 flags;

pid_t sender_pid;

uid_t sender_euid;

binder_size_t data_size; /* number of bytes of data */

binder_size_t offsets_size; /* number of bytes of offsets */

/* If this transaction is inline, the data immediately

* follows here; otherwise, it ends with a pointer to

* the data buffer.

*/

union {

struct {

/* transaction data */

binder_uintptr_t buffer;

/* offsets from buffer to flat_binder_object structs */

binder_uintptr_t offsets;

} ptr;

__u8 buf[8];

} data;

};

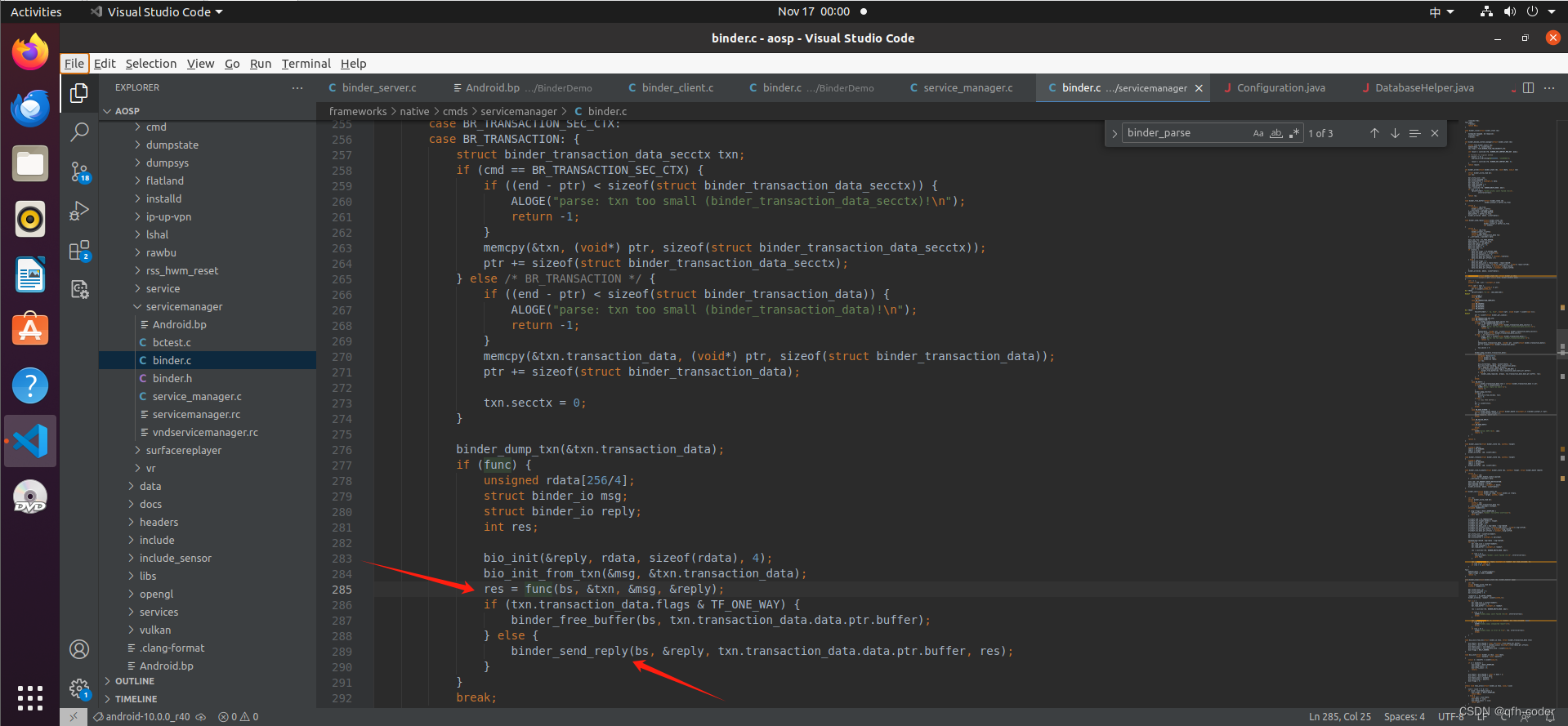

Parsing runs in a loop: uint32_t cmd = *(uint32_t *) ptr; extracts each command in turn.

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

switch(cmd) {

case BR_TRANSACTION_SEC_CTX:

case BR_TRANSACTION: {

struct binder_transaction_data_secctx txn;

if (cmd == BR_TRANSACTION_SEC_CTX) {

} else /* BR_TRANSACTION */ {

if ((end - ptr) < sizeof(struct binder_transaction_data)) {

ALOGE("parse: txn too small (binder_transaction_data)!\n");

return -1;

}

// copy the transaction out of the read buffer

memcpy(&txn.transaction_data, (void*) ptr, sizeof(struct binder_transaction_data));

ptr += sizeof(struct binder_transaction_data);

txn.secctx = 0;

}

binder_dump_txn(&txn.transaction_data);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, &txn.transaction_data);

// call the callback, which fills in reply

res = func(bs, &txn, &msg, &reply);

if (txn.transaction_data.flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn.transaction_data.data.ptr.buffer);

} else {

// send the reply back to the client

binder_send_reply(bs, &reply, txn.transaction_data.data.ptr.buffer, res);

}

}

break;

}

}

}

return r;

}
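The core of binder_parse is a walk over a byte stream in which every record starts with a 32-bit command, optionally followed by a payload. The sketch below shows that pattern on its own. It is an illustration under stated assumptions: the command values CMD_NOOP, CMD_VALUE and CMD_REPLY and the names parse_result and parse_stream are hypothetical, not driver constants. The return convention (1 by default, 0 once a reply record was seen, -1 on malformed input) is modelled on how binder_call and binder_loop test the result of binder_parse.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command codes for this sketch (not BR_* driver values). */
enum { CMD_NOOP = 1, CMD_VALUE = 2, CMD_REPLY = 3 };

struct parse_result {
    int      noops;       /* number of CMD_NOOP records seen */
    uint32_t value_sum;   /* sum of all CMD_VALUE payloads   */
};

/* Returns 0 if a reply record was seen, 1 if not, -1 on malformed input. */
static int parse_stream(const void *buf, size_t size, struct parse_result *out)
{
    const unsigned char *ptr = buf;
    const unsigned char *end = ptr + size;
    int r = 1;

    assert(out != NULL);
    while (ptr < end) {
        uint32_t cmd;

        if ((size_t)(end - ptr) < sizeof(cmd))
            return -1;                      /* truncated command word */
        memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(cmd);

        switch (cmd) {
        case CMD_NOOP:
            out->noops++;
            break;
        case CMD_VALUE: {
            uint32_t v;

            if ((size_t)(end - ptr) < sizeof(v))
                return -1;                  /* payload too small */
            memcpy(&v, ptr, sizeof(v));
            ptr += sizeof(v);               /* skip past the payload */
            out->value_sum += v;
            break;
        }
        case CMD_REPLY:
            r = 0;                          /* the caller got its answer */
            break;
        default:
            return -1;                      /* unknown command */
        }
    }
    return r;
}
```

Checking the remaining length before every read is the same guard as the "txn too small" test in the listing above.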

binder_io

The data structure: the client writes data into it and the service reads data out of it.

struct binder_io

{

char *data; /* pointer to read/write from */

binder_size_t *offs; /* array of offsets */

size_t data_avail; /* bytes available in data buffer */

size_t offs_avail; /* entries available in offsets array */

char *data0; /* start of data buffer */

binder_size_t *offs0; /* start of offsets buffer */

uint32_t flags;

uint32_t unused;

};

Usage

//client

bio_init(&msg, iodata, sizeof(iodata), 4);

bio_put_uint32(&msg, 0); // strict mode header

bio_put_string16_x(&msg, "IHelloService");

//server

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len); //"IHelloService"

s = bio_get_string16(msg, &len); // name
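The point of the usage above is that the reader must take values out in exactly the order the writer put them in. The sketch below demonstrates that symmetry with a plain byte buffer. It assumes a layout of a 32-bit length followed by one 16-bit unit per ASCII character plus a terminator, padded to 4 bytes, which is my understanding of the string helpers and should be checked against binder.c. The names cursor, put_u32, get_u32, put_str16 and get_str16 are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

struct cursor { unsigned char *p; size_t avail; };

static size_t align4(size_t n) { return (n + 3) & ~(size_t)3; }

static int put_u32(struct cursor *c, uint32_t v)
{
    if (c->avail < sizeof(v)) return -1;
    memcpy(c->p, &v, sizeof(v));
    c->p += sizeof(v); c->avail -= sizeof(v);
    return 0;
}

static int get_u32(struct cursor *c, uint32_t *v)
{
    if (c->avail < sizeof(*v)) return -1;
    memcpy(v, c->p, sizeof(*v));
    c->p += sizeof(*v); c->avail -= sizeof(*v);
    return 0;
}

/* Write length, then 16-bit units with a zero terminator, padded to 4 bytes. */
static int put_str16(struct cursor *c, const char *s)
{
    size_t len = strlen(s);
    size_t bytes = align4((len + 1) * sizeof(uint16_t));

    if (c->avail < sizeof(uint32_t) + bytes) return -1;
    put_u32(c, (uint32_t)len);
    memset(c->p, 0, bytes);                 /* terminator and padding */
    for (size_t i = 0; i < len; i++) {
        uint16_t u = (unsigned char)s[i];   /* widen ASCII to 16 bits */
        memcpy(c->p + i * sizeof(u), &u, sizeof(u));
    }
    c->p += bytes; c->avail -= bytes;
    return 0;
}

/* Read a string written by put_str16 back into a narrow C string. */
static int get_str16(struct cursor *c, char *out, size_t cap)
{
    uint32_t len;

    if (get_u32(c, &len) != 0) return -1;
    size_t bytes = align4(((size_t)len + 1) * sizeof(uint16_t));
    if (c->avail < bytes || (size_t)len + 1 > cap) return -1;
    for (size_t i = 0; i < len; i++) {
        uint16_t u;
        memcpy(&u, c->p + i * sizeof(u), sizeof(u));
        out[i] = (char)u;
    }
    out[len] = '\0';
    c->p += bytes; c->avail -= bytes;
    return 0;
}
```

Reading in a different order than writing would interpret a length as a policy word or the other way round, which is why the server's bio_get calls mirror the client's bio_put calls one for one.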

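Initializing the bio splits its memory into a data area and an offsets area. size_t maxoffs is the number of entries in the offsets area, 4 entries of size_t here; size_t is an unsigned integer type whose width depends on the platform.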

bio_init(&msg, iodata, sizeof(iodata), 4);

void bio_init(struct binder_io *bio, void *data,

size_t maxdata, size_t maxoffs)

{

// size of the offsets area in bytes

size_t n = maxoffs * sizeof(size_t);

// the offsets area does not fit in the buffer

if (n > maxdata) {

bio->flags = BIO_F_OVERFLOW;

bio->data_avail = 0;

bio->offs_avail = 0;

return;

}

// offsets area first, data area right after it

bio->data = bio->data0 = (char *) data + n;

bio->offs = bio->offs0 = data;

bio->data_avail = maxdata - n;

bio->offs_avail = maxoffs;

bio->flags = 0;

}
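A worked example makes the split concrete. The sketch below repeats the same arithmetic on a reduced structure so that the numbers can be checked. The names mini_io, mini_init and MINI_F_OVERFLOW (including its value) are hypothetical stand-ins, not the real binder_io definitions. With a 512-byte buffer and 4 offset entries, the offsets area takes 4 * sizeof(size_t) bytes (32 on a typical 64-bit build, 16 on a 32-bit one) and the rest is available for data.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MINI_F_OVERFLOW 0x02u   /* hypothetical flag value for this sketch */

/* Reduced stand-in for struct binder_io. */
struct mini_io {
    char    *data, *data0;      /* data cursor and start of data area      */
    size_t  *offs, *offs0;      /* offsets cursor and start of offsets area */
    size_t   data_avail;        /* bytes left in the data area              */
    size_t   offs_avail;        /* entries left in the offsets area         */
    uint32_t flags;
};

static void mini_init(struct mini_io *io, void *buf,
                      size_t maxdata, size_t maxoffs)
{
    size_t n = maxoffs * sizeof(size_t);    /* bytes reserved for offsets */

    assert(io != NULL && buf != NULL);
    if (n > maxdata) {                      /* offsets alone do not fit */
        io->flags = MINI_F_OVERFLOW;
        io->data_avail = 0;
        io->offs_avail = 0;
        return;
    }
    io->offs = io->offs0 = buf;             /* offsets at the front       */
    io->data = io->data0 = (char *)buf + n; /* data right behind them     */
    io->data_avail = maxdata - n;
    io->offs_avail = maxoffs;
    io->flags = 0;
}
```

On a 64-bit build this leaves 512 - 32 = 480 bytes of data space, which bounds how much a single message built in that buffer can carry.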

Store an integer in the bio: ptr receives a pointer into the buffer, and the value is written at that address.

void bio_put_uint32(struct binder_io *bio, uint32_t n)

{

uint32_t *ptr = bio_alloc(bio, sizeof(n));

if (ptr)

*ptr = n;

}

static void *bio_alloc(struct binder_io *bio, size_t size)

{ // round the size up to a multiple of 4

size = (size + 3) & (~3);

if (size > bio->data_avail) {

bio->flags |= BIO_F_OVERFLOW;

return NULL;

} else {

// advance the data cursor

void *ptr = bio->data;

bio->data += size;

bio->data_avail -= size;

return ptr;

}

}
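The expression (size + 3) & (~3) rounds a size up to the next multiple of 4, and the allocator then simply moves a cursor forward. The sketch below isolates both steps so that the arithmetic can be checked; align4, bump and bump_alloc are hypothetical names for this illustration and are not part of binder.c.

```c
#include <assert.h>
#include <stddef.h>

/* Round up to a multiple of 4: adding 3 carries into the next group of four,
 * and masking the two low bits drops the remainder. */
static size_t align4(size_t size)
{
    return (size + 3) & ~(size_t)3;
}

struct bump {
    char  *cur;       /* next free byte            */
    size_t avail;     /* bytes left in the buffer  */
    int    overflow;  /* set once a request failed */
};

/* Hand out the next aligned chunk, or NULL when the buffer is exhausted. */
static void *bump_alloc(struct bump *b, size_t size)
{
    assert(b != NULL);
    size = align4(size);
    if (size > b->avail) {
        b->overflow = 1;
        return NULL;
    }
    void *ptr = b->cur;
    b->cur += size;
    b->avail -= size;
    return ptr;
}
```

For example, a 1-byte request consumes 4 bytes, so every value in the buffer starts on a 4-byte boundary relative to the start of the data area.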

Store a pointer in the binder_io *bio as a flat_binder_object: the incoming ptr is wrapped in an obj that lives inside the bio's buffer. bio_alloc_obj allocates the space for it.

void bio_put_obj(struct binder_io *bio, void *ptr)

{

struct flat_binder_object *obj;

obj = bio_alloc_obj(bio);

if (!obj)

return;

obj->flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

obj->hdr.type = BINDER_TYPE_BINDER;

// save the pointer

obj->binder = (uintptr_t)ptr;

obj->cookie = 0;

}

static struct flat_binder_object *bio_alloc_obj(struct binder_io *bio)

{

struct flat_binder_object *obj;

// allocate space in the data area

obj = bio_alloc(bio, sizeof(*obj));

// the offset of each flat_binder_object within the data area must be recorded

if (obj && bio->offs_avail) {

bio->offs_avail--;

*bio->offs++ = ((char*) obj) - ((char*) bio->data0);

return obj;

}

bio->flags |= BIO_F_OVERFLOW;

return NULL;

}
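The offsets array exists so that whoever receives the buffer can find the embedded objects without understanding the rest of the payload. The sketch below shows the bookkeeping with a tiny fixed-size packet. It is an illustration only: fake_obj is a hypothetical 8-byte stand-in for flat_binder_object, and packet, put_u32, put_obj and get_obj are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define FAKE_TYPE_HANDLE 1u     /* hypothetical type tag */
#define MAX_OFFS 4

struct fake_obj { uint32_t type; uint32_t handle; };

struct packet {
    unsigned char data[64];     /* data area                        */
    size_t data_len;            /* bytes used in the data area      */
    size_t offs[MAX_OFFS];      /* where each object starts in data */
    size_t offs_count;
};

static int put_bytes(struct packet *p, const void *src, size_t len)
{
    if (len > sizeof(p->data) - p->data_len)
        return -1;
    memcpy(p->data + p->data_len, src, len);
    p->data_len += len;
    return 0;
}

/* Plain values are stored without touching the offsets array. */
static int put_u32(struct packet *p, uint32_t v)
{
    return put_bytes(p, &v, sizeof(v));
}

/* Objects are stored in the data area AND their start offset is recorded. */
static int put_obj(struct packet *p, uint32_t handle)
{
    struct fake_obj obj = { FAKE_TYPE_HANDLE, handle };
    size_t start = p->data_len;

    if (p->offs_count == MAX_OFFS)
        return -1;
    if (put_bytes(p, &obj, sizeof(obj)) != 0)
        return -1;
    p->offs[p->offs_count++] = start;
    return 0;
}

/* Find the index-th object using only the offsets array. */
static int get_obj(const struct packet *p, size_t index, struct fake_obj *out)
{
    assert(out != NULL);
    if (index >= p->offs_count)
        return -1;
    memcpy(out, p->data + p->offs[index], sizeof(*out));
    return 0;
}
```

In this sketch the offsets are relative to the start of the data area, matching the ((char*) obj) - ((char*) bio->data0) computation in bio_alloc_obj.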

struct flat_binder_object {

struct binder_object_header hdr;

__u32 flags;

/* 8 bytes of data. */

union {

binder_uintptr_t binder; /* local object */

__u32 handle; /* remote object */

};

/* extra data associated with local object */

binder_uintptr_t cookie;

};

struct binder_object_header {

__u32 type;

};

binder_transaction_data_secctx

It wraps a binder_transaction_data:

struct binder_transaction_data_secctx {

struct binder_transaction_data transaction_data;

binder_uintptr_t secctx;

};

struct binder_transaction_data {

union {

__u32 handle;

binder_uintptr_t ptr;

} target;

binder_uintptr_t cookie;

__u32 code;

__u32 flags;

pid_t sender_pid;

uid_t sender_euid;

binder_size_t data_size;

binder_size_t offsets_size;

union {

struct {

binder_uintptr_t buffer;

binder_uintptr_t offsets;

} ptr;

__u8 buf[8];

} data;

};