一、高可用集群:

1.什么是高可用集群:

高可用集群(High Availability Cluster)是以减少服务中断时间为目地的服务器集群技术它通过保护用户的业务程序对外不间断提供的服务,把因软件、硬件、人为造成的故障对业务的影响降低到最小程度。

2.高可用的自动切换/故障转移(FailOver):

通俗地说,即当A无法为客户服务时,系统能够自动地切换,使B能够及时地顶上继续为客户提供服务,且客户感觉不到这个为他提供服务的对象已经更换。 通过上面判断节点故障后,将高可用集群资源(如VIP、httpd等)从该不具备法定票数的集群节点转移到故障转移域(Failover Domain,可以接收故障资源转移的节点)。

3.高可用中的自动侦测:

自动侦测阶段由主机上的软件通过冗余侦测线,经由复杂的监听程序,逻辑判断,来相互侦测对方运行的情况。 常用的方法是:集群各节点间通过心跳信息判断节点是否出现故障。

4.脑裂现象:

在高可用(HA)系统中,当联系2个节点的“心跳线”断开时,本来为一整体、动作协调的HA系统,就分裂成为2个独立的个体。由于相互失去了联系,都以为是对方出了故障。两个节点上的HA软件像“裂脑人”一样,争抢“共享资源”、争起“应用服务”,就会发生严重后果——或者共享资源被瓜分、2边“服务”都起不来了;或者2边“服务”都起来了,但同时读写“共享存储”,导致数据损坏(常见如数据库轮询着的联机日志出错)。

5.脑裂的原因:

因心跳线坏了(包括断了,老化)。 因网卡及相关驱动坏了,ip配置及冲突问题(网卡直连)。 因心跳线间连接的设备故障(网卡及交换机)。 因仲裁的机器出问题(采用仲裁的方案)。 高可用服务器上开启了 iptables防火墙阻挡了心跳消息传输。 高可用服务器上心跳网卡地址等信息配置不正确,导致发送心跳失败。 其他服务配置不当等原因,如心跳方式不同,心跳广插冲突、软件Bug等。

二、keepalived原理与简介:

1.keepalived是什么:

keepalived是集群管理中保证集群高可用的一个服务软件,用来防止单点故障。

2.keepalived工作原理:

keepalived是以VRRP协议为实现基础的,VRRP全称Virtual Router Redundancy Protocol,即虚拟路由冗余协议。

将N台提供相同功能的服务器组成一个服务器组,这个组里面有一个master和多个backup,master上面有一个对外提供服务的vip(该服务器所在局域网内其他机器的默认路由为该vip),master会发组播,当backup收不到vrrp包时就认为master宕掉了,这时就需要根据VRRP的优先级来选举一个backup当master

3.keepalived主要有三个模块:

分别是core、check和vrrp。 core模块为keepalived的核心,负责主进程的启动、维护以及全局配置文件的加载和解析。 check负责健康检查,包括常见的各种检查方式。 vrrp模块是来实现VRRP协议的。

下面来实现lvs+keepalived高可用集群:

环境配置:四台centos7虚拟机(使用NAT网络模式) ,两台lvs服务器,两台web服务器

192.168.23.137:作为第一台lvs服务器 lvs01

192.168.23.133:作为第二台lvs服务器 lvs02

192.168.23.134:作为第一台web服务器 web01

192.168.23.135:作为第二台web服务器 web02

LVS+keepalived高可用集群的大致工作流程:

在lvs没有实现高可用之前,我们的架构是一台lvs服务器和两台web服务器,由lvs做负载均衡, 将用户的请求按照一定的负载均衡算法,分发给两台web服务器。然而,这种架构有一个很大的痛点,由于我们访问web服务器是由lvs来进行负载均衡,也就是必须经过lvs服务器,从而访问到real-server也就是我们的web服务器。那么当lvs服务器挂掉之后 ,我们就无法达到均衡的去访问web服务器了。所以我们必须使用高可用技术,就是配置两台lvs服务器,在这两台lvs服务器上面都安装上keepalived。在正常情况下,一台lvs服务器作为master另一台lvs服务器作为backup,虚拟的vip只在master服务器上出现。我们只对外暴露出vip让客户进行访问,并不将真实的web服务器的ip暴露给用户,这样能够保证我们web服务器的安全,所以客户只能通过vip来访问我们的web服务器。 当客户通过vip来访问web服务器的时候,会先经过带有vip的服务器,也就是master,再通过master来进行负载均衡。当发生特殊情况:master服务器挂了的时候。此时的vip就会自动跳到backup服务器上,此时我们通过vip来访问web服务器的时候,也会先经过带有vip的服务器,也就是backup,再通过backup来进行负载均衡。从而实现了lvs的高可用

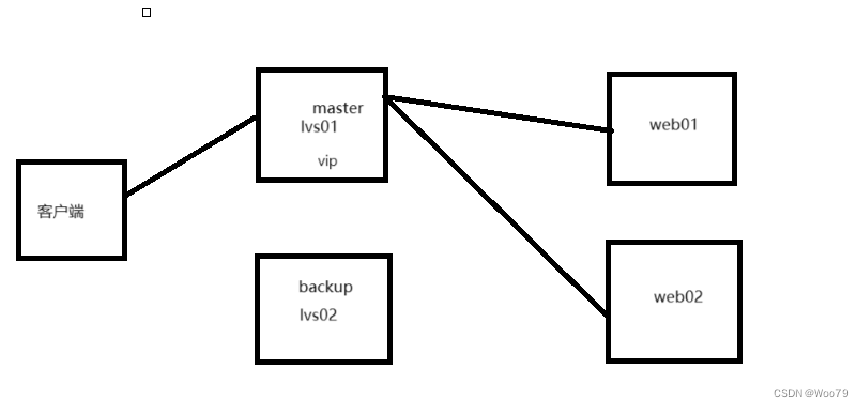

下图是正常情况下lvs+keepalived集群的工作模式,此时的vip在身为master的lvs01上面,lvs02一直监听lvs01的健康状况,客户通过vip访问web服务器时会先经过lvs01再由lvs01来进行负载均衡将访问分发给后面的两台real-server也就是我们的web服务器。

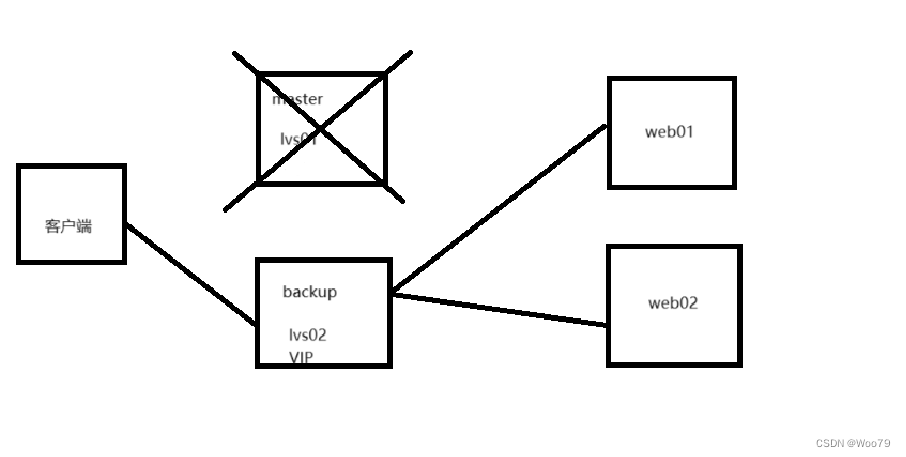

下图是当原来的master服务器挂掉之后的工作模式。我们可以看出,当lvs02监听到lvs01挂掉时, 此时的vip自动迁移到了原来的backup服务器上面也就是lvs02,客户通过vip访问web服务器时会先经过lvs02再由lvs02来进行负载均衡将访问分发给后面的两台real-server也就是我们的web服务器。

第一步:在lvs01上面安装keepalived和ipvsadm:

[root@bogon ~]# yum install keepalived ipvsadm -y安装完之后先不要启动这两个服务

2.在master上修改配置文件:

[root@lvs01 ~]# vim /etc/keepalived/keepalived.conf

#进去之后将默认配置文件删除,添加以下内容:

! Configuration File for keepalived

global_defs {

router_id Director1 #这个id是唯一的,不要与lvs02上面的重复。

}

vrrp_instance VI_1 {

state MASTER #另外一台机器是BACKUP

interface ens33 #心跳网卡,自己的网卡名称是多少就填多少

virtual_router_id 51 #虚拟路由编号,主备要一致

priority 150 #优先级

advert_int 1 #检查间隔,单位秒

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.23.20/24 dev ens33 #VIP和工作接口(vip要和这几台机器的网段一致,vip随便选一个就行,但是要确保此vip没有人使用)

}

}

virtual_server 192.168.23.20 80 { #LVS 配置,VIP

delay_loop 3 #服务论询的时间间隔,#每隔3秒检查一次real_server状态

lb_algo rr #LVS 调度算法

lb_kind DR #LVS 集群模式

protocol TCP

real_server 192.168.23.134 80 {

weight 1

TCP_CHECK {

connect_timeout 3 #健康检查方式,连接超时时间

}

}

real_server 192.168.23.135 80 {

weight 1

TCP_CHECK {

connect_timeout 3

}

}

}

3.拷贝master上的keepalived.conf到backup上:

scp 192.168.23.137:/etc/keepalived/keepalived.conf 192.168.23.133:/etc/keepalived/4.拷贝后,修改配置文件:

只需修改三个地方:

1.router_id Director2

2.state BACKUP

3.priority 100

5.lvs01 和 lvs02 上面都启动keepalived服务:

#!此台机器是137:lvs01

[root@lvs01 ~]# systemctl start keepalived.service

[root@lvs01 ~]# systemctl enable keepalived.service

#查看一下状态

[root@lvs01 ~]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since 日 2024-03-31 11:33:52 CST; 55min ago

Process: 1130 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 1154 (keepalived)

Tasks: 3

CGroup: /system.slice/keepalived.service

├─1154 /usr/sbin/keepalived -D

├─1156 /usr/sbin/keepalived -D

└─1157 /usr/sbin/keepalived -D

3月 31 11:46:31 lvs01 Keepalived_healthcheckers[1156]: TCP connection to [192.168.23.135]:80 failed.

3月 31 11:46:32 lvs01 Keepalived_healthcheckers[1156]: TCP connection to [192.168.23.135]:80 failed.

3月 31 11:46:32 lvs01 Keepalived_healthcheckers[1156]: Check on service [192.168.23.135]:80 failed after 1 retry.

3月 31 11:46:32 lvs01 Keepalived_healthcheckers[1156]: Removing service [192.168.23.135]:80 from VS [192.168.23.20]:80

3月 31 11:46:32 lvs01 Keepalived_healthcheckers[1156]: Lost quorum 1-0=1 > 0 for VS [192.168.23.20]:80

3月 31 11:46:46 lvs01 Keepalived_healthcheckers[1156]: TCP connection to [192.168.23.135]:80 success.

3月 31 11:46:46 lvs01 Keepalived_healthcheckers[1156]: Adding service [192.168.23.135]:80 to VS [192.168.23.20]:80

3月 31 11:46:46 lvs01 Keepalived_healthcheckers[1156]: Gained quorum 1+0=1 <= 1 for VS [192.168.23.20]:80

3月 31 11:49:59 lvs01 Keepalived_healthcheckers[1156]: TCP connection to [192.168.23.134]:80 success.

3月 31 11:49:59 lvs01 Keepalived_healthcheckers[1156]: Adding service [192.168.23.134]:80 to VS [192.168.23.20]:80

#!此台机器是133:lvs02

[root@lvs02 ~]# systemctl start keepalived.service

[root@lvs02 ~]# systemctl enable keepalived.service

#查看一下状态:

[root@lvs02 ~]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since 日 2024-03-31 11:33:56 CST; 57min ago

Process: 1136 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 1172 (keepalived)

Tasks: 3

Memory: 3.3M

CGroup: /system.slice/keepalived.service

├─1172 /usr/sbin/keepalived -D

├─1173 /usr/sbin/keepalived -D

└─1176 /usr/sbin/keepalived -D

3月 31 11:46:33 lvs02 Keepalived_healthcheckers[1173]: Removing service [192.168.23.135]:80 from VS [192.168.23.20]:80

3月 31 11:46:34 lvs02 Keepalived_healthcheckers[1173]: TCP connection to [192.168.23.134]:80 timeout.

3月 31 11:46:34 lvs02 Keepalived_healthcheckers[1173]: Check on service [192.168.23.134]:80 failed after 1 retry.

3月 31 11:46:34 lvs02 Keepalived_healthcheckers[1173]: Removing service [192.168.23.134]:80 from VS [192.168.23.20]:80

3月 31 11:46:34 lvs02 Keepalived_healthcheckers[1173]: Lost quorum 1-0=1 > 0 for VS [192.168.23.20]:80

3月 31 11:46:46 lvs02 Keepalived_healthcheckers[1173]: TCP connection to [192.168.23.135]:80 success.

3月 31 11:46:46 lvs02 Keepalived_healthcheckers[1173]: Adding service [192.168.23.135]:80 to VS [192.168.23.20]:80

3月 31 11:46:46 lvs02 Keepalived_healthcheckers[1173]: Gained quorum 1+0=1 <= 1 for VS [192.168.23.20]:80

3月 31 11:50:02 lvs02 Keepalived_healthcheckers[1173]: TCP connection to [192.168.23.134]:80 success.

3月 31 11:50:02 lvs02 Keepalived_healthcheckers[1173]: Adding service [192.168.23.134]:80 to VS [192.168.23.20]:80

[root@lvs02 ~]#

6.在master服务器也就是lvs01上面查看是否出现虚拟IP:

[root@lvs01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:ba:1f:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.23.137/24 brd 192.168.23.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.23.20/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:feba:1fef/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

[root@lvs01 ~]#

7.在master服务器也就是lvs02上面查看是否出现虚拟IP:

[root@lvs02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:bf:89:01 brd ff:ff:ff:ff:ff:ff

inet 192.168.23.133/24 brd 192.168.23.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:febf:8901/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

5: br-17a8c014f086: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:b6:aa:96:4c brd ff:ff:ff:ff:ff:ff

inet 172.19.0.1/16 brd 172.19.255.255 scope global br-17a8c014f086

valid_lft forever preferred_lft forever

6: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:33:bd:32:a9 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

[root@lvs02 ~]#

可见 lvs01 机器上的ens33网卡上面已经出现了我们配置的虚拟ip:192.168.23.20,在 lvs02 机器上查看,并未发现虚拟ip说明配置正确,否则两台机器都有虚拟ip的话就出现了脑裂现象

第二步:web服务器配置(此步骤在web01和web01机器上配置):

1.安装nginx测试点:

[root@web01 web]# yum install -y nginx && systemctl start nginx && systemctl enable nginx2.查看80端口是否启动:

[root@web01 web]# netstat -anpt | grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 9999/nginx: master

tcp6 0 0 192.168.23.134:10050 192.168.23.137:40480 TIME_WAIT -

3.自定义web主页,以便观察负载均衡结果:

#注意此机器是:web02

[root@web02 ~]# vim /usr/share/nginx/html/index.html

#将原有内容删除,写入以下内容:

This is web02

#注意此机器是:web01

[root@web01 ~]# vim /usr/share/nginx/html/index.html

#将原有内容删除,写入以下内容:

This is web014.配置虚拟地址:(web02同配置,在此就不展示了,只展示web01)

[root@web01 ~]# cp /etc/sysconfig/network-scripts/{ifcfg-lo,ifcfg-lo:0}[root@web01 ~]# vim /etc/sysconfig/network-scripts/ifcfg-lo:0

#写入以下内容,其他行注释掉:

DEVICE=lo:0

IPADDR=192.168.23.20

NETMASK=255.255.255.255

ONBOOT=yes

5.配置路由:(web02同配置,在此就不展示了,只展示web01)

在两台机器(RS)上,添加一个路由:route add -host 192.168.23.20 dev lo:0 确保如果请求的目标IP是$VIP,那么让出去的数据包的源地址也显示为$VIP

[root@web01 ~]# vim /etc/rc.local

#在最后一行加入以下内容:

/sbin/route add -host 192.168.23.20 dev lo:0

6.配置ARP:(web02同配置,在此就不展示了,只展示web01)

以下内容的作用是:忽略arp请求但是可以回复

[root@web01 ~]# vim /etc/sysctl.conf

#写入以下内容:

net.ipv4.conf.all.arp_ignore = 1

net.ipv4.conf.all.arp_announce = 2

net.ipv4.conf.default.arp_ignore = 1

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_ignore = 1

net.ipv4.conf.lo.arp_announce = 2

7.重启虚拟机:(web02同配置,在此就不展示了,只展示web01)

[root@web01 ~]# reboot

第三步:测试效果:

1.观察vip地址在哪台机器上:

[root@lvs01 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:ba:1f:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.23.137/24 brd 192.168.23.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.23.20/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:feba:1fef/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

[root@lvs01 ~]#

[root@lvs02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:bf:89:01 brd ff:ff:ff:ff:ff:ff

inet 192.168.23.133/24 brd 192.168.23.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:febf:8901/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

5: br-17a8c014f086: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:b6:aa:96:4c brd ff:ff:ff:ff:ff:ff

inet 172.19.0.1/16 brd 172.19.255.255 scope global br-17a8c014f086

valid_lft forever preferred_lft forever

6: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:33:bd:32:a9 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

[root@lvs02 ~]#

可以看到此时vip也就是我们的虚拟ip在lvs01上

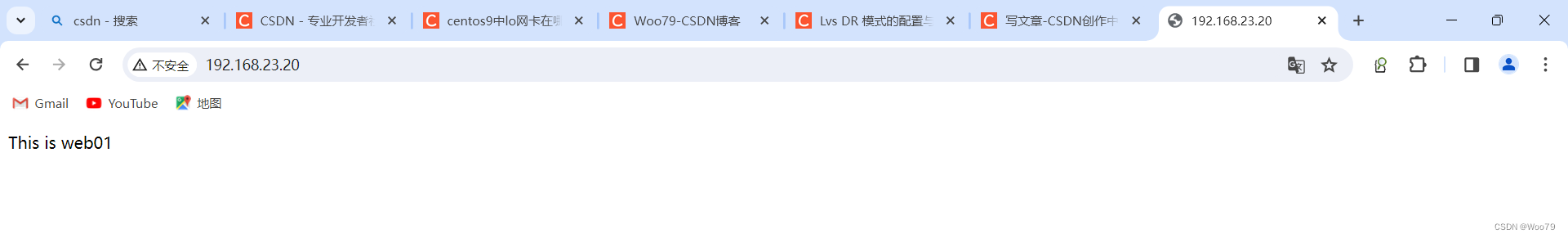

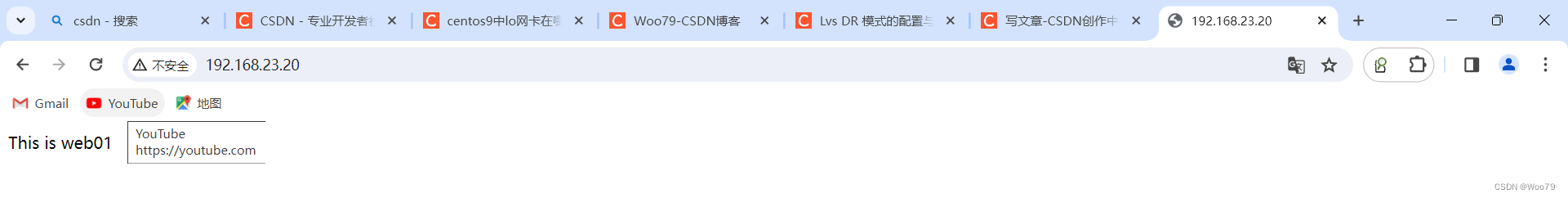

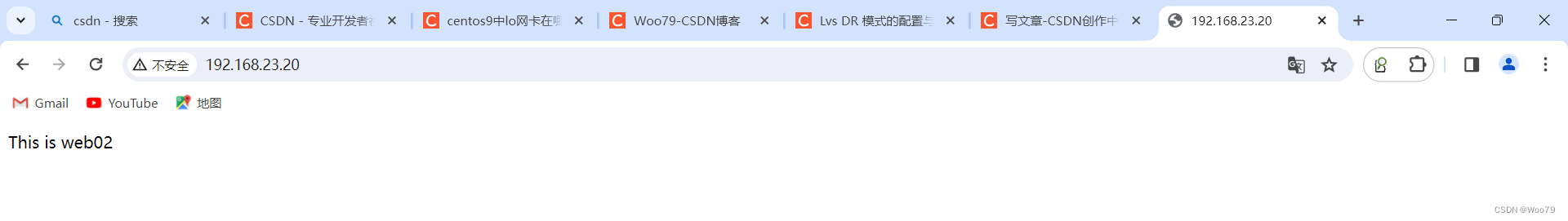

2.客户端浏览器访问vip:

连续刷新几次:

由此可见负载均衡效果已经实现

3.关闭master(lvs01)上的keepalived服务,再次访问vip

[root@lvs01 ~]# systemctl stop keepalived.service

[root@lvs01 ~]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: inactive (dead) since 日 2024-03-31 12:58:49 CST; 3s ago

Process: 1130 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 1154 (code=exited, status=0/SUCCESS)

3月 31 11:49:59 lvs01 Keepalived_healthcheckers[1156]: TCP connection to [192.168.23.134]:80 success.

3月 31 11:49:59 lvs01 Keepalived_healthcheckers[1156]: Adding service [192.168.23.134]:80 to VS [192.168.23.20]:80

3月 31 12:58:48 lvs01 Keepalived[1154]: Stopping

3月 31 12:58:48 lvs01 systemd[1]: Stopping LVS and VRRP High Availability Monitor...

3月 31 12:58:48 lvs01 Keepalived_vrrp[1157]: VRRP_Instance(VI_1) sent 0 priority

3月 31 12:58:48 lvs01 Keepalived_vrrp[1157]: VRRP_Instance(VI_1) removing protocol VIPs.

3月 31 12:58:48 lvs01 Keepalived_healthcheckers[1156]: Removing service [192.168.23.134]:80 from VS [192.168.23.20]:80

3月 31 12:58:48 lvs01 Keepalived_healthcheckers[1156]: Removing service [192.168.23.135]:80 from VS [192.168.23.20]:80

3月 31 12:58:48 lvs01 Keepalived_healthcheckers[1156]: Stopped

3月 31 12:58:49 lvs01 systemd[1]: Stopped LVS and VRRP High Availability Monitor.

[root@lvs01 ~]#

可见,再次访问vip仍能访问,负载均衡仍能实现

此时查看lvs02的ip:

[root@lvs02 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:bf:89:01 brd ff:ff:ff:ff:ff:ff

inet 192.168.23.133/24 brd 192.168.23.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 192.168.23.20/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:febf:8901/64 scope link

valid_lft forever preferred_lft forever

3: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000

link/ether 52:54:00:6e:9e:ef brd ff:ff:ff:ff:ff:ff

5: br-17a8c014f086: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:b6:aa:96:4c brd ff:ff:ff:ff:ff:ff

inet 172.19.0.1/16 brd 172.19.255.255 scope global br-17a8c014f086

valid_lft forever preferred_lft forever

6: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:33:bd:32:a9 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

[root@lvs02 ~]#

我们发现此时vip已经自动跳到了lvs02机器上

4.关闭web1站点服务,再次访问VIP

[root@web01 ~]# systemctl stop nginx

[root@web01 ~]#

web01站点关闭后,再次访问vip我们发现仍能访问到业务。说明我们的高可用集群试验成功。到此实验完毕!

然而在真实工作场景下,这两台web服务器里面的资源是一致的,我们这里为了能够更好的展示lvs负载均衡的效果,就将两台服务器的内容进行了修改。