1. Overview

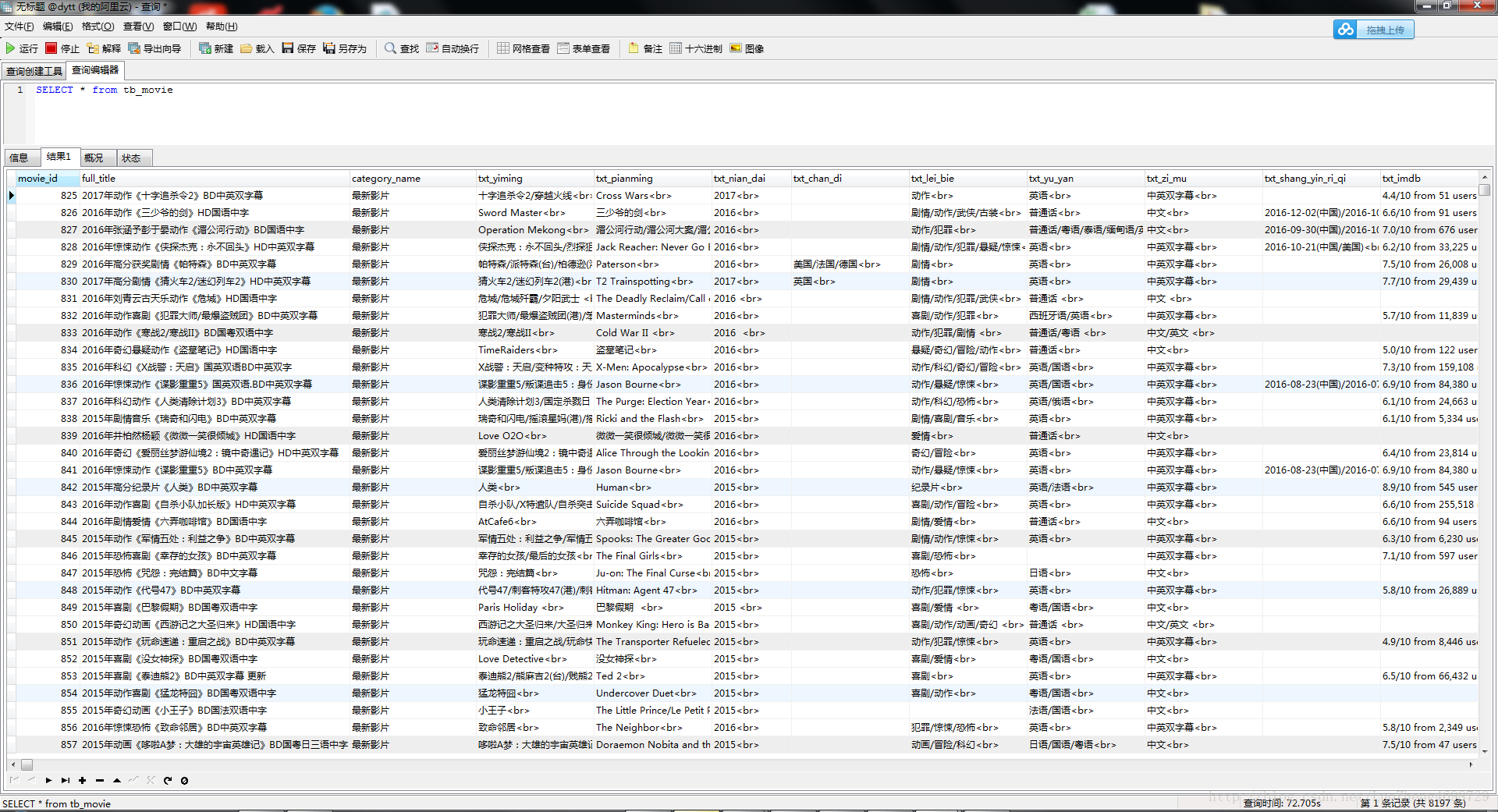

Today we'll use Scrapy to crawl 电影天堂 (Movie Heaven, http://www.dytt8.net/) and store the movies in MySQL. The screenshot above shows my results.

2. Python libraries to install

1. scrapy

2. BeautifulSoup

3. MySQLdb

Look up the installation steps for each of these yourself!
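Before going further, it can help to confirm that the three libraries are importable. The snippet below is a small sketch of such a check; the helper name `missing_modules` is made up for illustration. It tests the import names used by the code in this post (`scrapy`, `bs4`, `MySQLdb`). On Python 3 these normally come from the `Scrapy`, `beautifulsoup4` and `mysqlclient` packages, but treat those package names as an assumption about your environment.

```python
import importlib.util

# Import names used by the code in this post.
REQUIRED_MODULES = ["scrapy", "bs4", "MySQLdb"]


def missing_modules(names):
    """Return the names from `names` that cannot be found by the import system."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Not installed:", ", ".join(missing))
else:
    print("All required modules are importable.")
```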

3. Crawling steps

1. Create the tb_movie table to store the movie data. I collect a fairly detailed set of fields here; keep only the ones you need.

    CREATE TABLE `tb_movie` (
      `movie_id` int(10) NOT NULL AUTO_INCREMENT COMMENT 'Movie id (primary key)',
      `full_title` varchar(500) COLLATE utf8_unicode_ci DEFAULT NULL,
      `category_name` varchar(20) COLLATE utf8_unicode_ci DEFAULT NULL,
      `txt_yiming` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Translated title',
      `txt_pianming` varchar(500) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Original title',
      `txt_nian_dai` varchar(100) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Year',
      `txt_chan_di` varchar(500) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Country of origin',
      `txt_lei_bie` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Genre',
      `txt_yu_yan` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Language',
      `txt_zi_mu` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Subtitles',
      `txt_shang_yin_ri_qi` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Release date',
      `txt_imdb` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'IMDb rating',
      `txt_dou_ban` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Douban rating',
      `txt_format` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'File format',
      `txt_chi_cun` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Video dimensions',
      `txt_size` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'File size',
      `txt_pian_chang` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Running time',
      `txt_dao_yan` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Director',
      `txt_zhu_yan` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Cast',
      `txt_jian_jie` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Synopsis',
      `small_images` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Synopsis image list (comma-separated)',
      `big_images` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Large image (poster)',
      `release_time` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Publish time',
      `download_url` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL COMMENT 'Download URL',
      `create_time` datetime DEFAULT NULL COMMENT 'Creation time',
      `modify_time` datetime DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'Last-modified time',
      `detail_url` varchar(1000) COLLATE utf8_unicode_ci DEFAULT NULL,
      PRIMARY KEY (`movie_id`),
      KEY `index_category_name` (`category_name`)
    ) ENGINE=InnoDB AUTO_INCREMENT=4076 DEFAULT CHARSET=utf8 COLLATE=utf8_unicode_ci COMMENT='Main movie table';

2. Create the Scrapy project, then enter the project directory and generate a spider named getmovie.py

    scrapy startproject dytt
    cd dytt
    scrapy genspider getmovie dytt8.net

3. Write items.py, with fields that correspond to the database columns (make sure your items.py file is saved with UTF-8 encoding)
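The correspondence between item fields and table columns in this post is a plain camelCase-to-snake_case rename (`txtNianDai` becomes `txt_nian_dai`, `detailUrl` becomes `detail_url`). As a rough sketch, the mapping can be expressed in a few lines; the helper name `to_column_name` is hypothetical and not part of the project.

```python
import re


def to_column_name(field_name):
    """Convert an item field such as 'txtNianDai' to the column 'txt_nian_dai'."""
    # Insert an underscore before every capital letter that is not at the
    # start of the name, then lowercase the whole string.
    return re.sub(r'(?<!^)(?=[A-Z])', '_', field_name).lower()


for field in ('txtYiming', 'txtShangYinRiQi', 'smallImages', 'detailUrl'):
    print(field, '->', to_column_name(field))
```

A helper like this is only useful if you keep the naming convention consistent; if a column name ever deviates, an explicit mapping table is the safer choice.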

    import scrapy


    class DyttItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()

        # Translated title (译名)
        txtYiming = scrapy.Field()
        # Original title (片名)
        txtPianming = scrapy.Field()
        # Year (年代)
        txtNianDai = scrapy.Field()
        # Country of origin (产地)
        txtChanDi = scrapy.Field()
        # Genre (类别)
        txtLeiBie = scrapy.Field()
        # Language (语言)
        txtYuYan = scrapy.Field()
        # Subtitles (字幕)
        txtZiMu = scrapy.Field()
        # Release date (上映日期)
        txtShangYinRiQi = scrapy.Field()
        # IMDb rating (IMDb评分)
        txtImdb = scrapy.Field()
        # Douban rating (豆瓣评分)
        txtDouBan = scrapy.Field()
        # File format (文件格式)
        txtFormat = scrapy.Field()
        # Video dimensions (视频尺寸)
        txtChiCun = scrapy.Field()
        # File size (文件大小)
        txtSize = scrapy.Field()
        # Running time (片长)
        txtPianChang = scrapy.Field()
        # Director (导演)
        txtDaoYan = scrapy.Field()
        # Cast (主演)
        txtZhuYan = scrapy.Field()
        # Synopsis (简介)
        txtJianJie = scrapy.Field()
        # Synopsis image list
        smallImages = scrapy.Field()
        # Large image (poster)
        bigImages = scrapy.Field()
        # Publish time
        releaseTime = scrapy.Field()
        # Download URL
        downloadUrl = scrapy.Field()
        # Full title
        fullTitle = scrapy.Field()
        # URL of the movie's detail page on 电影天堂
        detailUrl = scrapy.Field()
        # Category
        categoryName = scrapy.Field()

4. Write the spider, spiders/getmovie.py

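Its main job is to request and parse the pages and package the extracted data into the DyttItem defined above.

The crawl is organised around roughly 10 top-level categories, with up to 500 list pages crawled per category; for every entry on a list page the spider then requests that movie's detail page. The code is commented in detail, but you will need to open the 电影天堂 site and look at the corresponding tag structure to follow it.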
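The "category, then page number" structure can be sketched as a small URL generator. The path templates below are copied from the spider's getUrl method; the names `BASE`, `CATEGORY_PATHS` and `list_page_urls` are made up for this illustration, and only three of the categories are listed to keep it short.

```python
BASE = "http://www.ygdy8.net"

# Category name -> list-page path template (paths taken from getUrl).
CATEGORY_PATHS = {
    "国内电影": "/html/gndy/china/list_4_{page}.html",
    "日韩影片": "/html/gndy/rihan/list_6_{page}.html",
    "欧美影片": "/html/gndy/oumei/list_7_{page}.html",
}


def list_page_urls(category, first_page=1, last_page=500):
    """Yield the list-page URLs of one category, pages first_page..last_page inclusive."""
    template = CATEGORY_PATHS[category]
    for page in range(first_page, last_page + 1):
        yield BASE + template.format(page=page)


for url in list_page_urls("国内电影", 1, 3):
    print(url)
```

Keeping the categories in a dictionary makes it possible to loop over all of them in one run, instead of editing the spider to switch category.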

    # -*- coding: utf-8 -*-
    import logging
    import re

    import scrapy
    from bs4 import BeautifulSoup

    from dytt.items import DyttItem


    class GetmovieSpider(scrapy.Spider):
        name = 'getmovie'
        # Add 'ygdy8.net' here if you switch to one of the ygdy8.net URLs below
        allowed_domains = ['dytt8.net']
        start_urls = ['http://dytt8.net/']

        def getUrl(self, pageIndex):
            # Only one category is crawled per run. To crawl another one, comment out
            # the active block and uncomment the block you want (including its domain).
            self.domain = "http://www.dytt8.net"
            self.categoryName = "最新影片"
            return "http://www.dytt8.net/html/gndy/dyzz/list_23_" + str(pageIndex) + ".html"

            # self.domain = "http://www.ygdy8.net"
            # self.categoryName = "国内电影"
            # return "http://www.ygdy8.net/html/gndy/china/list_4_" + str(pageIndex) + ".html"
            #
            # self.categoryName = "日韩影片"
            # return "http://www.ygdy8.net/html/gndy/rihan/list_6_" + str(pageIndex) + ".html"
            #
            # self.categoryName = "欧美影片"
            # return "http://www.ygdy8.net/html/gndy/oumei/list_7_" + str(pageIndex) + ".html"
            #
            # self.categoryName = "华语电视"
            # return "http://www.ygdy8.net/html/tv/hytv/list_71_" + str(pageIndex) + ".html"
            #
            # self.categoryName = "日韩电视"
            # return "http://www.ygdy8.net/html/tv/rihantv/list_8_" + str(pageIndex) + ".html"
            #
            # self.categoryName = "欧美电视"
            # return "http://www.ygdy8.net/html/tv/oumeitv/index.html"
            #
            # self.categoryName = "最新综艺"
            # return "http://www.ygdy8.net/html/zongyi2013/list_99_" + str(pageIndex) + ".html"
            #
            # self.categoryName = "旧版综艺"
            # return "http://www.ygdy8.net/html/2009zongyi/list_89_" + str(pageIndex) + ".html"
            #
            # self.categoryName = "动漫资源"
            # return "http://www.ygdy8.net/html/dongman/list_16_" + str(pageIndex) + ".html"
            #
            # self.categoryName = "游戏下载"
            # return "http://www.ygdy8.net/html/game/list_19_" + str(pageIndex) + ".html"

        # Build the initial requests
        def start_requests(self):
            # List pages 1 through 500 (range excludes its upper bound)
            for i in range(1, 501):
                yield scrapy.Request(self.getUrl(i))

        # Called for every list page
        def parse(self, response):
            tables = response.xpath('//table[@class="tbspan"]')
            for itemEntry in tables:
                details = itemEntry.xpath('tr[2]/td[2]/b').extract_first()
                if details is None:
                    continue
                soup = BeautifulSoup(details, 'html.parser')
                aList = soup.find_all('a', {'class': 'ulink'})
                if not aList:
                    continue
                # With a single link, use it; otherwise the second link is the movie link
                a = aList[0] if len(aList) == 1 else aList[1]
                item = DyttItem()
                item['fullTitle'] = a.string
                item['detailUrl'] = self.domain + a.get('href')
                item['categoryName'] = self.categoryName
                yield scrapy.Request(url=item['detailUrl'], meta={'item': item},
                                     callback=self.parseDetail, dont_filter=True)

        # Called for every movie detail page
        def parseDetail(self, response):
            item = response.meta['item']
            logging.warning(str(item['fullTitle']) + " url:" + response.url)
            pageContent = response.xpath('//div[@class="co_content8"]').extract_first(default='')
            movContent = response.xpath('//div[@id="Zoom"]').extract_first()
            if movContent is None:
                logging.warning("no Zoom block found, url:" + response.url)
                return
            # Publish date, extracted with a regex
            dateMatch = re.search(r"(\d{4}-\d{1,2}-\d{1,2})", pageContent)
            releaseTime = dateMatch.group(0) if dateMatch else ''
            # Download URL: the href of the first <a> tag in the content block
            linkMatch = re.search(r'<a[^>]*href="([^"]+)"[^>]*>', movContent)
            downloadUrl = linkMatch.group(1) if linkMatch else ''
            # Image URLs, de-duplicated
            imagesArray = self.distinct(self.findImageSrc(movContent))
            # Text fields
            txtArray = self.findMoiveTextInfo(movContent)
            # self.myParseMovieInfo(movContent)
            # The first image is the poster; the rest are stored comma-separated
            item['bigImages'] = imagesArray[0] if imagesArray else ''
            item['smallImages'] = self.arrayToString(imagesArray)
            item['downloadUrl'] = downloadUrl
            item['releaseTime'] = releaseTime
            # Item field -> label that follows "◎" on the detail page
            labels = [
                ('txtYiming', '译 名'),             # translated title
                ('txtPianming', '片 名'),           # original title
                ('txtNianDai', '年 代'),            # year
                ('txtChanDi', '产 地'),             # country of origin
                ('txtLeiBie', '类 别'),             # genre
                ('txtYuYan', '语 言'),              # language
                ('txtZiMu', '字 幕'),               # subtitles
                ('txtShangYinRiQi', '上映日期'),    # release date
                ('txtImdb', 'IMDb评分'),            # IMDb rating
                ('txtDouBan', '豆瓣评分'),          # Douban rating
                ('txtFormat', '文件格式'),          # file format
                ('txtChiCun', '视频尺寸'),          # video dimensions
                ('txtSize', '文件大小'),            # file size
                ('txtPianChang', '片 长'),          # running time
                ('txtDaoYan', '导 演'),             # director
                ('txtZhuYan', '主 演'),             # cast
                ('txtJianJie', '简 介'),            # synopsis
            ]
            for field, label in labels:
                item[field] = self.getStringInfo(txtArray, label)
            # Cut the synopsis at its last full stop, if it has one
            end = item['txtJianJie'].rfind('。')
            if end != -1:
                item['txtJianJie'] = item['txtJianJie'][:end]
            yield item

        # De-duplicate a list while keeping its order
        def distinct(self, src):
            unique = []
            for value in src:
                if value not in unique:
                    unique.append(value)
            return unique

        # Return the first chunk that contains key, with key removed and whitespace stripped
        def getStringInfo(self, array, key):
            for chunk in array:
                if key in chunk:
                    return chunk.replace(key, "").strip()
            return ""

        # Join every element except the first with commas
        def arrayToString(self, array):
            return ",".join(array[1:])

        # Unused debugging helper (its call in parseDetail is commented out)
        def myParseMovieInfo(self, movContent):
            try:
                downloadUrl = re.search(r'(<a[^>]*href="([^"]+)"[^>]*>)', movContent).group(0)
                downloadUrl = re.search(r'(ftp*>)', downloadUrl).group(0)
                for line in movContent.split('<br>'):
                    line = line.strip()
                    if line == "":
                        continue
                    # logging.warning("&&&&:>>" + line)
            except Exception:
                logging.warning("parser movie content error")

        # Split the content on "◎"; every chunk after the first holds one "label value" field
        def findMoiveTextInfo(self, movContent):
            return movContent.split('◎')[1:]

        # Collect the src attribute of every <img> tag
        def findImageSrc(self, movContent):
            img_tag_pattern = r'<img\b[^>]*>'   # matches each img tag
            img_url_pattern = r'src="([^"]+)"'  # captures the image URL
            img_url_list = []
            for tag in re.findall(img_tag_pattern, movContent, re.IGNORECASE):
                match = re.search(img_url_pattern, tag, re.IGNORECASE)
                if match:
                    img_url_list.append(match.group(1))
            return img_url_list
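To make the detail-page parsing easier to follow without opening the site, here is a standalone sketch of the same two ideas: splitting the content on "◎" to get one chunk per field, and pulling image URLs out of img tags. The `SAMPLE` fragment is invented to resemble the Zoom block and is not real site data; the function names are hypothetical. Unlike the spider's getStringInfo, this version also strips leftover HTML tags from the value, which is an addition of mine.

```python
import re

# Hypothetical fragment shaped like the page's Zoom block (not real site data).
SAMPLE = (
    '<div id="Zoom"><img src="http://example.com/poster.jpg"><br>'
    '◎译 名 Example Movie<br>◎年 代 2017<br>◎产 地 美国<br>'
    '<img src="http://example.com/shot.jpg"></div>'
)


def split_fields(content):
    """Return the chunks that follow each '◎' marker."""
    return content.split('◎')[1:]


def get_field(chunks, label):
    """Find the chunk containing label and return its value without HTML tags."""
    for chunk in chunks:
        if label in chunk:
            value = chunk.replace(label, '')
            return re.sub(r'<[^>]+>', '', value).strip()
    return ''


def image_urls(content):
    """Collect the src attribute of every img tag, in page order."""
    return re.findall(r'<img\b[^>]*?src="([^"]+)"', content, re.IGNORECASE)


chunks = split_fields(SAMPLE)
print(get_field(chunks, '译 名'))
print(get_field(chunks, '年 代'))
print(image_urls(SAMPLE))
```

Missing labels simply produce an empty string, which matches how the spider fills fields that a page does not provide.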

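5. The pipelines.py pipeline file

Its main job is to write the DyttItem built above into MySQL. Before inserting, it looks the movie up by full title and detail-page URL; if a matching row already exists, the movie is a duplicate and is not inserted.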

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import logging

    import MySQLdb
    import MySQLdb.cursors
    from twisted.enterprise import adbapi

    # (table column, item field) pairs, in insert order
    COLUMNS = [
        ('txt_yiming', 'txtYiming'),
        ('txt_pianming', 'txtPianming'),
        ('txt_nian_dai', 'txtNianDai'),
        ('txt_chan_di', 'txtChanDi'),
        ('txt_lei_bie', 'txtLeiBie'),
        ('txt_yu_yan', 'txtYuYan'),
        ('txt_zi_mu', 'txtZiMu'),
        ('txt_shang_yin_ri_qi', 'txtShangYinRiQi'),
        ('txt_imdb', 'txtImdb'),
        ('txt_dou_ban', 'txtDouBan'),
        ('txt_format', 'txtFormat'),
        ('txt_chi_cun', 'txtChiCun'),
        ('txt_size', 'txtSize'),
        ('txt_pian_chang', 'txtPianChang'),
        ('txt_dao_yan', 'txtDaoYan'),
        ('txt_zhu_yan', 'txtZhuYan'),
        ('txt_jian_jie', 'txtJianJie'),
        ('small_images', 'smallImages'),
        ('big_images', 'bigImages'),
        ('release_time', 'releaseTime'),
        ('download_url', 'downloadUrl'),
        ('full_title', 'fullTitle'),
        ('detail_url', 'detailUrl'),
        ('category_name', 'categoryName'),
    ]


    class DyttPipeline(object):

        def __init__(self):
            dbargs = dict(
                host='your database host',
                db='your database name',
                user='your database user',
                passwd='your database password',
                charset='utf8',
                cursorclass=MySQLdb.cursors.DictCursor,
                use_unicode=True,
            )
            self.dbpool = adbapi.ConnectionPool('MySQLdb', **dbargs)

        def process_item(self, item, spider):
            # Run the insert on a pool thread and log failures instead of losing them
            d = self.dbpool.runInteraction(self.insert_into_table, item)
            d.addErrback(lambda failure: logging.warning("insert failed: %s", failure))
            return item

        def insert_into_table(self, cursor, item):
            # First check whether the movie is already stored
            cursor.execute(
                'select movie_id from tb_movie where full_title=%s and detail_url=%s',
                (item['fullTitle'], item['detailUrl']))
            if cursor.fetchone():
                logging.warning("movie already exists, skipping: %s", item['fullTitle'])
                return
            # Parameterized insert: the driver escapes the values (titles with quotes
            # no longer break the statement) and None is stored as NULL
            sql = 'insert into tb_movie (%s) values (%s)' % (
                ', '.join(column for column, _ in COLUMNS),
                ', '.join(['%s'] * len(COLUMNS)))
            cursor.execute(sql, [item.get(field) for _, field in COLUMNS])
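The check-then-insert idea from the pipeline can be tried out without a MySQL server. The sketch below swaps in Python's built-in sqlite3 module purely so that it runs anywhere; it is not the MySQLdb code from the pipeline, sqlite3 uses `?` placeholders where MySQLdb uses `%s`, and the cut-down table, the function name `insert_if_new` and the sample row are all made up for illustration.

```python
import sqlite3


def insert_if_new(conn, movie):
    """Insert movie unless a row with the same title and detail URL exists.

    Returns True when a row was inserted and False for a duplicate.
    """
    cur = conn.execute(
        "SELECT 1 FROM tb_movie WHERE full_title = ? AND detail_url = ?",
        (movie["full_title"], movie["detail_url"]),
    )
    if cur.fetchone() is not None:
        return False
    # Placeholders let the driver quote the values, so a title containing
    # quotes cannot break (or inject into) the statement.
    conn.execute(
        "INSERT INTO tb_movie (full_title, detail_url, category_name) "
        "VALUES (?, ?, ?)",
        (movie["full_title"], movie["detail_url"], movie["category_name"]),
    )
    conn.commit()
    return True


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tb_movie (movie_id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "full_title TEXT, detail_url TEXT, category_name TEXT)"
)
movie = {
    "full_title": 'A "quoted" title',  # made-up sample row
    "detail_url": "http://example.com/1.html",
    "category_name": "最新影片",
}
print(insert_if_new(conn, movie))  # first call inserts the row
print(insert_if_new(conn, movie))  # second call detects the duplicate
```

A unique index on the title and URL columns would enforce the same rule inside the database itself; the lookup shown here mirrors the approach the post describes.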

6. settings.py

    # Obey robots.txt
    ROBOTSTXT_OBEY = True

    # Imitate a browser
    USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36'

    # Register the pipeline
    ITEM_PIPELINES = {
        'dytt.pipelines.DyttPipeline': 300,
    }

7. Finally, run the spider

    # List all spiders in the project
    scrapy list

    # Run the getmovie spider

    scrapy crawl getmovie