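Face detection

1. Environment setup

First install ROS, then the ROS packages that provide OpenCV and USB camera support (this walkthrough uses ROS Kinetic):

$ sudo apt install ros-kinetic-desktop-full

$ sudo apt install ros-kinetic-opencv3

$ sudo apt install ros-kinetic-usb-cam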
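After installation, a quick import check can confirm that the Python modules used later in this walkthrough are visible to the interpreter. The following is only a minimal sketch: the helper name `missing_modules` is hypothetical, and the check only tests importability, not that the camera or ROS master actually works. It is written to run under both Python 2.7 and Python 3.

```python
import importlib


def missing_modules(names):
    """Return the module names from `names` that cannot be imported."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Modules imported by the scripts later in this walkthrough.
    required = ["rospy", "cv2", "numpy", "cv_bridge", "sensor_msgs.msg"]
    absent = missing_modules(required)
    if absent:
        print("Missing modules: " + ", ".join(absent))
    else:
        print("All required modules can be imported.")
```

Run it in a shell where the ROS setup file has been sourced; otherwise rospy and cv_bridge are likely to be reported as missing even when they are installed.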

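2. Create the workspace and package

Declare the dependencies when creating the package. The commands below source setup.zsh because they assume zsh; with bash, source the corresponding setup.bash files instead.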

$ source /opt/ros/kinetic/setup.zsh

$ mkdir -p ~/catkin_ws/src

$ cd ~/catkin_ws/src

$ catkin_init_workspace

$ cd ~/catkin_ws

$ catkin_make

$ source ~/catkin_ws/devel/setup.zsh

$ cd ~/catkin_ws/src

$ catkin_create_pkg test1 rospy roscpp std_msgs sensor_msgs cv_bridge image_transport

$ cd ~/catkin_ws

$ catkin_make

$ source devel/setup.zsh

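Create the directory structure

The scripts directory holds the code, and the launch directory holds the launch files.

$ cd ~/catkin_ws/src/test1

$ mkdir launch scripts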
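The same layout can be produced from Python, which also makes one easily missed detail explicit: a script that roslaunch starts through `type="..."` has to be executable. The sketch below is an illustration under assumptions, not part of the original steps; `create_package_layout` is a hypothetical helper, and a temporary directory stands in for ~/catkin_ws/src/test1.

```python
import os
import stat
import tempfile


def create_package_layout(package_dir, script_name):
    """Create launch/ and scripts/ under package_dir plus an empty, executable script."""
    for sub in ("launch", "scripts"):
        path = os.path.join(package_dir, sub)
        if not os.path.isdir(path):
            os.makedirs(path)
    script_path = os.path.join(package_dir, "scripts", script_name)
    # Equivalent of `touch`: create the file if it does not exist yet.
    open(script_path, "a").close()
    # Equivalent of `chmod +x`: add the execute bits to the current mode.
    mode = os.stat(script_path).st_mode
    os.chmod(script_path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return script_path


if __name__ == "__main__":
    # A temporary directory stands in for the real package directory.
    demo_root = tempfile.mkdtemp()
    created = create_package_layout(demo_root, "face_detector.py")
    print(sorted(os.listdir(demo_root)))
    print(os.access(created, os.X_OK))
```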

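3. Face detection program

Create the script and make it executable so that roslaunch can start it:

$ cd ~/catkin_ws/src/test1/scripts

$ touch face_detector.py

$ chmod +x face_detector.py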

#!/usr/bin/env python
# -*- coding: utf-8 -*-

import rospy
import cv2
import numpy as np
from sensor_msgs.msg import Image, RegionOfInterest
from cv_bridge import CvBridge, CvBridgeError


class faceDetector:
    def __init__(self):
        rospy.on_shutdown(self.cleanup)

        # Create the cv_bridge converter
        self.bridge = CvBridge()
        self.image_pub = rospy.Publisher("cv_bridge_image", Image, queue_size=1)

        # Paths to the Haar cascade XML files, passed in from the launch file
        cascade_1 = rospy.get_param("~cascade_1", "")
        cascade_2 = rospy.get_param("~cascade_2", "")

        # Initialize the Haar detectors from the cascade files
        self.cascade_1 = cv2.CascadeClassifier(cascade_1)
        self.cascade_2 = cv2.CascadeClassifier(cascade_2)

        # Detection parameters; they can be overridden in the launch file
        self.haar_scaleFactor = rospy.get_param("~haar_scaleFactor", 1.2)
        self.haar_minNeighbors = rospy.get_param("~haar_minNeighbors", 2)
        self.haar_minSize = rospy.get_param("~haar_minSize", 40)
        self.haar_maxSize = rospy.get_param("~haar_maxSize", 60)

        self.color = (50, 255, 50)

        # Subscribe to the RGB image topic; the topic name can be remapped in the launch file
        self.image_sub = rospy.Subscriber("input_rgb_image", Image, self.image_callback, queue_size=1)

    def image_callback(self, data):
        # Convert the ROS image message to an OpenCV image with cv_bridge
        try:
            cv_image = self.bridge.imgmsg_to_cv2(data, "bgr8")
            frame = np.array(cv_image, dtype=np.uint8)
        except CvBridgeError as e:
            # Skip this frame; otherwise frame would be undefined below
            rospy.logerr(e)
            return

        # Convert to grayscale
        grey_image = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # Equalize the histogram to reduce the effect of lighting
        grey_image = cv2.equalizeHist(grey_image)

        # Try to detect faces
        faces_result = self.detect_face(grey_image)

        # Outline every detected face on frame, the copy of the converted image
        if len(faces_result) > 0:
            for face in faces_result:
                x, y, w, h = face
                cv2.rectangle(frame, (x, y), (x + w, y + h), self.color, 2)

        # Convert the annotated image back to a ROS message and publish it
        self.image_pub.publish(self.bridge.cv2_to_imgmsg(frame, "bgr8"))

    def detect_face(self, input_image):
        faces = []

        # Try the frontal-face cascade first, if its XML file was loaded
        if not self.cascade_1.empty():
            # The final tuple is passed as the minSize argument, (width, height)
            faces = self.cascade_1.detectMultiScale(input_image,
                    self.haar_scaleFactor,
                    self.haar_minNeighbors,
                    cv2.CASCADE_SCALE_IMAGE,
                    (self.haar_minSize, self.haar_maxSize))

        # If no frontal face was found, fall back to the profile-face cascade
        if len(faces) == 0 and not self.cascade_2.empty():
            faces = self.cascade_2.detectMultiScale(input_image,
                    self.haar_scaleFactor,
                    self.haar_minNeighbors,
                    cv2.CASCADE_SCALE_IMAGE,
                    (self.haar_minSize, self.haar_maxSize))

        return faces

    def cleanup(self):
        print("Shutting down vision node.")
        cv2.destroyAllWindows()


if __name__ == '__main__':
    try:
        # Initialize the ROS node
        rospy.init_node("face_detector")
        faceDetector()
        rospy.loginfo("Face detector is started..")
        rospy.loginfo("Please subscribe the ROS image.")
        rospy.spin()
    except KeyboardInterrupt:
        print("Shutting down face detector node.")
        cv2.destroyAllWindows()

4. Launch files

$ cd ~/catkin_ws/src/test1/launch

$ touch usb_cam.launch face_detector.launch

usb_cam.launch starts the camera:

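<launch>
  <node name="usb_cam" pkg="usb_cam" type="usb_cam_node" output="screen">
    <param name="video_device" value="/dev/video0" />
    <param name="image_width" value="640" />
    <param name="image_height" value="480" />
    <param name="pixel_format" value="yuyv" />
    <param name="camera_frame_id" value="usb_cam" />
    <param name="io_method" value="mmap" />
  </node>
</launch>

face_detector.launch runs the face detection program. Its cascade paths use $(find robot_vision), so they assume a package named robot_vision that contains the Haar cascade XML files under data/haar_detectors; if the files are stored elsewhere, change the two paths accordingly.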

<launch>
  <node pkg="test1" name="face_detector" type="face_detector.py" output="screen">
    <remap from="input_rgb_image" to="/usb_cam/image_raw" />
    <rosparam>
      haar_scaleFactor: 1.2
      haar_minNeighbors: 2
      haar_minSize: 40
      haar_maxSize: 60
    </rosparam>
    <param name="cascade_1" value="$(find robot_vision)/data/haar_detectors/haarcascade_frontalface_alt.xml" />
    <param name="cascade_2" value="$(find robot_vision)/data/haar_detectors/haarcascade_profileface.xml" />
  </node>
</launch>

5. Running

Open three terminals and run one of the following commands in each:

$ roslaunch test1 usb_cam.launch

$ roslaunch test1 face_detector.launch

$ rqt_image_view    # rviz can also be used to subscribe to the image
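In rqt_image_view, the annotated images are on the topic the node publishes, `cv_bridge_image`, while the node's input comes from the remapped camera topic. The sketch below is a simplified model of how these names resolve, assuming the node runs in the root namespace. The helpers `join_names`, `resolve_name`, and `resolve_topic` are hypothetical and cover only global (`/x`), private (`~x`), and relative (`x`) names; rospy's own resolution handles more cases.

```python
def join_names(parent, child):
    """Join two graph-name parts with exactly one slash between them."""
    return parent.rstrip("/") + "/" + child.lstrip("/")


def resolve_name(name, node_name, namespace="/"):
    """Resolve a global, private, or relative name for the given node."""
    if name.startswith("/"):
        return name
    if name.startswith("~"):
        # Private names live under the node's own fully qualified name.
        node_path = join_names(namespace, node_name)
        return join_names(node_path, name[1:])
    return join_names(namespace, name)


def resolve_topic(name, node_name, remaps, namespace="/"):
    """Resolve a topic name, then apply a remap table keyed by resolved names."""
    resolved = resolve_name(name, node_name, namespace)
    return remaps.get(resolved, resolved)


if __name__ == "__main__":
    # The remap from face_detector.launch, keyed by the resolved source name.
    remaps = {resolve_name("input_rgb_image", "face_detector"): "/usb_cam/image_raw"}
    print(resolve_name("~cascade_1", "face_detector"))                  # /face_detector/cascade_1
    print(resolve_topic("input_rgb_image", "face_detector", remaps))   # /usb_cam/image_raw
    print(resolve_topic("cv_bridge_image", "face_detector", remaps))   # /cv_bridge_image
```

This is why the `<param>` and `<rosparam>` entries placed inside the `<node>` element are read in the script with a leading `~`, and why the result should appear under /cv_bridge_image in the viewer's topic list.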

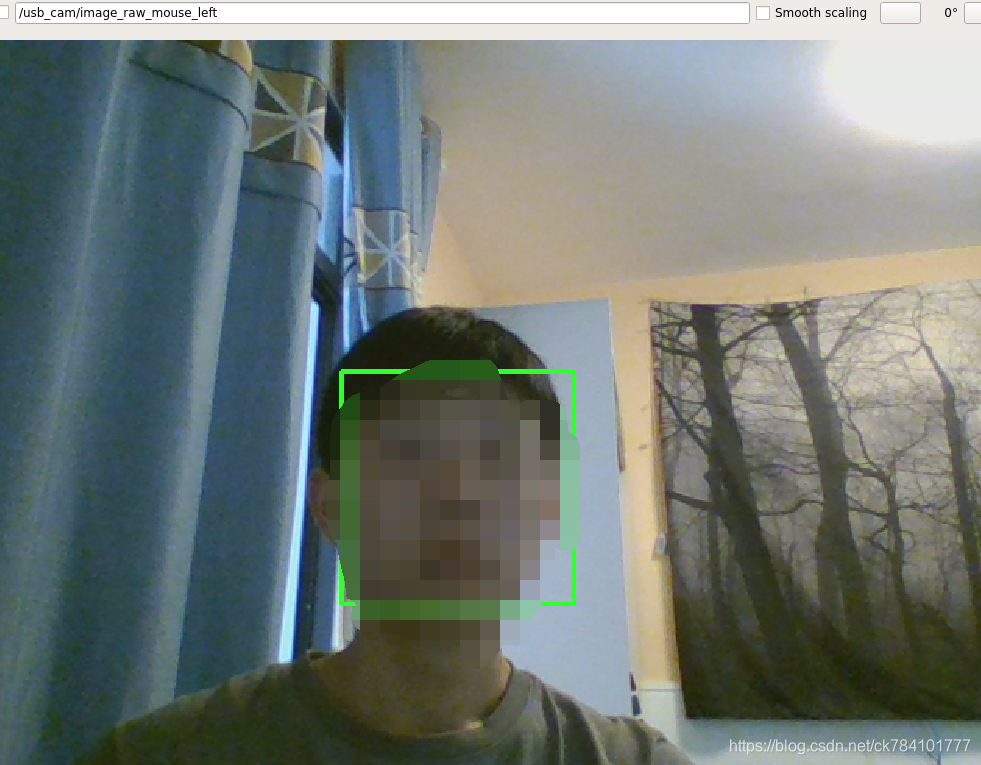

正面效果

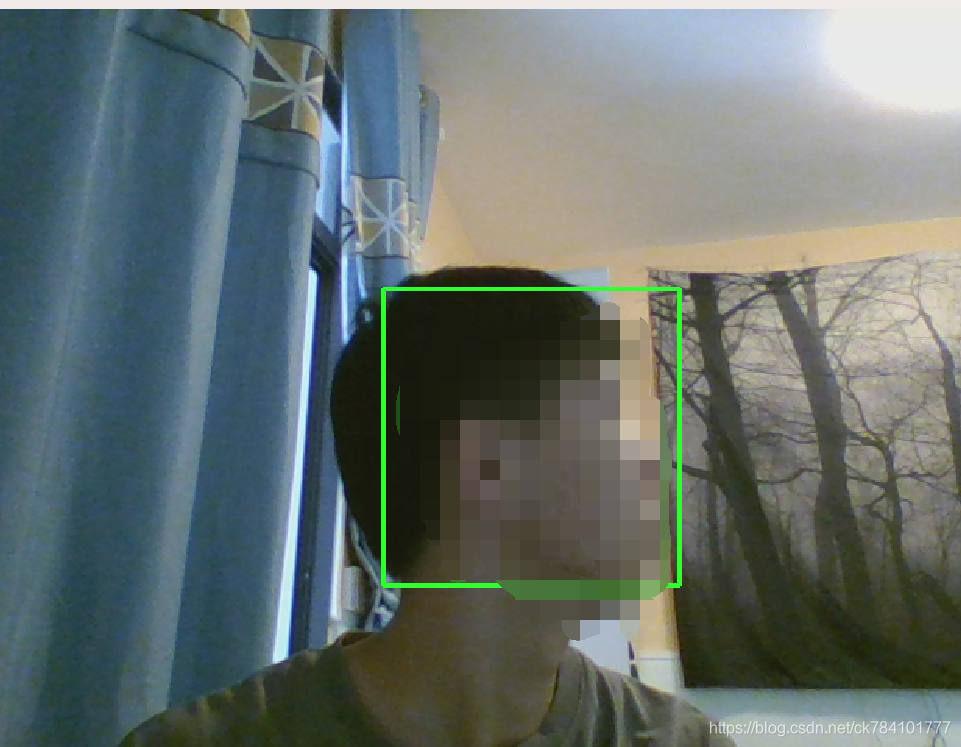

侧面效果
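The frontal and profile results come from the two-stage logic in `detect_face`: the frontal cascade runs first, and the profile cascade is consulted only when the first one finds nothing. The sketch below isolates that control flow without OpenCV. The two stub detectors, the dictionary used as an "image", and the helper names are all hypothetical stand-ins for illustration.

```python
def detect_with_fallback(image, detectors):
    """Run detectors in order and return the first non-empty list of boxes."""
    for detector in detectors:
        faces = detector(image)
        if len(faces) > 0:
            return faces
    return []


def box_corners(box):
    """Convert an (x, y, w, h) box into the two corner points used for drawing."""
    x, y, w, h = box
    return (x, y), (x + w, y + h)


def frontal_stub(image):
    # Hypothetical stand-in for the frontal-face cascade.
    return [(10, 10, 50, 50)] if image.get("pose") == "frontal" else []


def profile_stub(image):
    # Hypothetical stand-in for the profile-face cascade.
    return [(20, 15, 45, 55)] if image.get("pose") == "profile" else []


if __name__ == "__main__":
    detectors = [frontal_stub, profile_stub]
    for pose in ("frontal", "profile", "none"):
        boxes = detect_with_fallback({"pose": pose}, detectors)
        print(pose, [box_corners(b) for b in boxes])
```

One consequence of this ordering is that a frame is never searched with both cascades once the frontal cascade reports a face, so a frontal face and a profile face in the same frame would not both be outlined.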

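Object tracking

The program is listed below. It is used the same way as the face detector: save the script in the scripts directory as motion_detector.py (the name its launch file refers to), make it executable, and put the launch file in the launch directory.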

#!/usr/bin/env python
# -*- coding: utf-8 -*-

import rospy
import cv2
import numpy as np
from sensor_msgs.msg import Image, RegionOfInterest
from cv_bridge import CvBridge, CvBridgeError


class motionDetector:
    def __init__(self):
        rospy.on_shutdown(self.cleanup)

        # Create the cv_bridge converter
        self.bridge = CvBridge()
        self.image_pub = rospy.Publisher("cv_bridge_image", Image, queue_size=1)

        # Parameters: minimum contour area and binarization threshold
        self.minArea = rospy.get_param("~minArea", 500)
        self.threshold = rospy.get_param("~threshold", 25)

        self.firstFrame = None
        self.text = "Unoccupied"

        # Subscribe to the RGB image topic; the topic name can be remapped in the launch file
        self.image_sub = rospy.Subscriber("input_rgb_image", Image, self.image_callback, queue_size=1)

    def image_callback(self, data):
        # Convert the ROS image message to an OpenCV image with cv_bridge
        try:
            cv_image = self.bridge.imgmsg_to_cv2(data, "bgr8")
            frame = np.array(cv_image, dtype=np.uint8)
        except CvBridgeError as e:
            # Skip this frame; otherwise frame would be undefined below
            rospy.logerr(e)
            return

        # Convert to grayscale and blur to suppress noise
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        gray = cv2.GaussianBlur(gray, (21, 21), 0)

        # Keep the first frame as the reference; later frames are compared
        # against it to find the regions where something has moved
        if self.firstFrame is None:
            self.firstFrame = gray
            return

        frameDelta = cv2.absdiff(self.firstFrame, gray)
        thresh = cv2.threshold(frameDelta, self.threshold, 255, cv2.THRESH_BINARY)[1]
        thresh = cv2.dilate(thresh, None, iterations=2)

        # findContours returns 3 values in OpenCV 3 and 2 in OpenCV 4;
        # the contour list is the second-to-last value in both
        cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]

        # Reset the status so it reflects the current frame only
        self.text = "Unoccupied"

        for c in cnts:
            # Ignore regions smaller than the configured minimum area
            if cv2.contourArea(c) < self.minArea:
                continue

            # Outline the detected object on the output image
            (x, y, w, h) = cv2.boundingRect(c)
            cv2.rectangle(frame, (x, y), (x + w, y + h), (50, 255, 50), 2)
            self.text = "Occupied"

        # Print the current status on the output image
        cv2.putText(frame, "Status: {}".format(self.text), (10, 20),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 2)

        # Convert the annotated image back to a ROS message and publish it
        self.image_pub.publish(self.bridge.cv2_to_imgmsg(frame, "bgr8"))

    def cleanup(self):
        print("Shutting down vision node.")
        cv2.destroyAllWindows()


if __name__ == '__main__':
    try:
        # Initialize the ROS node
        rospy.init_node("motion_detector")
        rospy.loginfo("motion_detector node is started...")
        rospy.loginfo("Please subscribe the ROS image.")
        motionDetector()
        rospy.spin()
    except KeyboardInterrupt:
        print("Shutting down motion detector node.")
        cv2.destroyAllWindows()

launch

<launch>

<node pkg="test1" name="motion_detector" type="motion_detector.py" output="screen">

<remap from="input_rgb_image" to="/usb_cam/image_raw" />

<rosparam>

minArea: 500

threshold: 25

</rosparam>

</node>

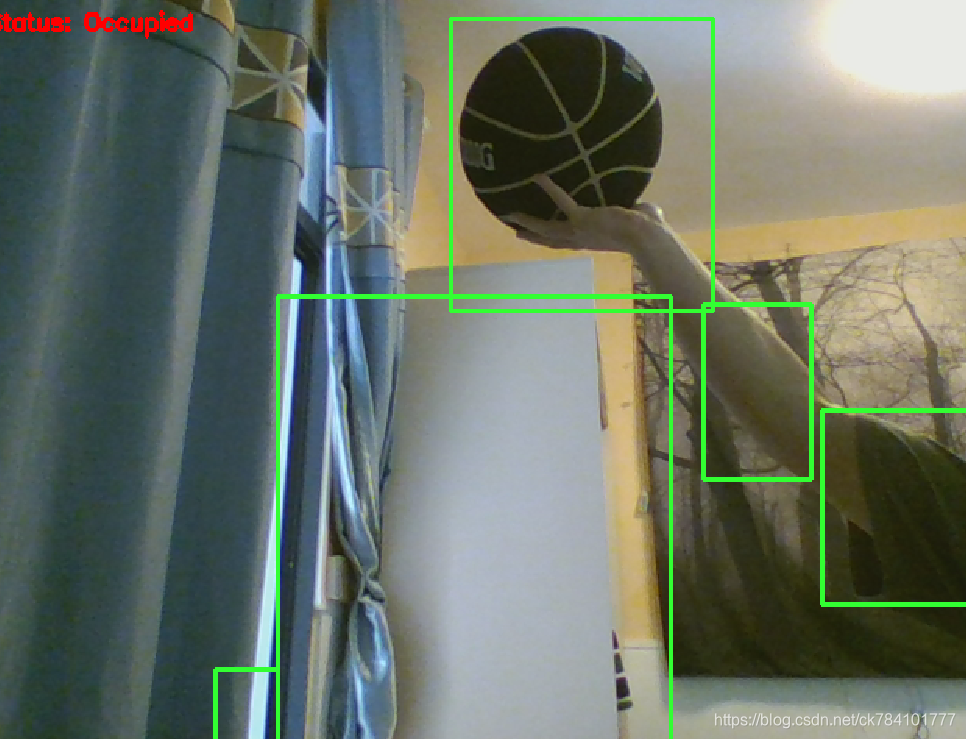

</launch>执行效果

被识别出的物体有篮球,柜子,手臂,衣袖,被识别物体用边框圈出
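The core of the motion detector is frame differencing: subtract a reference frame from the current frame, binarize the difference, and box the pixels that changed. The sketch below reproduces that idea on tiny grayscale frames stored as nested lists, so it runs without OpenCV. It is a simplification under stated assumptions: the helper names are hypothetical, there is no blur or dilation, the area is a plain pixel count rather than `cv2.contourArea`, and one box covers all changed pixels instead of one box per contour.

```python
def abs_diff(reference, current):
    """Per-pixel absolute difference of two equally sized grayscale frames."""
    return [[abs(c - r) for r, c in zip(ref_row, cur_row)]
            for ref_row, cur_row in zip(reference, current)]


def binarize(image, threshold, max_value=255):
    """Set pixels strictly above threshold to max_value and the rest to 0."""
    return [[max_value if p > threshold else 0 for p in row] for row in image]


def bounding_box(mask):
    """Return (x, y, w, h) around all non-zero pixels, or None if there are none."""
    points = [(x, y) for y, row in enumerate(mask) for x, p in enumerate(row) if p]
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1)


def detect_motion(reference, current, threshold=25, min_area=4):
    """Return a status string and the box of the changed region, if large enough."""
    mask = binarize(abs_diff(reference, current), threshold)
    area = sum(1 for row in mask for p in row if p)
    if area < min_area:
        return "Unoccupied", None
    return "Occupied", bounding_box(mask)


if __name__ == "__main__":
    # Reference frame: uniform background, 6 rows by 8 columns.
    reference = [[10] * 8 for _ in range(6)]
    # Current frame: a bright 3x2 object appears at columns 3-5, rows 2-3.
    current = [row[:] for row in reference]
    for y in (2, 3):
        for x in (3, 4, 5):
            current[y][x] = 200
    status, box = detect_motion(reference, current)
    print(status)  # Occupied
    print(box)     # (3, 2, 3, 2)
```

Because every frame is compared against the first one, anything that differs from that initial view is reported, including static objects that entered the scene earlier or changes caused by moving the camera.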