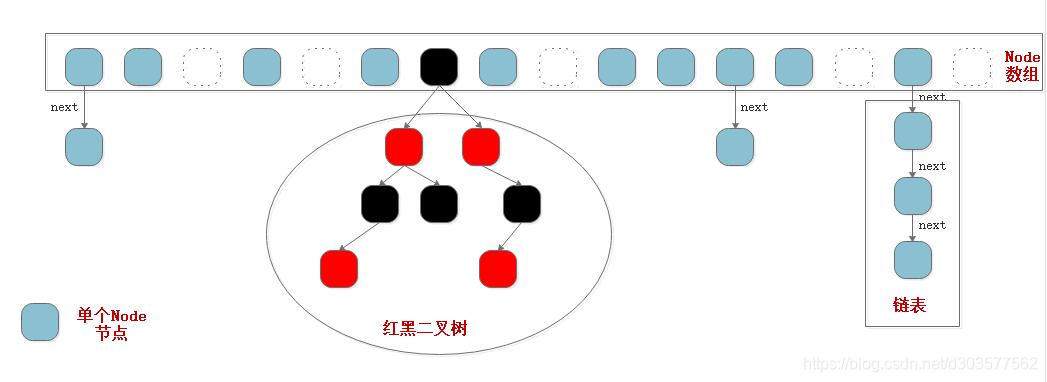

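1. Underlying structure

ConcurrentHashMap has the same underlying structure as HashMap (in JDK 8, whose source is quoted throughout this article): an array of bins, where each bin holds a linked list or, once it grows long enough, a red-black tree.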
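The array part is indexed by the key's hash. Here is a minimal sketch, assuming JDK 8 behaviour, of how a key is mapped to a bin. `spread()` and `HASH_BITS` mirror the JDK 8 helpers used in `putVal()`. The class `BucketIndexDemo` and the sample keys are hypothetical.

```java
// Hypothetical demo class; spread() and HASH_BITS mirror JDK 8 ConcurrentHashMap.
public class BucketIndexDemo {
    // 0x7fffffff clears the sign bit: negative hashes are reserved for
    // special nodes such as ForwardingNode (MOVED) and TreeBin (TREEBIN).
    static final int HASH_BITS = 0x7fffffff;

    // XOR the high 16 bits into the low 16 bits so they influence the index,
    // then make the result non-negative.
    static int spread(int h) {
        return (h ^ (h >>> 16)) & HASH_BITS;
    }

    // n is a power of two, so (n - 1) & hash keeps the low bits of hash,
    // which equals hash % n for a non-negative hash.
    static int indexFor(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int n = 16; // table length
        for (String key : new String[] {"apple", "banana", "cherry"}) {
            int hash = spread(key.hashCode());
            System.out.println(key + " -> bin " + indexFor(hash, n));
        }
    }
}
```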

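2. When and why a linked list is converted to a red-black tree

1. When it happens

In putVal(), after a new node has been appended to a bin's linked list, the list length is checked. If the list is longer than 8 (counting the newly added node) and the table length is at least 64, the list is converted to a red-black tree. Otherwise the table is resized instead.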
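The decision described above can be condensed into a small hypothetical helper. It assumes the JDK 8 constant values `TREEIFY_THRESHOLD = 8` and `MIN_TREEIFY_CAPACITY = 64`. The enum and method names are made up for illustration, and `RESIZE` stands for the call to `tryPresize()`.

```java
// Hypothetical helper condensing the rule applied after a list append:
// putVal() calls treeifyBin() once binCount >= TREEIFY_THRESHOLD, and
// treeifyBin() resizes instead of treeifying while the table is small.
public class TreeifyDecision {
    static final int TREEIFY_THRESHOLD = 8;     // same value as in JDK 8
    static final int MIN_TREEIFY_CAPACITY = 64; // same value as in JDK 8

    enum Action { NONE, RESIZE, TREEIFY }

    // binCount: length of the list before the new node was appended.
    static Action afterAppend(int binCount, int tableLength) {
        if (binCount < TREEIFY_THRESHOLD) return Action.NONE;
        return tableLength < MIN_TREEIFY_CAPACITY ? Action.RESIZE : Action.TREEIFY;
    }

    public static void main(String[] args) {
        System.out.println(afterAppend(7, 64)); // NONE: the list now has 8 nodes
        System.out.println(afterAppend(8, 16)); // RESIZE: 9 nodes, table too small
        System.out.println(afterAppend(8, 64)); // TREEIFY: 9 nodes, table >= 64
    }
}
```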

```java
final V putVal(K key, V value, boolean onlyIfAbsent) {
    if (key == null || value == null) throw new NullPointerException();
    int hash = spread(key.hashCode());
    int binCount = 0;
    for (Node<K,V>[] tab = table;;) {
        Node<K,V> f; int n, i, fh;
        if (tab == null || (n = tab.length) == 0)
            tab = initTable();
        else if ((f = tabAt(tab, i = (n - 1) & hash)) == null) {
            // The slot is empty:
            // put the new Node there with a single CAS.
            // If the CAS fails, another thread got there first; loop again.
            if (casTabAt(tab, i, null,
                         new Node<K,V>(hash, key, value, null)))
                break;
        }
        else if ((fh = f.hash) == MOVED)
            // The bin was moved by an ongoing resize: help transfer, then retry
            tab = helpTransfer(tab, f);
        else {
            V oldVal = null;
            synchronized (f) {
                if (tabAt(tab, i) == f) {
                    if (fh >= 0) {
                        // binCount starts at 1
                        binCount = 1;
                        // binCount is the (1-based) position of node e in the list
                        for (Node<K,V> e = f;; ++binCount) {
                            K ek;
                            if (e.hash == hash &&
                                ((ek = e.key) == key ||
                                 (ek != null && key.equals(ek)))) {
                                oldVal = e.val;
                                if (!onlyIfAbsent)
                                    e.val = value;
                                break;
                            }
                            Node<K,V> pred = e;
                            // binCount is not incremented when the new node is appended,
                            // so it holds the list length before the insertion
                            if ((e = e.next) == null) {
                                pred.next = new Node<K,V>(hash, key,
                                                          value, null);
                                break;
                            }
                        }
                    }
                    else if (f instanceof TreeBin) {
                        Node<K,V> p;
                        binCount = 2;
                        if ((p = ((TreeBin<K,V>)f).putTreeVal(hash, key,
                                                       value)) != null) {
                            oldVal = p.val;
                            if (!onlyIfAbsent)
                                p.val = value;
                        }
                    }
                }
            }
            if (binCount != 0) {
                // The list had at least 8 nodes, i.e. at least 9 after the append
                if (binCount >= TREEIFY_THRESHOLD)
                    treeifyBin(tab, i);
                if (oldVal != null)
                    return oldVal;
                break;
            }
        }
    }
    addCount(1L, binCount);
    return null;
}
```
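To check what `binCount` holds when the threshold test runs, here is a stripped-down re-creation of the list loop. It uses a hypothetical `Node` class with `int` keys, with no values and no locking. Appending to a list of 8 nodes leaves `binCount` at 8 while the list now has 9 nodes, which is exactly the case where `binCount >= TREEIFY_THRESHOLD` fires.

```java
// Hypothetical re-creation of putVal()'s list loop to show what binCount holds.
public class BinCountDemo {
    static final class Node {
        final int key;
        Node next;
        Node(int key, Node next) { this.key = key; this.next = next; }
    }

    // Appends key to the list headed by f (if absent) and returns binCount,
    // following the same loop structure as putVal().
    static int append(Node f, int key) {
        int binCount = 1;
        for (Node e = f;; ++binCount) {
            if (e.key == key) break;             // existing key: value would be replaced
            Node pred = e;
            if ((e = e.next) == null) {
                pred.next = new Node(key, null); // binCount is NOT incremented here
                break;
            }
        }
        return binCount;
    }

    // Builds a list with keys 1..length.
    static Node listOf(int length) {
        Node head = null;
        for (int k = length; k >= 1; k--) head = new Node(k, head);
        return head;
    }

    static int length(Node head) {
        int len = 0;
        for (Node e = head; e != null; e = e.next) len++;
        return len;
    }

    public static void main(String[] args) {
        Node head = listOf(8);
        int binCount = append(head, 100);
        System.out.println(binCount + " " + length(head)); // prints "8 9"
    }
}
```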

```java
private final void treeifyBin(Node<K,V>[] tab, int index) {
    Node<K,V> b; int n, sc;
    if (tab != null) {
        // First check whether the table is shorter than 64 (MIN_TREEIFY_CAPACITY);
        // if so, grow the table (tryPresize) instead of converting to a tree
        if ((n = tab.length) < MIN_TREEIFY_CAPACITY)
            tryPresize(n << 1);
        else if ((b = tabAt(tab, index)) != null && b.hash >= 0) {
            synchronized (b) {
                if (tabAt(tab, index) == b) {
                    // Copy the list into a doubly linked chain of TreeNodes
                    TreeNode<K,V> hd = null, tl = null;
                    for (Node<K,V> e = b; e != null; e = e.next) {
                        TreeNode<K,V> p =
                            new TreeNode<K,V>(e.hash, e.key, e.val,
                                              null, null);
                        if ((p.prev = tl) == null)
                            hd = p;
                        else
                            tl.next = p;
                        tl = p;
                    }
                    // The TreeBin constructor builds the red-black tree from the chain
                    setTabAt(tab, index, new TreeBin<K,V>(hd));
                }
            }
        }
    }
}
```
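Before any tree is built, `treeifyBin()` only copies the list into a doubly linked chain of TreeNodes, and the TreeBin constructor then builds the red-black tree from that chain. The sketch below shows the `hd`/`tl` idiom on its own. The key-only `Node` and `TreeNode` classes are hypothetical stand-ins for the JDK classes.

```java
// Hypothetical stand-ins for ConcurrentHashMap.Node / TreeNode (key only).
public class TreeNodeChainDemo {
    static final class Node {
        final int key;
        final Node next;
        Node(int key, Node next) { this.key = key; this.next = next; }
    }

    static final class TreeNode {
        final int key;
        TreeNode prev, next;
        TreeNode(int key) { this.key = key; }
    }

    // Same hd/tl loop as treeifyBin(): copy each list node into a TreeNode,
    // keeping the original order and linking prev/next pointers.
    static TreeNode toTreeNodeChain(Node b) {
        TreeNode hd = null, tl = null;
        for (Node e = b; e != null; e = e.next) {
            TreeNode p = new TreeNode(e.key);
            if ((p.prev = tl) == null)
                hd = p;      // first node becomes the head
            else
                tl.next = p; // append after the current tail
            tl = p;
        }
        return hd;
    }

    public static void main(String[] args) {
        Node list = new Node(1, new Node(2, new Node(3, null)));
        for (TreeNode p = toTreeNodeChain(list); p != null; p = p.next)
            System.out.println(p.key + " (prev: " + (p.prev == null ? "none" : p.prev.key) + ")");
    }
}
```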

2. Why

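Searching a linked list of N nodes takes about N/2 comparisons on average, which is O(N). Searching a red-black tree takes O(log N).

With N = 8, a list lookup costs about 8/2 = 4 comparisons, while a tree lookup costs about log₂8 = 3.

So the tree is already slightly faster at this size, and the gap widens as N grows.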
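Here are the numbers for a few sizes, assuming the idealized costs used above: N/2 comparisons for a list and ⌊log₂N⌋ for a balanced tree. A red-black tree's height can reach about 2·log₂N in the worst case, so the tree figures are optimistic. The class name is hypothetical.

```java
// Hypothetical demo comparing idealized lookup costs.
public class LookupCostDemo {
    // Average comparisons for a successful search in a list of n nodes.
    static double listAverage(int n) {
        return n / 2.0;
    }

    // floor(log2 n), the idealized tree cost used in the text (n > 0).
    static int log2Floor(int n) {
        return 31 - Integer.numberOfLeadingZeros(n);
    }

    public static void main(String[] args) {
        for (int n : new int[] {8, 16, 64, 1024}) {
            System.out.println("N=" + n + "  list~" + listAverage(n) + "  tree~" + log2Floor(n));
        }
    }
}
```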

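However, keeping a red-black tree balanced makes insertions and deletions considerably more expensive. So why convert once the list grows beyond 8?

Here is part of the implementation notes in the ConcurrentHashMap source: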

```
 * The main disadvantage of per-bin locks is that other update
 * operations on other nodes in a bin list protected by the same
 * lock can stall, for example when user equals() or mapping
 * functions take a long time. However, statistically, under
 * random hash codes, this is not a common problem. Ideally, the
 * frequency of nodes in bins follows a Poisson distribution
 * (http://en.wikipedia.org/wiki/Poisson_distribution) with a
 * parameter of about 0.5 on average, given the resizing threshold
 * of 0.75, although with a large variance because of resizing
 * granularity. Ignoring variance, the expected occurrences of
 * list size k are (exp(-0.5) * pow(0.5, k) / factorial(k)). The
 * first values are:
 *
 * 0:    0.60653066
 * 1:    0.30326533
 * 2:    0.07581633
 * 3:    0.01263606
 * 4:    0.00157952
 * 5:    0.00015795
 * 6:    0.00001316
 * 7:    0.00000094
 * 8:    0.00000006
 * more: less than 1 in ten million
```

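In other words, with random hash codes and the 0.75 resize threshold, the number of nodes that land in one bin follows a Poisson distribution with a parameter of about 0.5. In the table, k is the number of nodes in a bin, so k = 0 is an empty bin. A bin with 8 nodes already has a probability of only 0.00000006 (about 6 in 100 million), which is below one in ten million. Bins with more than 8 nodes are rarer still, and that is the case that triggers treeification (9 nodes counting the new one).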
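The probabilities in the quoted table can be reproduced from the formula `exp(-0.5) * pow(0.5, k) / factorial(k)` given in the source comment. The sketch below prints k = 0..8 and the remaining tail. The class name is hypothetical.

```java
// Hypothetical demo reproducing the Poisson table from the source comment.
public class PoissonBinSizes {
    // P(bin has k nodes) = exp(-0.5) * 0.5^k / k!
    static double probability(int k) {
        double factorial = 1.0;
        for (int j = 2; j <= k; j++) factorial *= j;
        return Math.exp(-0.5) * Math.pow(0.5, k) / factorial;
    }

    public static void main(String[] args) {
        double cumulative = 0.0;
        for (int k = 0; k <= 8; k++) {
            double p = probability(k);
            cumulative += p;
            System.out.printf("%d: %.8f%n", k, p);
        }
        // Probability that a bin holds more than 8 nodes
        System.out.printf("more: %.2e%n", 1.0 - cumulative);
    }
}
```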

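So a list that reaches length 8 is already an extremely rare event, and further insertions into such a bin are even less likely. The extra cost of maintaining the tree on insertion therefore hardly matters in practice. What counts is lookup cost, and there the red-black tree has the clear advantage.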

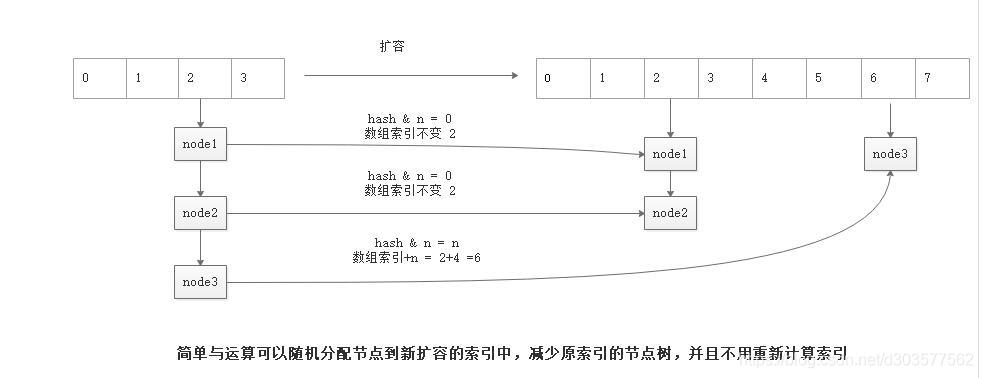

3. Resizing

ConcurrentHashMap's resizing, in brief:

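1. Trigger: while the table is shorter than 64, a resize is triggered both when a bin is about to be treeified and when the element count reaches the threshold (sizeCtl, i.e. 0.75 × capacity). Once the table length is 64 or more, only reaching the element-count threshold triggers it.

2. New size: as in HashMap, the table doubles in size.

3. Concurrency: several threads can take part in the same resize. Thread safety is ensured with synchronized on bin head nodes plus CAS.

4. Process: allocate a new node array twice as large, then move the bins of the old array into it.

5. Participating threads: the thread that triggered the resize, plus any thread that tries to modify the map during the resize (for example with put or replace) and finds a bin that has already been moved. Such threads call helpTransfer() and help with the transfer.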
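The numbers involved in one resize can be sketched single-threadedly. This assumes the JDK 8 values and formulas shown in `transfer()`: `MIN_TRANSFER_STRIDE = 16`, `stride = (n >>> 3) / NCPU`, and next `sizeCtl = (n << 1) - (n >>> 1)`. `ResizePlan` and its methods are hypothetical. The loop only mimics the order in which threads would claim ranges by CAS-ing `transferIndex` downward, without any real concurrency.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, single-threaded sketch of the numbers transfer() works with.
public class ResizePlan {
    static final int MIN_TRANSFER_STRIDE = 16; // same value as in JDK 8

    static int newCapacity(int n) { return n << 1; }

    // sizeCtl after the resize: 2n - n/2 = 0.75 * (2n)
    static int nextSizeCtl(int n) { return (n << 1) - (n >>> 1); }

    // Number of bins one thread claims per task.
    static int stride(int n, int ncpu) {
        int s = (ncpu > 1) ? (n >>> 3) / ncpu : n;
        return s < MIN_TRANSFER_STRIDE ? MIN_TRANSFER_STRIDE : s;
    }

    // Ranges [bound, nextIndex) in the order they would be claimed,
    // starting from transferIndex = n and moving toward 0.
    static List<int[]> claimOrder(int n, int ncpu) {
        int s = stride(n, ncpu);
        List<int[]> ranges = new ArrayList<>();
        int transferIndex = n;
        while (transferIndex > 0) {
            int nextIndex = transferIndex;
            int nextBound = nextIndex > s ? nextIndex - s : 0;
            ranges.add(new int[] {nextBound, nextIndex});
            transferIndex = nextBound; // what a successful CAS would store
        }
        return ranges;
    }

    public static void main(String[] args) {
        int n = 64, ncpu = 2;
        System.out.println("capacity " + n + " -> " + newCapacity(n)
                + ", next sizeCtl " + nextSizeCtl(n));
        for (int[] r : claimOrder(n, ncpu))
            System.out.println("bins [" + r[0] + ", " + r[1] + ")");
    }
}
```

With 64 bins and 2 CPUs, `(64 >>> 3) / 2 = 4` is below the minimum, so each task covers 16 bins and there are four tasks. A 16-bin table always forms a single task.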

Resizing is implemented by the transfer() method:

```java
private final void transfer(Node<K,V>[] tab, Node<K,V>[] nextTab) {
    int n = tab.length, stride;
    // Compute the stride: the number of bins one thread claims per task.
    // On a multi-core machine it is (n / 8) / NCPU, but at least 16
    // (MIN_TRANSFER_STRIDE), so with a table of length 16 a single task
    // covers the whole table and only one thread does the transfer.
    if ((stride = (NCPU > 1) ? (n >>> 3) / NCPU : n) < MIN_TRANSFER_STRIDE)
        stride = MIN_TRANSFER_STRIDE; // subdivide range
    if (nextTab == null) {
        // Allocate the new array (only the thread that starts the resize gets here)
        try {
            @SuppressWarnings("unchecked")
            Node<K,V>[] nt = (Node<K,V>[])new Node<?,?>[n << 1];
            nextTab = nt;
        } catch (Throwable ex) { // try to cope with OOME
            sizeCtl = Integer.MAX_VALUE;
            return;
        }
        nextTable = nextTab;
        transferIndex = n;
    }
    int nextn = nextTab.length;
    // Placed in an old slot once its bin has been moved; its hash is MOVED
    // and it points to nextTab, so readers and writers are redirected there
    ForwardingNode<K,V> fwd = new ForwardingNode<K,V>(nextTab);
    // true:  move on to the next index (claiming a new range if needed)
    // false: process bin i now, or stop because there is no work left
    boolean advance = true;
    // Ensures a final sweep of the old table before committing nextTab
    boolean finishing = false;
    for (int i = 0, bound = 0;;) {
        Node<K,V> f; int fh;
        while (advance) {
            int nextIndex, nextBound;
            // Still inside the claimed range (or in the final sweep): step to bin i - 1
            if (--i >= bound || finishing)
                advance = false;
            // Every range has already been claimed
            else if ((nextIndex = transferIndex) <= 0) {
                i = -1;
                advance = false;
            }
            // Try to claim the next range with a CAS on transferIndex;
            // on success this thread moves bins [nextBound, nextIndex), high to low
            else if (U.compareAndSwapInt
                     (this, TRANSFERINDEX, nextIndex,
                      nextBound = (nextIndex > stride ?
                                   nextIndex - stride : 0))) {
                bound = nextBound;
                i = nextIndex - 1;
                advance = false;
            }
        }
        if (i < 0 || i >= n || i + n >= nextn) {
            int sc;
            if (finishing) {
                // All done: publish the new table, clear nextTable, and set
                // sizeCtl to the next threshold, 2n - n/2 = 0.75 * (2n)
                nextTable = null;
                table = nextTab;
                sizeCtl = (n << 1) - (n >>> 1);
                return;
            }
            // A negative sizeCtl means the table is being initialized (-1) or resized.
            // The field's Javadoc describes the resize value as
            // -(1 + number of active resizing threads); in the JDK 8 code it is
            // (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2 for the first resizer,
            // plus 1 per helper. This thread is done: decrement to deregister.
            if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {
                // Not equal: other threads are still transferring, so just leave
                if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)
                    return;
                // This is the last thread: the transfer is complete
                finishing = advance = true;
                // Re-scan the whole old table before committing
                i = n;
            }
        }
        // Empty bin: CAS the forwarding node in to mark it as moved
        else if ((f = tabAt(tab, i)) == null)
            advance = casTabAt(tab, i, null, fwd);
        // Already a forwarding node: this bin has been processed
        else if ((fh = f.hash) == MOVED)
            advance = true;
        else {
            // Lock only this bin's head node
            synchronized (f) {
                if (tabAt(tab, i) == f) {
                    Node<K,V> ln, hn;
                    if (fh >= 0) {
                        int runBit = fh & n;
                        // lastRun: start of the longest trailing run whose nodes all
                        // go to the same new bin; that tail is reused without copying
                        Node<K,V> lastRun = f;
                        for (Node<K,V> p = f.next; p != null; p = p.next) {
                            // Because n is a power of two,
                            // b can only be 0 or n
                            int b = p.hash & n;
                            if (b != runBit) {
                                runBit = b;
                                lastRun = p;
                            }
                        }
                        // 0: these nodes keep index i in the new table
                        if (runBit == 0) {
                            ln = lastRun;
                            hn = null;
                        }
                        // n: these nodes move to index i + n
                        else {
                            hn = lastRun;
                            ln = null;
                        }
                        // Copy the nodes before lastRun onto the lo / hi lists
                        for (Node<K,V> p = f; p != lastRun; p = p.next) {
                            int ph = p.hash; K pk = p.key; V pv = p.val;
                            if ((ph & n) == 0)
                                ln = new Node<K,V>(ph, pk, pv, ln);
                            else
                                hn = new Node<K,V>(ph, pk, pv, hn);
                        }
                        // Move the bin's contents into the new table
                        setTabAt(nextTab, i, ln);
                        setTabAt(nextTab, i + n, hn);
                        // Mark the old slot as processed
                        setTabAt(tab, i, fwd);
                        advance = true;
                    }
                    else if (f instanceof TreeBin) {
                        TreeBin<K,V> t = (TreeBin<K,V>)f;
                        TreeNode<K,V> lo = null, loTail = null;
                        TreeNode<K,V> hi = null, hiTail = null;
                        int lc = 0, hc = 0;
                        for (Node<K,V> e = t.first; e != null; e = e.next) {
                            int h = e.hash;
                            TreeNode<K,V> p = new TreeNode<K,V>
                                (h, e.key, e.val, null, null);
                            if ((h & n) == 0) {
                                if ((p.prev = loTail) == null)
                                    lo = p;
                                else
                                    loTail.next = p;
                                loTail = p;
                                ++lc;
                            }
                            else {
                                if ((p.prev = hiTail) == null)
                                    hi = p;
                                else
                                    hiTail.next = p;
                                hiTail = p;
                                ++hc;
                            }
                        }
                        // After the split, a half with 6 or fewer nodes
                        // (UNTREEIFY_THRESHOLD) is converted back to a linked list
                        ln = (lc <= UNTREEIFY_THRESHOLD) ? untreeify(lo) :
                            (hc != 0) ? new TreeBin<K,V>(lo) : t;
                        hn = (hc <= UNTREEIFY_THRESHOLD) ? untreeify(hi) :
                            (lc != 0) ? new TreeBin<K,V>(hi) : t;
                        setTabAt(nextTab, i, ln);
                        setTabAt(nextTab, i + n, hn);
                        setTabAt(tab, i, fwd);
                        advance = true;
                    }
                }
            }
        }
    }
}
```
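The lo/hi split relies on a single bit. Since n is a power of two, a node's new index is either i (when `hash & n == 0`) or i + n. The hypothetical sketch below checks this on four hashes that share old bin 5 in a 16-slot table. It ignores the `lastRun` optimization and node order, both of which the real code handles.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical demo of the lo/hi split rule used by transfer().
public class LoHiSplitDemo {
    // Bin index in a table of length n (n is a power of two).
    static int indexFor(int hash, int n) {
        return hash & (n - 1);
    }

    // Split the hashes of one old bin by the single bit (hash & n):
    // get(0) is the "lo" list (stays at index i), get(1) the "hi" list (moves to i + n).
    static List<List<Integer>> split(List<Integer> hashes, int n) {
        List<Integer> lo = new ArrayList<>();
        List<Integer> hi = new ArrayList<>();
        for (int h : hashes) {
            if ((h & n) == 0) lo.add(h);
            else hi.add(h);
        }
        List<List<Integer>> result = new ArrayList<>();
        result.add(lo);
        result.add(hi);
        return result;
    }

    public static void main(String[] args) {
        int n = 16;
        // All four hashes share old bin 5 because their low 4 bits are 0101.
        List<Integer> bin5 = Arrays.asList(5, 21, 37, 53);
        for (int h : bin5) {
            String side = (h & n) == 0 ? "lo" : "hi";
            System.out.println(h + ": " + side + ", new bin " + indexFor(h, 2 * n));
        }
        // 5: lo, new bin 5 / 21: hi, new bin 21 / 37: lo, new bin 5 / 53: hi, new bin 21
    }
}
```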

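4. Synchronization

ConcurrentHashMap achieves thread safety with CAS plus synchronized on individual bin head nodes.

1. CAS methods

Accessing the node array: tabAt() is a volatile read of one slot, casTabAt() is a CAS on it, and setTabAt() is a volatile write. The expression ((long)i << ASHIFT) + ABASE is the memory offset of element i, where ABASE is the array's base offset and ASHIFT is log2 of the element size.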

```java
// Volatile read of tab[i]
static final <K,V> Node<K,V> tabAt(Node<K,V>[] tab, int i) {
    return (Node<K,V>)U.getObjectVolatile(tab, ((long)i << ASHIFT) + ABASE);
}

// CAS tab[i] from c to v; fails if tab[i] is no longer c
static final <K,V> boolean casTabAt(Node<K,V>[] tab, int i,
                                    Node<K,V> c, Node<K,V> v) {
    return U.compareAndSwapObject(tab, ((long)i << ASHIFT) + ABASE, c, v);
}

// Volatile write of tab[i] (always called while holding the bin lock)
static final <K,V> void setTabAt(Node<K,V>[] tab, int i, Node<K,V> v) {
    U.putObjectVolatile(tab, ((long)i << ASHIFT) + ABASE, v);
}
```
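Outside the JDK, the same three slot operations can be expressed with the public `java.util.concurrent.atomic.AtomicReferenceArray` instead of `sun.misc.Unsafe`. This is a swapped-in substitute for illustration, not what ConcurrentHashMap itself uses. The wrapper names simply echo the JDK helpers, and the class name is hypothetical.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical analogue of tabAt/casTabAt/setTabAt using a public API
// (AtomicReferenceArray) in place of sun.misc.Unsafe.
public class SlotAccessDemo {
    // Volatile read of slot i
    static <T> T tabAt(AtomicReferenceArray<T> tab, int i) {
        return tab.get(i);
    }

    // Atomically replace slot i with v only if it still holds c (compared with ==)
    static <T> boolean casTabAt(AtomicReferenceArray<T> tab, int i, T c, T v) {
        return tab.compareAndSet(i, c, v);
    }

    // Volatile write of slot i
    static <T> void setTabAt(AtomicReferenceArray<T> tab, int i, T v) {
        tab.set(i, v);
    }

    public static void main(String[] args) {
        AtomicReferenceArray<String> tab = new AtomicReferenceArray<>(16);
        System.out.println(casTabAt(tab, 3, null, "first"));  // true: slot was empty
        System.out.println(casTabAt(tab, 3, null, "second")); // false: slot no longer empty
        System.out.println(tabAt(tab, 3));                    // first
    }
}
```

A losing CAS is exactly the case in `putVal()` where the thread falls through and re-reads the slot on the next loop iteration.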

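Assigning work ranges and tracking the number of participating threads during a resize (excerpts from transfer()):

```java
// Claim the next range of `stride` bins by CAS-ing transferIndex downward
else if (U.compareAndSwapInt
         (this, TRANSFERINDEX, nextIndex,
          nextBound = (nextIndex > stride ?
                       nextIndex - stride : 0))) {
    bound = nextBound;
    i = nextIndex - 1;
    advance = false;
}

// A negative sizeCtl means the table is being initialized (-1) or resized.
// The Javadoc describes the resize value as -(1 + number of active resizing
// threads); in the JDK 8 code it is (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2
// for the first resizer, plus 1 per helper. A finished thread decrements it.
if (U.compareAndSwapInt(this, SIZECTL, sc = sizeCtl, sc - 1)) {
    // Other resizers are still registered: just leave
    if ((sc - 2) != resizeStamp(n) << RESIZE_STAMP_SHIFT)
        return;
    // Last thread out: do a final sweep, then commit the new table
    finishing = advance = true;
    i = n;
}
```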
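To make the sizeCtl bookkeeping concrete, here is a sketch that follows the JDK 8 definition of `resizeStamp()` and the constants `RESIZE_STAMP_BITS = 16` and `RESIZE_STAMP_SHIFT = 16`. The class and the helper methods `startResize()`, `join()` and `isLastToLeave()` are hypothetical names for steps the JDK performs inline (in `addCount()`/`helpTransfer()` and in `transfer()`).

```java
// Hypothetical demo; constants and resizeStamp() follow JDK 8 ConcurrentHashMap.
public class ResizeStampDemo {
    static final int RESIZE_STAMP_BITS = 16;
    static final int RESIZE_STAMP_SHIFT = 32 - RESIZE_STAMP_BITS;

    // Encodes the table length (via its leading-zero count) plus a high bit
    // that makes the shifted value negative.
    static int resizeStamp(int n) {
        return Integer.numberOfLeadingZeros(n) | (1 << (RESIZE_STAMP_BITS - 1));
    }

    // sizeCtl written by the thread that starts the resize (one active resizer).
    static int startResize(int n) {
        return (resizeStamp(n) << RESIZE_STAMP_SHIFT) + 2;
    }

    // A helper thread registers by adding 1.
    static int join(int sc) {
        return sc + 1;
    }

    // The check in transfer(): sc is the value seen just before this thread's
    // own decrement; equality means no other resizer is still registered.
    static boolean isLastToLeave(int sc, int n) {
        return (sc - 2) == resizeStamp(n) << RESIZE_STAMP_SHIFT;
    }

    public static void main(String[] args) {
        int n = 16;
        int sc = startResize(n);
        System.out.println("negative while resizing: " + (sc < 0)); // true
        sc = join(sc); // a second thread helps
        System.out.println("first to finish is last? " + isLastToLeave(sc, n));  // false
        sc = sc - 1;   // the first thread deregisters
        System.out.println("second to finish is last? " + isLastToLeave(sc, n)); // true
    }
}
```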

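Locking the tree when a node is inserted into (or removed from) a tree bin: TreeBin keeps a small read-write lock in its lockState field. A writer acquires it with a CAS so that concurrent readers do not traverse the tree while it is being restructured.

```java
/**
 * Acquires write lock for tree restructuring.
 */
private final void lockRoot() {
    // Fast path: CAS lockState from 0 (no readers, no writer) to WRITER
    if (!U.compareAndSwapInt(this, LOCKSTATE, 0, WRITER))
        contendedLock(); // offload to separate method
}
```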
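The idea behind `lockState` can be sketched with an `AtomicInteger`. This is a simplified, hypothetical version. The bit values `WRITER = 1` and `READER = 4` follow JDK 8, but the real TreeBin also has a `WAITER` bit and parks a contended writer in `contendedLock()`, whereas this sketch just spins with `Thread.yield()`.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Simplified, hypothetical sketch of the TreeBin lock-state idea.
public class RootLockSketch {
    static final int WRITER = 1; // bit set while a writer holds the lock
    static final int READER = 4; // added once per active tree reader

    final AtomicInteger lockState = new AtomicInteger();

    // Succeeds only when there are no readers and no writer.
    boolean tryLockRoot() {
        return lockState.compareAndSet(0, WRITER);
    }

    // Unlike JDK 8, which sets WAITER and parks, this just spins.
    void lockRoot() {
        while (!tryLockRoot()) Thread.yield();
    }

    void unlockRoot() {
        lockState.set(0);
    }

    // Readers register only if no writer is active. Otherwise the caller
    // should fall back to a linear scan of the node list, as TreeBin.find() does.
    boolean tryEnterRead() {
        for (;;) {
            int s = lockState.get();
            if ((s & WRITER) != 0) return false;
            if (lockState.compareAndSet(s, s + READER)) return true;
        }
    }

    void exitRead() {
        lockState.addAndGet(-READER);
    }

    public static void main(String[] args) {
        RootLockSketch bin = new RootLockSketch();
        System.out.println(bin.tryEnterRead()); // true: no writer
        System.out.println(bin.tryLockRoot());  // false: a reader is active
        bin.exitRead();
        System.out.println(bin.tryLockRoot());  // true: lock state was 0
        System.out.println(bin.tryEnterRead()); // false: reader must scan the list instead
        bin.unlockRoot();
    }
}
```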

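2. synchronized

synchronized locks the bin's head node, that is, the node read from the table with tabAt() (a volatile read, not a CAS). Inside the lock, the code checks tabAt(tab, i) == f again, because the head may have been replaced (for example by a ForwardingNode during a resize) before the lock was acquired.

```java
f = tabAt(tab, i = (n - 1) & hash);
synchronized (f) {...}
```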
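The CAS-then-lock-then-recheck pattern can be shown on a hypothetical miniature hash set (`HeadLockDemo`, not part of the JDK), using `AtomicReferenceArray` in place of `Unsafe`. An empty slot is filled with a CAS. Otherwise the thread locks the head node and re-checks that it is still the head before appending.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical mini hash set illustrating per-bin head-node locking.
public class HeadLockDemo {
    static final class Node {
        final int key;
        volatile Node next;
        Node(int key) { this.key = key; }
    }

    final AtomicReferenceArray<Node> tab;

    HeadLockDemo(int n) { // n must be a power of two
        tab = new AtomicReferenceArray<>(n);
    }

    boolean add(int key) {
        int i = key & (tab.length() - 1);
        for (;;) {
            Node f = tab.get(i);
            if (f == null) {
                // Empty bin: no lock, just a CAS; on failure re-read the slot
                if (tab.compareAndSet(i, null, new Node(key))) return true;
                continue;
            }
            synchronized (f) {
                // The head may have changed before we got the lock: retry
                if (tab.get(i) != f) continue;
                for (Node e = f;; e = e.next) {
                    if (e.key == key) return false;                     // already present
                    if (e.next == null) { e.next = new Node(key); return true; }
                }
            }
        }
    }

    int size() {
        int count = 0;
        for (int i = 0; i < tab.length(); i++)
            for (Node e = tab.get(i); e != null; e = e.next) count++;
        return count;
    }

    public static void main(String[] args) throws InterruptedException {
        HeadLockDemo set = new HeadLockDemo(4);
        Thread[] workers = new Thread[4];
        for (int t = 0; t < workers.length; t++) {
            workers[t] = new Thread(() -> { for (int k = 0; k < 1000; k++) set.add(k); });
            workers[t].start();
        }
        for (Thread w : workers) w.join();
        System.out.println(set.size()); // 1000: each key added exactly once
    }
}
```

In this toy the head of a bin is never replaced, so the re-check never fails. In ConcurrentHashMap it matters because a resize or treeification can swap the head for a ForwardingNode or TreeBin.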