ELK Setup Notes

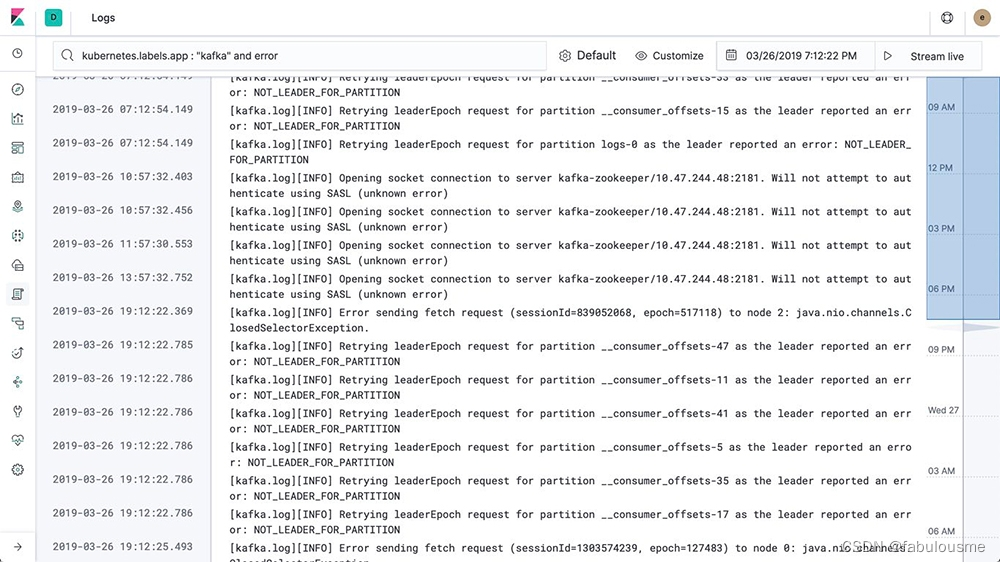

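When you manage many systems, or do operations work in a microservice architecture, you regularly need to check logs. The traditional way of working is tedious: you log in to each server and read each system's logs there, and once the logs grow large they are hard to search. ELK solves this problem.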

ELK is an acronym made of the initials of its three components: Elasticsearch, Logstash and Kibana. With ELK, logs can be collected and searched efficiently.

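- Elasticsearch is a high-performance full-text search engine

- Logstash is a pipeline that receives logs from the various systems and forwards them to ES according to configured rules

- Kibana provides a user interface for conveniently searching and working with the data in ES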

(The figure is from the official Elastic website.)

The rest of this note covers how to set up and use ELK.

1. Preparation

Environment

- Operating system: CentOS 7

- Docker

- docker-compose

Versions

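The ELK version used here is 8.6.1. Plugins and other add-ons must match the version you install, so pick the releases that correspond to your version.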

Pull the images:

```shell
docker pull docker.io/elasticsearch:8.6.1
docker pull docker.io/kibana:8.6.1
```

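Why no Logstash image? When I installed Logstash with Docker, plugins installed inside the container were not persisted and disappeared after the container restarted. So I installed Logstash directly on the host instead.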

2. Install ES and Kibana

Create the directories:

/home/elasticsearch/logs

/home/elasticsearch/data

/home/elasticsearch/plugins

/home/kibana/logs

/home/kibana/data

/home/elk/config

Download the IK analysis (word segmentation) plugin that matches your version from:

https://github.com/medcl/elasticsearch-analysis-ik/tree/v8.6.1

Unzip the plugin into /home/elk/config as the ik directory. Then create the configuration files kibana.yml and docker-compose.yml.

Directory layout:

- /home/elk
  - kibana.yml
  - docker-compose.yml
  - config
    - ik

docker-compose.yml

```yaml
version: '3'

services:
  elasticsearch:
    container_name: elasticsearch
    image: docker.io/elasticsearch:8.6.1
    volumes:
      - /home/elasticsearch/logs:/usr/share/elasticsearch/logs:rw
      - /home/elasticsearch/data:/usr/share/elasticsearch/data:rw
      - /home/elasticsearch/plugins:/usr/share/elasticsearch/plugins:rw
      - ./config/ik:/usr/share/elasticsearch/plugins/ik
    restart: always
    privileged: true
    ports:
      - "9200:9200"
      - "9300:9300"
    environment:
      cluster.name: elasticsearch
      discovery.type: single-node
      ES_JAVA_OPTS: "-Xms1g -Xmx1g"
    logging:
      driver: "json-file"
      options:
        max-size: "50m"
    networks:
      - stack
    ulimits:
      nofile:
        soft: 65535
        hard: 65535

  kibana:
    image: docker.io/kibana:8.6.1
    container_name: kibana
    volumes:
      - /home/kibana/logs:/usr/share/kibana/logs:rw
      - /home/kibana/data:/usr/share/kibana/data:rw
      - ./kibana.yml:/usr/share/kibana/config/kibana.yml
    ports: [ '5601:5601' ]
    privileged: true
    restart: always
    networks:
      - stack
    depends_on: [ 'elasticsearch' ]

networks:
  stack:
  redisnet:
    driver: bridge
```

kibana.yml

```yaml
server.host: "0.0.0.0"
server.shutdownTimeout: "5s"
elasticsearch.hosts: [ "http://elasticsearch:9200" ]
elasticsearch.username: "kibana_system"
elasticsearch.password: "password, to be set once ES has been installed"
```

Run `docker-compose up -d` in the /home/elk directory to start ES and Kibana.

After startup, reset the ES password and complete the initial Kibana setup.

Reset the ES password

Enter the ES container: `docker exec -it elasticsearch /bin/bash`

Reset the password of the elastic user: `./bin/elasticsearch-reset-password -u elastic`

```
This tool will reset the password of the [elastic] user to an autogenerated value.
The password will be printed in the console.
Please confirm that you would like to continue [y/N]y

Password for the [elastic] user successfully reset.
New value: ******
```

The new password is printed after `New value:`. Copy it and keep it somewhere safe.

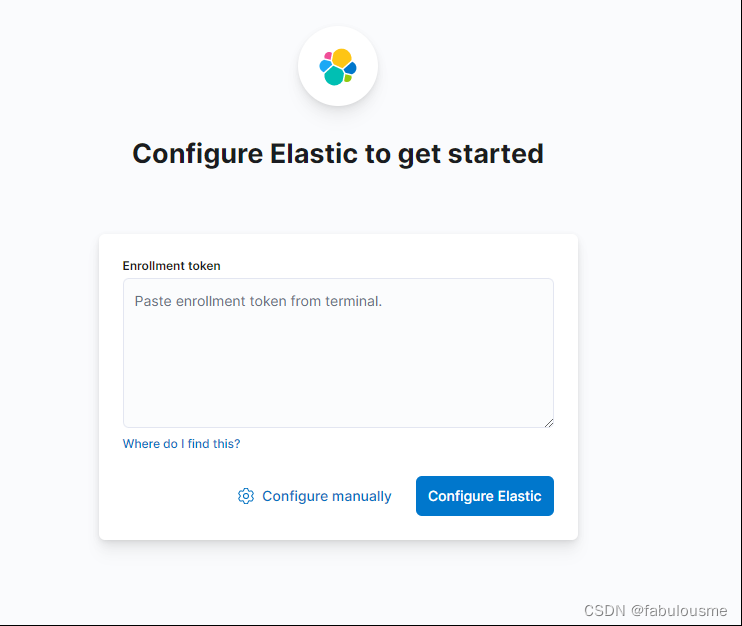

Open Kibana in a browser at http://192.168.49.136:5601/ (use your own host's IP). Kibana asks for an ES enrollment token.

To get the token, run this on the host: `docker exec -it elasticsearch /usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana`

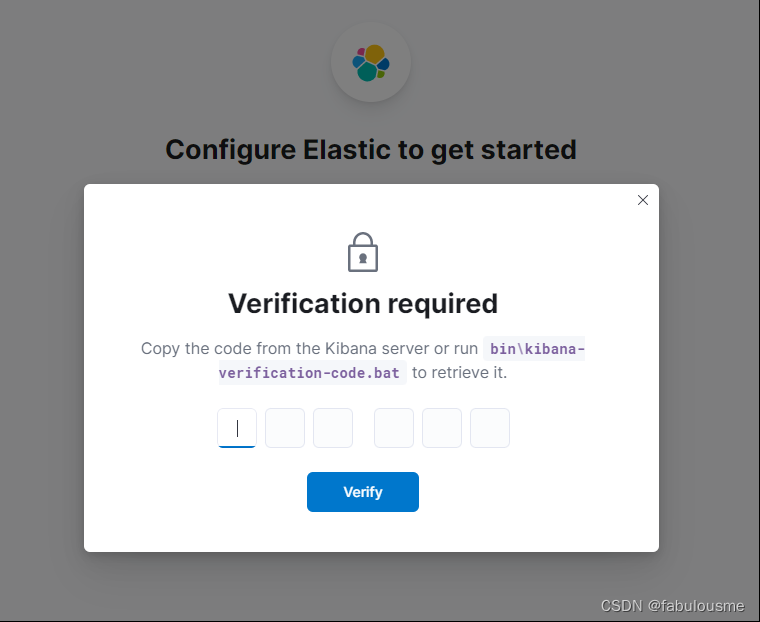

Paste the token into the Kibana page and submit it. Kibana then asks for a verification code.

Run `docker exec -it kibana bin/kibana-verification-code` to get the verification code.

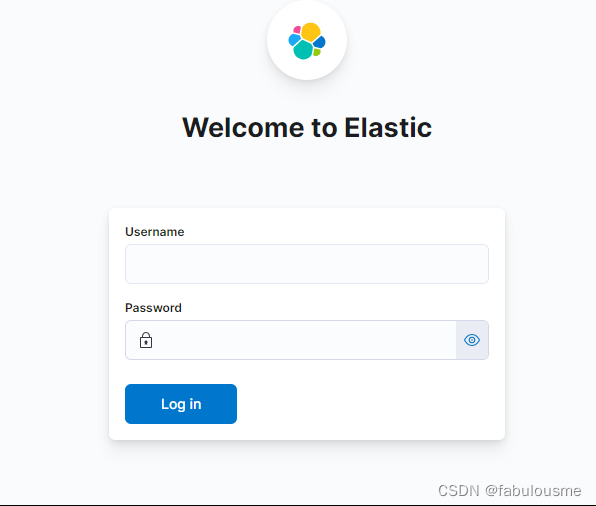

After you enter the verification code, the login page appears. Log in with user name elastic and the password you reset earlier.

Click [Explore on my own] to start using Kibana.
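You can also check the reset elastic password outside Kibana by calling the ES root endpoint with HTTP Basic authentication. The following is a minimal Java 11+ sketch. The class name `EsLoginCheck` is hypothetical and the URL is only an example. It assumes ES 8's default HTTPS setup and that the JVM already trusts the ES CA certificate, which is at /usr/share/elasticsearch/config/certs/http_ca.crt inside the container. The sketch does not disable certificate checks.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Hypothetical helper: verifies ES credentials by requesting the cluster root endpoint. */
final class EsLoginCheck {

    /** Builds the value of an HTTP Basic "Authorization" header. */
    static String basicAuthHeader(String user, String password) {
        String raw = user + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(raw.getBytes(StandardCharsets.UTF_8));
    }

    /** Builds a GET request for "/" on the given base URL, e.g. https://host:9200. */
    static HttpRequest rootRequest(String baseUrl, String user, String password) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/"))
                .header("Authorization", basicAuthHeader(user, password))
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Usage (example values): java EsLoginCheck.java https://192.168.49.136:9200 elastic <password>
        if (args.length != 3) {
            System.err.println("usage: EsLoginCheck <baseUrl> <user> <password>");
            return;
        }
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(rootRequest(args[0], args[1], args[2]), HttpResponse.BodyHandlers.ofString());
        // 200 means the credentials were accepted; 401 means they were rejected.
        System.out.println(response.statusCode());
        System.out.println(response.body());
    }
}
```

A 200 response whose body contains the cluster name means the password works. A 401 means it does not.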

3. Install Logstash

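I started with a Docker installation, but found that Logstash plugins were lost after the container restarted. The natural fix was to map the directories to the host, but even with the mapping the plugins were still lost. So I switched to installing Logstash on the host, directly with yum.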

Official documentation: https://www.elastic.co/guide/en/logstash/8.8/installing-logstash.html

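Import the signing key:

```shell
sudo rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch
```

Add the repository. Create /etc/yum.repos.d/logstash.repo (for example with `vim /etc/yum.repos.d/logstash.repo`) with the content below. The `[logstash-8.x]` section header on the first line is required, because a repo file without a section header is invalid.

```ini
[logstash-8.x]
name=Elastic repository for 8.x packages
baseurl=https://artifacts.elastic.co/packages/8.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
```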

Install Logstash

yum install logstash-8.6.1.x86_64

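Install the multiline plugin, which handles log entries that span several lines. With the yum package, logstash-plugin lives in /usr/share/logstash/bin:

```shell
/usr/share/logstash/bin/logstash-plugin install logstash-filter-multiline
# List the installed plugins
/usr/share/logstash/bin/logstash-plugin list
```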

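Edit the pipeline configuration file, referred to below as logstash.conf. With the yum package, Logstash loads the *.conf files in /etc/logstash/conf.d/ by default.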

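```
input {
  tcp {
    type => "tcp"
    mode => "server"
    port => 4560
  }
}

filter {
  # Drop Spring startup logs.
  # %-5level pads INFO to "INFO ", so two spaces precede the logger name; \s+ matches either way.
  if [message] =~ /INFO\s+org\.springframework/ {
    drop {}
  }

  # Merge multi-line entries with multiline. Without this, every line break inside an entry
  # (e.g. in a stack trace) is stored as a separate log entry, which makes the logs hard to read.
  # Lines that do NOT start with the literal "(master)" marker are appended to the previous event.
  # The parentheses are escaped: unescaped, "(master)" is a regex group and never matches "(".
  multiline {
    pattern => "^\(master\)"
    negate => true
    what => "previous"
  }

  # Remove some fields
  mutate {
    remove_field => ["type", "port"]
  }
}

output {
  stdout {
    codec => rubydebug
  }

  if "app_log_test" in [message] {
    elasticsearch {
      user => "username used by Logstash"
      password => "password used by Logstash"
      hosts => ["ip:port to ES"]
      action => "index"
      codec => rubydebug
      index => "log_master_test"
    }
  }

  if "app_log_prd" in [message] {
    elasticsearch {
      user => "username used by Logstash"
      password => "password used by Logstash"
      hosts => ["172.16.50.5:9200"]
      action => "index"
      codec => rubydebug
      index => "log_master_prd"
    }
  }
}
```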
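The filter and output logic can be sketched in plain Java to show how incoming lines become events and which index each event goes to. This is a simplified simulation with a hypothetical class name `PipelineSketch`, not Logstash's implementation. It drops Spring INFO lines. It then appends every line that does not start with the marker to the previous event, which is what `negate => true` plus `what => "previous"` does. Finally, it lists the indexes whose output condition matches.

The layout writes a literal `(master)`, so the start-of-event regex must escape the parentheses (`^\(master\)`). Likewise, `%-5level` renders INFO as `INFO ` with a trailing space, so the sketch matches `INFO\s+org\.springframework`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

/** Simplified simulation of the Logstash filter/output above (hypothetical, not Logstash code). */
final class PipelineSketch {

    // Literal "(master)" marker written by ElkStringPatternLayout; parentheses escaped.
    static final Pattern EVENT_START = Pattern.compile("^\\(master\\)");
    // "%-5level" renders INFO as "INFO " (padded), so allow one or more spaces.
    static final Pattern SPRING_INFO = Pattern.compile("INFO\\s+org\\.springframework");

    /** Applies the drop filter, then merges continuation lines into the previous event. */
    static List<String> toEvents(List<String> lines) {
        List<String> events = new ArrayList<>();
        for (String line : lines) {
            if (SPRING_INFO.matcher(line).find()) {
                continue; // drop {}
            }
            if (events.isEmpty() || EVENT_START.matcher(line).find()) {
                // A marker line starts a new event (a leading continuation line also does here, a simplification).
                events.add(line);
            } else {
                int last = events.size() - 1;
                events.set(last, events.get(last) + "\n" + line); // negate => true, what => "previous"
            }
        }
        return events;
    }

    /** Mirrors the two independent output conditionals; an empty list means stdout only. */
    static List<String> indicesFor(String event) {
        List<String> indices = new ArrayList<>();
        if (event.contains("app_log_test")) {
            indices.add("log_master_test");
        }
        if (event.contains("app_log_prd")) {
            indices.add("log_master_prd");
        }
        return indices;
    }

    public static void main(String[] args) {
        List<String> lines = List.of(
                "(master)app_log_prd [main] INFO  org.springframework.boot.SpringApplication - Started",
                "(master)app_log_prd [exec-1] ERROR com.example.OrderService - order failed",
                "java.lang.IllegalStateException: boom",
                "\tat com.example.OrderService.place(OrderService.java:42)");
        for (String event : toEvents(lines)) {
            System.out.println(indicesFor(event) + " <= " + event.replace("\n", " | "));
        }
    }
}
```

With the marker escaped, the stack trace lines end up inside the ERROR event instead of becoming separate documents.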

4. Send project logs to Logstash

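Sending logs to Logstash does not touch the application code; it only requires changes to log4j2-spring.xml. In my project every microservice has separate configuration files for prd, test and so on. One of them is shown here.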

The appenders section defines the three destinations the logs are written to:

- Console: Console

- File system: RollingFile

- Logstash: Socket

The loggers section then enables the appenders to use.

log4j2-spring-test.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<configuration monitorInterval="5">
    <!-- Variables -->
    <Properties>
        <!-- Output format: %date is the date, %thread the thread name, %-5level the level left-aligned
             and padded to 5 characters, %msg the log message, %n a line break -->
        <!-- %logger{36}: in Log4j2 an integer precision keeps the rightmost 36 name components, which in
             practice prints the full logger name (it is not a 36-character limit as in logback) -->
        <property name="LOG_PATTERN" value="%date{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n" />
        <property name="logstash_pattern" value="[%thread] %-5level %logger{36} - %msg%n" />
        <property name="FILE_PATH" value="/home/master_log/prd/app" />
    </Properties>

    <appenders>
        <console name="Console" target="SYSTEM_OUT">
            <!-- Output format -->
            <PatternLayout pattern="${LOG_PATTERN}"/>
            <!-- The console accepts only events at this level or above (onMatch ACCEPT) and rejects
                 everything else (onMismatch DENY). Change to debug while debugging -->
            <ThresholdFilter level="info" onMatch="ACCEPT" onMismatch="DENY"/>
        </console>

        <!-- Writes info and higher to master.log. The file rolls over daily or when it exceeds 10MB;
             rolled files are named master-yyyy-MM-dd_N.log, with the %i counter going up to 15 per day -->
        <RollingFile name="RollingFileInfo" fileName="${FILE_PATH}/master.log" filePattern="${FILE_PATH}/master-%d{yyyy-MM-dd}_%i.log">
            <!-- Accept only info and above (onMatch); reject everything else (onMismatch) -->
            <ThresholdFilter level="info" onMatch="ACCEPT" onMismatch="DENY"/>
            <PatternLayout pattern="${LOG_PATTERN}"/>
            <Policies>
                <TimeBasedTriggeringPolicy interval="1"/>
                <SizeBasedTriggeringPolicy size="10MB"/>
            </Policies>
            <DefaultRolloverStrategy max="15"/>
        </RollingFile>

        <!-- Replace LOGSTASH_IP and LOGSTASH_PORT with the Logstash host and its tcp input port -->
        <Socket name="logstash" host="LOGSTASH_IP" port="LOGSTASH_PORT" protocol="TCP">
            <ElkStringPatternLayout pattern="${logstash_pattern}" projectName="app_log_prd"/>
        </Socket>
    </appenders>

    <loggers>
        <root level="info">
            <appender-ref ref="Console"/>
            <appender-ref ref="RollingFileInfo"/>
            <appender-ref ref="logstash"/>
        </root>
    </loggers>
</configuration>
```
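Before you wire up an application, you can check the Logstash tcp input by hand. The following minimal Java 11+ sketch uses a hypothetical class `LogstashProbe`. Like the Socket appender, it opens a TCP connection and writes one newline-terminated line. The line carries the same `(master)` + project name prefix that ElkStringPatternLayout adds, simplified to a single line. Host and port are placeholders passed as arguments. The default 4560 is the port configured in the tcp input.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: sends one test line to the Logstash tcp input. */
final class LogstashProbe {

    /** Same prefix as ElkStringPatternLayout: "(master)" + projectName + " " + message, as one line. */
    static String formatLine(String projectName, String message) {
        return "(master)" + projectName + " " + message + "\n";
    }

    /** Opens a TCP connection, writes the line as UTF-8, then closes the connection. */
    static void send(String host, int port, String line) throws IOException {
        try (Socket socket = new Socket(host, port);
             OutputStream out = socket.getOutputStream()) {
            out.write(line.getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) throws IOException {
        // Placeholders: pass the Logstash host and port as arguments.
        String host = args.length > 0 ? args[0] : "127.0.0.1";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 4560;
        send(host, port, formatLine("app_log_test", "[main] INFO  com.example.Probe - hello from probe"));
    }
}
```

With the stdout output enabled, the event should appear in Logstash's rubydebug output. Because it contains app_log_test, it should also be sent to log_master_test.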

Create the indexes log_master_test and log_master_prd in ES.
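You can create the indexes in Kibana's Dev Tools console (`PUT log_master_test`) or over the REST API. The following minimal Java 11+ sketch shows the REST route. The class name `CreateIndexes`, the URL and the credentials are placeholders. It sends `PUT /<index>` with no body, so the indexes get default settings and mappings. If ES uses HTTPS, the JVM must trust the ES CA certificate.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.List;

/** Hypothetical helper: creates the two log indexes with "PUT /<index>". */
final class CreateIndexes {

    /** Builds an authenticated PUT request without a body for the given index. */
    static HttpRequest createIndexRequest(String baseUrl, String index, String user, String password) {
        String token = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(URI.create(baseUrl + "/" + index))
                .header("Authorization", "Basic " + token)
                .PUT(HttpRequest.BodyPublishers.noBody())
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Usage (placeholders): java CreateIndexes.java https://<es-host>:9200 elastic <password>
        if (args.length != 3) {
            System.err.println("usage: CreateIndexes <baseUrl> <user> <password>");
            return;
        }
        HttpClient client = HttpClient.newHttpClient();
        for (String index : List.of("log_master_test", "log_master_prd")) {
            HttpResponse<String> response = client.send(
                    createIndexRequest(args[0], index, args[1], args[2]),
                    HttpResponse.BodyHandlers.ofString());
            System.out.println(index + " -> " + response.statusCode() + " " + response.body());
        }
    }
}
```

ES answers 200 when an index is created and 400 with resource_already_exists_exception when it already exists, so running the sketch twice is harmless.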

With that, project logs flow through Logstash into ES and can be searched in Kibana. A few refinements remain:

- Exception logs

Exceptions are often handled like this:

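```java
try {
    // ...
} catch (Exception e) {
    // The logging system cannot see this: the stack trace goes straight to stderr, bypassing the appenders
    e.printStackTrace();
}
```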

Change it to:

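```java
// Passing the exception as the last argument lets the layout write the full stack trace
logger.error("An error occurred:", e);
```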

- Multi-line entries and error output

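The multiline filter in logstash.conf above relies on a (master) marker at the start of each log entry to tell where a new entry begins. To add that marker to every entry, add a custom Log4j2 layout plugin, ElkStringPatternLayout, to the project: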

```java
import java.nio.charset.Charset;

import org.apache.logging.log4j.core.Layout;
import org.apache.logging.log4j.core.LogEvent;
import org.apache.logging.log4j.core.config.Configuration;
import org.apache.logging.log4j.core.config.Node;
import org.apache.logging.log4j.core.config.plugins.Plugin;
import org.apache.logging.log4j.core.config.plugins.PluginAttribute;
import org.apache.logging.log4j.core.config.plugins.PluginConfiguration;
import org.apache.logging.log4j.core.config.plugins.PluginElement;
import org.apache.logging.log4j.core.config.plugins.PluginFactory;
import org.apache.logging.log4j.core.layout.AbstractStringLayout;
import org.apache.logging.log4j.core.layout.PatternLayout;
import org.apache.logging.log4j.core.layout.PatternSelector;
import org.apache.logging.log4j.core.pattern.RegexReplacement;

/**
 * ELK log layout.
 * The original version produced JSON, but Logstash failed to parse it, so this version produces a plain string.
 */
@Plugin(name = "ElkStringPatternLayout", category = Node.CATEGORY, elementType = Layout.ELEMENT_TYPE, printObject = true)
public class ElkStringPatternLayout extends AbstractStringLayout {

    /** Inner PatternLayout that renders each event with the configured pattern */
    private final PatternLayout patternLayout;

    /** Project name written after the (master) marker, e.g. app_log_prd */
    private final String projectName;

    private ElkStringPatternLayout(Configuration config, RegexReplacement replace, String eventPattern,
                                   PatternSelector patternSelector, Charset charset, boolean alwaysWriteExceptions,
                                   boolean noConsoleNoAnsi, String headerPattern, String footerPattern, String projectName) {
        super(config, charset,
                PatternLayout.createSerializer(config, replace, headerPattern, null, patternSelector, alwaysWriteExceptions, noConsoleNoAnsi),
                PatternLayout.createSerializer(config, replace, footerPattern, null, patternSelector, alwaysWriteExceptions, noConsoleNoAnsi));
        this.projectName = projectName;
        this.patternLayout = PatternLayout.newBuilder()
                .withPattern(eventPattern)
                .withPatternSelector(patternSelector)
                .withConfiguration(config)
                .withRegexReplacement(replace)
                .withCharset(charset)
                .withAlwaysWriteExceptions(alwaysWriteExceptions)
                .withNoConsoleNoAnsi(noConsoleNoAnsi)
                .withHeader(headerPattern)
                .withFooter(footerPattern)
                .build();
    }

    @Override
    public String toSerializable(LogEvent event) {
        // Render the event with the inner pattern, then prepend the (master) marker and the project name
        String message = patternLayout.toSerializable(event);
        String line = new StringLoggerInfo(projectName, message).toString();
        return line + "\n";
    }

    @PluginFactory
    public static ElkStringPatternLayout createLayout(
            @PluginAttribute(value = "pattern", defaultString = PatternLayout.DEFAULT_CONVERSION_PATTERN) final String pattern,
            @PluginElement("PatternSelector") final PatternSelector patternSelector,
            @PluginConfiguration final Configuration config,
            @PluginElement("Replace") final RegexReplacement replace,
            @PluginAttribute(value = "charset") final Charset charset,
            @PluginAttribute(value = "alwaysWriteExceptions", defaultBoolean = true) final boolean alwaysWriteExceptions,
            @PluginAttribute(value = "noConsoleNoAnsi", defaultBoolean = false) final boolean noConsoleNoAnsi,
            @PluginAttribute("header") final String headerPattern,
            @PluginAttribute("footer") final String footerPattern,
            @PluginAttribute("projectName") final String projectName) {
        return new ElkStringPatternLayout(config, replace, pattern, patternSelector, charset, alwaysWriteExceptions,
                noConsoleNoAnsi, headerPattern, footerPattern, projectName);
    }

    /**
     * Log content that is sent to Logstash.
     */
    public static class StringLoggerInfo {

        /** Project name */
        private final String projectName;

        /** Log message */
        private final String message;

        public StringLoggerInfo(String projectName, String message) {
            this.projectName = projectName;
            this.message = message;
        }

        public String getProjectName() {
            return projectName;
        }

        public String getMessage() {
            return message;
        }

        @Override
        public String toString() {
            // Literal "(master)" marker that Logstash's multiline filter uses to find the start of an entry
            return "(master)" + projectName + " " + message;
        }
    }
}
```

5. Important notes

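When using Logstash, keep enough free disk space on the server where ES runs. If the disk fills up, ES stops accepting writes and the applications can hang.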
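To keep an eye on this, a small Java 11+ sketch (hypothetical class `DiskWatermarkCheck`) compares the disk usage of the ES data directory with Elasticsearch's default disk watermarks: low 85%, high 90%, flood stage 95%. At flood stage, ES puts a read-only block (index.blocks.read_only_allow_delete) on indices that have shards on that node, so writes from Logstash fail. The default path is the host directory mapped in docker-compose.yml. Recent 8.x releases also apply max-headroom limits on large disks, and this sketch ignores them.

```java
import java.io.IOException;
import java.nio.file.FileStore;
import java.nio.file.Files;
import java.nio.file.Path;

/** Hypothetical helper: classifies disk usage against the default ES watermark percentages. */
final class DiskWatermarkCheck {

    /** Maps a used fraction (0.0 to 1.0) to the watermark level it has reached. */
    static String level(double usedFraction) {
        if (usedFraction >= 0.95) return "FLOOD_STAGE"; // ES blocks writes to indices on this node
        if (usedFraction >= 0.90) return "HIGH";        // ES tries to move shards off this node
        if (usedFraction >= 0.85) return "LOW";         // ES stops allocating most new shards here
        return "OK";
    }

    /** Fraction of the file store holding {@code path} that is not usable by this JVM. */
    static double usedFraction(Path path) throws IOException {
        FileStore store = Files.getFileStore(path);
        long total = store.getTotalSpace();
        long usable = store.getUsableSpace();
        return total == 0 ? 0.0 : (double) (total - usable) / total;
    }

    public static void main(String[] args) throws IOException {
        Path data = Path.of(args.length > 0 ? args[0] : "/home/elasticsearch/data");
        double used = usedFraction(data);
        System.out.printf("%s used=%.1f%% level=%s%n", data, used * 100, level(used));
    }
}
```

Run it from cron or a monitoring job and alert on anything other than OK, before Logstash writes start failing.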