Framework Overview

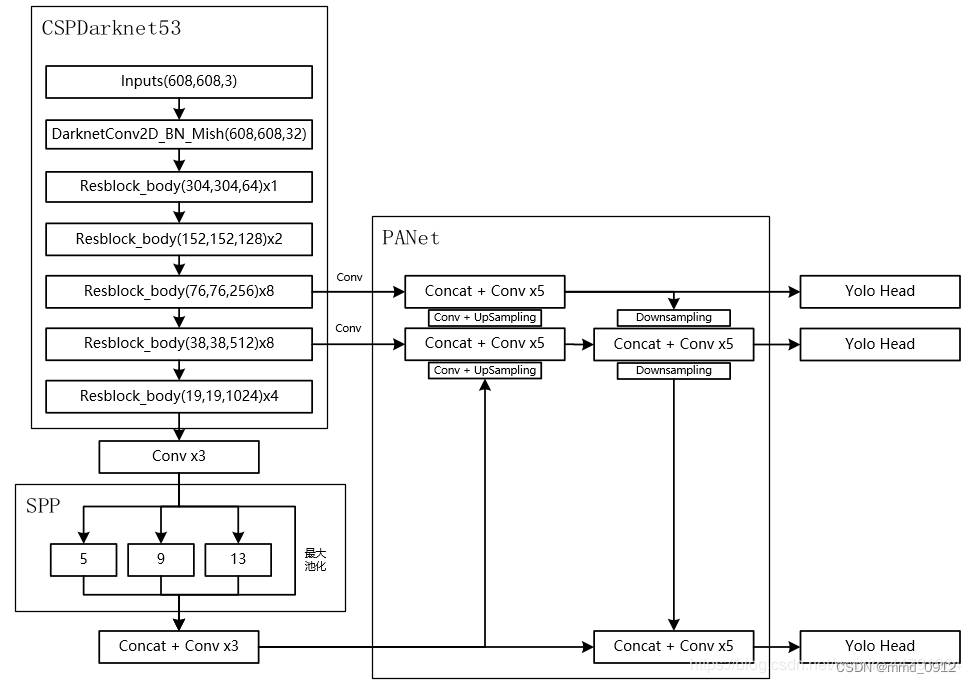

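YOLOv4 is an object detection algorithm that combines high accuracy with high speed. It builds on YOLOv3: the backbone is CSPDarknet53, a CSP (Cross Stage Partial) variant of YOLOv3's 53-layer Darknet-53 convolutional network, and it extracts image features. An SPP block and a PANet path-aggregation neck then fuse features across scales, and the YOLOv3 multi-scale detection head makes predictions at three resolutions.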

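The YOLOv4 backbone is CSPDarknet53, which adds CSP (Cross Stage Partial) connections to Darknet-53. At each stage the feature map is split into two branches: one passes through the stage's convolutional (residual) blocks, the other bypasses them, and the two are then concatenated. This structure reduces computation and parameter count while preserving detection accuracy. The lighter YOLOv4-tiny model uses a reduced variant of this backbone, often called CSPDarknet53-tiny.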
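The CSP wiring can be illustrated with a minimal NumPy sketch. It loosely mirrors the first stage of the configuration listed further down, where two 1x1 convolutions read the same input, one branch goes through a residual block, and the results are concatenated. The helper names `conv1x1` and `csp_stage` and the random weights are assumptions made for illustration, not darknet code; batch normalization, activations, and the 3x3 convolution are left out so that only the data flow remains.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, out_channels):
    """1x1 convolution as a per-pixel linear map over channels (random weights)."""
    in_channels = x.shape[-1]
    w = rng.standard_normal((in_channels, out_channels)) * 0.01
    return x @ w

def csp_stage(x, branch_channels):
    """Cross Stage Partial wiring: bypass branch + processed branch, then concat."""
    bypass = conv1x1(x, branch_channels)          # branch that skips the residual block
    main = conv1x1(x, branch_channels)            # branch that is processed further
    residual = conv1x1(conv1x1(main, branch_channels // 2), branch_channels)
    main = main + residual                        # shortcut (element-wise add)
    main = conv1x1(main, branch_channels)
    merged = np.concatenate([main, bypass], axis=-1)  # channel concatenation
    return conv1x1(merged, branch_channels)       # transition layer after the merge

x = np.zeros((8, 8, 64))      # H x W x C feature map
y = csp_stage(x, 64)
print(y.shape)
```

Only the main branch pays for the residual block, which is where the savings in computation come from.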

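In addition, YOLOv4 places an SPP (Spatial Pyramid Pooling) block after the backbone to enlarge the receptive field, and uses PAN (Path Aggregation Network) for its feature pyramid, which adds a bottom-up path to the FPN-style top-down path. This is not the BiFPN (Bi-directional Feature Pyramid Network) used by EfficientDet. The YOLOv4 paper also describes a modified SAM (Spatial Attention Module), although the configuration listed below contains no SAM layer. Overall, YOLOv4 is an improved version of YOLOv3.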

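Network Comparison

Network structure: YOLOv4 uses a deeper network than YOLOv3, with more convolutional layers and residual connections, and introduces new modules such as CSPDarknet53, SPP, and PANet to strengthen feature extraction and perception.

Backbone: YOLOv4 uses CSPDarknet53 as its backbone. Compared with YOLOv3's Darknet53, CSPDarknet53 offers higher accuracy and better computational efficiency.

Feature pyramid network (FPN): YOLOv4 uses the PANet module in its feature pyramid, which effectively fuses feature maps of different scales and resolutions and improves detection performance.

Data augmentation: YOLOv4 introduces new data augmentation strategies such as Mosaic and CutMix to improve the model's generalization and robustness.

Bounding-box prediction: YOLOv4 uses the CIoU (Complete IoU) loss to optimize bounding-box regression, which improves localization accuracy. CIoU extends IoU with penalty terms for the distance between box centers and for aspect-ratio mismatch.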
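As a rough illustration of the Mosaic idea, the sketch below tiles four images into the four quadrants around a chosen center point. It is a simplification under stated assumptions: the function name `mosaic` is hypothetical, each source image is simply cropped from its top-left corner, and the remapping of bounding-box labels that a real training pipeline needs is omitted.

```python
import numpy as np

def mosaic(images, out_size, center):
    """Tile four H x W x 3 images into the quadrants around `center` = (cx, cy)."""
    cx, cy = center
    canvas = np.zeros((out_size, out_size, 3), dtype=images[0].dtype)
    # (y0, y1, x0, x1) of each quadrant: top-left, top-right, bottom-left, bottom-right
    regions = [(0, cy, 0, cx), (0, cy, cx, out_size),
               (cy, out_size, 0, cx), (cy, out_size, cx, out_size)]
    for img, (y0, y1, x0, x1) in zip(images, regions):
        h, w = y1 - y0, x1 - x0
        canvas[y0:y1, x0:x1] = img[:h, :w]   # crop the top-left corner of the source
    return canvas

# Four constant-valued "images" make the quadrant layout easy to inspect.
tiles = [np.full((8, 8, 3), k, dtype=np.uint8) for k in (1, 2, 3, 4)]
combined = mosaic(tiles, out_size=8, center=(3, 5))
```

In practice the center point would be drawn at random for every training sample, so that objects appear at varying scales and in varying contexts.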
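The CIoU term can be written out in a few lines. This is a minimal sketch of the published formula, CIoU = IoU - ρ²/c² - αv, for two boxes in (center x, center y, width, height) form; the function name `ciou` is an assumption, and the code is not taken from darknet. The loss that is minimized is 1 - CIoU.

```python
import math

def ciou(box_a, box_b):
    """Complete IoU of two boxes given as (cx, cy, w, h) with positive w and h."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    a_x1, a_y1, a_x2, a_y2 = ax - aw / 2, ay - ah / 2, ax + aw / 2, ay + ah / 2
    b_x1, b_y1, b_x2, b_y2 = bx - bw / 2, by - bh / 2, bx + bw / 2, by + bh / 2

    # Plain IoU
    inter_w = max(0.0, min(a_x2, b_x2) - max(a_x1, b_x1))
    inter_h = max(0.0, min(a_y2, b_y2) - max(a_y1, b_y1))
    inter = inter_w * inter_h
    iou = inter / (aw * ah + bw * bh - inter)

    # Squared center distance over squared diagonal of the smallest enclosing box
    enc_w = max(a_x2, b_x2) - min(a_x1, b_x1)
    enc_h = max(a_y2, b_y2) - min(a_y1, b_y1)
    rho2 = (ax - bx) ** 2 + (ay - by) ** 2
    c2 = enc_w ** 2 + enc_h ** 2

    # Aspect-ratio consistency term and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(bw / bh) - math.atan(aw / ah)) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0
    return iou - rho2 / c2 - alpha * v

loss_identical = 1 - ciou((0, 0, 2, 2), (0, 0, 2, 2))
loss_disjoint = 1 - ciou((0, 0, 2, 2), (10, 0, 2, 2))
```

Unlike plain IoU, the value keeps changing for boxes that do not overlap, because the center-distance term still provides a gradient.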

Parameter Walkthrough

Key YOLOv4 parameters:

[net]

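batch=64 # The images are split into all_num/batch batches; the weights are updated once per batch (64 samples). Try to make sure every class is represented in each batch

subdivisions=64 # Each batch is split into this many chunks; batch/subdivisions images are sent to the GPU at a time (here 64/64 = 1)

width=608 # Width of the network input image

height=608 # Height of the network input image

channels=3 # Number of channels of the network input image

momentum=0.949 # Momentum coefficient: the fraction of the previous update carried into the current one, which smooths and speeds up gradient descent

decay=0.0005 # Weight-decay regularization term, guards against overfitting

angle=0 # Random rotation angle for augmentation

saturation = 1.5 # Saturation jitter for augmentation

exposure = 1.5 # Exposure jitter for augmentation

hue=.1 # Hue jitter for augmentation

learning_rate=0.0013 # Learning rate, controls how fast the weights are updated

burn_in=1000 # Number of warm-up iterations during which the learning rate ramps up

max_batches = 500500 # Maximum number of training iterations (batches)

policy=steps # Learning-rate schedule policy

steps=400000,450000 # Iterations at which the learning rate is rescaled

scales=.1,.1 # Factor applied to the learning rate at each step

#cutmix=1 # CutMix data augmentation (commented out here)

mosaic=0 # Mosaic data augmentation (disabled when 0)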

#:104x104 54:52x52 85:26x26 104:13x13 for 416

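[convolutional] # 32 filters of size 3*3*3, stride 1, padding 1, followed by mish activation

batch_normalize=1 # Whether to apply batch normalization (BN)

filters=32 # Number of output feature maps

size=3 # Kernel size

stride=1 # Convolution stride

pad=1 # Whether to pad (when 1, darknet pads by size/2)

activation=mish # Activation function type # (608 + 2 * 1 - 3) / 1 + 1 = 608 # 608 * 608 * 32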

# Downsample

[convolutional] # 64 filters of size 3*3*32, stride 2, padding 1, followed by mish activation

batch_normalize=1

filters=64

size=3

stride=2

pad=1

activation=mish # floor((608 + 2 * 1 - 3) / 2) + 1 = 304 # 304 * 304 * 64

[convolutional] # 64 filters of size 1*1*64, stride 1, padding 0 (pad=1 gives size/2 = 0 for a 1*1 kernel), followed by mish activation

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish # (304 + 2 * 0 - 1) / 1 + 1 = 304 # 304 * 304 * 64

[route]

layers = -2 # With a single value, route outputs the feature map of the layer at that index (here, two layers back) # 304 * 304 * 64

[convolutional] # 64 filters of size 1*1*64, stride 1, padding 0, followed by mish activation

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish # (304 + 2 * 0 - 1) / 1 + 1 = 304 # 304 * 304 * 64

[convolutional] # 32 filters of size 1*1*64, stride 1, padding 0, followed by mish activation

batch_normalize=1

filters=32

size=1

stride=1

pad=1

activation=mish # (304 + 2 * 0 - 1) / 1 + 1 = 304 # 304 * 304 * 32

[convolutional] # 64 filters of size 3*3*32, stride 1, padding 1, followed by mish activation

batch_normalize=1

filters=64

size=3

stride=1

pad=1

activation=mish # (304 + 2 * 1 -3) / 1 + 1 = 304 # 304 * 304 * 64

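[shortcut] # A shortcut is a skip connection similar to the one in ResNet

from=-3 # from is -3: the shortcut output is the element-wise sum of the previous layer's output and the output of the layer three positions back, i.e. an add operation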

activation=linear # 304 * 304 * 64

[convolutional] # 64 filters of size 1*1*64, stride 1, padding 0, followed by mish activation

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish # (304 + 2 * 0 - 1) / 1 + 1 = 304 # 304 * 304 * 64

[route] # 304 * 304 * 128

layers = -1,-7 # With two values, route concatenates along the channel axis the previous layer's output and the output of the layer seven positions back; more than two values work the same way

[convolutional] # 64 filters of size 1*1*128, stride 1, padding 0, followed by mish activation

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish # (304 + 2 * 0 - 1) / 1 + 1 = 304 # 304 * 304 * 64

# Downsample

[convolutional] # 128 filters of size 3*3*64, stride 2, padding 1, followed by mish activation

batch_normalize=1

filters=128

size=3

stride=2

pad=1

activation=mish # floor((304 + 2 * 1 - 3) / 2) + 1 = 152 # 152 * 152 * 128

[convolutional]

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish

[route]

layers = -2

[convolutional]

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=64

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=64

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=mish

[route]

layers = -1,-10

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

# Downsample

[convolutional]

batch_normalize=1

filters=256

size=3

stride=2

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[route]

layers = -2

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=mish

[route]

layers = -1,-28

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

# Downsample

[convolutional]

batch_normalize=1

filters=512

size=3

stride=2

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[route]

layers = -2

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=mish

[route]

layers = -1,-28

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=mish

# Downsample

[convolutional]

batch_normalize=1

filters=1024

size=3

stride=2

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=mish

[route]

layers = -2

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=512

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=512

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=512

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=mish

[convolutional]

batch_normalize=1

filters=512

size=3

stride=1

pad=1

activation=mish

[shortcut]

from=-3

activation=linear

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=mish

[route]

layers = -1,-16

[convolutional]

batch_normalize=1

filters=1024

size=1

stride=1

pad=1

activation=mish

##########################

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=1024

activation=leaky

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=leaky

### SPP ###

[maxpool]

stride=1

size=5

[route]

layers=-2

[maxpool]

stride=1

size=9

[route]

layers=-4

[maxpool]

stride=1

size=13

[route]

layers=-1,-3,-5,-6

### End SPP ### Each maxpool pads by size/2, so the spatial size is unchanged

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=1024

activation=leaky

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[upsample]

stride=2

[route]

layers = 85

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[route]

layers = -1, -3

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=512

activation=leaky

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=512

activation=leaky

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=leaky

[upsample]

stride=2

[route]

layers = 54

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=leaky

[route]

layers = -1, -3

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=256

activation=leaky

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=256

activation=leaky

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=leaky

##########################

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=256

activation=leaky

[convolutional]

size=1

stride=1

pad=1

filters=33

activation=linear

[yolo]

mask = 0,1,2 # Indices of the anchors used by this detection layer

anchors = 23, 40, 25, 79, 44, 77, 30,124, 54,121, 42,197, 87,112, 76,193, 126,255

classes=6

num=9

jitter=.3

ignore_thresh = .7

truth_thresh = 1

scale_x_y = 1.2

iou_thresh=0.213

cls_normalizer=1.0

iou_normalizer=0.07

iou_loss=ciou

nms_kind=greedynms

beta_nms=0.6

max_delta=5

[route]

layers = -4

[convolutional]

batch_normalize=1

size=3

stride=2

pad=1

filters=256

activation=leaky

[route]

layers = -1, -16

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=512

activation=leaky

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=512

activation=leaky

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=512

activation=leaky

[convolutional]

size=1

stride=1

pad=1

filters=33

activation=linear

[yolo]

mask = 3,4,5

#anchors = 12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401

anchors = 23, 40, 25, 79, 44, 77, 30,124, 54,121, 42,197, 87,112, 76,193, 126,255

classes=6

num=9

jitter=.3

ignore_thresh = .7

truth_thresh = 1

scale_x_y = 1.1

iou_thresh=0.213

cls_normalizer=1.0

iou_normalizer=0.07

iou_loss=ciou

nms_kind=greedynms

beta_nms=0.6

max_delta=5

[route]

layers = -4

[convolutional]

batch_normalize=1

size=3

stride=2

pad=1

filters=512

activation=leaky

[route]

layers = -1, -37

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=1024

activation=leaky

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=1024

activation=leaky

[convolutional]

batch_normalize=1

filters=512

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

size=3

stride=1

pad=1

filters=1024

activation=leaky

[convolutional]

size=1

stride=1

pad=1

filters=33

activation=linear

[yolo]

mask = 6,7,8

#anchors = 12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401

anchors = 23, 40, 25, 79, 44, 77, 30,124, 54,121, 42,197, 87,112, 76,193, 126,255

classes=6

num=9

jitter=.3

ignore_thresh = .7

truth_thresh = 1

random=0

scale_x_y = 1.05

iou_thresh=0.213

cls_normalizer=1.0

iou_normalizer=0.07

iou_loss=ciou

nms_kind=greedynms

beta_nms=0.6

max_delta=5
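The learning-rate settings in the [net] section (learning_rate, burn_in, policy=steps, steps, scales) can be read as a small function of the iteration number. The sketch below is an approximation under stated assumptions: the warm-up is taken to be polynomial with exponent 4, which is darknet's default `power` as far as I know, and the function name `learning_rate` is illustrative. The last lines also work out how many images reach the GPU per forward pass for batch=64 and subdivisions=64.

```python
def learning_rate(iteration, base_lr=0.0013, burn_in=1000, power=4.0,
                  steps=(400000, 450000), scales=(0.1, 0.1)):
    """Learning rate at a given iteration for policy=steps with burn-in warm-up."""
    if iteration < burn_in:
        # Warm-up: ramp from 0 to base_lr (exponent 4 is an assumed default)
        return base_lr * (iteration / burn_in) ** power
    lr = base_lr
    for step, scale in zip(steps, scales):
        if iteration >= step:      # each step that has been reached rescales the rate
            lr *= scale
    return lr

batch, subdivisions = 64, 64
images_per_forward_pass = batch // subdivisions   # 64 / 64 = 1

for it in (500, 1000, 400000, 450000):
    print(it, learning_rate(it))
```

With these values the rate stays at 0.0013 for most of training and is divided by 10 at iterations 400000 and 450000.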

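YOLOv4 training log (the detection convolutions in this log have 51 filters, which corresponds to 12 classes, whereas the configuration above sets filters=33 for classes=6):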

layer filters size/strd(dil) input output

0 conv 32 3 x 3/ 1 608 x 608 x 3 -> 608 x 608 x 32 0.639 BF

1 conv 64 3 x 3/ 2 608 x 608 x 32 -> 304 x 304 x 64 3.407 BF

2 conv 64 1 x 1/ 1 304 x 304 x 64 -> 304 x 304 x 64 0.757 BF

3 route 1 -> 304 x 304 x 64

4 conv 64 1 x 1/ 1 304 x 304 x 64 -> 304 x 304 x 64 0.757 BF

5 conv 32 1 x 1/ 1 304 x 304 x 64 -> 304 x 304 x 32 0.379 BF

6 conv 64 3 x 3/ 1 304 x 304 x 32 -> 304 x 304 x 64 3.407 BF

7 Shortcut Layer: 4, wt = 0, wn = 0, outputs: 304 x 304 x 64 0.006 BF

8 conv 64 1 x 1/ 1 304 x 304 x 64 -> 304 x 304 x 64 0.757 BF

9 route 8 2 -> 304 x 304 x 128

10 conv 64 1 x 1/ 1 304 x 304 x 128 -> 304 x 304 x 64 1.514 BF

11 conv 128 3 x 3/ 2 304 x 304 x 64 -> 152 x 152 x 128 3.407 BF

12 conv 64 1 x 1/ 1 152 x 152 x 128 -> 152 x 152 x 64 0.379 BF

13 route 11 -> 152 x 152 x 128

14 conv 64 1 x 1/ 1 152 x 152 x 128 -> 152 x 152 x 64 0.379 BF

15 conv 64 1 x 1/ 1 152 x 152 x 64 -> 152 x 152 x 64 0.189 BF

16 conv 64 3 x 3/ 1 152 x 152 x 64 -> 152 x 152 x 64 1.703 BF

17 Shortcut Layer: 14, wt = 0, wn = 0, outputs: 152 x 152 x 64 0.001 BF

18 conv 64 1 x 1/ 1 152 x 152 x 64 -> 152 x 152 x 64 0.189 BF

19 conv 64 3 x 3/ 1 152 x 152 x 64 -> 152 x 152 x 64 1.703 BF

20 Shortcut Layer: 17, wt = 0, wn = 0, outputs: 152 x 152 x 64 0.001 BF

21 conv 64 1 x 1/ 1 152 x 152 x 64 -> 152 x 152 x 64 0.189 BF

22 route 21 12 -> 152 x 152 x 128

23 conv 128 1 x 1/ 1 152 x 152 x 128 -> 152 x 152 x 128 0.757 BF

24 conv 256 3 x 3/ 2 152 x 152 x 128 -> 76 x 76 x 256 3.407 BF

25 conv 128 1 x 1/ 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BF

26 route 24 -> 76 x 76 x 256

27 conv 128 1 x 1/ 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BF

28 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

29 conv 128 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 128 1.703 BF

30 Shortcut Layer: 27, wt = 0, wn = 0, outputs: 76 x 76 x 128 0.001 BF

31 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

32 conv 128 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 128 1.703 BF

33 Shortcut Layer: 30, wt = 0, wn = 0, outputs: 76 x 76 x 128 0.001 BF

34 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

35 conv 128 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 128 1.703 BF

36 Shortcut Layer: 33, wt = 0, wn = 0, outputs: 76 x 76 x 128 0.001 BF

37 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

38 conv 128 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 128 1.703 BF

39 Shortcut Layer: 36, wt = 0, wn = 0, outputs: 76 x 76 x 128 0.001 BF

40 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

41 conv 128 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 128 1.703 BF

42 Shortcut Layer: 39, wt = 0, wn = 0, outputs: 76 x 76 x 128 0.001 BF

43 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

44 conv 128 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 128 1.703 BF

45 Shortcut Layer: 42, wt = 0, wn = 0, outputs: 76 x 76 x 128 0.001 BF

46 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

47 conv 128 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 128 1.703 BF

48 Shortcut Layer: 45, wt = 0, wn = 0, outputs: 76 x 76 x 128 0.001 BF

49 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

50 conv 128 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 128 1.703 BF

51 Shortcut Layer: 48, wt = 0, wn = 0, outputs: 76 x 76 x 128 0.001 BF

52 conv 128 1 x 1/ 1 76 x 76 x 128 -> 76 x 76 x 128 0.189 BF

53 route 52 25 -> 76 x 76 x 256

54 conv 256 1 x 1/ 1 76 x 76 x 256 -> 76 x 76 x 256 0.757 BF

55 conv 512 3 x 3/ 2 76 x 76 x 256 -> 38 x 38 x 512 3.407 BF

56 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

57 route 55 -> 38 x 38 x 512

58 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

59 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

60 conv 256 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 256 1.703 BF

61 Shortcut Layer: 58, wt = 0, wn = 0, outputs: 38 x 38 x 256 0.000 BF

62 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

63 conv 256 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 256 1.703 BF

64 Shortcut Layer: 61, wt = 0, wn = 0, outputs: 38 x 38 x 256 0.000 BF

65 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

66 conv 256 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 256 1.703 BF

67 Shortcut Layer: 64, wt = 0, wn = 0, outputs: 38 x 38 x 256 0.000 BF

68 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

69 conv 256 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 256 1.703 BF

70 Shortcut Layer: 67, wt = 0, wn = 0, outputs: 38 x 38 x 256 0.000 BF

71 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

72 conv 256 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 256 1.703 BF

73 Shortcut Layer: 70, wt = 0, wn = 0, outputs: 38 x 38 x 256 0.000 BF

74 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

75 conv 256 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 256 1.703 BF

76 Shortcut Layer: 73, wt = 0, wn = 0, outputs: 38 x 38 x 256 0.000 BF

77 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

78 conv 256 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 256 1.703 BF

79 Shortcut Layer: 76, wt = 0, wn = 0, outputs: 38 x 38 x 256 0.000 BF

80 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

81 conv 256 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 256 1.703 BF

82 Shortcut Layer: 79, wt = 0, wn = 0, outputs: 38 x 38 x 256 0.000 BF

83 conv 256 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 256 0.189 BF

84 route 83 56 -> 38 x 38 x 512

85 conv 512 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 512 0.757 BF

86 conv 1024 3 x 3/ 2 38 x 38 x 512 -> 19 x 19 x1024 3.407 BF

87 conv 512 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BF

88 route 86 -> 19 x 19 x1024

89 conv 512 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BF

90 conv 512 1 x 1/ 1 19 x 19 x 512 -> 19 x 19 x 512 0.189 BF

91 conv 512 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x 512 1.703 BF

92 Shortcut Layer: 89, wt = 0, wn = 0, outputs: 19 x 19 x 512 0.000 BF

93 conv 512 1 x 1/ 1 19 x 19 x 512 -> 19 x 19 x 512 0.189 BF

94 conv 512 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x 512 1.703 BF

95 Shortcut Layer: 92, wt = 0, wn = 0, outputs: 19 x 19 x 512 0.000 BF

96 conv 512 1 x 1/ 1 19 x 19 x 512 -> 19 x 19 x 512 0.189 BF

97 conv 512 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x 512 1.703 BF

98 Shortcut Layer: 95, wt = 0, wn = 0, outputs: 19 x 19 x 512 0.000 BF

99 conv 512 1 x 1/ 1 19 x 19 x 512 -> 19 x 19 x 512 0.189 BF

100 conv 512 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x 512 1.703 BF

101 Shortcut Layer: 98, wt = 0, wn = 0, outputs: 19 x 19 x 512 0.000 BF

102 conv 512 1 x 1/ 1 19 x 19 x 512 -> 19 x 19 x 512 0.189 BF

103 route 102 87 -> 19 x 19 x1024

104 conv 1024 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x1024 0.757 BF

105 conv 512 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BF

106 conv 1024 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x1024 3.407 BF

107 conv 512 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BF

108 max 5x 5/ 1 19 x 19 x 512 -> 19 x 19 x 512 0.005 BF

109 route 107 -> 19 x 19 x 512

110 max 9x 9/ 1 19 x 19 x 512 -> 19 x 19 x 512 0.015 BF

111 route 107 -> 19 x 19 x 512

112 max 13x13/ 1 19 x 19 x 512 -> 19 x 19 x 512 0.031 BF

113 route 112 110 108 107 -> 19 x 19 x2048

114 conv 512 1 x 1/ 1 19 x 19 x2048 -> 19 x 19 x 512 0.757 BF

115 conv 1024 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x1024 3.407 BF

116 conv 512 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BF

117 conv 256 1 x 1/ 1 19 x 19 x 512 -> 19 x 19 x 256 0.095 BF

118 upsample 2x 19 x 19 x 256 -> 38 x 38 x 256

119 route 85 -> 38 x 38 x 512

120 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

121 route 120 118 -> 38 x 38 x 512

122 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

123 conv 512 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BF

124 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

125 conv 512 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BF

126 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

127 conv 128 1 x 1/ 1 38 x 38 x 256 -> 38 x 38 x 128 0.095 BF

128 upsample 2x 38 x 38 x 128 -> 76 x 76 x 128

129 route 54 -> 76 x 76 x 256

130 conv 128 1 x 1/ 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BF

131 route 130 128 -> 76 x 76 x 256

132 conv 128 1 x 1/ 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BF

133 conv 256 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BF

134 conv 128 1 x 1/ 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BF

135 conv 256 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BF

136 conv 128 1 x 1/ 1 76 x 76 x 256 -> 76 x 76 x 128 0.379 BF

137 conv 256 3 x 3/ 1 76 x 76 x 128 -> 76 x 76 x 256 3.407 BF

138 conv 51 1 x 1/ 1 76 x 76 x 256 -> 76 x 76 x 51 0.151 BF

139 yolo

[yolo] params: iou loss: ciou (4), iou_norm: 0.07, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.20

140 route 136 -> 76 x 76 x 128

141 conv 256 3 x 3/ 2 76 x 76 x 128 -> 38 x 38 x 256 0.852 BF

142 route 141 126 -> 38 x 38 x 512

143 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

144 conv 512 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BF

145 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

146 conv 512 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BF

147 conv 256 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 256 0.379 BF

148 conv 512 3 x 3/ 1 38 x 38 x 256 -> 38 x 38 x 512 3.407 BF

149 conv 51 1 x 1/ 1 38 x 38 x 512 -> 38 x 38 x 51 0.075 BF

150 yolo

[yolo] params: iou loss: ciou (4), iou_norm: 0.07, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.10

151 route 147 -> 38 x 38 x 256

152 conv 512 3 x 3/ 2 38 x 38 x 256 -> 19 x 19 x 512 0.852 BF

153 route 152 116 -> 19 x 19 x1024

154 conv 512 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BF

155 conv 1024 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x1024 3.407 BF

156 conv 512 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BF

157 conv 1024 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x1024 3.407 BF

158 conv 512 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 512 0.379 BF

159 conv 1024 3 x 3/ 1 19 x 19 x 512 -> 19 x 19 x1024 3.407 BF

160 conv 51 1 x 1/ 1 19 x 19 x1024 -> 19 x 19 x 51 0.038 BF

161 yolo

[yolo] params: iou loss: ciou (4), iou_norm: 0.07, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.05

Total BFLOPS 127.403

avg_outputs = 1049302

Allocate additional workspace_size = 106.46 MB
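The shapes in the log can be reproduced with the usual convolution output-size formula. The sketch below assumes darknet's convention that pad=1 in the cfg means padding = size/2 (integer division) rather than a literal one-pixel border; the function names are illustrative. It also shows where the filter count of the 1x1 convolution in front of each [yolo] layer comes from.

```python
def conv_output_size(size_in, kernel, stride, pad_flag=1):
    """Spatial output size of a darknet-style convolution.

    Assumes pad=1 in the cfg means padding = kernel // 2, not one pixel.
    """
    padding = kernel // 2 if pad_flag else 0
    return (size_in + 2 * padding - kernel) // stride + 1

def yolo_head_filters(num_classes, anchors_per_scale=3):
    """Channels before a [yolo] layer: (x, y, w, h, objectness + classes) per anchor."""
    return anchors_per_scale * (num_classes + 5)

size = 608
sizes = []
for _ in range(5):                 # the five stride-2 "Downsample" convolutions
    size = conv_output_size(size, kernel=3, stride=2)
    sizes.append(size)

print(sizes)                       # spatial sizes after each downsampling step
print(yolo_head_filters(6), yolo_head_filters(12))
```

A 1x1 convolution with stride 1 therefore keeps 304 x 304 at 304 x 304, since its padding is 0.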

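The above is the YOLOv4 network structure. The network is long, so its parts need to be summarized. YOLOv4 has 162 layers (indices 0 to 161). At 608 × 608 resolution its computational cost is 128.46 BFLOPS for the standard 80-class model (the 12-class log above reports 127.403 BFLOPS), compared with about 141 BFLOPS for YOLOv3.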
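The per-layer BF column in the log can be checked by hand. The sketch below assumes the figure counts one multiply and one add per weight per output position, which reproduces the first three log entries; the function name `conv_bflops` is illustrative.

```python
def conv_bflops(kernel, c_in, c_out, h_out, w_out):
    """Billions of floating-point operations of one convolution layer."""
    return 2.0 * kernel * kernel * c_in * c_out * h_out * w_out / 1e9

layer0 = conv_bflops(3, 3, 32, 608, 608)     # log layer 0: 0.639 BF
layer1 = conv_bflops(3, 32, 64, 304, 304)    # log layer 1: 3.407 BF
layer2 = conv_bflops(1, 64, 64, 304, 304)    # log layer 2: 0.757 BF

print(round(layer0, 3), round(layer1, 3), round(layer2, 3))
```

Summing this quantity over all convolutional layers accounts for nearly all of the total, since the shortcut and pooling layers contribute only a few thousandths each.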