一、项目架构要求

1.数据仓库的数据来源为业务数据库(mysql)

2.通过sqoop将mysql中的业务数据导入到大数据平台(hive)

3.通过hive进行数据计算和数据分析形成数据报表

4.再通过sqoop将数据报表导出到mysql

5.使用FineReport制作数据报表

二、项目实现

2.1 初始化脚本

进行数据预置,完成“1.数据仓库的数据来源为业务数据库(mysql)”。

2.1.1 初始化MySQL脚本

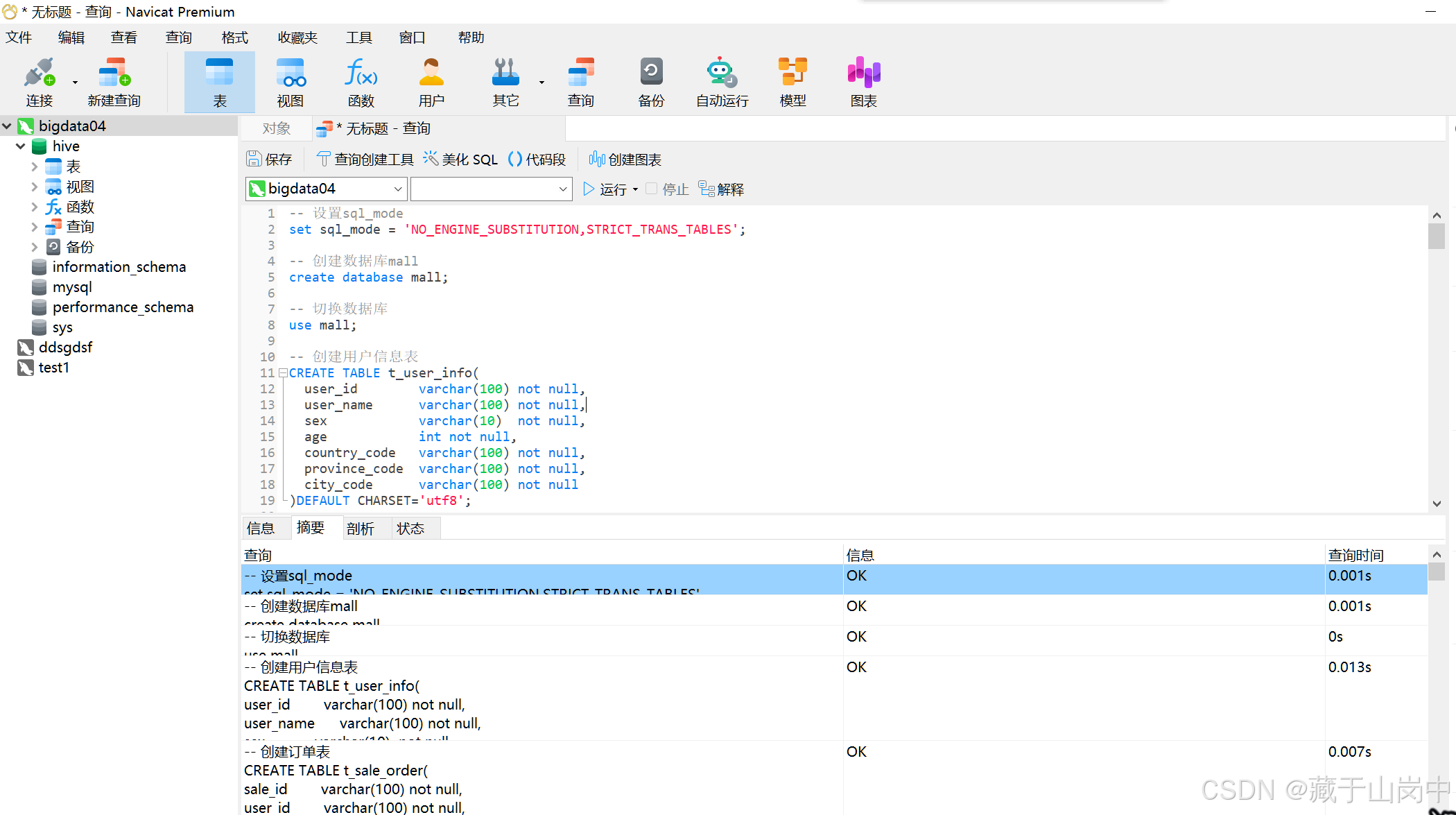

在navicat中,远程连接虚拟主机上的MySQL,并且执行以下查询。

-- 设置sql_mode

set sql_mode = 'NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES';

-- 创建数据库mall

create database mall;

-- 切换数据库

use mall;

-- 创建用户信息表

CREATE TABLE t_user_info(

user_id varchar(100) not null,

user_name varchar(100) not null,

sex varchar(10) not null,

age int not null,

country_code varchar(100) not null,

province_code varchar(100) not null,

city_code varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建订单表

CREATE TABLE t_sale_order(

sale_id varchar(100) not null,

user_id varchar(100) not null,

goods_id varchar(100) not null,

price int not null,

sale_count int not null,

total_price int not null,

create_time varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建商品信息表

CREATE TABLE dim_goods_info(

goods_id varchar(100) not null,

goods_name varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建国家信息表

CREATE TABLE dim_country_info(

country_code varchar(100) not null,

country_name varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建省份信息表

CREATE TABLE dim_province_info(

province_code varchar(100) not null,

province_name varchar(100) not null,

country_code varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建城市信息表

CREATE TABLE dim_city_info(

city_code varchar(100) not null,

city_name varchar(100) not null,

province_code varchar(100) not null

)DEFAULT CHARSET='utf8';

-- 创建用户浏览日志表

CREATE TABLE t_access_log(

log_str varchar(500) not null

)DEFAULT CHARSET='utf8';

-- 创建商品类别表

CREATE TABLE dim_goods_type(

goods_id varchar(100) not null,

type_id varchar(100) not null,

type_name varchar(100) not null

)DEFAULT CHARSET='utf8';

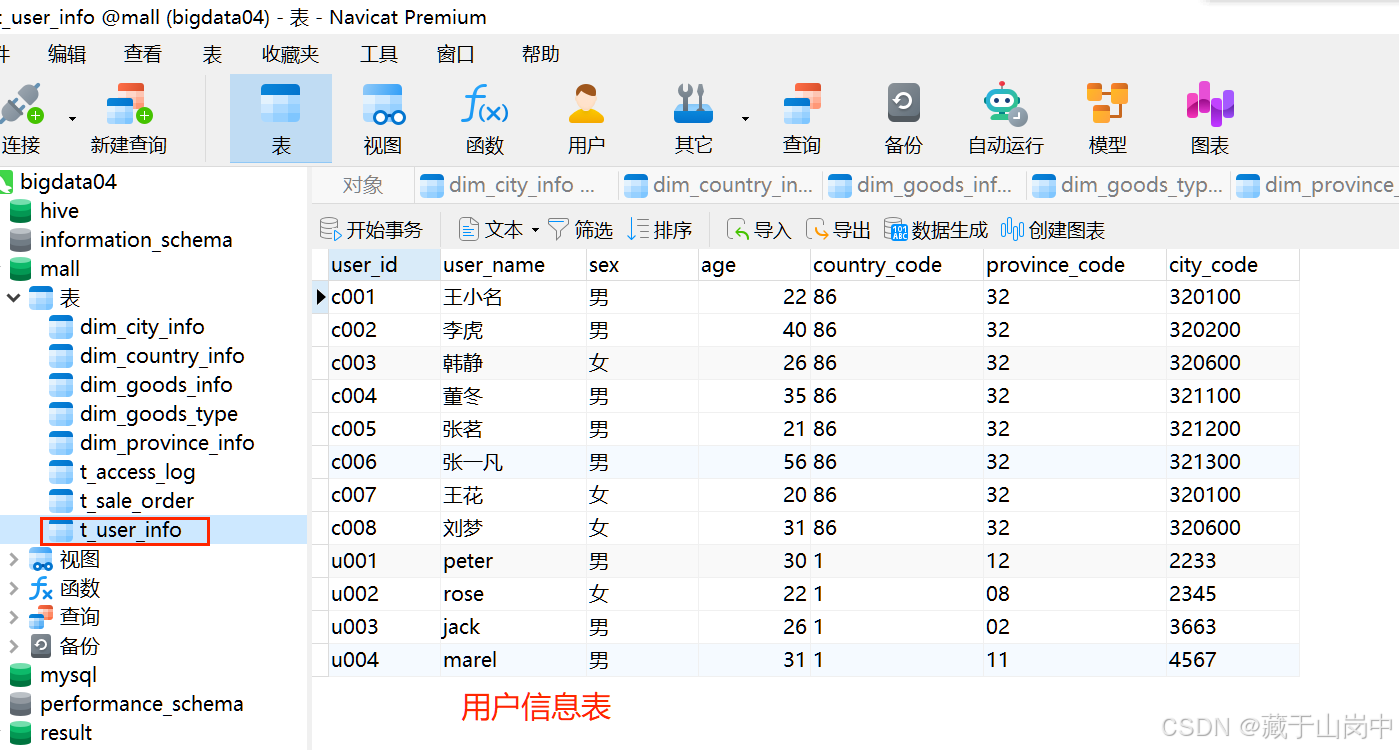

-- 用户信息表插入数据

insert into t_user_info values('c001','王小名','男',22,'86','32','320100');

insert into t_user_info values('c002','李虎','男',40,'86','32','320200');

insert into t_user_info values('c003','韩静','女',26,'86','32','320600');

insert into t_user_info values('c004','董冬','男',35,'86','32','321100');

insert into t_user_info values('c005','张茗','男',21,'86','32','321200');

insert into t_user_info values('c006','张一凡','男',56,'86','32','321300');

insert into t_user_info values('c007','王花','女',20,'86','32','320100');

insert into t_user_info values('c008','刘梦','女',31,'86','32','320600');

insert into t_user_info values('u001','peter','男',30,'1','12','2233');

insert into t_user_info values('u002','rose','女',22,'1','08','2345');

insert into t_user_info values('u003','jack','男',26,'1','02','3663');

insert into t_user_info values('u004','marel','男',31,'1','11','4567');

commit;

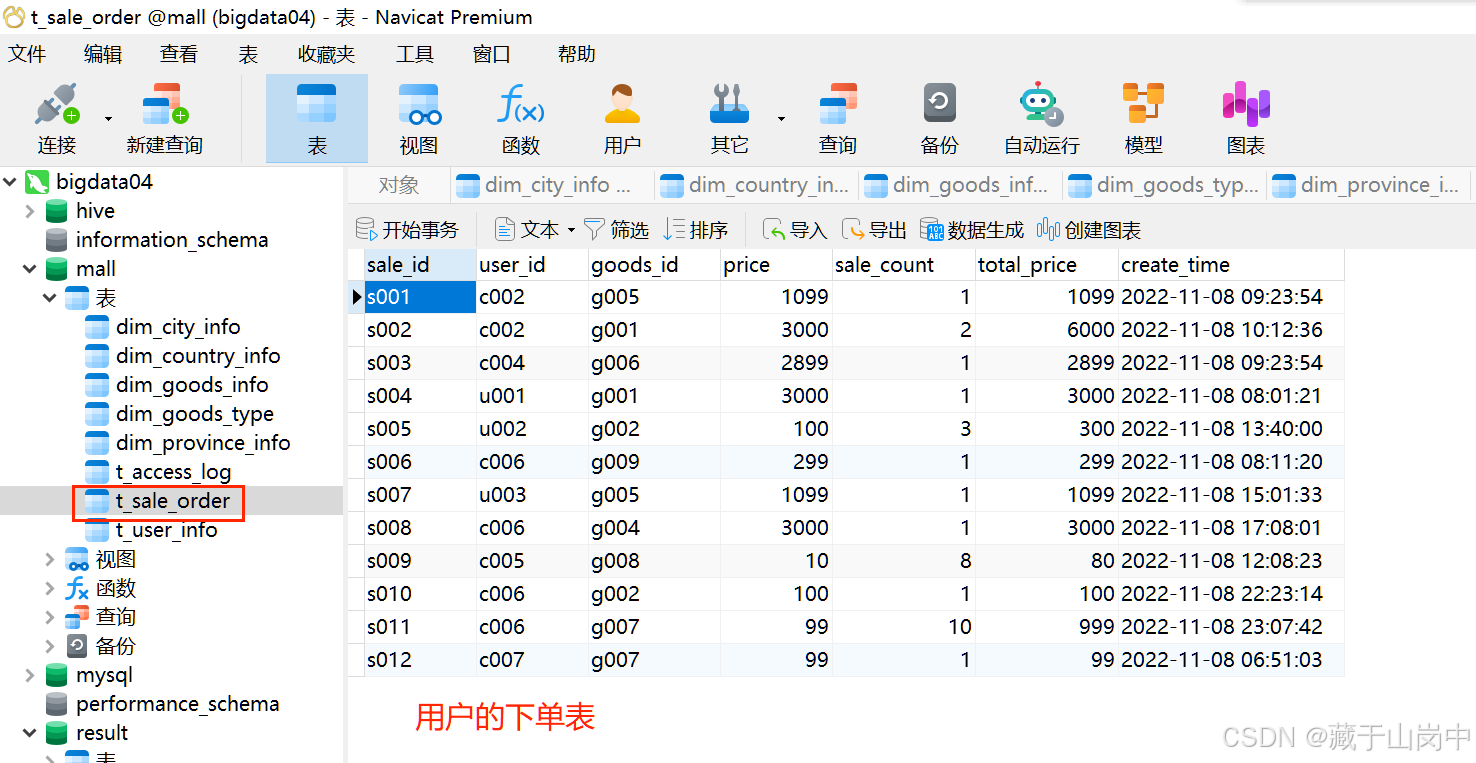

-- 订单表插入数据

insert into t_sale_order values('s001','c002','g005',1099,1,1099,'2022-11-08 09:23:54');

insert into t_sale_order values('s002','c002','g001',3000,2,6000,'2022-11-08 10:12:36');

insert into t_sale_order values('s003','c004','g006',2899,1,2899,'2022-11-08 09:23:54');

insert into t_sale_order values('s004','u001','g001',3000,1,3000,'2022-11-08 08:01:21');

insert into t_sale_order values('s005','u002','g002',100,3,300,'2022-11-08 13:40:00');

insert into t_sale_order values('s006','c006','g009',299,1,299,'2022-11-08 08:11:20');

insert into t_sale_order values('s007','u003','g005',1099,1,1099,'2022-11-08 15:01:33');

insert into t_sale_order values('s008','c006','g004',3000,1,3000,'2022-11-08 17:08:01');

insert into t_sale_order values('s009','c005','g008',10,8,80,'2022-11-08 12:08:23');

insert into t_sale_order values('s010','c006','g002',100,1,100,'2022-11-08 22:23:14');

insert into t_sale_order values('s011','c006','g007',99,10,999,'2022-11-08 23:07:42');

insert into t_sale_order values('s012','c007','g007',99,1,99,'2022-11-08 06:51:03');

commit;

-- 商品信息表插入数据

insert into dim_goods_info values('g001','OPPO K9x 5G全网通手机');

insert into dim_goods_info values('g002','儿童历史地理大百科全书 绘本礼盒典藏全40册');

insert into dim_goods_info values('g003','欧珀莱 AUPRES 时光锁小紫钻抗皱紧实眼霜');

insert into dim_goods_info values('g004','苏泊尔(SUPOR)净水器家用超滤软水机');

insert into dim_goods_info values('g005','小米粽 平板电脑');

insert into dim_goods_info values('g006','GoPro HERO11 Black运动相机');

insert into dim_goods_info values('g007','云南实建褚橙冰糖橙');

insert into dim_goods_info values('g008','四色蓝泡泡洁厕');

insert into dim_goods_info values('g009','奥康男鞋');

commit;

-- 国家信息表插入数据

insert into dim_country_info values('1','美国');

insert into dim_country_info values('65','新加坡');

insert into dim_country_info values('81','日本');

insert into dim_country_info values('61','澳大利亚');

insert into dim_country_info values('54','阿根廷');

insert into dim_country_info values('55','巴西');

insert into dim_country_info values('45','丹麦');

insert into dim_country_info values('86','中国');

commit;

-- 省份信息表插入数据

insert into dim_province_info values('11','北京市','86');

insert into dim_province_info values('12','天津市','86');

insert into dim_province_info values('31','上海市','86');

insert into dim_province_info values('50','重庆市','86');

insert into dim_province_info values('13','河北省','86');

insert into dim_province_info values('41','河南省','86');

insert into dim_province_info values('53','云南省','86');

insert into dim_province_info values('21','辽宁省','86');

insert into dim_province_info values('23','湖南省','86');

insert into dim_province_info values('43','黑龙江省','86');

insert into dim_province_info values('34','安徽省','86');

insert into dim_province_info values('37','山东省','86');

insert into dim_province_info values('65','新疆维吾尔自治区','86');

insert into dim_province_info values('32','江苏省','86');

insert into dim_province_info values('33','浙江省','86');

insert into dim_province_info values('36','江西省','86');

commit;

-- 城市信息表插入数据

insert into dim_city_info values('320100','南京市','32');

insert into dim_city_info values('320200','无锡市','32');

insert into dim_city_info values('320300','徐州市','32');

insert into dim_city_info values('320400','常州市','32');

insert into dim_city_info values('320500','苏州市','32');

insert into dim_city_info values('320600','南通市','32');

insert into dim_city_info values('320700','连云港市','32');

insert into dim_city_info values('320800','淮安市','32');

insert into dim_city_info values('320900','盐城市','32');

insert into dim_city_info values('321000','扬州市','32');

insert into dim_city_info values('321100','镇江市','32');

insert into dim_city_info values('321200','泰州市','32');

insert into dim_city_info values('321300','宿迁市','32');

commit;

-- 用户浏览日志表插入数据

insert into t_access_log values('{"user_id": "c001","productId": "g002","productName": "儿童历史地理大百科全书 绘本礼盒典藏全40册","viewTimestamp": "2022-11-07 13:42:38"}');

insert into t_access_log values('{"user_id": "c006","productId": "g007","productName": "云南实建褚橙冰糖橙","viewTimestamp": "2022-11-09 01:02:18"}');

insert into t_access_log values('{"user_id": "c002","productId": "g001","productName": "OPPO K9x 5G全网通手机","viewTimestamp": "2022-11-07 11:02:28"}');

insert into t_access_log values('{"user_id": "c006","productId": "g001","productName": "OPPO K9x 5G全网通手机","viewTimestamp": "2022-11-09 13:01:05"}');

insert into t_access_log values('{"user_id": "c008","productId": "g005","productName": "小米粽 平板电脑","viewTimestamp": "2022-11-09 01:02:18"}');

insert into t_access_log values('{"user_id": "c006","productId": "g007","productName": "云南实建褚橙冰糖橙","viewTimestamp": "2022-11-09 01:02:18"}');

insert into t_access_log values('{"user_id": "u001","productId": "g001","productName": "OPPO K9x 5G全网通手机","viewTimestamp": "2022-11-08 08:45:00"}');

insert into t_access_log values('{"user_id": "u003","productId": "g005","productName": "小米粽 平板电脑","viewTimestamp": "2022-11-09 01:02:18"}');

insert into t_access_log values('{"user_id": "u001","productId": "g006","productName": "GoPro HERO11 Black运动相机","viewTimestamp": "2022-11-07 09:06:27"}');

insert into t_access_log values('{"user_id": "u001","productId": "g002","productName": "儿童历史地理大百科全书 绘本礼盒典藏全40册","viewTimestamp": "2022-11-09 08:02:29"}');

commit;

-- 商品类别表插入数据

insert into dim_goods_type values('g001','1','3C产品');

insert into dim_goods_type values('g002','2','书籍');

insert into dim_goods_type values('g003','3','日用品');

insert into dim_goods_type values('g004','4','家电');

insert into dim_goods_type values('g005','1','3C产品');

insert into dim_goods_type values('g006','1','3C产品');

insert into dim_goods_type values('g007','5','水果');

insert into dim_goods_type values('g008','3','日用品');

insert into dim_goods_type values('g009','6','鞋帽');

commit;

执行结果:

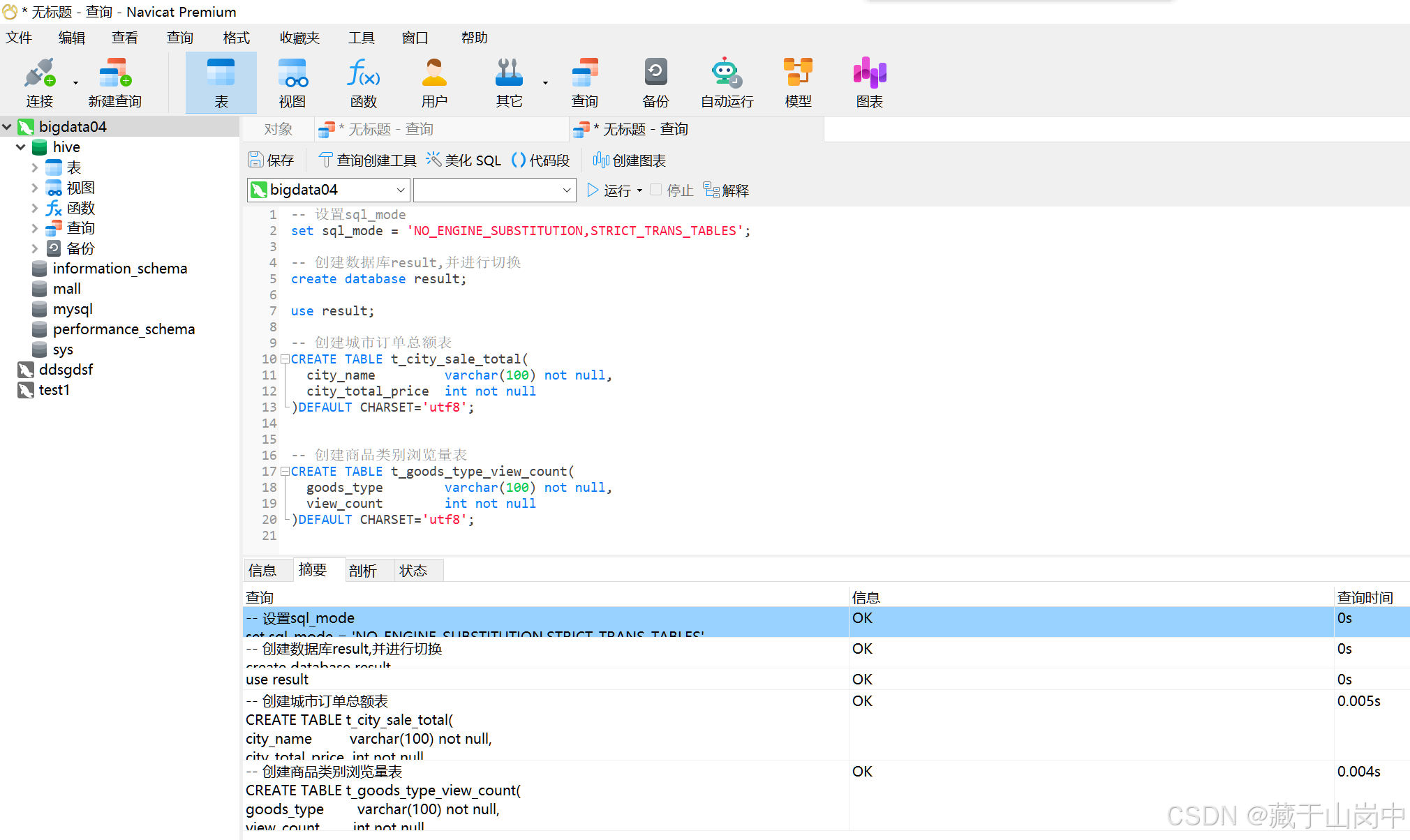

再执行下面的SQL查询语句。

-- 设置sql_mode

set sql_mode = 'NO_ENGINE_SUBSTITUTION,STRICT_TRANS_TABLES';

-- 创建数据库result,并进行切换

create database result;

use result;

-- 创建城市订单总额表

CREATE TABLE t_city_sale_total(

city_name varchar(100) not null,

city_total_price int not null

)DEFAULT CHARSET='utf8';

-- 创建商品类别浏览量表

CREATE TABLE t_goods_type_view_count(

goods_type varchar(100) not null,

view_count int not null

)DEFAULT CHARSET='utf8';

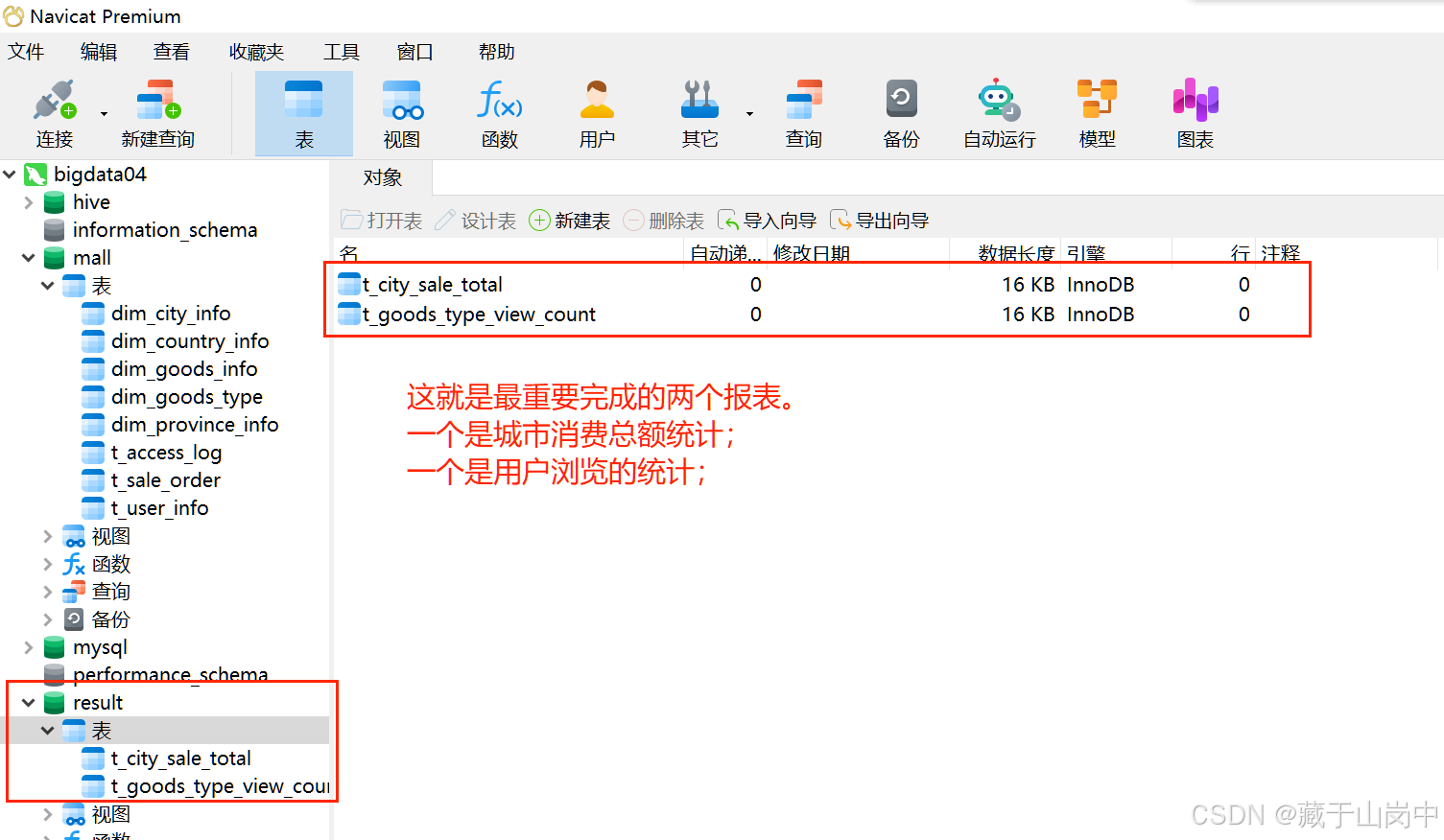

执行结果截图:

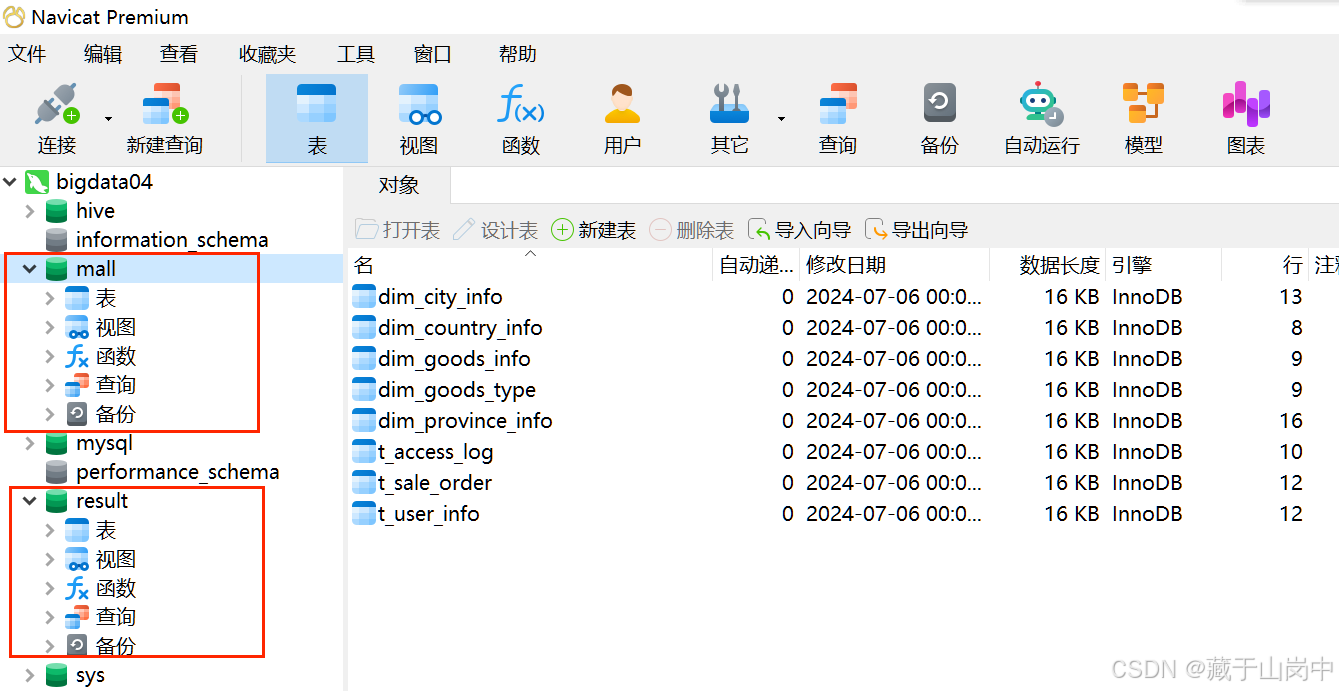

刷新之后,查看新创建的两个数据库:mall和result

分析一下数据库及表的内容。

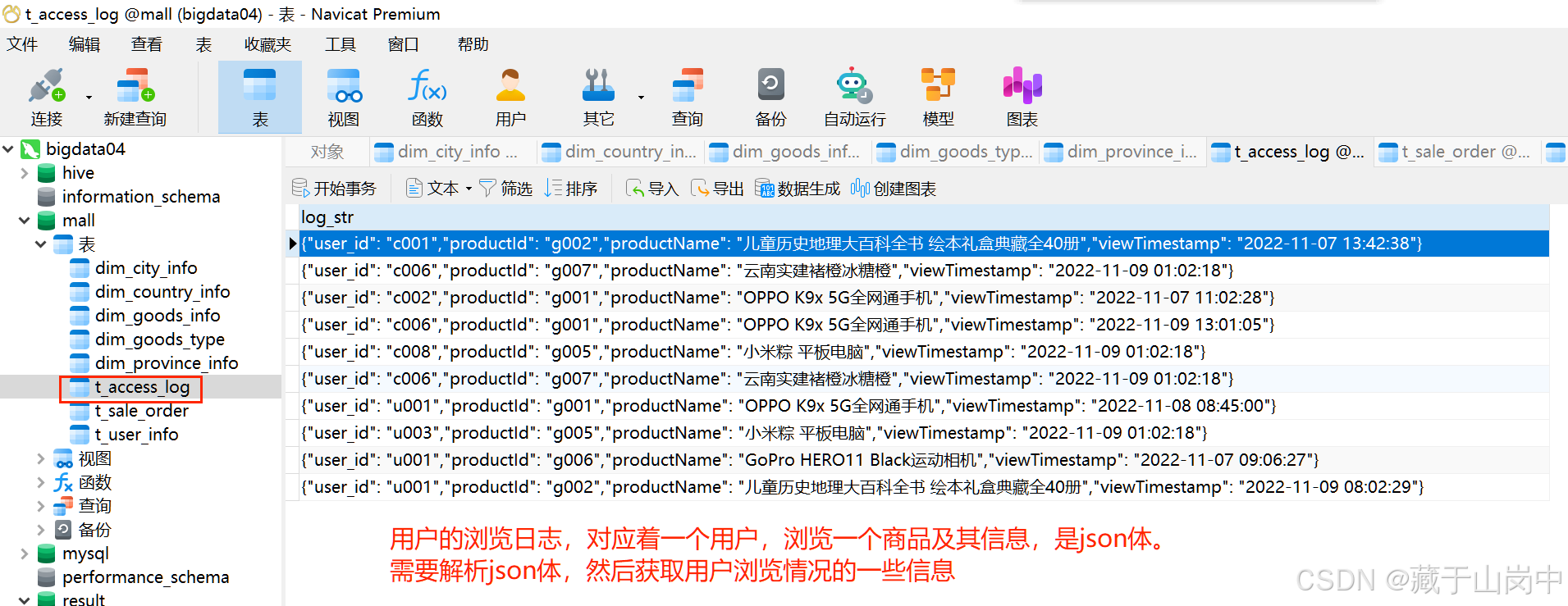

(1)mall.t_access_log

(2)mall.t_sale_order

(3)mall.t_user_info

(4)result数据库

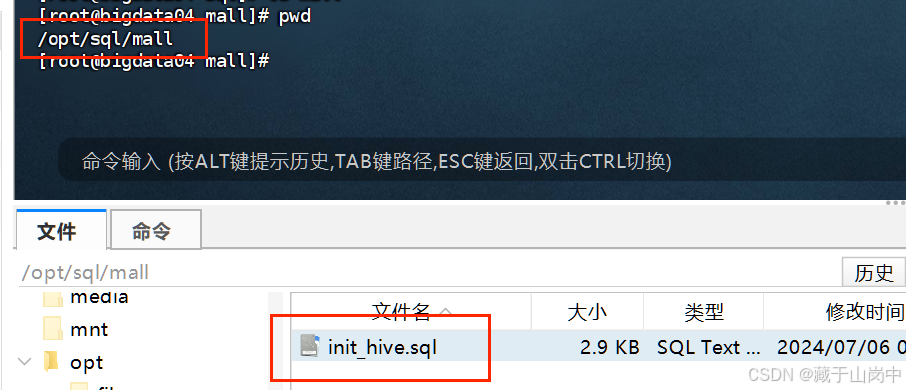

2.1.2 初始化Hive脚本

首先,在虚拟机上开启hive,在虚拟机上上传一个SQL文件:init_hive.sql 用于在hive中创建数据表。

项目中想要做的就是通过sqoop将MySQL中的数据导入到hive大数据平台,所以这个里面的创建八张表和上面的MySQL中创建的八张表一一对应。

--创建数据库mall_bigdata

create database if not exists mall_bigdata;

--切换数据库至mall_bigdata

use mall_bigdata;

--创建用户信息表

create table if not exists mall_bigdata.ods_user_info

(

user_id STRING comment "用户id"

,user_name STRING comment "用户姓名"

,sex STRING comment "性别"

,age INT comment "年龄"

,country_code STRING comment "国家码"

,province_code STRING comment "省份码"

,city_code STRING comment "城市码"

)

comment "用户信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建订单表

create table if not exists mall_bigdata.ods_sale_order

(

sale_id STRING comment "订单id"

,user_id STRING comment "用户id"

,goods_id STRING comment "商品id"

,price INT comment "单价"

,sale_count INT comment "购买数量"

,total_price INT comment "购买总金额"

,create_time STRING comment "订单生成时间"

)

comment "销售订单表"

row format delimited fields terminated by ","

stored as textfile;

--创建商品信息表

create table if not exists mall_bigdata.dim_goods_info

(

goods_id STRING comment "商品id"

,goods_name STRING comment "商品名称"

)

comment "商品信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建国家信息表

create table if not exists mall_bigdata.dim_country_info

(

country_code STRING comment "国家码"

,country_name STRING comment "国家名称"

)

comment "国家信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建省份信息表

create table if not exists mall_bigdata.dim_province_info

(

province_code STRING comment "省份码"

,province_name STRING comment "省份名称"

,country_code STRING comment "国家码"

)

comment "省份信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建城市信息表

create table if not exists mall_bigdata.dim_city_info

(

city_code STRING comment "城市码"

,city_name STRING comment "城市名称"

,province_code STRING comment "省份码"

)

comment "城市信息表"

row format delimited fields terminated by ","

stored as textfile;

--创建用户浏览日志表

create table if not exists mall_bigdata.ods_access_log

(

log_str STRING comment "浏览日志"

)

comment "用户浏览日志表"

row format delimited fields terminated by "|"

stored as textfile;

--创建商品类别表

create table if not exists mall_bigdata.dim_goods_type

(

goods_id STRING comment "商品id"

,type_id STRING comment "商品类别id"

,type_name STRING comment "商品类别名称"

)

comment "商品类别表"

row format delimited fields terminated by ","

stored as textfile;

上传到虚拟机之后,在hive上执行该sql文件

source /opt/sql/mall/init_hive.sql;

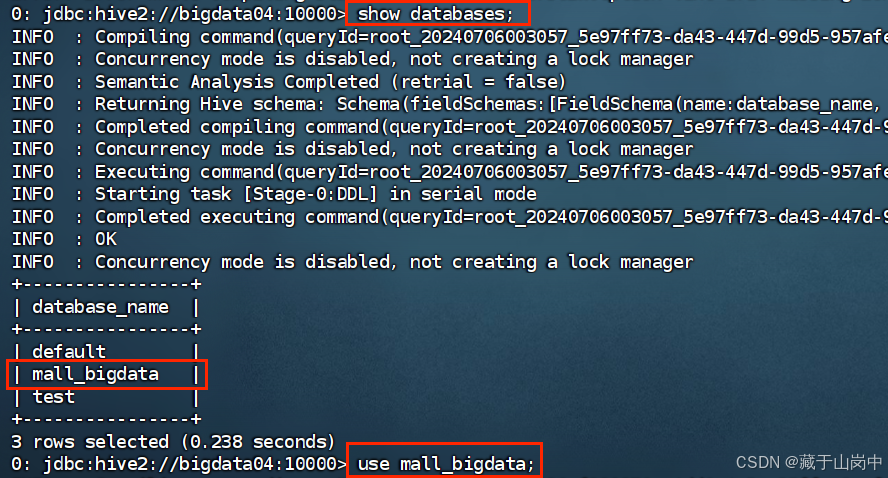

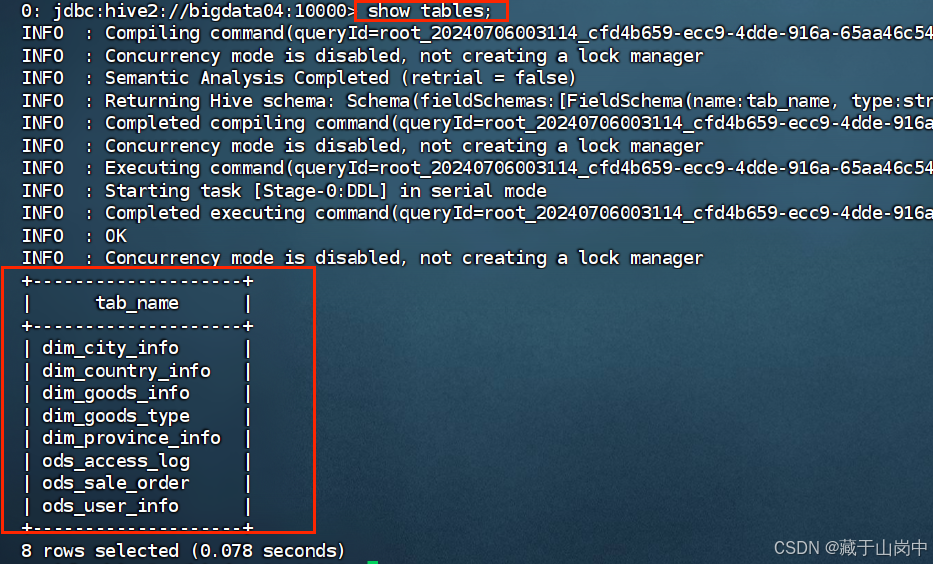

创建之后,检查一下,可以看到创建了一个新的数据库:mall_bigdata

再查看一下mall_bigdata里面的数据表

use mall_bigdata;

show tables;

2.2 数据传输

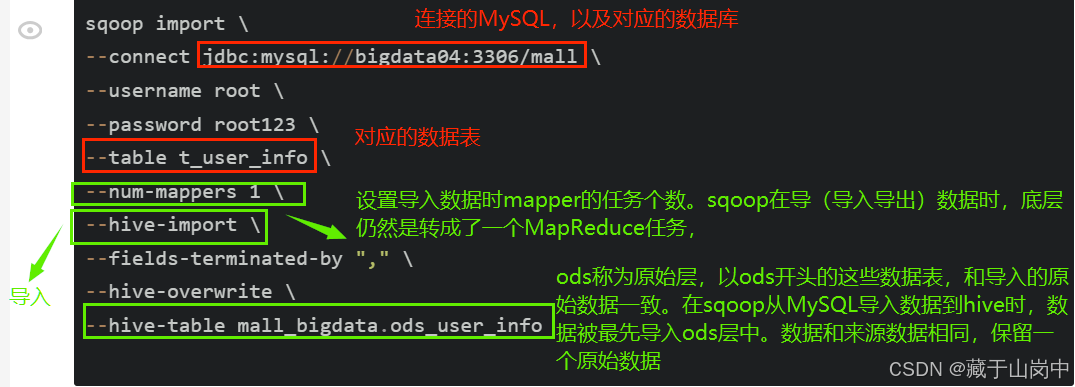

完成“2.通过sqoop将mysql中的业务数据导入到大数据平台(hive)”。

2.2.1 下载安装sqoop

可以参考文章Linux上安装sqoop1.4.6

2.2.2 sqoop导入数据

进入sqoop目录bin下:

cd /opt/softs/sqoop1.4.6/bin

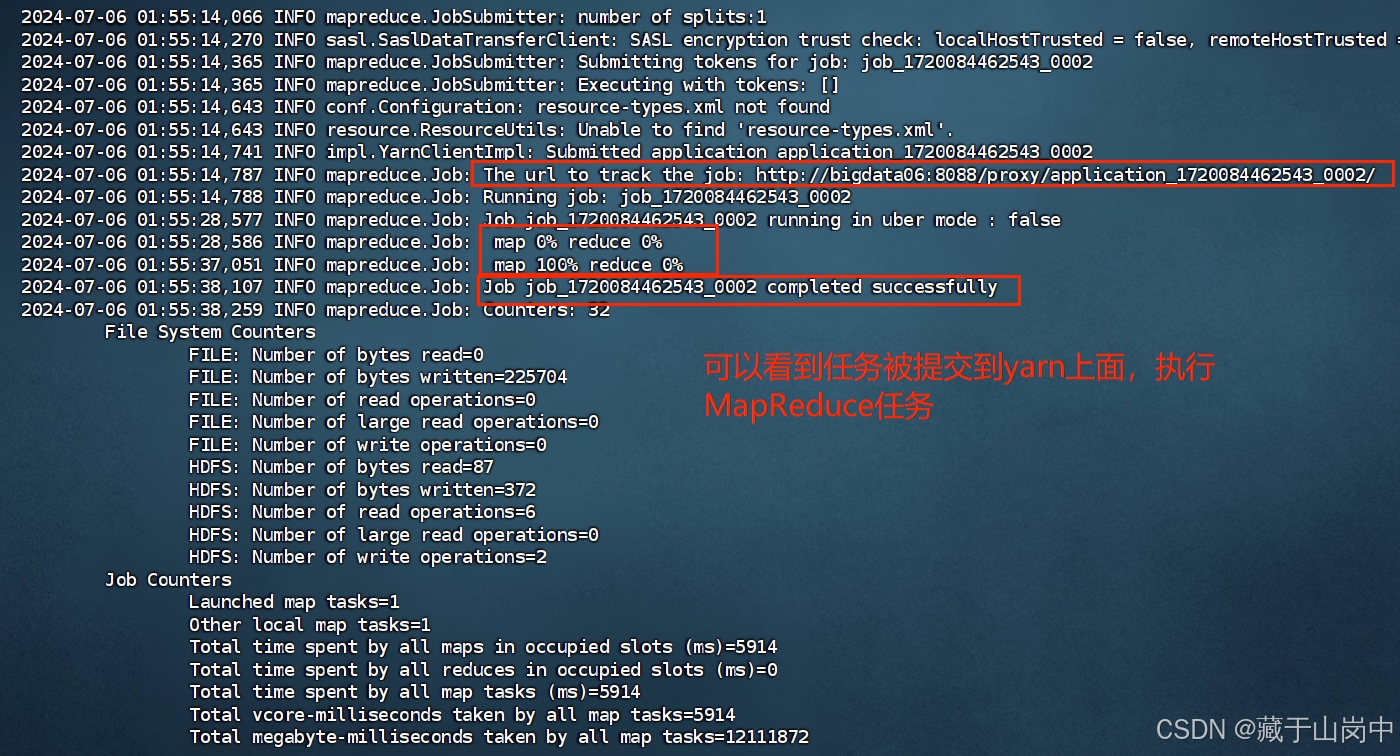

sqoop import \

--connect jdbc:mysql://bigdata04:3306/mall \

--username root \

--password cxy20030419 \

--table t_user_info \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_user_info

解析:

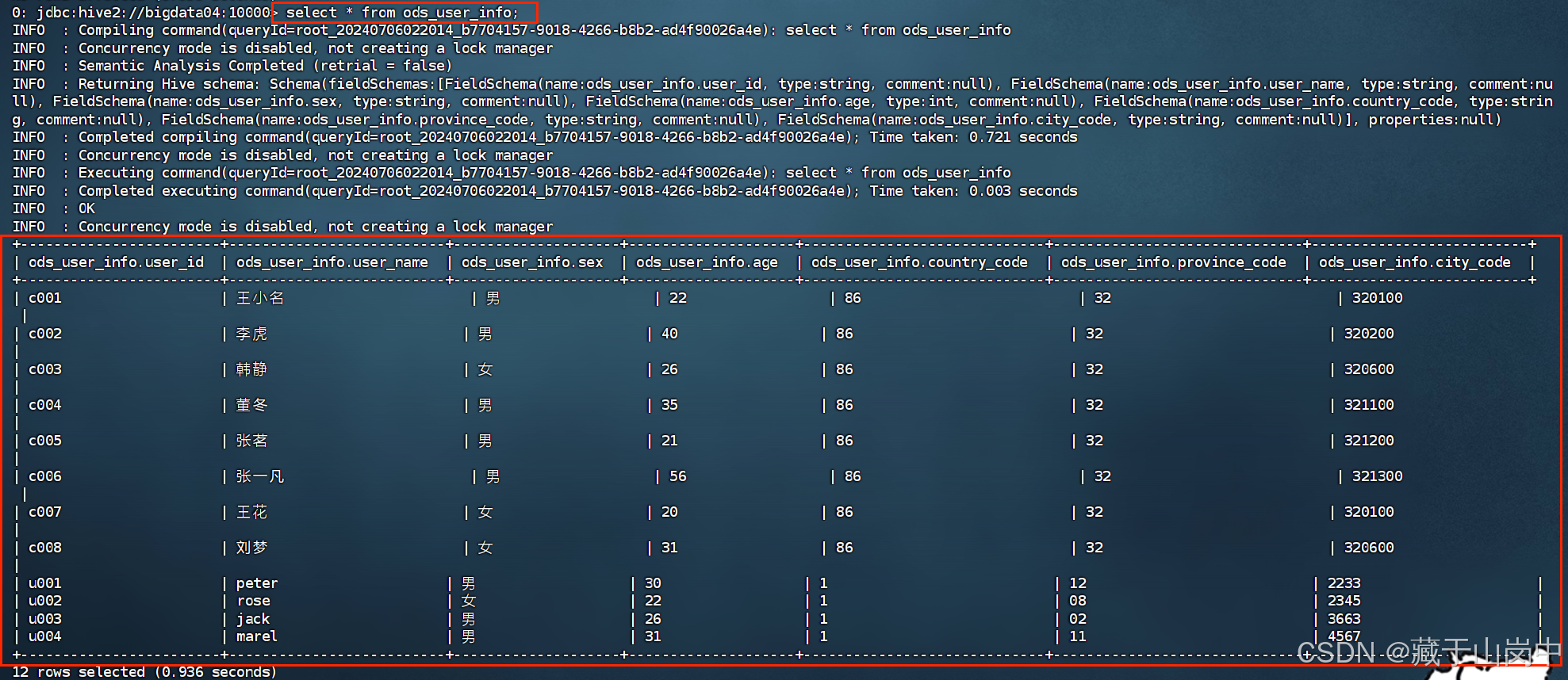

检查一下

没有问题后,然后依次对八张表,都进行上述操作。(修改MySQL中表名以及hive中对应表名即可)

sqoop import \

--connect jdbc:mysql://bigdata04:3306/mall \

--username root \

--password cxy20030419 \

--table t_sale_order \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_sale_order

sqoop import \

--connect jdbc:mysql://bigdata04:3306/mall \

--username root \

--password cxy20030419 \

--table t_access_log \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_access_log

sqoop import \

--connect jdbc:mysql://bigdata04:3306/mall \

--username root \

--password cxy20030419 \

--table dim_country_info \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_country_info

sqoop import \

--connect jdbc:mysql://bigdata04:3306/mall \

--username root \

--password cxy20030419 \

--table dim_goods_info \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_goods_info

sqoop import \

--connect jdbc:mysql://bigdata04:3306/mall \

--username root \

--password cxy20030419 \

--table dim_province_info \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_province_info

sqoop import \

--connect jdbc:mysql://bigdata04:3306/mall \

--username root \

--password cxy20030419 \

--table dim_city_info \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_city_info

sqoop import \

--connect jdbc:mysql://bigdata04:3306/mall \

--username root \

--password cxy20030419 \

--table dim_goods_type \

--num-mappers 1 \

--hive-import \

--fields-terminated-by "," \

--hive-overwrite \

--hive-table mall_bigdata.ods_goods_type

2.3 生成数据报表

2.3.1 补全用户信息表

首先需要生成一个临时的辅助表,用来补全用户信息表中的关于用户的所在国家名称、所在省份名称、所在城市名称。

--切换数据库

use mall_bigdata;

--补全用户信息表中的关于用户的所在国家名称、所在省份名称、所在城市名称,一个临时表

--如果所生成的字段在不同表中同名列,那就需要指定是在哪个表中选择的,一般是从最左表进行选择

--在运行脚本的时候进行创建,运行结束之后就删除

--dwd是明细层

--hive中可以将一个查到的数据集作为新表的字段,所以不需要明确表的字段,直接使用select的结果建表

create table if not exists mall_bigdata.tmp_dwd_user_info

as

select

user_id,

user_name,

sex,

age,

country_name,

province_name,

city_name

from

(select

user_id,

user_name,

sex,

age,

country_code,

province_code,

city_code

from mall_bigdata.ods_user_info) t1

left join

(

select

country_code,

country_name

from ods_country_info

) t2

on t1.country_code = t2.country_code

left join

(

select

province_code,

province_name,

country_code

from ods_province_info

) t3

on t1.province_code = t3.province_code and t1.country_code = t3.country_code

left join

(

select

city_code,

city_name,

province_code

from ods_city_info

) t4

on t1.city_code = t4.city_code and t1.province_code = t4.province_code;

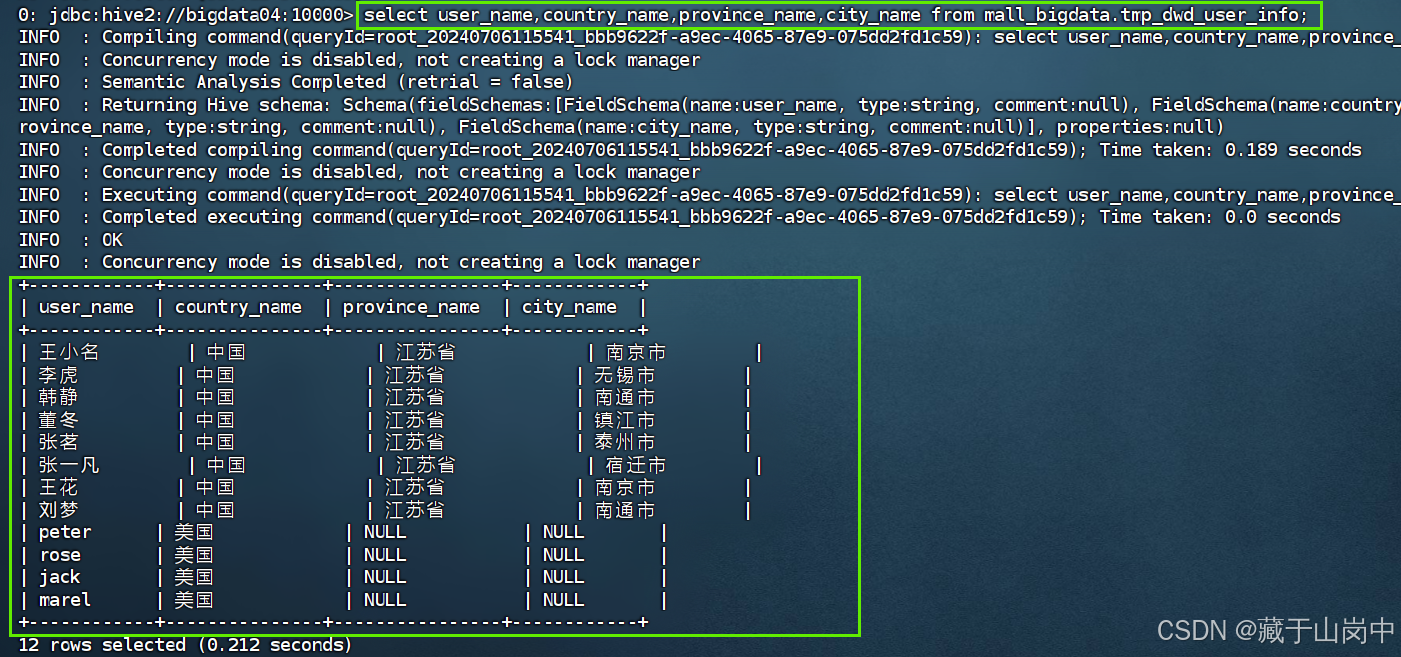

执行sql文件之后,查看一下数据库中的数据

select user_name,country_name,province_name,city_name from mall_bigdata.tmp_dwd_user_info;

2.3.2 补全订单表用户、商品信息

第二步,补全订单表的用户名称和商品名称,过滤中国用户的订单记录。

--补全订单表中用户名称和商品名称

--过滤中国用户的订单记录

create table if not exists mall_bigdata.dwd_sale_order_detail

as

select

sale_id,

t1.user_id,

user_name,

sex,

age,

country_name,

province_name,

city_name,

t1.goods_id,

goods_name,

price,

sale_count,

total_price,

create_time

from

(

select

sale_id,

user_id,

goods_id,

price,

sale_count,

total_price,

create_time

from ods_sale_order

)t1

left on

(

select

user_id,

user_name,

sex,

age,

country_name,

province_name,

city_name

from tmp_dwd_user_info

)t2

on t1.user_id = t2.user_id

left join

(

select

goods_id,

goods_name

from ods_goods_info

)t3

on t1.goods_id = t3.goods_id

where t2.country_name='中国';

--删除临时表,过河就拆桥

drop table if exists tmp_dwd_user_info;

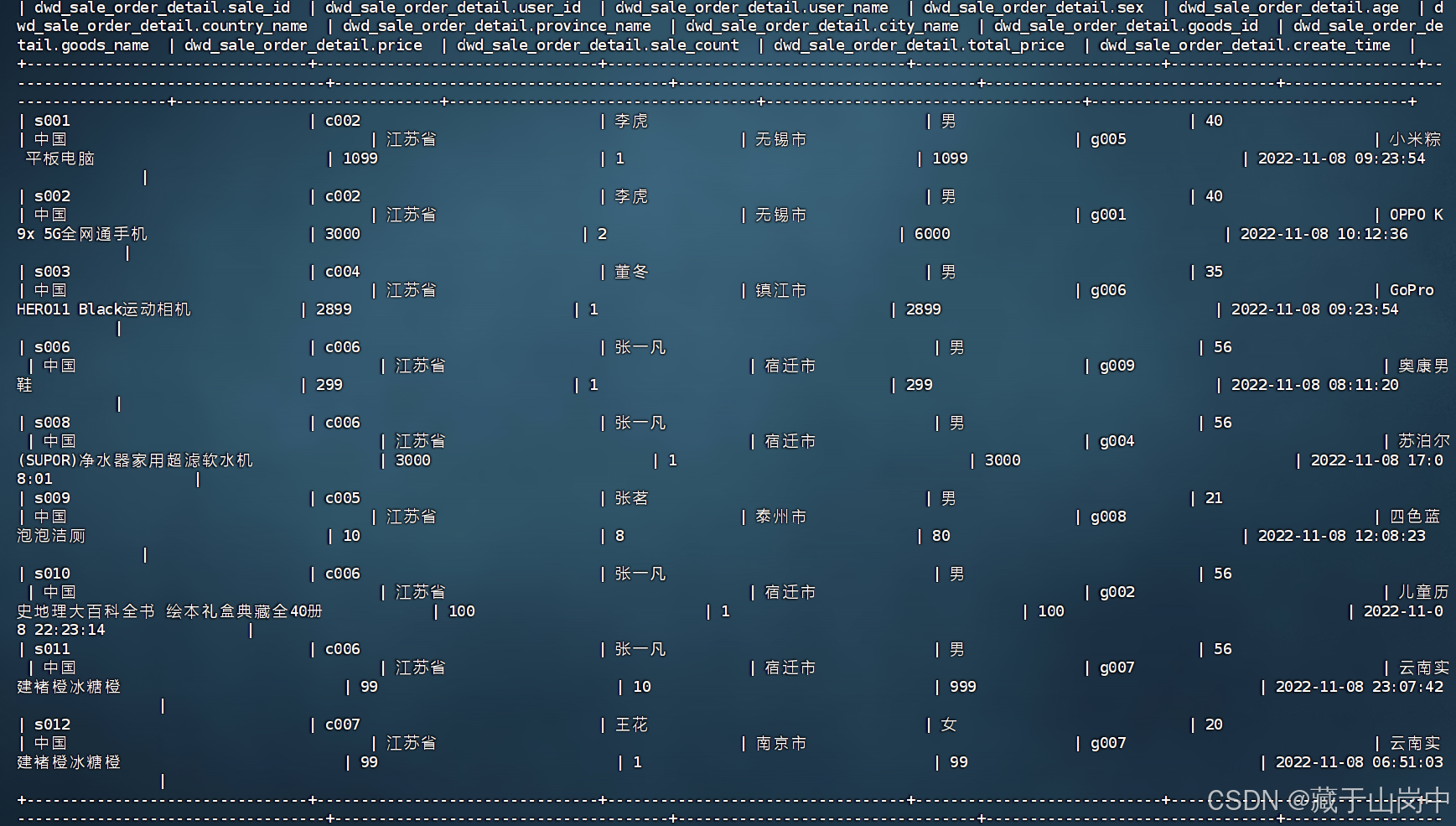

执行sql文件之后,查看表中的数据

2.3.3 计算不同城市的销售总额

dws是汇总层,汇总层的数据一般都是来源于明细层dwd,比如我现在要计算不同城市的销售总额的时候,就在明细层里面先对每个订单的用户、商品、销售信息进行汇总,其中包括订单总额和城市信息等,这些就是明细层里面进行的明细数据。这样我们只需要从明细层里面进行一些数据的计算汇总即可得到最终的数据报表。

create table if not exists mall_bigdata.dws_sale_order_city_total

as

select

city_name,

sum(total_price) as total_price

from dwd_sale_order_detail

group by city_name;

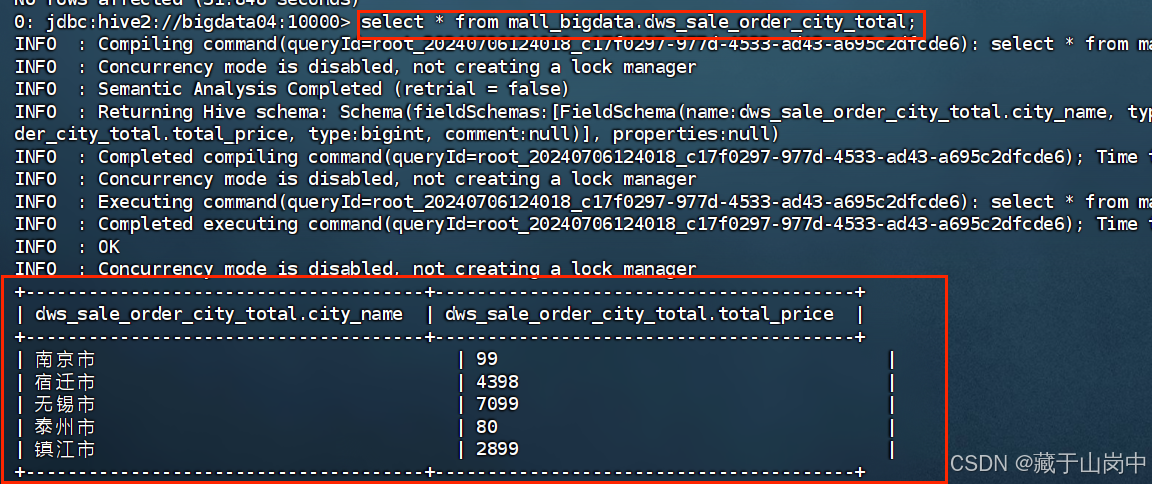

运行完sql文件之后,可以查看一下汇总的报表数据。

source /opt/sql/mall/dws_sale_order_city_total.sql;

2.3.4 计算用户商品类别浏览量表

提取用户浏览日志表中的商品信息,补全商品的类型

再根据商品类别的不同,计算用户对于不同的商品类型的浏览次数

create table if not exists mall_bigdata.dws_view_goods_type_count

as

select

type_name,

count(type_name) as view_goods_type_count

from

(

select

get_json_object(log_str,'$.productId') as product_id

from mall_bigdata.ods_access_log

)t1

inner join

(

select

goods_id,

type_name

from ods_goods_type

)t2

on t1.product_id = t2.goods_id

group by type_name;

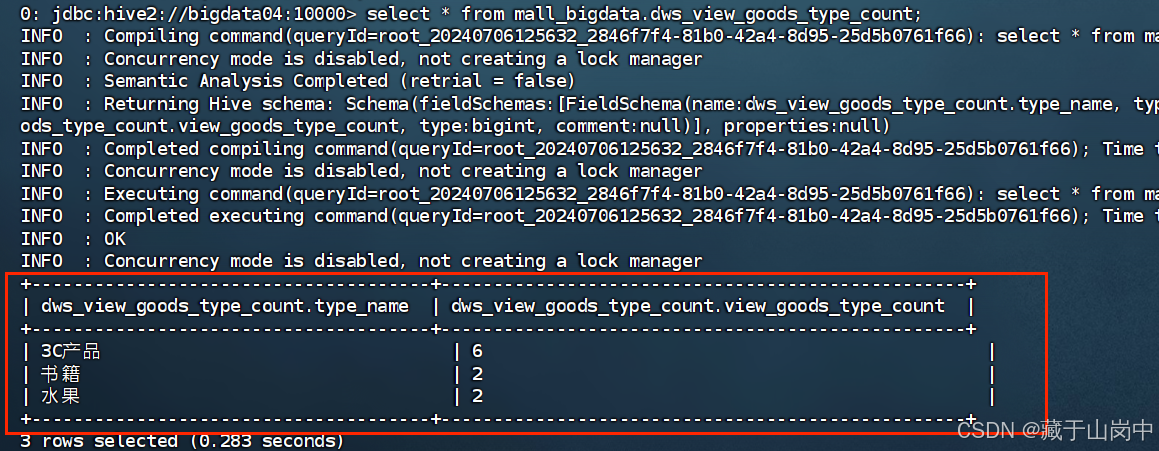

运行完sql文件之后,可以查看一下汇总的报表数据。

source /opt/sql/mall/dws_view_goods_type_count.sql;

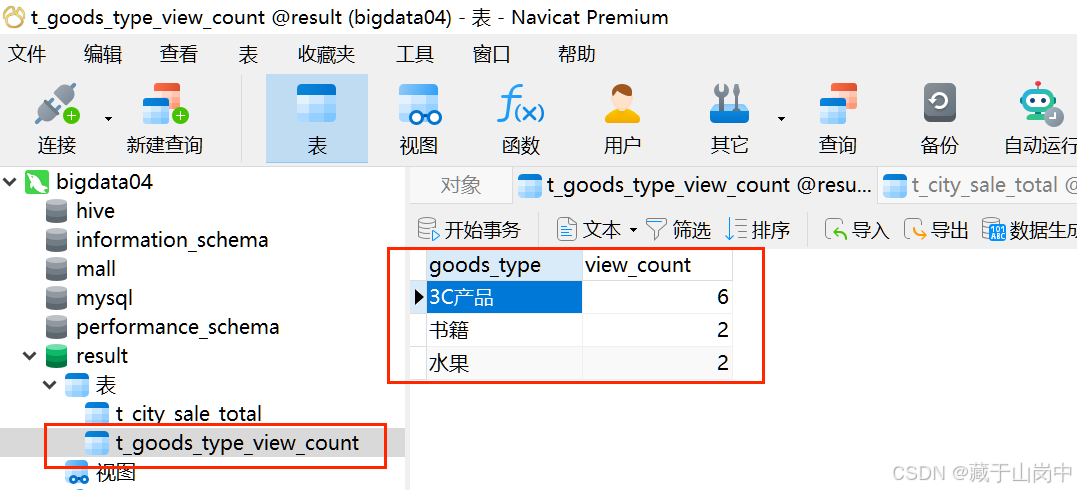

2.4 数据报表导出到MySQL

首先需要将MySQL的字符集修改为utf-8,详见文章Linux中MySQL修改字符集为utf-8

也就是完成“4.再通过sqoop将数据报表导出到mysql”。

hive的默认分隔符是"\001"

cd /opt/softs/sqoop1.4.6/bin

-- sqoop导出数据到mysql

sqoop export \

--connect jdbc:mysql://bigdata04:3306/result \

--username root \

--password cxy20030419 \

--table t_city_sale_total \

--num-mappers 1 \

--export-dir /user/hive/warehouse/mall_bigdata.db/dws_sale_order_city_total \

--input-fields-terminated-by "\001"

sqoop export \

--connect jdbc:mysql://bigdata04:3306/result \

--username root \

--password cxy20030419 \

--table t_goods_type_view_count \

--num-mappers 1 \

--export-dir /user/hive/warehouse/mall_bigdata.db/dws_view_goods_type_count \

--input-fields-terminated-by "\001"

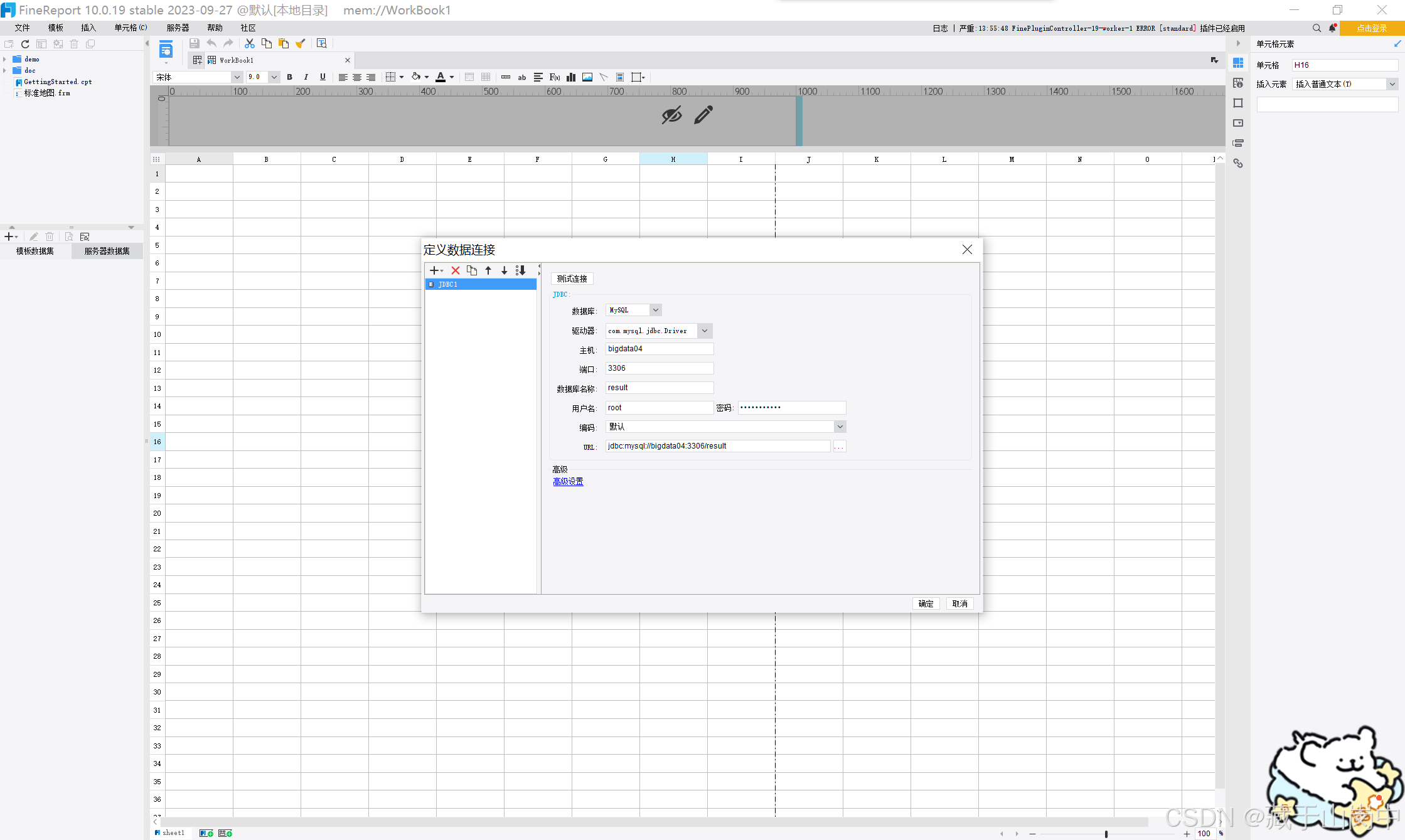

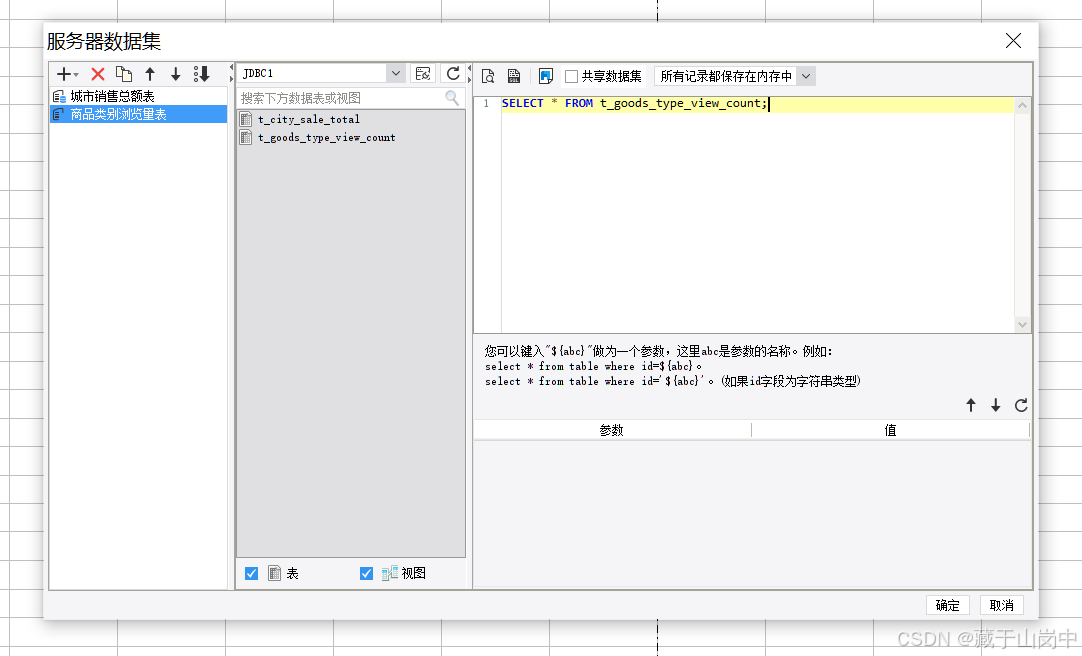

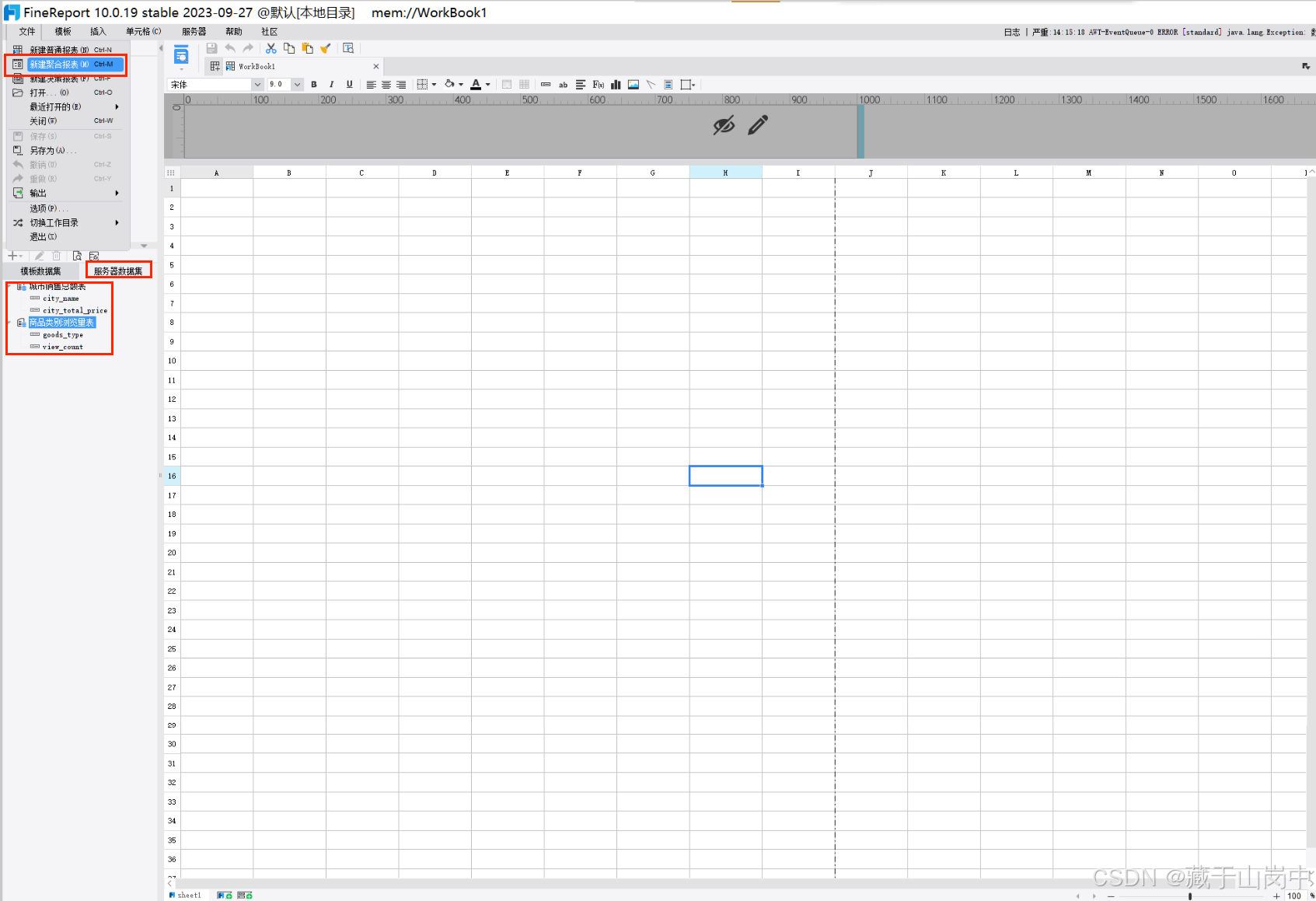

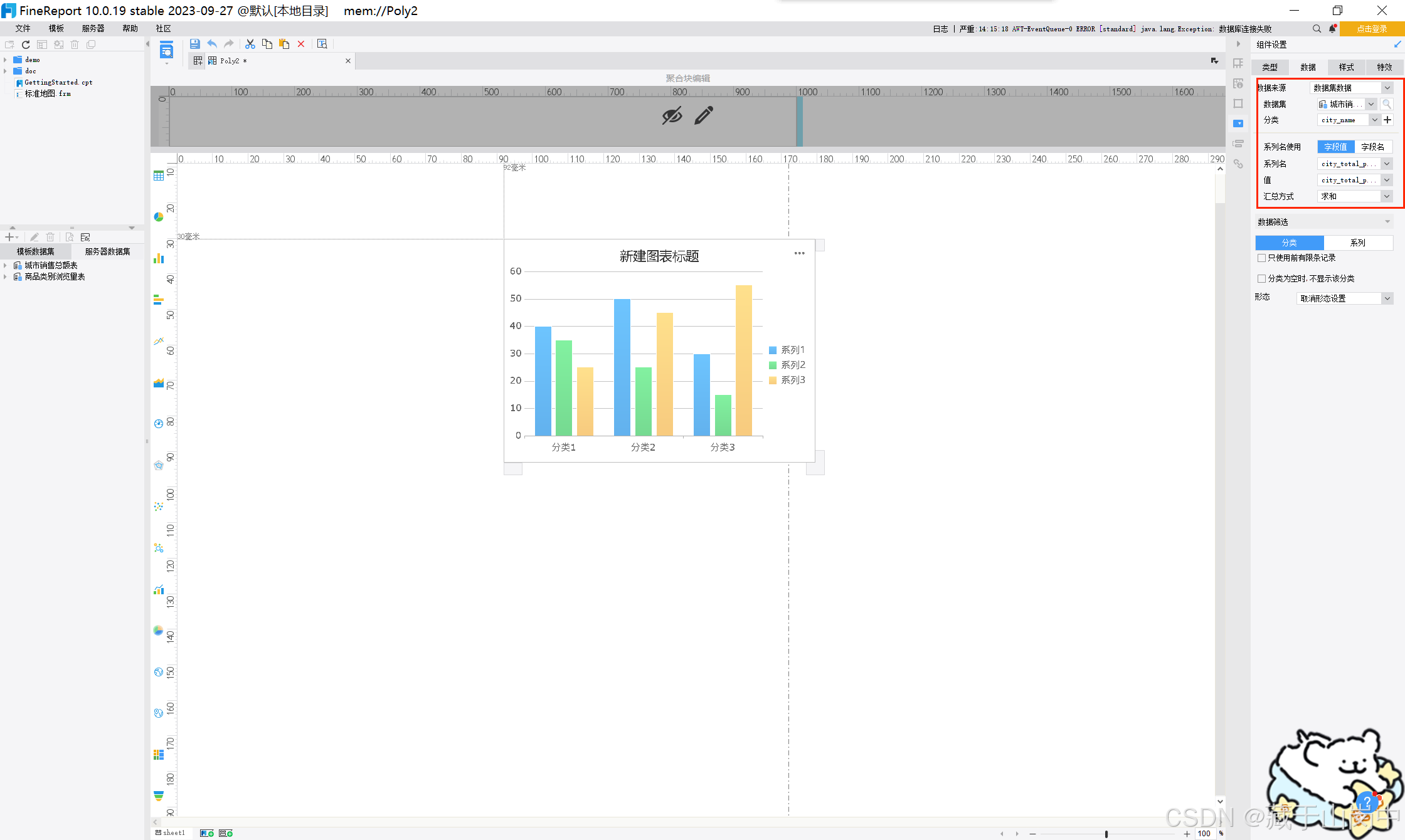

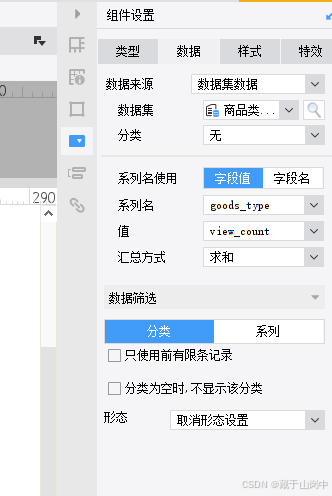

2.5 使用FineReport制作数据报表

先finereport连接MySQL数据库

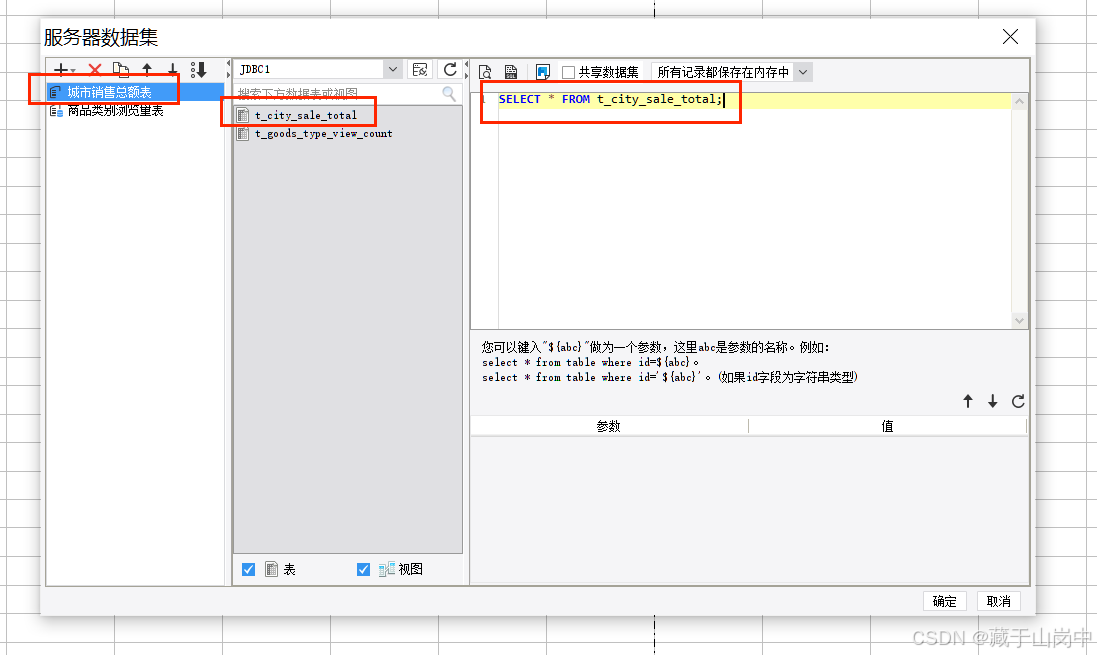

新建聚合报表

同样的方法可以制作商品类别浏览量表:

可以预览之后保存: