秋招面试专栏推荐 :深度学习算法工程师面试问题总结【百面算法工程师】——点击即可跳转

💡💡💡本专栏所有程序均经过测试,可成功执行💡💡💡

专栏目录 :《YOLOv8改进有效涨点》专栏介绍 & 专栏目录 | 目前已有40+篇内容,内含各种Head检测头、损失函数Loss、Backbone、Neck、NMS等创新点改进——点击即可跳转

作为视觉变换器的核心构建块,注意力机制是一种捕获长距离依赖关系的强大工具。然而,这种能力是有代价的:它带来了巨大的计算负担和沉重的内存占用,因为需要计算所有空间位置之间的成对token交互。一系列工作试图通过引入手工制作和与内容无关的稀疏性来缓解这个问题,例如将注意力操作限制在局部窗口、轴向条纹或扩张窗口内。与这些方法相比,为此,研究人员提出了一种新颖的动态稀疏注意力机制,即通过双层路由来实现更灵活的计算分配,并具有内容感知能力。文章在介绍主要的原理后,将手把手教学如何进行模块的代码添加和修改,并将修改后的完整代码放在文章的最后,方便大家一键运行,小白也可轻松上手实践。以帮助您更好地学习深度学习目标检测YOLO系列的挑战。

目录

1.原理

论文地址:BiFormer: Vision Transformer with Bi-Level Routing Attention——点击即可跳转

官方代码:官方代码仓库——点击即可跳转

BiFormer:主要原理和架构

BiFormer 是一种新型视觉转换器,它集成了双层路由注意 (BRA) 机制,以提高各种计算机视觉任务的计算效率和性能。

关键概念

双层路由注意 (BRA):

-

目的:BRA 旨在降低转换器中标准注意机制的计算复杂度。

-

结构:

-

区域到区域路由:将图像划分为区域,路由机制确定要关注的最相关区域。

-

标记到标记注意:在选定区域内,应用标记级注意来模拟细粒度关系。

-

-

效率:这种分层方法实现了 O((HW)^{4/3}) 复杂度,低于 vanilla 注意力的 O((HW)^2) 复杂度,使其对于高分辨率输入更有效。

四阶段金字塔结构:

-

阶段:该模型通过四个阶段处理输入,每个阶段都会降低空间分辨率并增加通道数量。

-

模块:

-

补丁嵌入:将图像转换为基于补丁的表示的初始阶段。

-

补丁合并:后续阶段合并补丁以逐步减少空间维度。

-

BiFormer 块:每个阶段由多个 BiFormer 块组成,其中包括深度卷积、BRA 模块和用于局部和全局特征提取的多层感知器 (MLP)。

-

深度卷积:

-

在每个 BiFormer 块的开头合并,以隐式编码相对位置信息,帮助模型理解空间关系,而无需明确的位置编码。

多层感知器 (MLP):

-

每个 BiFormer 块中的 BRA 模块后面都有一个两层 MLP,便于每个位置的特征嵌入并增强模型的表示能力。

架构设计

-

变体:BiFormer 有三种尺寸:BiFormer-T(微型)、BiFormer-S(小型)和 BiFormer-B(基础),它们在每个阶段使用的通道和块数量上有所不同。

-

配置:

-

每个注意力头有 32 个通道。

-

MLP 扩展比率设置为 3。

-

特定的 top-k 值和区域分区因子在不同阶段有所不同,以优化不同任务(例如分类、分割)的性能。

性能

-

效率:BiFormer 在准确率和计算效率方面优于几种当代模型,在 ImageNet-1K 等基准测试中取得了显著的成果。

-

应用:该模型用途广泛,在图像分类、对象检测和语义分割等任务中均有改进。

总之,BiFormer 利用双层路由注意机制实现性能和计算成本之间的平衡,使其成为各种视觉任务的强大支柱。

2. 将BiFormer 添加到YOLOv8中

2.1 BiFormer 的代码实现

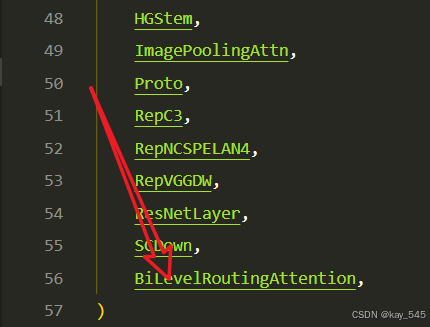

关键步骤一: 将下面代码粘贴到在/ultralytics/ultralytics/nn/modules/block.py中,并在该文件的__all__中添加“BiLevelRoutingAttention”

"""

Bi-Level Routing Attention.

"""

from typing import Tuple, Optional

import torch

import torch.nn as nn

import torch.nn.functional as F

from einops import rearrange

from torch import Tensor, LongTensor

__all__ = ['BiLevelRoutingAttention']

class TopkRouting(nn.Module):

"""

differentiable topk routing with scaling

Args:

qk_dim: int, feature dimension of query and key

topk: int, the 'topk'

qk_scale: int or None, temperature (multiply) of softmax activation

with_param: bool, wether inorporate learnable params in routing unit

diff_routing: bool, wether make routing differentiable

soft_routing: bool, wether make output value multiplied by routing weights

"""

def __init__(self, qk_dim, topk=4, qk_scale=None, param_routing=False, diff_routing=False):

super().__init__()

self.topk = topk

self.qk_dim = qk_dim

self.scale = qk_scale or qk_dim ** -0.5

self.diff_routing = diff_routing

# TODO: norm layer before/after linear?

self.emb = nn.Linear(qk_dim, qk_dim) if param_routing else nn.Identity()

# routing activation

self.routing_act = nn.Softmax(dim=-1)

def forward(self, query: Tensor, key: Tensor) -> Tuple[Tensor]:

"""

Args:

q, k: (n, p^2, c) tensor

Return:

r_weight, topk_index: (n, p^2, topk) tensor

"""

if not self.diff_routing:

query, key = query.detach(), key.detach()

query_hat, key_hat = self.emb(query), self.emb(key) # per-window pooling -> (n, p^2, c)

attn_logit = (query_hat * self.scale) @ key_hat.transpose(-2, -1) # (n, p^2, p^2)

topk_attn_logit, topk_index = torch.topk(attn_logit, k=self.topk, dim=-1) # (n, p^2, k), (n, p^2, k)

r_weight = self.routing_act(topk_attn_logit) # (n, p^2, k)

return r_weight, topk_index

class KVGather(nn.Module):

def __init__(self, mul_weight='none'):

super().__init__()

assert mul_weight in ['none', 'soft', 'hard']

self.mul_weight = mul_weight

def forward(self, r_idx: Tensor, r_weight: Tensor, kv: Tensor):

"""

r_idx: (n, p^2, topk) tensor

r_weight: (n, p^2, topk) tensor

kv: (n, p^2, w^2, c_kq+c_v)

Return:

(n, p^2, topk, w^2, c_kq+c_v) tensor

"""

# select kv according to routing index

n, p2, w2, c_kv = kv.size()

topk = r_idx.size(-1)

# print(r_idx.size(), r_weight.size())

# FIXME: gather consumes much memory (topk times redundancy), write cuda kernel?

topk_kv = torch.gather(kv.view(n, 1, p2, w2, c_kv).expand(-1, p2, -1, -1, -1),

# (n, p^2, p^2, w^2, c_kv) without mem cpy

dim=2,

index=r_idx.view(n, p2, topk, 1, 1).expand(-1, -1, -1, w2, c_kv)

# (n, p^2, k, w^2, c_kv)

)

if self.mul_weight == 'soft':

topk_kv = r_weight.view(n, p2, topk, 1, 1) * topk_kv # (n, p^2, k, w^2, c_kv)

elif self.mul_weight == 'hard':

raise NotImplementedError('differentiable hard routing TBA')

# else: #'none'

# topk_kv = topk_kv # do nothing

return topk_kv

class QKVLinear(nn.Module):

def __init__(self, dim, qk_dim, bias=True):

super().__init__()

self.dim = dim

self.qk_dim = qk_dim

self.qkv = nn.Linear(dim, qk_dim + qk_dim + dim, bias=bias)

def forward(self, x):

q, kv = self.qkv(x).split([self.qk_dim, self.qk_dim + self.dim], dim=-1)

return q, kv

# q, k, v = self.qkv(x).split([self.qk_dim, self.qk_dim, self.dim], dim=-1)

# return q, k, v

class BiLevelRoutingAttention(nn.Module):

"""

n_win: number of windows in one side (so the actual number of windows is n_win*n_win)

kv_per_win: for kv_downsample_mode='ada_xxxpool' only, number of key/values per window. Similar to n_win, the actual number is kv_per_win*kv_per_win.

topk: topk for window filtering

param_attention: 'qkvo'-linear for q,k,v and o, 'none': param free attention

param_routing: extra linear for routing

diff_routing: wether to set routing differentiable

soft_routing: wether to multiply soft routing weights

"""

def __init__(self, dim, n_win=7, num_heads=8, qk_dim=None, qk_scale=None,

kv_per_win=4, kv_downsample_ratio=4, kv_downsample_kernel=None, kv_downsample_mode='identity',

topk=4, param_attention="qkvo", param_routing=False, diff_routing=False, soft_routing=False,

side_dwconv=3,

auto_pad=True):

super().__init__()

# local attention setting

self.dim = dim

self.n_win = n_win # Wh, Ww

self.num_heads = num_heads

self.qk_dim = qk_dim or dim

assert self.qk_dim % num_heads == 0 and self.dim % num_heads == 0, 'qk_dim and dim must be divisible by num_heads!'

self.scale = qk_scale or self.qk_dim ** -0.5

################side_dwconv (i.e. LCE in ShuntedTransformer)###########

self.lepe = nn.Conv2d(dim, dim, kernel_size=side_dwconv, stride=1, padding=side_dwconv // 2,

groups=dim) if side_dwconv > 0 else \

lambda x: torch.zeros_like(x)

################ global routing setting #################

self.topk = topk

self.param_routing = param_routing

self.diff_routing = diff_routing

self.soft_routing = soft_routing

# router

assert not (self.param_routing and not self.diff_routing) # cannot be with_param=True and diff_routing=False

self.router = TopkRouting(qk_dim=self.qk_dim,

qk_scale=self.scale,

topk=self.topk,

diff_routing=self.diff_routing,

param_routing=self.param_routing)

if self.soft_routing: # soft routing, always diffrentiable (if no detach)

mul_weight = 'soft'

elif self.diff_routing: # hard differentiable routing

mul_weight = 'hard'

else: # hard non-differentiable routing

mul_weight = 'none'

self.kv_gather = KVGather(mul_weight=mul_weight)

# qkv mapping (shared by both global routing and local attention)

self.param_attention = param_attention

if self.param_attention == 'qkvo':

self.qkv = QKVLinear(self.dim, self.qk_dim)

self.wo = nn.Linear(dim, dim)

elif self.param_attention == 'qkv':

self.qkv = QKVLinear(self.dim, self.qk_dim)

self.wo = nn.Identity()

else:

raise ValueError(f'param_attention mode {self.param_attention} is not surpported!')

self.kv_downsample_mode = kv_downsample_mode

self.kv_per_win = kv_per_win

self.kv_downsample_ratio = kv_downsample_ratio

self.kv_downsample_kenel = kv_downsample_kernel

if self.kv_downsample_mode == 'ada_avgpool':

assert self.kv_per_win is not None

self.kv_down = nn.AdaptiveAvgPool2d(self.kv_per_win)

elif self.kv_downsample_mode == 'ada_maxpool':

assert self.kv_per_win is not None

self.kv_down = nn.AdaptiveMaxPool2d(self.kv_per_win)

elif self.kv_downsample_mode == 'maxpool':

assert self.kv_downsample_ratio is not None

self.kv_down = nn.MaxPool2d(self.kv_downsample_ratio) if self.kv_downsample_ratio > 1 else nn.Identity()

elif self.kv_downsample_mode == 'avgpool':

assert self.kv_downsample_ratio is not None

self.kv_down = nn.AvgPool2d(self.kv_downsample_ratio) if self.kv_downsample_ratio > 1 else nn.Identity()

elif self.kv_downsample_mode == 'identity': # no kv downsampling

self.kv_down = nn.Identity()

elif self.kv_downsample_mode == 'fracpool':

# assert self.kv_downsample_ratio is not None

# assert self.kv_downsample_kenel is not None

# TODO: fracpool

# 1. kernel size should be input size dependent

# 2. there is a random factor, need to avoid independent sampling for k and v

raise NotImplementedError('fracpool policy is not implemented yet!')

elif kv_downsample_mode == 'conv':

# TODO: need to consider the case where k != v so that need two downsample modules

raise NotImplementedError('conv policy is not implemented yet!')

else:

raise ValueError(f'kv_down_sample_mode {self.kv_downsaple_mode} is not surpported!')

# softmax for local attention

self.attn_act = nn.Softmax(dim=-1)

self.auto_pad = auto_pad

def forward(self, x, ret_attn_mask=False):

"""

x: NHWC tensor

Return:

NHWC tensor

"""

x = rearrange(x, "n c h w -> n h w c")

# NOTE: use padding for semantic segmentation

###################################################

if self.auto_pad:

N, H_in, W_in, C = x.size()

pad_l = pad_t = 0

pad_r = (self.n_win - W_in % self.n_win) % self.n_win

pad_b = (self.n_win - H_in % self.n_win) % self.n_win

x = F.pad(x, (0, 0, # dim=-1

pad_l, pad_r, # dim=-2

pad_t, pad_b)) # dim=-3

_, H, W, _ = x.size() # padded size

else:

N, H, W, C = x.size()

assert H % self.n_win == 0 and W % self.n_win == 0 #

###################################################

# patchify, (n, p^2, w, w, c), keep 2d window as we need 2d pooling to reduce kv size

x = rearrange(x, "n (j h) (i w) c -> n (j i) h w c", j=self.n_win, i=self.n_win)

#################qkv projection###################

# q: (n, p^2, w, w, c_qk)

# kv: (n, p^2, w, w, c_qk+c_v)

# NOTE: separte kv if there were memory leak issue caused by gather

q, kv = self.qkv(x)

# pixel-wise qkv

# q_pix: (n, p^2, w^2, c_qk)

# kv_pix: (n, p^2, h_kv*w_kv, c_qk+c_v)

q_pix = rearrange(q, 'n p2 h w c -> n p2 (h w) c')

kv_pix = self.kv_down(rearrange(kv, 'n p2 h w c -> (n p2) c h w'))

kv_pix = rearrange(kv_pix, '(n j i) c h w -> n (j i) (h w) c', j=self.n_win, i=self.n_win)

q_win, k_win = q.mean([2, 3]), kv[..., 0:self.qk_dim].mean(

[2, 3]) # window-wise qk, (n, p^2, c_qk), (n, p^2, c_qk)

##################side_dwconv(lepe)##################

# NOTE: call contiguous to avoid gradient warning when using ddp

lepe = self.lepe(rearrange(kv[..., self.qk_dim:], 'n (j i) h w c -> n c (j h) (i w)', j=self.n_win,

i=self.n_win).contiguous())

lepe = rearrange(lepe, 'n c (j h) (i w) -> n (j h) (i w) c', j=self.n_win, i=self.n_win)

############ gather q dependent k/v #################

r_weight, r_idx = self.router(q_win, k_win) # both are (n, p^2, topk) tensors

kv_pix_sel = self.kv_gather(r_idx=r_idx, r_weight=r_weight, kv=kv_pix) # (n, p^2, topk, h_kv*w_kv, c_qk+c_v)

k_pix_sel, v_pix_sel = kv_pix_sel.split([self.qk_dim, self.dim], dim=-1)

# kv_pix_sel: (n, p^2, topk, h_kv*w_kv, c_qk)

# v_pix_sel: (n, p^2, topk, h_kv*w_kv, c_v)

######### do attention as normal ####################

k_pix_sel = rearrange(k_pix_sel, 'n p2 k w2 (m c) -> (n p2) m c (k w2)',

m=self.num_heads) # flatten to BMLC, (n*p^2, m, topk*h_kv*w_kv, c_kq//m) transpose here?

v_pix_sel = rearrange(v_pix_sel, 'n p2 k w2 (m c) -> (n p2) m (k w2) c',

m=self.num_heads) # flatten to BMLC, (n*p^2, m, topk*h_kv*w_kv, c_v//m)

q_pix = rearrange(q_pix, 'n p2 w2 (m c) -> (n p2) m w2 c',

m=self.num_heads) # to BMLC tensor (n*p^2, m, w^2, c_qk//m)

# param-free multihead attention

attn_weight = (

q_pix * self.scale) @ k_pix_sel # (n*p^2, m, w^2, c) @ (n*p^2, m, c, topk*h_kv*w_kv) -> (n*p^2, m, w^2, topk*h_kv*w_kv)

attn_weight = self.attn_act(attn_weight)

out = attn_weight @ v_pix_sel # (n*p^2, m, w^2, topk*h_kv*w_kv) @ (n*p^2, m, topk*h_kv*w_kv, c) -> (n*p^2, m, w^2, c)

out = rearrange(out, '(n j i) m (h w) c -> n (j h) (i w) (m c)', j=self.n_win, i=self.n_win,

h=H // self.n_win, w=W // self.n_win)

out = out + lepe

# output linear

out = self.wo(out)

# NOTE: use padding for semantic segmentation

# crop padded region

if self.auto_pad and (pad_r > 0 or pad_b > 0):

out = out[:, :H_in, :W_in, :].contiguous()

if ret_attn_mask:

return out, r_weight, r_idx, attn_weight

else:

return rearrange(out, "n h w c -> n c h w")双层路由注意 (BRA) 是一种机制,旨在通过在应用细粒度的 token-to-token 注意之前在粗区域级别动态过滤掉不相关的键值对,从而提高视觉转换器的效率和性能。以下是 BRA 如何处理图像的详细说明:

区域级过滤:

-

该过程从构建区域级亲和力图开始。此图捕获图像不同区域之间的关系。

-

对于每个查询区域,该方法会过滤掉最不相关的键值对,仅保留前 k 个最相关的区域。通过仅关注图像中最相关的部分,这减少了所需的计算次数。

Token 收集:

-

一旦确定了相关区域,下一步就是从这些区域收集键值 token。这涉及选择在空间上分散在图像上的 token。

-

BRA 不会执行稀疏矩阵乘法(这在 GPU 上效率低下),而是将这些标记聚集到密集矩阵中,从而实现硬件友好的密集矩阵乘法。

细粒度标记到标记注意力:

-

收集相关标记后,BRA 在选定区域内应用标记到标记注意力。此步骤是实际注意力机制计算值的权重和加权总和的地方。

-

这可确保每个查询都关注语义上最相关的键值对,而不会被无关信息分散注意力。

整体流程:

-

两级方法(区域级过滤,然后是标记级注意力)使 BRA 能够实现动态和查询感知的稀疏性。这意味着注意力机制可以适应图像的内容,从而提供更灵活、更高效的计算。

-

通过关注较小的相关标记子集,BRA 减少了视觉转换器的计算负担和内存占用。

BiFormer 中的实现:

-

BRA 被用作名为 BiFormer 的新视觉转换器架构的核心构建块。

-

BiFormer 受益于 BRA 的效率,使其适用于各种计算机视觉任务,如图像分类、对象检测和语义分割。

-

该设计确保 BiFormer 能够通过以内容感知的方式仅关注最相关的标记来有效地处理密集预测任务。

区域级路由和标记级注意力相结合使 BRA 能够平衡计算效率和性能,使其成为视觉转换器的强大工具。

2.2 更改init.py文件

关键步骤二:修改modules文件夹下的__init__.py文件,先导入函数

然后在下面的__all__中声明函数

2.3 添加yaml文件

关键步骤三:在/ultralytics/ultralytics/cfg/models/v8下面新建文件yolov8_BiFormer.yaml文件,粘贴下面的内容

# Ultralytics YOLO 🚀, AGPL-3.0 license

# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'

# [depth, width, max_channels]

n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs

s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs

m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs

l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs

x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone

backbone:

# [from, repeats, module, args]

- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

- [-1, 3, C2f, [128, True]]

- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

- [-1, 6, C2f, [256, True]]

- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

- [-1, 6, C2f, [512, True]]

- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

- [-1, 3, C2f, [1024, True]]

- [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head

head:

- [-1, 1, nn.Upsample, [None, 2, 'nearest']]

- [[-1, 6], 1, Concat, [1]] # cat backbone P4

- [-1, 3, C2f, [512]] # 12

- [-1, 1, nn.Upsample, [None, 2, 'nearest']]

- [[-1, 4], 1, Concat, [1]] # cat backbone P3

- [-1, 1, BiLevelRoutingAttention, []] # 15 (P3/8-small)

- [-1, 3, C2f, [256]] # 16 (P3/8-small)

- [-1, 1, Conv, [256, 3, 2]]

- [[-1, 12], 1, Concat, [1]] # cat head P4

- [-1, 1, BiLevelRoutingAttention, []] # 15 (P3/8-small)

- [-1, 3, C2f, [512]] # 20 (P4/16-medium)

- [-1, 1, Conv, [512, 3, 2]]

- [[-1, 9], 1, Concat, [1]] # cat head P5

- [-1, 1, BiLevelRoutingAttention, []] # 15 (P3/8-small)

- [-1, 3, C2f, [1024]] # 24 (P5/32-large)

- [[16, 20, 24], 1, Detect, [nc]] # Detect(P3, P4, P5)温馨提示:因为本文只是对yolov8基础上添加模块,如果要对yolov8n/l/m/x进行添加则只需要指定对应的depth_multiple 和 width_multiple。

# YOLOv8n

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.25 # layer channel multiple

max_channels: 1024 # max_channels

# YOLOv8s

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

max_channels: 1024 # max_channels

# YOLOv8l

depth_multiple: 1.0 # model depth multiple

width_multiple: 1.0 # layer channel multiple

max_channels: 512 # max_channels

# YOLOv8m

depth_multiple: 0.67 # model depth multiple

width_multiple: 0.75 # layer channel multiple

max_channels: 768 # max_channels

# YOLOv8x

depth_multiple: 1.33 # model depth multiple

width_multiple: 1.25 # layer channel multiple

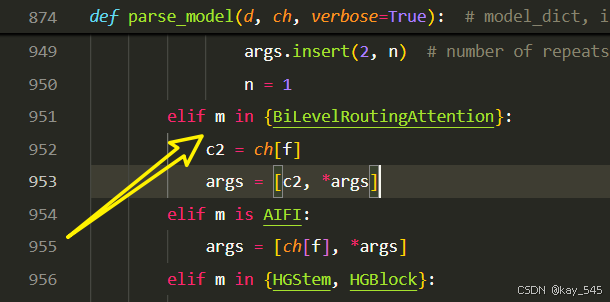

max_channels: 512 # max_channels2.4 在task.py中进行注册

关键步骤四:在task.py的parse_model函数中进行注册,

elif m in {BiLevelRoutingAttention}:

c2 = ch[f]

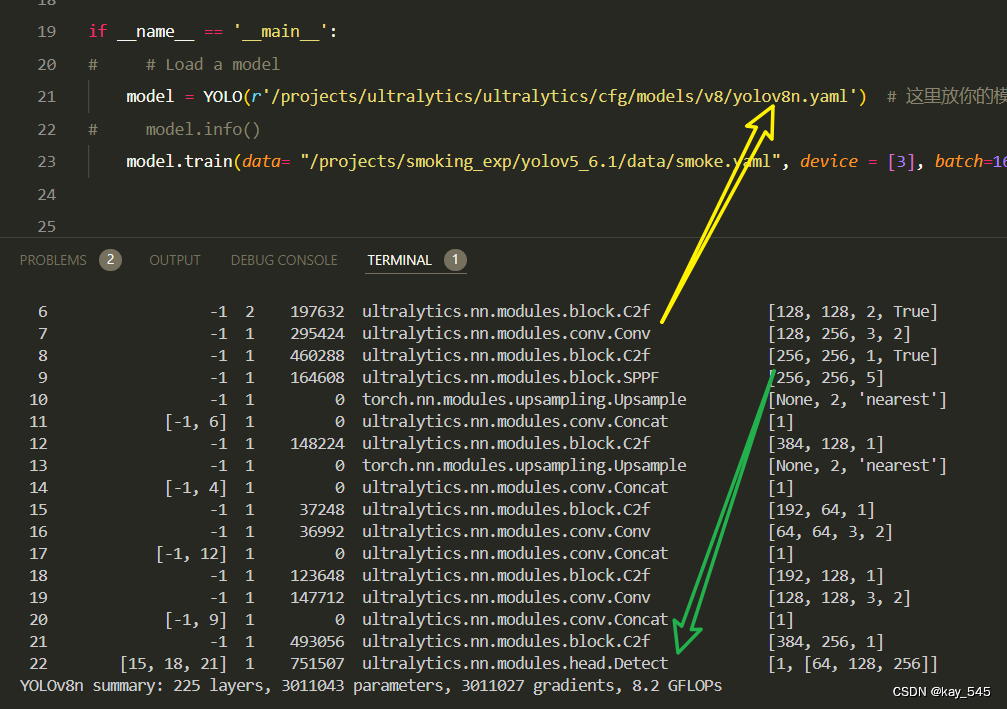

args = [c2, *args]2.5 执行程序

关键步骤五:在ultralytics文件中新建train.py,将model的参数路径设置为yolov8_PA.yaml的路径即可

from ultralytics import YOLO

# Load a model

# model = YOLO('yolov8n.yaml') # build a new model from YAML

# model = YOLO('yolov8n.pt') # load a pretrained model (recommended for training)

model = YOLO(r'/projects/ultralytics/ultralytics/cfg/models/v8/yolov8_BiFormer.yaml') # build from YAML and transfer weights

# Train the model

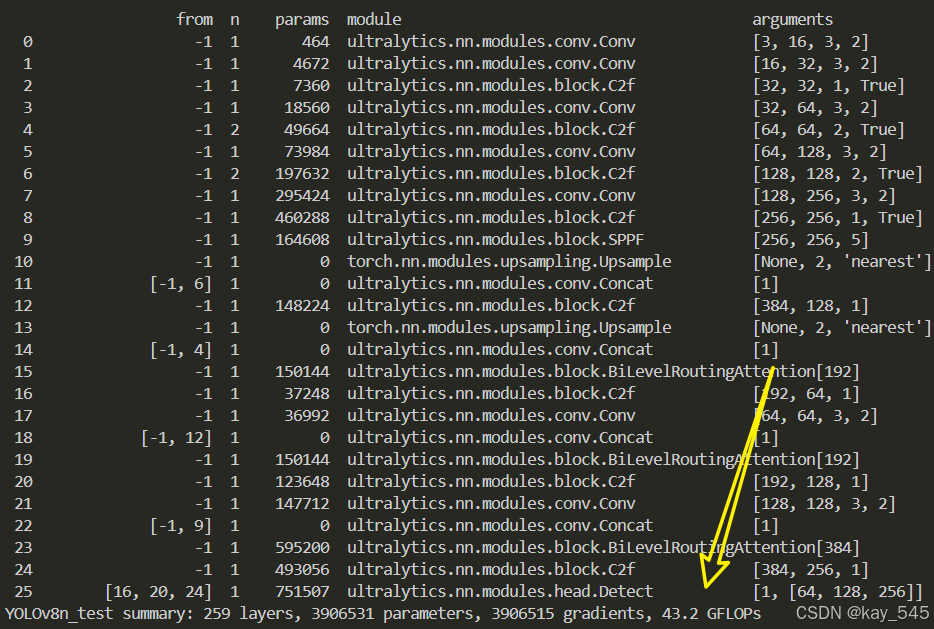

model.train(batch=16)🚀运行程序,如果出现下面的内容则说明添加成功🚀

3. 完整代码分享

https://pan.baidu.com/s/1K_LuanI17gTpTSxrMBwueg?pwd=hp8v提取码:hp8v

4. GFLOPs

关于GFLOPs的计算方式可以查看:百面算法工程师 | 卷积基础知识——Convolution

未改进的YOLOv8nGFLOPs

改进后的GFLOPs

5. 进阶

可以结合损失函数或者卷积模块进行多重改进

6. 总结

BiFormer 是一种新的视觉 Transformer 架构,其核心构建块是双级路由注意力机制 (Bi-Level Routing Attention, BRA)。这种机制首先通过构建区域级亲和图,筛选出每个查询区域中最不相关的键值对,仅保留前 k 个最相关的区域,从而减少计算量。然后,通过将这些相关区域的键值令牌聚集到密集矩阵中,利用 GPU 友好的密集矩阵乘法进行高效计算。接着,在选定区域内应用细粒度的令牌到令牌的注意力机制,从而使每个查询仅关注最语义相关的键值对。通过这种方式,BiFormer 实现了动态且查询感知的稀疏性,使得注意力机制能够根据图像内容进行灵活分配计算资源。最终,BiFormer 在处理图像分类、目标检测和语义分割等任务时,既能保持较高的性能,又能大幅提高计算效率。