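Overview

1) Ceph cluster: Nautilus release, monitors (mon) at 192.168.39.19-192.168.39.21

2) Kubernetes cluster: v1.14.2

3) Integration method: dynamic provisioning through a StorageClass

4) The ceph-common package is installed on every node of the Kubernetes cluster.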
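The ceph-common requirement exists because the PVs created later are of type `spec.rbd`, which kubelet's in-tree RBD volume plugin maps on the node by running the `rbd` command. A minimal sketch for confirming the client is present on a node, assuming bash; the function name `has_rbd_client` is hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: succeed only if the rbd CLI (shipped in ceph-common)
# is available on this node, and print its version string.
has_rbd_client() {
  if ! command -v rbd >/dev/null 2>&1; then
    echo "rbd not found: install ceph-common on this node" >&2
    return 1
  fi
  rbd --version
}
```

Run `has_rbd_client` on each node (for example over ssh) before continuing.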

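Step 1: In the Ceph cluster, create a pool for RBD block devices and a regular (non-admin) user

    # Create the pool with pg_num = pgp_num = 16 and tag it for RBD use
    ceph osd pool create ceph-demo 16 16
    ceph osd pool application enable ceph-demo rbd
    # 'profile rbd' caps; OSD and mgr access is restricted to the ceph-demo pool
    ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=ceph-demo' mgr 'profile rbd pool=ceph-demo'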
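To check the result of Step 1 and to obtain the two keys that Step 2 stores in Secrets, the sketch below could be run on a Ceph node that holds the client.admin keyring. `ceph auth get-key` prints a user's bare key without the keyring text around it; the function name `show_step1_result` is hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: run on a node that holds the client.admin keyring.
show_step1_result() {
  local pool="${1:-ceph-demo}"
  # Should list the "rbd" application enabled on the pool.
  ceph osd pool application get "$pool"
  # Shows the key and the mon/osd/mgr caps granted to client.kubernetes.
  ceph auth get client.kubernetes
  # Bare keys for the ceph-admin and ceph-kubernetes Secrets.
  echo "admin key:      $(ceph auth get-key client.admin)"
  echo "kubernetes key: $(ceph auth get-key client.kubernetes)"
}
```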

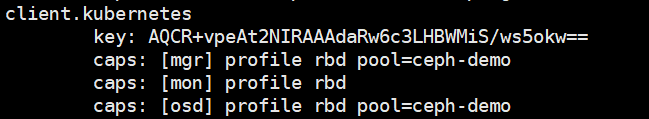

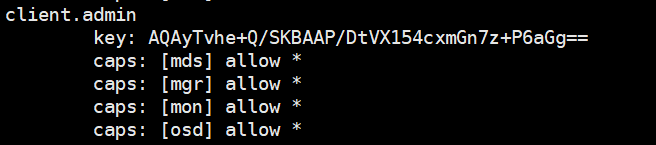

At this point the Ceph cluster has both the admin user and the kubernetes user, as shown in the figures above.

Step 2: Create Secrets in Kubernetes that hold the Ceph users' keys

    # Secret holding the key of the Ceph admin user
    kubectl create secret generic ceph-admin --type="kubernetes.io/rbd" --from-literal=key=AQAyTvhe+Q/SKBAAP/DtVX154cxmGn7z+P6aGg== --namespace=kube-system
    # Secret holding the key of the Ceph kubernetes user
    kubectl create secret generic ceph-kubernetes --type="kubernetes.io/rbd" --from-literal=key=AQCR+vpeAt2NIRAAAdaRw6c3LHBWMiS/ws5okw== --namespace=kube-system
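Pasting keys by hand is error-prone. If one host has both `kubectl` access and a Ceph admin keyring, the same two Secrets could instead be created straight from `ceph auth get-key` and read back for comparison. A sketch under that assumption; `create_ceph_secret` and `show_secret_key` are hypothetical names:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: create a kubernetes.io/rbd Secret in kube-system whose
# "key" entry is the key of Ceph user client.<user>.
create_ceph_secret() {
  local name="$1" user="$2" key
  key="$(ceph auth get-key "client.${user}")"
  kubectl create secret generic "$name" \
    --type="kubernetes.io/rbd" \
    --from-literal=key="$key" \
    --namespace=kube-system
}

# Decode the stored key so it can be compared with the Ceph side.
show_secret_key() {
  kubectl -n kube-system get secret "$1" -o jsonpath='{.data.key}' | base64 -d
  echo
}
```

For example, `create_ceph_secret ceph-admin admin` and `create_ceph_secret ceph-kubernetes kubernetes` correspond to the two commands above.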

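Step 3: Deploy the RBD provisioner in Kubernetes

The rbd-provisioner from the external-storage repository is an external (out-of-tree) dynamic provisioner, not a CSI driver.

    git clone https://github.com/kubernetes-incubator/external-storage.git
    cd external-storage/ceph/rbd/deploy
    export NAMESPACE=kube-system
    # Point the RBAC bindings at the target namespace, then apply everything in ./rbac
    sed -r -i "s/namespace: [^ ]+/namespace: $NAMESPACE/g" ./rbac/clusterrolebinding.yaml ./rbac/rolebinding.yaml
    kubectl -n $NAMESPACE apply -f ./rbac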
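Two quick checks may help here: that the `sed` above rewrote every `namespace:` line in the two binding files, and that the provisioner pod is running. A sketch; the function names are hypothetical, and the deployment name `rbd-provisioner` and pod label `app=rbd-provisioner` are assumptions to verify against `./rbac/deployment.yaml` in the cloned repository:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: succeed only if every "namespace:" line in the two RBAC
# binding files now reads "namespace: <ns>".
rbac_namespace_ok() {
  local dir="$1" ns="$2" f line
  for f in "$dir/clusterrolebinding.yaml" "$dir/rolebinding.yaml"; do
    [[ -f "$f" ]] || { echo "missing $f" >&2; return 1; }
  done
  while IFS= read -r line; do
    [[ "$line" == *"namespace: ${ns}" ]] || return 1
  done < <(grep -h 'namespace: ' "$dir/clusterrolebinding.yaml" "$dir/rolebinding.yaml")
}

# Assumed names: deployment rbd-provisioner, pod label app=rbd-provisioner.
show_rbd_provisioner() {
  local ns="$1"
  kubectl -n "$ns" get deployment rbd-provisioner
  kubectl -n "$ns" get pods -l app=rbd-provisioner -o wide
}
```

From the deploy directory, `rbac_namespace_ok ./rbac "$NAMESPACE" && show_rbd_provisioner "$NAMESPACE"` runs both checks.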

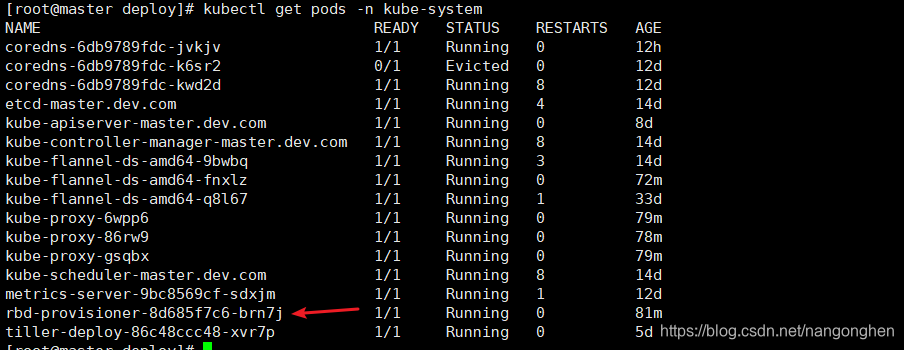

The provisioner was deployed successfully, as shown in the figure above.

Step 4: Create the StorageClass in Kubernetes

    [root@master deploy]# cat storageclass-ceph-rbd.yaml
    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: ceph-rbd
    provisioner: ceph.com/rbd
    parameters:
      monitors: 192.168.39.19:6789,192.168.39.20:6789,192.168.39.21:6789
      adminId: admin
      adminSecretName: ceph-admin
      adminSecretNamespace: kube-system
      # userId becomes spec.rbd.user of the PVs the provisioner creates
      userId: kubernetes
      userSecretName: ceph-kubernetes
      userSecretNamespace: kube-system
      pool: ceph-demo
      fsType: xfs
      imageFormat: "2"
      imageFeatures: "layering"
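The StorageClass still has to be created with `kubectl apply`. Because both the provisioner and the nodes connect to the monitors listed in `monitors`, probing each `host:port` from a node can rule out network problems early. A sketch, assuming bash's `/dev/tcp` redirection and coreutils `timeout` are available; the function names are hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

MONITORS="192.168.39.19:6789,192.168.39.20:6789,192.168.39.21:6789"

# Print each host:port of a comma-separated monitors string on its own line.
split_monitors() {
  tr ',' '\n' <<< "$1"
}

# Try a TCP connection to every monitor, giving up on each after 3 seconds.
check_monitors() {
  local mon
  while IFS= read -r mon; do
    if timeout 3 bash -c "exec 3<>/dev/tcp/${mon%:*}/${mon##*:}" 2>/dev/null; then
      echo "reachable:   $mon"
    else
      echo "unreachable: $mon"
    fi
  done < <(split_monitors "$1")
}

# Create the StorageClass from the file shown above and list it.
apply_storageclass() {
  kubectl apply -f storageclass-ceph-rbd.yaml
  kubectl get storageclass ceph-rbd
}
```

Run `check_monitors "$MONITORS"` on a node, then `apply_storageclass` from the deploy directory.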

Step 5: Create a PVC that uses the StorageClass

    [root@master deploy]# cat ceph-rbd-pvc-test.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: ceph-rbd-claim
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: ceph-rbd
      resources:
        requests:
          storage: 32Mi
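After `kubectl apply -f ceph-rbd-pvc-test.yaml`, the claim should move from `Pending` to `Bound` once the provisioner has created the RBD image and a matching PV. A polling sketch; the function name is hypothetical, and 30 attempts 2 seconds apart are arbitrary defaults:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: poll a PVC until status.phase is Bound, then print its PV.
wait_pvc_bound() {
  local pvc="$1" tries="${2:-30}" phase="" i
  for ((i = 0; i < tries; i++)); do
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
    if [[ "$phase" == "Bound" ]]; then
      echo "$pvc is Bound to $(kubectl get pvc "$pvc" -o jsonpath='{.spec.volumeName}')"
      return 0
    fi
    sleep 2
  done
  echo "$pvc is still ${phase:-unknown}; see: kubectl describe pvc $pvc" >&2
  return 1
}
```

Usage: `kubectl apply -f ceph-rbd-pvc-test.yaml && wait_pvc_bound ceph-rbd-claim`.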

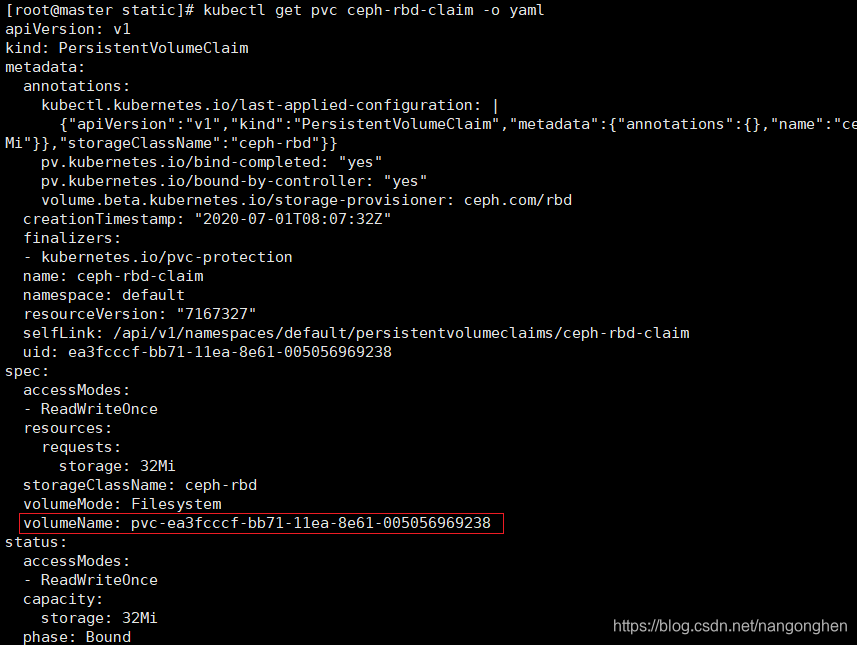

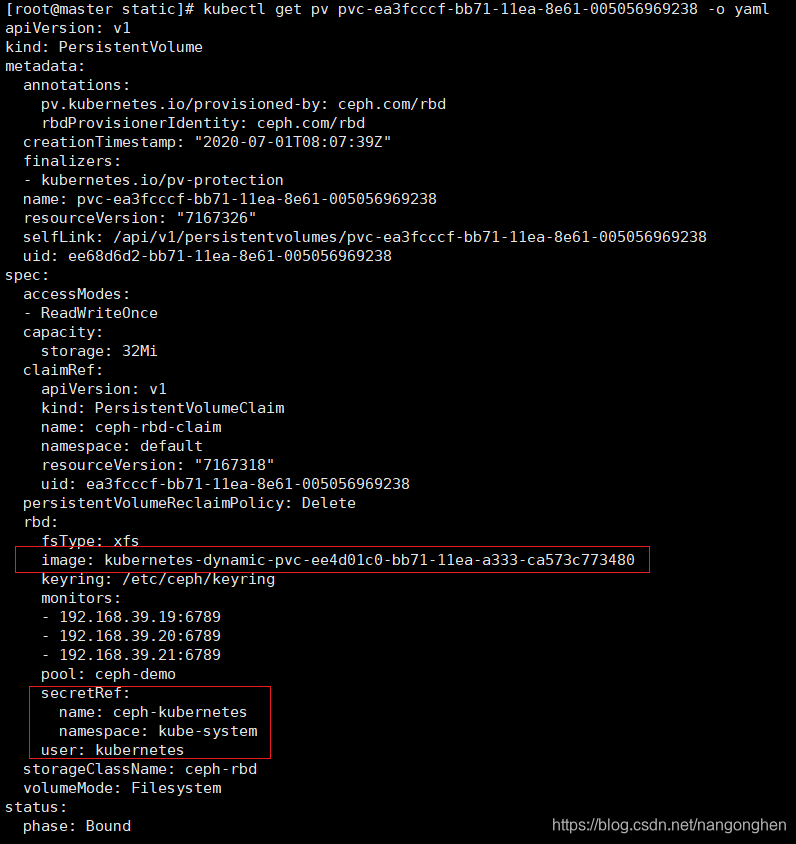

The PVC and PV details at this point are shown in the figures above.

Step 6: Create a Pod that uses the PVC

    [root@master deploy]# cat ceph-busybox-pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: ceph-rbd-busybox
    spec:
      containers:
      - name: ceph-busybox
        image: busybox:1.27
        command: ["sleep", "60000"]
        volumeMounts:
        - name: ceph-vol1
          mountPath: /usr/share/busybox
          readOnly: false
      volumes:
      - name: ceph-vol1
        persistentVolumeClaim:
          claimName: ceph-rbd-claim
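The Pod is then created from this file. Because kubelet must map the RBD image, format it (xfs, on first use) and mount it before the container starts, waiting on the Ready condition avoids checking too early. A sketch using `kubectl wait`, which is available in v1.14; the function name and the 120 s timeout are arbitrary choices:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: create the busybox Pod and block until it is Ready.
start_busybox_pod() {
  kubectl apply -f ceph-busybox-pod.yaml
  kubectl wait --for=condition=Ready pod/ceph-rbd-busybox --timeout=120s
}
```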

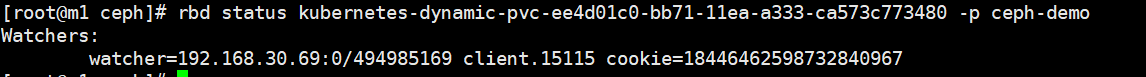

Step 7: Check the Pod's mount and the Ceph RBD image

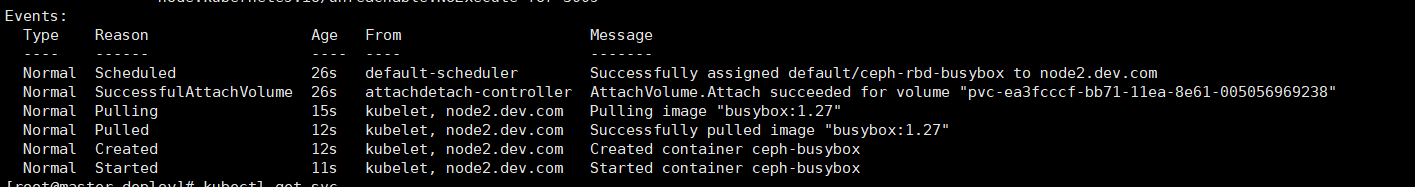

The Pod started correctly; its events are shown in the figure above.
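The same events can be listed from the command line. If mapping or mounting the RBD image failed, it would typically show up here as a `Warning` event such as `FailedMount`. A sketch; the function name is hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: list a Pod's events, oldest first.
pod_events() {
  local pod="${1:-ceph-rbd-busybox}"
  kubectl get events \
    --field-selector "involvedObject.kind=Pod,involvedObject.name=${pod}" \
    --sort-by=.lastTimestamp
}
```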

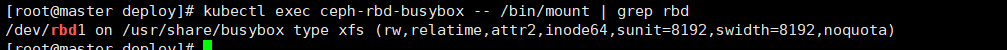

The RBD block device is mounted correctly in the Pod, as confirmed by running the mount command inside it (figure above).
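The check can also be scripted without an interactive shell. Inside the container, the mapped device (typically `/dev/rbdN`) should appear mounted on `/usr/share/busybox`. A sketch that relies on busybox's `sh`, `mount`, `grep` and `df`; the function name is hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: show the filesystem backing the PVC mount inside the Pod.
show_pod_mount() {
  local pod="${1:-ceph-rbd-busybox}" path="${2:-/usr/share/busybox}"
  kubectl exec "$pod" -- sh -c "mount | grep ' $path ' && df -h $path"
}
```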

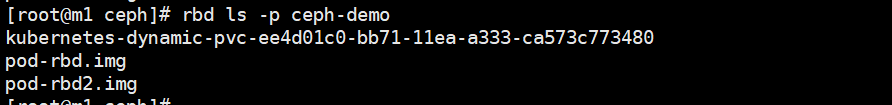

The RBD image created by the provisioner exists in the ceph-demo pool of the Ceph cluster, as shown in the figure above.
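To tie that image back to the claim, the PV name can be read from the PVC and the image name from the PV's `spec.rbd.image` field, then inspected with `rbd info`. A sketch, assuming it runs where both `kubectl` and a Ceph keyring are available; the function name is hypothetical:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: resolve PVC -> PV -> RBD image and show the image details.
show_rbd_image() {
  local pvc="${1:-ceph-rbd-claim}" pool="${2:-ceph-demo}" pv image
  pv="$(kubectl get pvc "$pvc" -o jsonpath='{.spec.volumeName}')"
  image="$(kubectl get pv "$pv" -o jsonpath='{.spec.rbd.image}')"
  rbd ls -p "$pool"
  rbd info "${pool}/${image}"
}
```

The `format` and `features` lines of `rbd info` should read `2` and `layering`, matching `imageFormat` and `imageFeatures` in the StorageClass.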