1. Install and configure NFS

The master node at 192.168.85.100 serves as the NFS server.

在master节点上执行

yum安装nfs-utils

yum install -y nfs-utils创建exports文件,* 表示暴露权限给所有主机;* 也可以使用 192.168.0.0/16 代替,表示暴露给所有子网内主机

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports创建nfs共享目录

mkdir -pv /nfs/data/赋予访问权限

chmod 777 -R /nfs/data/启动nfs服务端,并设置开启自启

systemctl start nfs-serversystemctl start rpcbindsystemctl enable nfs-serversystemctl enable rpcbind加载nfs配置

exportfs -r检查是否生效

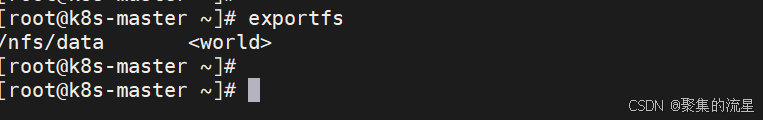

exportfs
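The exports step above mentions that * can be swapped for a subnet. As a minimal sketch of that variation, the helper below builds a single /etc/exports entry from a directory, a client specification, and an option list. The function name make_export_line is hypothetical (it is not part of nfs-utils), and the default options are simply the ones used in this article.

```shell
#!/bin/sh
# Hypothetical helper (not part of nfs-utils): print one /etc/exports entry.
#   $1 = exported directory
#   $2 = allowed clients, e.g. '*' or a subnet such as 192.168.0.0/16
#   $3 = export options (defaults to the options used in this article)
make_export_line() {
    dir=$1
    clients=$2
    opts=${3:-insecure,rw,sync,no_root_squash}
    printf '%s %s(%s)\n' "$dir" "$clients" "$opts"
}

# Preview the entry for a subnet-restricted export.
# prints: /nfs/data/ 192.168.0.0/16(insecure,rw,sync,no_root_squash)
make_export_line /nfs/data/ 192.168.0.0/16
```

To apply it, the output could be redirected to /etc/exports and followed by exportfs -r, exactly as in the steps above. Restricting the client list is generally the safer choice, since no_root_squash lets root on any permitted client act as root on the export.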

在其他Node节点安装nfs-utils

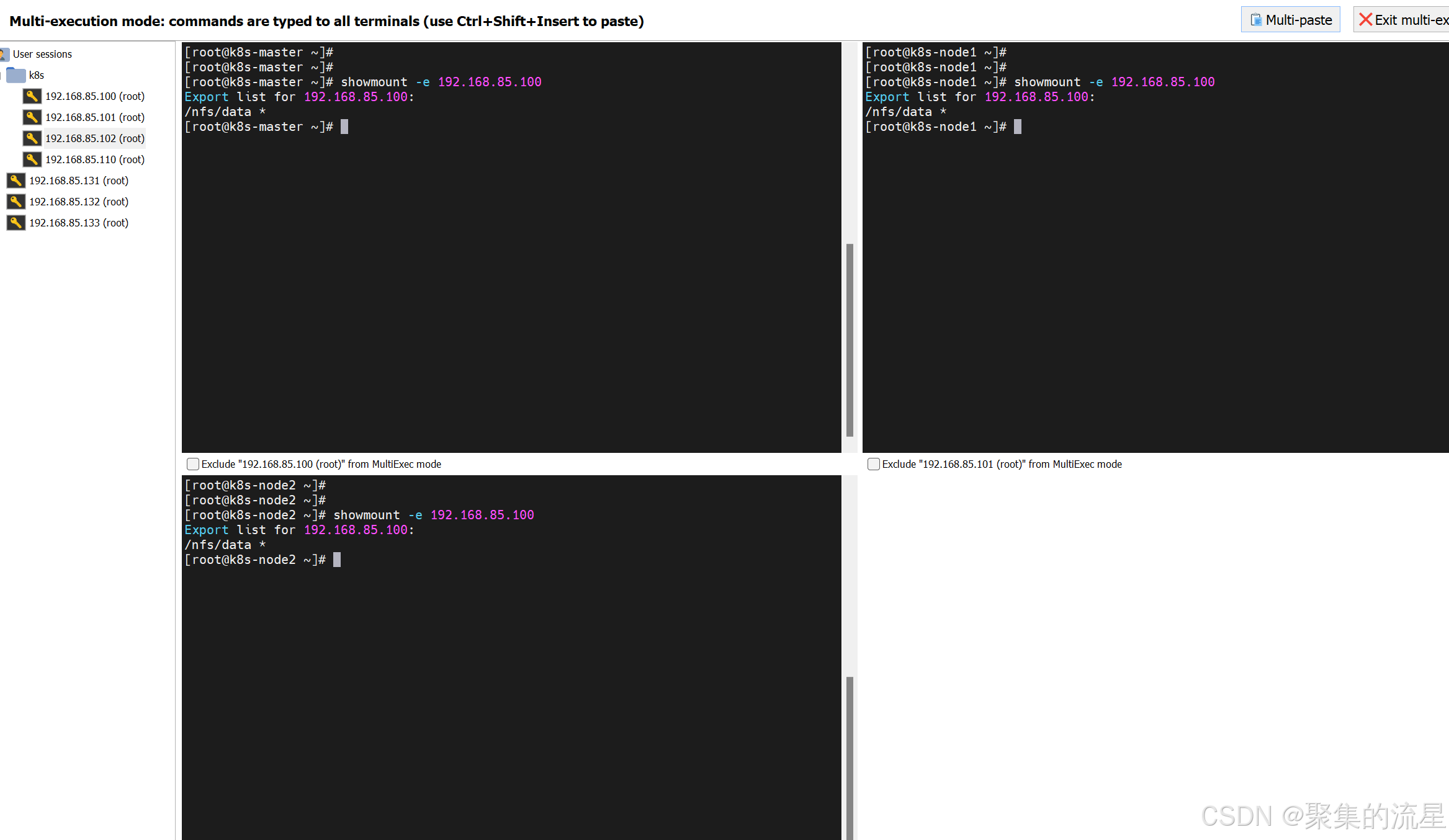

yum install -y nfs-utils测试nfs服务端是否设置共享目录

# showmount -e <nfs服务器的IP>

showmount -e 192.168.85.100二、配置StorageClass的provisioner

Write the resource manifest for the NFS storage provisioner:

    vim k8s-nfs-provisioner.yaml

The YAML content is as follows; change the NFS server IP to your own:

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-client
    provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # name of the provisioner
    # or choose another name; it must match the deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "false" # whether to archive the PV's contents when the PV is deleted
    ---

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      namespace: default
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: ccr.ccs.tencentyun.com/gcr-containers/nfs-subdir-external-provisioner:v4.0.2
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: k8s-sigs.io/nfs-subdir-external-provisioner
                - name: NFS_SERVER
                  value: 192.168.85.100 # address of the NFS server
                - name: NFS_PATH
                  value: /nfs/data # shared directory on the NFS server
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.85.100
                path: /nfs/data
    ---

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      namespace: default
    ---

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---

    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---

    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: default
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---

    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      namespace: default
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

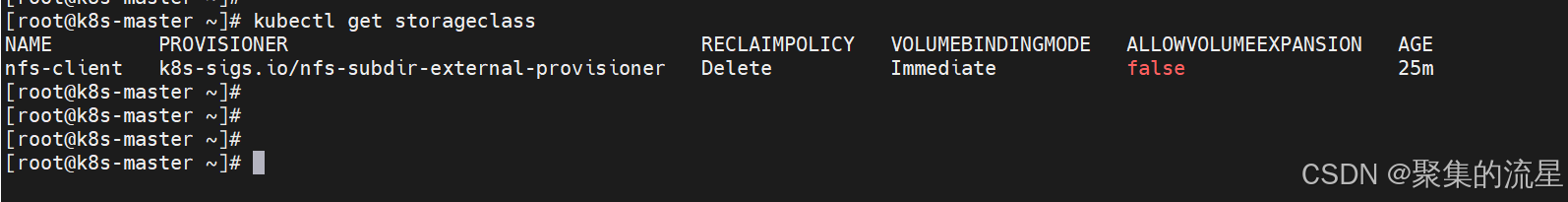

Apply the manifest:

    kubectl apply -f k8s-nfs-provisioner.yaml

3. Test

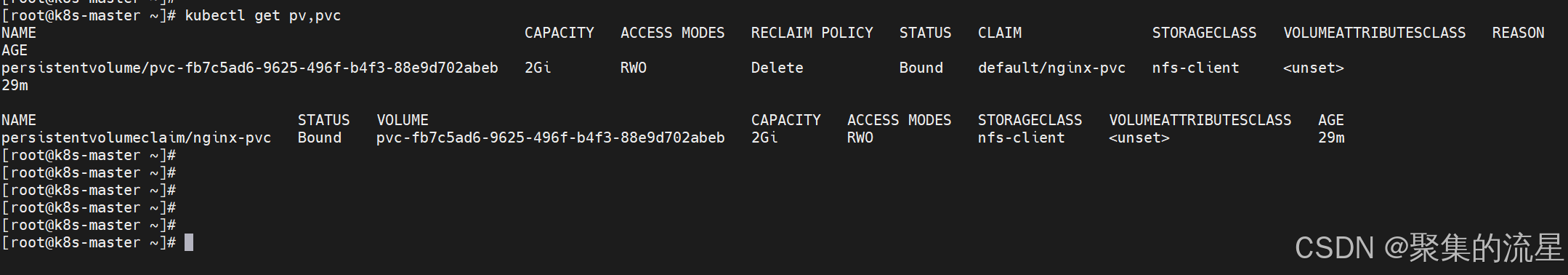

Test whether creating a PVC automatically provisions a PV. Create the PVC manifest:

    vim k8s-pvc.yaml

with the following content:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-pvc
      namespace: default
      labels:
        app: nginx-pvc
    spec:
      storageClassName: nfs-client # must match the StorageClass name
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 2Gi
    ---

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
      namespace: default
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: anolis-registry.cn-zhangjiakou.cr.aliyuncs.com/openanolis/nginx:1.14.1-8.6
          resources:
            limits:
              cpu: 200m
              memory: 500Mi
            requests:
              cpu: 100m
              memory: 200Mi
          ports:
            - containerPort: 80
              name: http
          volumeMounts:
            - name: localtime
              mountPath: /etc/localtime
            - name: html
              mountPath: /usr/share/nginx/html/
      volumes:
        - name: localtime
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
        - name: html
          persistentVolumeClaim:
            claimName: nginx-pvc
            readOnly: false
      restartPolicy: Always
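The manifest above still has to be applied and checked. The following is a rough sketch of one way to do that, not a definitive procedure. It assumes kubectl is already configured for the cluster and that the provisioner uses its default directory naming, which the project's README describes as ${namespace}-${pvcName}-${pvName} (this changes if a pathPattern parameter is set on the StorageClass). The function names expected_subdir and verify_pvc are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: directory name the provisioner is assumed to create
# under the NFS export, i.e. ${namespace}-${pvcName}-${pvName}.
expected_subdir() {
    printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Hypothetical helper: apply the test manifest, wait for the nginx Pod,
# then print where the claim's data should appear on the NFS server.
#   $1 = namespace, $2 = PVC name
verify_pvc() {
    ns=$1
    pvc=$2
    kubectl apply -f k8s-pvc.yaml || return 1
    # The Pod only becomes Ready once its PVC is bound and mounted.
    kubectl wait --for=condition=Ready pod/nginx -n "$ns" --timeout=120s || return 1
    # Read the name of the PV that was provisioned for the claim.
    pv=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}') || return 1
    echo "PVC $pvc is bound to PV $pv"
    echo "expected directory on the NFS server: /nfs/data/$(expected_subdir "$ns" "$pvc" "$pv")"
}

# Usage on a machine with cluster access:
#   verify_pvc default nginx-pvc
```

If the Pod never becomes Ready, kubectl describe pvc nginx-pvc and the logs of the nfs-client-provisioner Deployment are the usual places to look. A file written into the printed directory on the NFS server should then be served by nginx from /usr/share/nginx/html/.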