- Before you begin, prepare a Redis instance of your own.

I. Deploy redis_exporter

1. Docker deployment (the method used here)

docker run -d --name redis_exporter -e REDIS_ADDR="redis://172.16.3.225:31618" -e REDIS_PASSWORD='chinaedu_newcj' -p 9121:9121 oliver006/redis_exporter

REDIS_ADDR: one or more Redis addresses; separate multiple nodes with commas.

2. Binary deployment

wget https://github.com/oliver006/redis_exporter/releases/download/v1.12.1/redis_exporter-v1.12.1.linux-amd64.tar.gz

tar -zxvf redis_exporter-v1.12.1.linux-amd64.tar.gz

cd redis_exporter-v1.12.1.linux-amd64

./redis_exporter -redis.addr=172.16.3.225:31618 -redis.password='chinaedu_newcj' -web.listen-address=172.16.3.225:9121

Note: if the password contains special characters such as a dot, wrap it in single quotes ('').
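To illustrate the note above, here is a minimal sketch of how quoting changes what the shell hands to a program. The password value is hypothetical and not from the original setup. A dot on its own is usually harmless, but characters such as `$` are interpreted by the shell unless single quotes are used.

```shell
# Hypothetical password containing a dot and a dollar sign.
# Single quotes pass every character through literally.
single='pa$HOME.word'
# Double quotes still let the shell expand $HOME before the program sees it.
double="pa$HOME.word"

printf 'single-quoted: %s\n' "$single"
printf 'double-quoted: %s\n' "$double"
```

Only the single-quoted form reaches redis_exporter unchanged, which is why the commands above quote the password that way.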

3. Kubernetes deployment

cat > redis_exporter.yaml << 'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis-exporter
  namespace: default
  labels:
    app: redis-exporter
spec:
  selector:
    matchLabels:
      app: redis-exporter
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: redis-exporter
    spec:
      containers:
      - name: redis-exporter
        image: oliver006/redis_exporter
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
          limits:
            cpu: 100m
            memory: 100Mi
        env:
        - name: REDIS_PASSWORD
          value: chinaedu_newcj
        - name: REDIS_ADDR
          value: redis://172.16.3.225:31618
        ports:
        - containerPort: 9121 # redis_exporter listens on 9121, matching the Service targetPort below
          name: redis-exporter
        volumeMounts:
        - name: localtime
          mountPath: /etc/localtime
      volumes:
      - name: localtime
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
      restartPolicy: Always
---
apiVersion: v1
kind: Service
metadata:
  name: redis-exporter
  namespace: default
  labels:
    app: redis-exporter
spec:
  selector:
    app: redis-exporter
  type: ClusterIP
  sessionAffinity: None
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800
  ports:
  - name: redis-exporter
    protocol: TCP
    port: 9121
    targetPort: 9121
EOF

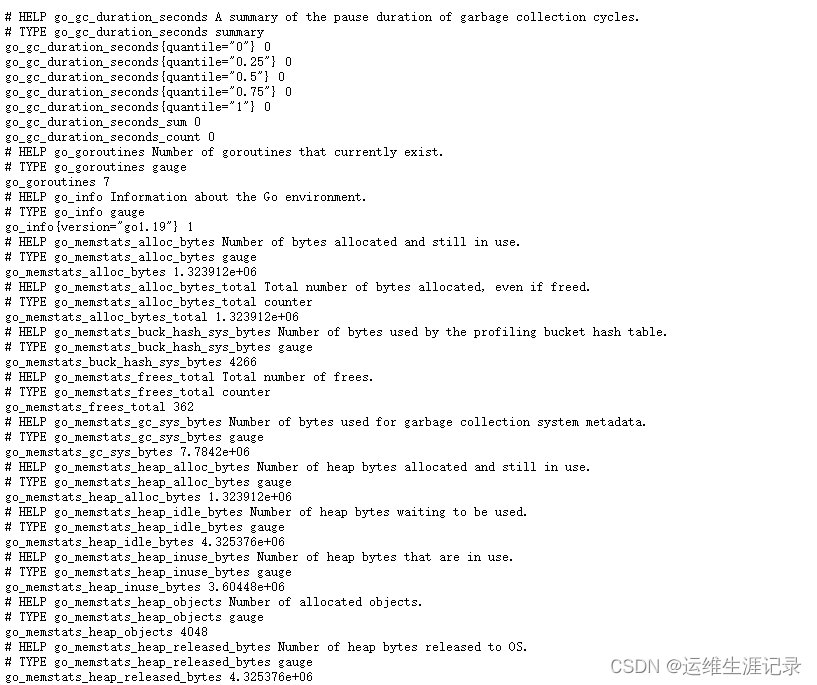

4. Access
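The original gives no commands for this step. As a minimal sketch, assuming the exporter started in the Docker or binary step is reachable at 172.16.3.225:9121 and serves its metrics under /metrics, the check below reads the `redis_up` gauge. The helper name `redis_up_value` is hypothetical.

```shell
# Hypothetical helper: read Prometheus exposition text on stdin and print
# the value of the redis_up gauge (1 = exporter can reach Redis, 0 = it cannot).
redis_up_value() {
  awk '$1 == "redis_up" { print $2; exit }'
}

# Usage against a live exporter (address taken from the deployment steps above):
#   curl -s http://172.16.3.225:9121/metrics | redis_up_value
```

A result of 1 suggests the address and password passed to the exporter are correct; 0 usually points at a wrong REDIS_ADDR or REDIS_PASSWORD.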

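II. Configure Prometheus service discovery

1. Auto-discovery of redis_exporter pods that have a Service

For services exposed through a Service, we can configure discovery with the ServiceMonitor CRD defined by the prometheus-operator project. A template follows: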

cat > redis-ServiceMonitor.yaml << 'EOF'
# ServiceMonitor service discovery rule
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor # CRD defined by prometheus-operator
metadata:
  name: redis-metrics
  namespace: monitoring
spec:
  jobLabel: app # use the value of the Service's app label as the job label, i.e. job=redis-exporter
  selector:
    matchLabels:
      app: redis-exporter # discover Services labeled app=redis-exporter
  namespaceSelector:
    matchNames: # namespaces to discover in; more than one may be listed
    - default
  endpoints:
  - port: redis-exporter # port to scrape; this is the Service port name, i.e. spec.ports[].name in the Service YAML
    interval: 15s # scrape interval
EOF

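The configuration above sets up automatic discovery for the redis-exporter Service in the default namespace. Prometheus adds every pod behind this Service to monitoring and keeps the pod list up to date from the API server, so new replicas are picked up automatically when the service scales out.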
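Discovery only works if the objects the ServiceMonitor points at actually exist. The sketch below lists them with standard `kubectl get` calls, using the names and namespaces from the manifests above. The helpers `run` and `check_redis_discovery` and the `DRY_RUN` variable are hypothetical and exist only so the commands can be previewed without a cluster.

```shell
# Print each command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: confirm the objects the ServiceMonitor relies on exist.
check_redis_discovery() {
  # The Service must carry the label the ServiceMonitor selects on.
  run kubectl get service -n default -l app=redis-exporter
  # The Endpoints object lists the pod IPs that Prometheus will scrape.
  run kubectl get endpoints -n default redis-exporter
  # The ServiceMonitor itself lives in the monitoring namespace.
  run kubectl get servicemonitor -n monitoring redis-metrics
}

# Preview:            DRY_RUN=1 check_redis_discovery
# Run on a cluster:   check_redis_discovery
```

If the Endpoints object has no addresses, the Service selector and the pod labels probably do not match.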

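2. Auto-discovery of redis_exporter pods without a Service

For services with no Service created, such as instances exposed outside the cluster via HostPort, we can use a PodMonitor for discovery. An example follows: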

# PodMonitor service discovery rule; supported by recent versions, older versions may not support it
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor # CRD defined by prometheus-operator
metadata:
  name: redis-metrics
  namespace: monitoring
spec:
  jobLabel: app # use the value of the pod's app label as the job label, i.e. job=redis-exporter
  selector:
    matchLabels:
      app: redis-exporter # discover pods labeled app=redis-exporter
  namespaceSelector:
    matchNames: # namespaces to discover in; more than one may be listed
    - default
  podMetricsEndpoints:
  - port: redis-exporter # name of the container port that exposes metrics, i.e. spec.containers[].ports[].name in the Pod YAML
    interval: 15s # scrape interval

3. Monitor a redis_exporter outside the Kubernetes cluster

cat > redis-monitor.yaml << 'EOF'
apiVersion: v1
kind: Endpoints
metadata:
  name: redis-metrics
  namespace: monitoring
  labels:
    k8s-app: redis-metrics
subsets:
- addresses:
  - ip: 172.16.3.225
  ports:
  - name: redis-exporter
    port: 9121
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  name: redis-metrics
  namespace: monitoring
  labels:
    k8s-app: redis-metrics
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - name: redis-exporter
    port: 9121
    protocol: TCP
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: redis-metrics
  namespace: monitoring
  labels:
    app: redis-metrics
    k8s-app: redis-metrics
    prometheus: kube-prometheus
    release: kube-prometheus
spec:
  endpoints:
  - port: redis-exporter
    interval: 15s
  selector:
    matchLabels:
      k8s-app: redis-metrics
  namespaceSelector:
    matchNames:
    - monitoring
EOF

4. Grant the Prometheus serviceAccount permissions in the target namespace

Note: if the monitored targets are in the monitoring namespace, this step can be skipped.

cat > redis-serviceAccount.yaml << 'EOF'
# Create the Role in the target namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: prometheus-k8s
  namespace: default
rules:
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
---
# Bind the prometheus-k8s ServiceAccount to the Role
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: prometheus-k8s
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: prometheus-k8s
subjects:
- kind: ServiceAccount
  name: prometheus-k8s # ServiceAccount used by the Prometheus container; kube-prometheus uses prometheus-k8s by default
  namespace: monitoring
EOF
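Once the Role and RoleBinding are applied, the grant can be checked with `kubectl auth can-i` and impersonation. This is a minimal sketch assuming the ServiceAccount and namespaces from the manifest above. The helper names `sa_subject` and `prometheus_can_list` are hypothetical, and the caller needs permission to impersonate.

```shell
# Build the user name Kubernetes assigns to a ServiceAccount.
# $1 = namespace of the ServiceAccount, $2 = its name
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Hypothetical helper: ask the API server whether Prometheus may list a
# resource type in the default namespace. Prints "yes" or "no" on a cluster.
prometheus_can_list() {
  kubectl auth can-i list "$1" -n default \
    --as="$(sa_subject monitoring prometheus-k8s)"
}

# Usage (requires cluster access):
#   for r in services endpoints pods; do prometheus_can_list "$r"; done
```

All three resources should answer "yes"; a "no" suggests the RoleBinding is missing or names the wrong namespace.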

III. Import the Grafana dashboard

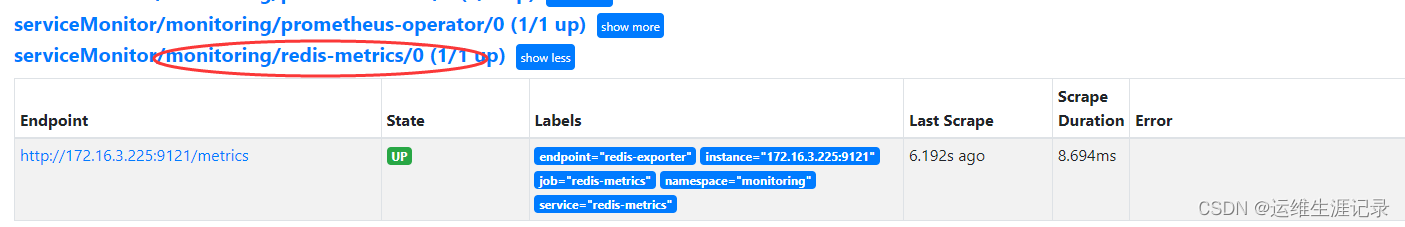

Before importing the dashboard, confirm that the redis-metrics target appears under Prometheus Targets.

- Grafana dashboard ID to import: 11835

IV. Add alerting rules

vim kube-prometheus/manifests/prometheus-rules.yaml

# Append the following at the end of the file
---
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    prometheus: k8s
    role: alert-rules
  name: redis-metrics-rules
  namespace: monitoring
spec:
  groups:
  - name: redis-alerts
    rules:
    - alert: RedisDown
      expr: redis_up == 0
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} Redis is unavailable"
        description: "Redis is unreachable\n Current value = {{ $value }}"
    - alert: RedisMissingMaster
      expr: (count(redis_instance_info{role="master"}) ) < 1
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} Redis master is missing"
        description: "The Redis cluster currently has no master node\n Current value = {{ $value }}"
    - alert: RedisTooManyMasters
      expr: count(redis_instance_info{role="master"}) > 1
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} Redis split brain, too many masters"
        description: "{{ $labels.instance }} has too many masters\n Current value = {{ $value }}"
    - alert: RedisDisconnectedSlaves
      expr: count without (instance, job) (redis_connected_slaves) - sum without (instance, job) (redis_connected_slaves) - 1 > 1
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} Redis lost slave nodes"
        description: "A Redis slave is unreachable. Check the master-slave replication status\n Current value = {{ $value }}"
    - alert: RedisReplicationBroken
      expr: delta(redis_connected_slaves[1m]) < 0
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} Redis replicas are inconsistent"
        description: "The Redis cluster lost a slave node\n Current value = {{ $value }}"
    - alert: RedisClusterFlapping
      expr: changes(redis_connected_slaves[1m]) > 1
      for: 2m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} Redis cluster is flapping"
        description: "The Redis cluster is flapping, please check.\n Current value = {{ $value }}"
    - alert: RedisMissingBackup
      expr: (time() - redis_rdb_last_save_timestamp_seconds) / 3600 > 24
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} Redis persistence failed"
        description: "Redis persistence failed (> 24 hours)\n Current value = {{ printf \"%.1f\" $value }} hours"
    - alert: RedisOutOfSystemMemory
      expr: redis_memory_used_bytes / redis_total_system_memory_bytes * 100 > 90
      for: 2m
      labels:
        severity: warning
      annotations:
        summary: "{{ $labels.instance }} is running out of system memory"
        description: "Redis is using too much system memory (> 90%)\n Current value = {{ printf \"%.2f\" $value }}%"
    - alert: RedisOutOfConfiguredMaxmemory
      # The ratio is on the left of "and" so that $value is the percentage, not the maxmemory setting
      expr: redis_memory_used_bytes / redis_memory_max_bytes * 100 > 80 and redis_config_maxmemory != 0
      for: 2m
      labels:
        severity: warning
      annotations:
        summary: "{{ $labels.instance }} maxmemory is set too low"
        description: "Memory use exceeds the configured maximum (> 80%)\n Current value = {{ printf \"%.2f\" $value }}%"
    - alert: RedisTooManyConnections
      expr: redis_connected_clients > 200
      for: 2m
      labels:
        severity: warning
      annotations:
        summary: "{{ $labels.instance }} has too many live connections"
        description: "Too many connections (> 200)\n Current value = {{ $value }}"
    - alert: RedisNotEnoughConnections
      expr: redis_connected_clients < 1
      for: 2m
      labels:
        severity: warning
      annotations:
        summary: "{{ $labels.instance }} has too few live connections"
        description: "Connection count (< 1)\n Current value = {{ $value }}"
    - alert: RedisRejectedConnections
      expr: increase(redis_rejected_connections_total[1m]) > 0
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} is rejecting connections"
        description: "Redis rejected connections, check the connection limit settings\n Current value = {{ printf \"%.0f\" $value }}"
    - alert: RedisHighCommandRate
      expr: rate(redis_commands_processed_total[1m]) > 1000
      for: 0m
      labels:
        severity: critical
      annotations:
        summary: "{{ $labels.instance }} is executing too many commands"
        description: "Redis is executing too many commands (> 1000 per second)\n Current value = {{ printf \"%.0f\" $value }}"
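As a worked example of the persistence rule, the sketch below reproduces the arithmetic of `(time() - redis_rdb_last_save_timestamp_seconds) / 3600` outside Prometheus. The helper name `hours_since_save` and the sample timestamps are made up for illustration.

```shell
# Hypothetical helper mirroring the rule expression.
# $1 = current Unix time, $2 = Unix time of the last successful RDB save.
hours_since_save() {
  awk -v now="$1" -v last="$2" 'BEGIN { printf "%.1f\n", (now - last) / 3600 }'
}

# Sample timestamps 90000 seconds apart:
hours_since_save 1700090000 1700000000   # prints 25.0, above the 24-hour threshold

# Against a live instance, the second argument could come from the Redis
# LASTSAVE command, e.g. "$(redis-cli -h 172.16.3.225 -p 31618 LASTSAVE)"
# (authentication options omitted here).
```

A value above 24 is what makes the alert fire, so 25.0 hours in this sample would trigger it immediately because the rule uses `for: 0m`.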