Preface: this post borrows from another author's article and rewrites the code in the Vue 3 style.

Step 1: Download and import the required packages

Install the dependency

npm install face-api.js

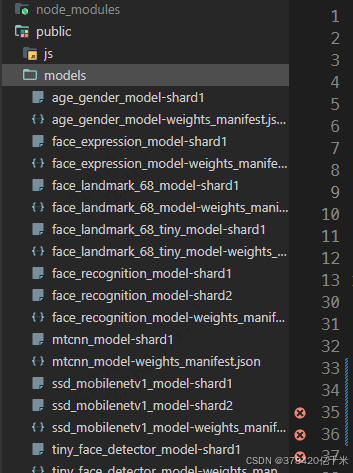

Download the models

Place the downloaded face recognition models in the public/models folder.
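As a rough guide to which files are needed: the component in this post loads two networks from `/models`, the SSD MobileNet V1 detector and the 68-point face landmark model. The sketch below lists the file names those two networks are expected to request, assuming the naming used in the `weights` folder of the face-api.js repository; check the names against your own download. `missingModelFiles` is a hypothetical helper for illustration, not part of face-api.js.

```typescript
// File names assumed from the face-api.js `weights` folder; verify against your download.
const REQUIRED_MODEL_FILES: readonly string[] = [
  "ssd_mobilenetv1_model-weights_manifest.json",
  "ssd_mobilenetv1_model-shard1",
  "ssd_mobilenetv1_model-shard2",
  "face_landmark_68_model-weights_manifest.json",
  "face_landmark_68_model-shard1",
];

// Hypothetical helper: given the file names found in public/models,
// return the required ones that are still missing.
function missingModelFiles(present: readonly string[]): string[] {
  return REQUIRED_MODEL_FILES.filter((name) => present.indexOf(name) === -1);
}

// Example: only the detector files were copied, so the landmark files are reported.
const missing = missingModelFiles([
  "ssd_mobilenetv1_model-weights_manifest.json",
  "ssd_mobilenetv1_model-shard1",
  "ssd_mobilenetv1_model-shard2",
]);
console.log(missing);
```

If a file is missing, the corresponding `loadFromUri("/models")` call would typically fail with a network error, so a check like this can save some debugging time.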

Full code

<template>

<div class="main-body">

<el-link type="primary" class="cancel-btn" @click="cancel">Cancel</el-link>

<!-- <el-button type="primary" @click="useCamera">Open camera</el-button> -->

<!-- <el-button type="plain" @click="photoShoot">Take photo</el-button> -->

<!-- <el-alert

:title="httpsAlert"

type="info"

:closable="false"

v-show="httpsAlert !== ''"

>

</el-alert> -->

<div class="videoImage" ref="faceBox">

<video ref="video" style="display: none"></video>

<canvas

ref="canvas"

class="radiusDiv"

width="400"

height="400"

v-show="videoShow"

></canvas>

<img

ref="image"

class="radiusDiv"

:src="picture"

alt=""

v-show="pictureShow"

/>

<div class="tips-box f-c-c">{{ identifyMsg }}</div>

</div>

</div>

</template>

<script lang="ts" setup>

import { setAllDictory } from "@/utils/Tools";

import { setToken } from "@/utils/token";

import { faceLoginAPI } from "@/request/learnPlatform/login";

import axios from "axios";

import * as faceApi from "face-api.js";

import { onBeforeUnmount, onMounted, ref } from "vue";

import { useRouter } from "vue-router";

let emit = defineEmits(["finished"]);

let videoShow = ref(false);

let pictureShow = ref(false);

// Image URL

let picture = ref("");

// Nodes used for video recognition

let canvas = ref();

let video = ref();

let image = ref();

let timeout = ref<ReturnType<typeof setTimeout>>();

let msgTimeout = ref<ReturnType<typeof setTimeout> | null>(null);

// Detection options for the model

let options = ref<faceApi.SsdMobilenetv1Options>();

// Prompt control

let noOne = ref("");

let moreThanOne = ref("");

// Hint shown when the page is not accessed over HTTPS

let httpsAlert = ref("");

let identifyMsg = ref("");

let canLogin = ref(false);

const router = useRouter();

const cancel = () => {

emit("finished");

};

const init = async () => {

await faceApi.nets.ssdMobilenetv1.loadFromUri("/models");

await faceApi.loadFaceLandmarkModel("/models");

options.value = new faceApi.SsdMobilenetv1Options({

minConfidence: 0.5, // 0.1 ~ 0.9

});

// Nodes used for recognition in the video

};

/**

* Display the camera feed through a video element

*/

const useCamera = () => {

videoShow.value = true;

pictureShow.value = false;

cameraOptions();

};

/**

* Open the camera

*/

const cameraOptions = () => {

let constraints = {

video: {

width: 400,

height: 400,

},

};

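// When the page is served neither from localhost nor over HTTPS, navigator.mediaDevices is undefined; the resulting error is caught and a hint is shown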

try {

let promise = navigator.mediaDevices.getUserMedia(constraints);

promise

.then((MediaStream) => {

// The MediaStream returned by getUserMedia

video.value.srcObject = MediaStream;

video.value.play();

recognizeFace();

})

.catch((error) => {

console.log(error);

});

} catch (err) {

httpsAlert.value = `You are not accessing this page over HTTPS.
Open chrome://flags/#unsafely-treat-insecure-origin-as-secure first;
add the current URL ${window.location.href} to the list;
set "Insecure origins treated as secure" to Enabled;
then restart the browser and visit this page again!`;

}

};

/**

* Face recognition method

* Detects faces on the canvas node

* and calls itself repeatedly to redraw and detect again

*/

const recognizeFace = async () => {

if (video.value.paused) return clearTimeout(timeout.value);

canvas.value

.getContext("2d", { willReadFrequently: true })

.drawImage(video.value, 0, 0, 400, 400);

const results = await faceApi

.detectAllFaces(canvas.value, options.value)

.withFaceLandmarks();

canLogin.value = false;

if (results.length === 0) {

identifyMsg.value = "No face detected";

if (moreThanOne.value !== "") {

// moreThanOne.value.close();

moreThanOne.value = "";

}

if (noOne.value === "") {

// identifyMsg.value = "No face detected";

// noOne.value = this.$message({

// message: "No face detected",

// type: "warning",

// duration: 0,

// });

}

} else if (results.length > 1) {

identifyMsg.value = "More than one person detected in the frame";

if (noOne.value !== "") {

// noOne.value.close();

noOne.value = "";

}

if (moreThanOne.value === "") {

// identifyMsg.value = "More than one person detected in the frame";

// moreThanOne.value = this.$message({

// message: "More than one person detected in the frame",

// type: "warning",

// duration: 0,

// });

}

} else {

if (noOne.value !== "") {

// noOne.value.close();

noOne.value = "";

}

if (moreThanOne.value !== "") {

// moreThanOne.value.close();

moreThanOne.value = "";

}

identifyMsg.value = "Recognizing... please hold still for 2 seconds";

canLogin.value = true;

console.log("🚀 ~ canLogin.value:", canLogin.value);

}

// With exactly one face in the frame, schedule the photo 2 seconds later

if (canLogin.value) {

if (msgTimeout.value === null) {

msgTimeout.value = setTimeout(async () => {

// canLogin.value = false;

await photoShoot();

clearTimeout(msgTimeout.value);

msgTimeout.value = null;

}, 2000);

}

}

timeout.value = setTimeout(() => {

return recognizeFace();

});

};

/**

* Take a photo and upload it

*/

const photoShoot = async () => {

// Get the image as a base64 data URL

let canvasImg = canvas.value.toDataURL("image/png");

// Hide the video after taking the photo

videoShow.value = false;

pictureShow.value = true;

video.value.pause();

// Stop the camera stream

video.value.srcObject.getTracks().forEach((track: MediaStreamTrack) => track.stop());

if (canvasImg) {

// Convert the base64 data into a file object

let blob = dataURLtoBlob(canvasImg);

let file = blobToFile(blob, "imgName");

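// Create an object URL from the blob for the preview image

let imageUrl = window.URL.createObjectURL(file);

picture.value = imageUrl;

// Send the captured photo to the backend

let formData = new FormData();

// Append the file itself, not the preview URL string

formData.append("file", file, "imgName");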

axios({

method: 'post',

url: '/user/12345',

data: formData

}).then(res => {

console.log(res)

// Go to the home page

router.replace({ name: "home" });

}).catch(err => {

console.log(err)

})

} else {

console.log("Failed to generate the canvas image");

}

};

/**

* Convert the image to a Blob

* dataurl: the base64 data URL obtained from the canvas

*/

const dataURLtoBlob = (dataurl) => {

let arr = dataurl.split(","),

mime = arr[0].match(/:(.*?);/)[1],

bstr = atob(arr[1]),

n = bstr.length,

u8arr = new Uint8Array(n);

while (n--) {

u8arr[n] = bstr.charCodeAt(n);

}

return new Blob([u8arr], {

type: mime,

});

};

/**

* Build a File from the blob

* theBlob: the image blob

* fileName: the file name

*/

const blobToFile = (theBlob, fileName) => {

// Wrap the blob in a File instead of attaching name and date fields to the Blob

return new File([theBlob], fileName, { type: theBlob.type });

};

onMounted(async () => {

// Load the models first so detection does not start before they are ready

await init();

// Then open the camera

useCamera();

});

onBeforeUnmount(() => {

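clearTimeout(timeout.value);

// Also cancel a pending photo and release the camera if it is still open

if (msgTimeout.value !== null) clearTimeout(msgTimeout.value);

video.value?.srcObject?.getTracks().forEach((track: MediaStreamTrack) => track.stop());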

});

</script>

<style scoped lang="scss">

.videoImage {

display: flex;

height: 100vh;

background: #000;

flex-direction: column;

justify-content: center;

align-items: center;

.radiusDiv {

width: 400px;

height: 400px;

border-radius: 50%;

}

.tips-box {

width: 300px;

height: 20px;

margin-top: 20px;

color: #fff;

}

}

.main-body {

position: relative;

.cancel-btn {

position: absolute;

top: 10px;

left: 10px;

}

}

</style>
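
One detail of the recognition loop above is worth spelling out: the 2-second timer starts on the first pass that finds exactly one face, and it is not cancelled if the face then leaves the frame. Below is a minimal sketch of a stricter variant that only fires after one face has been seen continuously for the hold time. `SteadyFaceGate`, `holdMs`, and `update` are hypothetical names used for illustration; they are not part of face-api.js or of the component above.

```typescript
// Sketch: decide when to take the photo from per-pass face counts.
class SteadyFaceGate {
  // Time (ms) at which the current run of single-face passes began, or null.
  private steadySince: number | null = null;
  private readonly holdMs: number;

  constructor(holdMs: number = 2000) {
    this.holdMs = holdMs;
  }

  // Call once per detection pass with the number of faces found and the
  // current time in milliseconds. Returns true once exactly one face has
  // been present continuously for at least holdMs.
  update(faceCount: number, nowMs: number): boolean {
    if (faceCount !== 1) {
      this.steadySince = null; // zero or several faces: restart the wait
      return false;
    }
    if (this.steadySince === null) {
      this.steadySince = nowMs; // first pass of a steady run
    }
    return nowMs - this.steadySince >= this.holdMs;
  }
}

// Simulated passes: the face disappears at 1800 ms, so the wait restarts at 2000 ms.
const gate = new SteadyFaceGate(2000);
const decisions = [
  gate.update(1, 0),
  gate.update(1, 1500),
  gate.update(0, 1800),
  gate.update(1, 2000),
  gate.update(1, 3900),
  gate.update(1, 4000),
];
console.log(decisions);
```

In `recognizeFace`, a gate like this could be fed `results.length` and `Date.now()` on every pass, with `photoShoot()` called when `update` returns true.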