Installing MMSegmentation and Training on a Custom Dataset

Experiment environment

This experiment ran on a server rented from the AutoDL platform. The Ubuntu environment is as follows:

- Software
  - PyTorch==2.0.0
  - Python==3.8
  - CUDA==11.8
  - mmsegmentation==v1.2.2
  - mmcv==2.1.0
- Hardware
  - GPU: RTX 4090D 24GB
  - CPU: 15 vCPU AMD EPYC 9754 128-Core Processor

Installing and running MMSeg

Reference: Get Started: Install and Run MMSeg (MMSegmentation 1.2.2 documentation)

This assumes PyTorch is already installed.

Install MMEngine and MMCV with MIM

    pip install -U openmim
    mim install mmengine
    mim install "mmcv==2.1.0"

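Install MMSegmentation

Everything here is installed under the autodl-tmp directory, so change into it first:

    cd autodl-tmp

Clone the code and enter the project directory:

    # git clone -b main https://github.com/open-mmlab/mmsegmentation.git
    git clone --branch v1.2.2 https://github.com/open-mmlab/mmsegmentation.git
    cd mmsegmentation
    pip install -v -e .
    # '-v' means verbose mode, which prints more output
    # '-e' installs the project in editable mode,
    # so any change made to the code takes effect without reinstalling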

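Verify the installation

Download the config file and the checkpoint file. This can take a few minutes depending on your network. When the download finishes, two files appear in the current working directory: pspnet_r50-d8_4xb2-40k_cityscapes-512x1024.py and pspnet_r50-d8_512x1024_40k_cityscapes_20200605_003338-2966598c.pth.

    mim download mmsegmentation --config pspnet_r50-d8_4xb2-40k_cityscapes-512x1024 --dest .

Install the libraries required by the test environment. The official guide omits this step, and without it the demo fails with a missing-library error.

    pip install -r requirements/tests.txt

Run the inference demo. A new image, result.jpg, appears in the current folder, with a segmentation mask overlaid on every object.

    python demo/image_demo.py demo/demo.png configs/pspnet/pspnet_r50-d8_4xb2-40k_cityscapes-512x1024.py pspnet_r50-d8_512x1024_40k_cityscapes_20200605_003338-2966598c.pth --device cuda:0 --out-file result.jpg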
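Before moving on, it can help to confirm which package versions actually ended up in the environment. The sketch below uses only the standard library. The helper name `installed_version` is hypothetical, and the distribution names passed to it are assumed to match what pip and mim installed.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


# Distribution names are assumptions; adjust them if your environment differs.
for name in ("torch", "mmengine", "mmcv", "mmsegmentation"):
    print(f"{name}: {installed_version(name) or 'not installed'}")
```

The printed versions can then be compared with the environment table at the top of this post.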

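Training on a custom dataset

Reference: Tutorial 1: Learn about Configs (MMSegmentation 1.2.2 documentation)

PIDNet is used as the example here.

The configs/_base_ folder holds four basic component types: datasets, models, schedules, and the default runtime. Many models, such as DeepLabV3 and PSPNet, can be built simply by combining these components. A config assembled from the components under _base_ is called a primitive config.

For all configs in the same folder, it is recommended to have only one primitive config. Every other config should inherit from that primitive config, which keeps the maximum inheritance depth at 3.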
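To make the inheritance idea concrete, here is a minimal sketch of how a child config can overlay values on a base config. It is a simplified stand-in written for illustration, not MMEngine's actual merge code (which also supports features such as `_delete_=True`). The function name `merge_config` and the two config fragments are hypothetical.

```python
from copy import deepcopy


def merge_config(base: dict, child: dict) -> dict:
    """Recursively overlay `child` on `base`; nested dicts are merged key by key."""
    merged = deepcopy(base)
    for key, value in child.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# Hypothetical fragments standing in for a _base_ file and a config inheriting it.
base_cfg = dict(
    train_dataloader=dict(batch_size=4, num_workers=4),
    train_cfg=dict(type="IterBasedTrainLoop", max_iters=40000),
)
child_cfg = dict(train_dataloader=dict(batch_size=6))

final_cfg = merge_config(base_cfg, child_cfg)
print(final_cfg["train_dataloader"])
```

Only `batch_size` is overridden; `num_workers` and the whole `train_cfg` entry are inherited unchanged, which is why a child config usually needs to state only what differs from its base.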

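To leave the shipped configs untouched, create a _my_base_ folder under mmsegmentation/configs, and inside it create the folders datasets, models and schedules to hold the custom config files. The resulting layout:

    mmsegmentation/
    ├── configs/
    │   ├── _my_base_/
    │   │   ├── datasets/
    │   │   ├── schedules/
    │   │   ├── models/
    │   │   ├── default_runtime.py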
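The folder layout can also be created from Python. This is a small sketch using only the standard library; the function name `create_custom_base` is hypothetical, and the demo runs in a temporary directory rather than in a real checkout.

```python
import tempfile
from pathlib import Path


def create_custom_base(repo_root) -> list:
    """Create configs/_my_base_/{datasets,models,schedules} under repo_root."""
    base = Path(repo_root) / "configs" / "_my_base_"
    created = []
    for sub in ("datasets", "models", "schedules"):
        target = base / sub
        target.mkdir(parents=True, exist_ok=True)  # safe to run repeatedly
        created.append(target)
    return created


# Demo in a throwaway directory; pass the mmsegmentation checkout path in practice.
with tempfile.TemporaryDirectory() as tmp:
    demo_created = [p.relative_to(tmp).as_posix() for p in create_custom_base(tmp)]

print(demo_created)
```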

Create a custom dataset config

My dataset follows the VOC2012 layout:

    VOC2012/
    ├── Annotations/
    ├── ImageSets/
    │   └── Segmentation/
    ├── JPEGImages/
    ├── SegmentationClass/
    └── SegmentationObject/

Copy mmsegmentation/configs/_base_/datasets/pascal_voc12.py into mmsegmentation/configs/_my_base_/datasets. I renamed the copy road_cracks_512x512.py to reflect the dataset; its contents follow. Pay attention to these key values (for the others, see Tutorial 1: Learn about Configs in the MMSegmentation 1.2.2 documentation):

| Variable | Example value | Meaning |
| --- | --- | --- |
| dataset_type | RoadCracksDataset | Name of the custom dataset class, needed again later |
| data_root | /root/autodl-tmp/VOC2012/ | Path where the dataset is stored |

    # dataset settings
    dataset_type = 'RoadCracksDataset'  # name of the custom dataset class
    data_root = '/root/autodl-tmp/VOC2012/'  # dataset path
    crop_size = (512, 512)  # size of the crop fed to the model
    train_pipeline = [
        dict(type='LoadImageFromFile'),
        dict(type='LoadAnnotations'),
        dict(
            type='RandomResize',
            scale=(512, 512),
            ratio_range=(0.5, 2.0),
            keep_ratio=True),
        dict(type='RandomCrop', crop_size=crop_size, cat_max_ratio=0.75),
        dict(type='RandomFlip', prob=0.5),
        dict(type='PhotoMetricDistortion'),
        dict(type='PackSegInputs')
    ]
    test_pipeline = [
        dict(type='LoadImageFromFile'),
        dict(type='Resize', scale=(512, 512), keep_ratio=True),
        # add loading annotation after ``Resize`` because ground truth
        # does not need to do resize data transform
        dict(type='LoadAnnotations'),
        dict(type='PackSegInputs')
    ]
    img_ratios = [0.5, 0.75, 1.0, 1.25, 1.5, 1.75]
    tta_pipeline = [
        dict(type='LoadImageFromFile', backend_args=None),
        dict(
            type='TestTimeAug',
            transforms=[
                [
                    dict(type='Resize', scale_factor=r, keep_ratio=True)
                    for r in img_ratios
                ],
                [
                    dict(type='RandomFlip', prob=0., direction='horizontal'),
                    dict(type='RandomFlip', prob=1., direction='horizontal')
                ],
                [dict(type='LoadAnnotations')],
                [dict(type='PackSegInputs')]
            ])
    ]
    train_dataloader = dict(
        batch_size=4,
        num_workers=4,
        persistent_workers=True,
        sampler=dict(type='InfiniteSampler', shuffle=True),
        dataset=dict(
            type=dataset_type,
            data_root=data_root,
            data_prefix=dict(
                img_path='JPEGImages', seg_map_path='SegmentationClass'),
            ann_file='ImageSets/Segmentation/train.txt',
            pipeline=train_pipeline))
    val_dataloader = dict(
        batch_size=1,
        num_workers=4,
        persistent_workers=True,
        sampler=dict(type='DefaultSampler', shuffle=False),
        dataset=dict(
            type=dataset_type,
            data_root=data_root,
            data_prefix=dict(
                img_path='JPEGImages', seg_map_path='SegmentationClass'),
            ann_file='ImageSets/Segmentation/val.txt',
            pipeline=test_pipeline))
    test_dataloader = val_dataloader

    val_evaluator = dict(type='IoUMetric', iou_metrics=['mIoU'])
    test_evaluator = val_evaluator
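Since the dataloaders resolve every sample from a split file plus the two prefix folders, a quick pre-flight check of the dataset on disk can save a failed training start. The following is a sketch under stated assumptions: the function name `missing_files` is hypothetical, the `.jpg` and `.png` suffixes are taken from the defaults of the dataset class shown later in this post, and the demo uses made-up sample IDs in a temporary directory.

```python
import tempfile
from pathlib import Path


def missing_files(data_root, split_file, img_dir="JPEGImages",
                  seg_dir="SegmentationClass", img_suffix=".jpg",
                  seg_suffix=".png"):
    """List image/mask paths named by a split file that do not exist on disk."""
    root = Path(data_root)
    missing = []
    for line in (root / split_file).read_text().splitlines():
        sample_id = line.strip()
        if not sample_id:
            continue  # tolerate blank lines in the split file
        for folder, suffix in ((img_dir, img_suffix), (seg_dir, seg_suffix)):
            path = root / folder / (sample_id + suffix)
            if not path.is_file():
                missing.append(path)
    return missing


# Self-contained demo on a throwaway directory with made-up sample IDs.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for folder in ("JPEGImages", "SegmentationClass", "ImageSets/Segmentation"):
        (root / folder).mkdir(parents=True)
    (root / "ImageSets/Segmentation/train.txt").write_text("a\nb\n")
    (root / "JPEGImages/a.jpg").touch()
    (root / "SegmentationClass/a.png").touch()
    (root / "JPEGImages/b.jpg").touch()  # the mask for "b" is deliberately absent
    demo_missing = [
        p.name for p in missing_files(root, "ImageSets/Segmentation/train.txt")
    ]

print(demo_missing)
```

On the real dataset the call would look like `missing_files('/root/autodl-tmp/VOC2012/', 'ImageSets/Segmentation/train.txt')`, and an empty list means every listed sample has both an image and a mask.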

Create a custom schedule config

Copy mmsegmentation/configs/_base_/schedules/schedule_40k.py into mmsegmentation/configs/_my_base_/schedules, keeping the file name schedule_40k.py. This file mainly sets the learning rate, the training length, and related options. Note that the PIDNet config used later lists only the dataset and runtime files in its _base_ and defines its own schedule inline, so it does not pick up the values in this file.

    # optimizer
    optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.0005)
    optim_wrapper = dict(type='OptimWrapper', optimizer=optimizer, clip_grad=None)
    # learning policy
    param_scheduler = [
        dict(
            type='PolyLR',
            eta_min=1e-4,
            power=0.9,
            begin=0,
            end=40000,
            by_epoch=False)
    ]
    # training schedule for 40k
    train_cfg = dict(type='IterBasedTrainLoop', max_iters=40000, val_interval=1000)
    val_cfg = dict(type='ValLoop')
    test_cfg = dict(type='TestLoop')
    default_hooks = dict(
        timer=dict(type='IterTimerHook'),
        logger=dict(type='LoggerHook', interval=50, log_metric_by_epoch=False),
        param_scheduler=dict(type='ParamSchedulerHook'),
        checkpoint=dict(type='CheckpointHook', by_epoch=False, interval=4000),
        sampler_seed=dict(type='DistSamplerSeedHook'),
        visualization=dict(type='SegVisualizationHook'))
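To get a feel for what the `PolyLR` entry does to the learning rate, the sketch below evaluates the usual closed-form polynomial decay with the values from this schedule (`lr=0.01`, `eta_min=1e-4`, `power=0.9`, `end=40000`). It assumes the scheduler follows that standard formula; the library updates the rate step by step, so tiny numerical differences are possible. The function name `poly_lr` is hypothetical.

```python
def poly_lr(step: int, base_lr: float = 0.01, eta_min: float = 1e-4,
            power: float = 0.9, total_steps: int = 40000) -> float:
    """Closed-form polynomial decay from base_lr down to eta_min."""
    step = min(max(step, 0), total_steps)  # clamp to the scheduled range
    factor = (1 - step / total_steps) ** power
    return (base_lr - eta_min) * factor + eta_min


for step in (0, 10000, 20000, 30000, 40000):
    print(step, round(poly_lr(step), 6))
```

The rate starts at `base_lr`, decays slightly faster than linearly near the end because `power` is below 1, and lands exactly on `eta_min` at the final iteration.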

Create a custom runtime config

Copy mmsegmentation/configs/_base_/default_runtime.py into mmsegmentation/configs/_my_base_, keeping the file name default_runtime.py. This file mainly holds the runtime settings, such as logging and visualization.

    default_scope = 'mmseg'
    env_cfg = dict(
        cudnn_benchmark=True,
        mp_cfg=dict(mp_start_method='fork', opencv_num_threads=0),
        dist_cfg=dict(backend='nccl'),
    )
    vis_backends = [dict(type='LocalVisBackend')]
    visualizer = dict(
        type='SegLocalVisualizer', vis_backends=vis_backends, name='visualizer')
    log_processor = dict(by_epoch=False)
    log_level = 'INFO'
    load_from = None
    resume = False

    tta_model = dict(type='SegTTAModel')

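Customize the model config

Copy mmsegmentation/configs/pidnet/pidnet-s_2xb6-120k_1024x1024-cityscapes.py into mmsegmentation/configs/_my_base_/models. I renamed the copy pidnet-s_2xb6-120k_512x512-roadcracks.py, the name used by the training command later on. The following key values need to be changed: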

| Variable | Value | Meaning |
| --- | --- | --- |
| _base_ | - | Paths of the inherited config files |
| class_weight | [1,1] | Per-class weights |
| num_classes | 2 (19 in the original config) | Number of classes, including background |

The original file:

    _base_ = [
        '../_base_/datasets/cityscapes_1024x1024.py',
        '../_base_/default_runtime.py'
    ]

    # The class_weight is borrowed from https://github.com/openseg-group/OCNet.pytorch/issues/14 # noqa
    # Licensed under the MIT License
    class_weight = [
        0.8373, 0.918, 0.866, 1.0345, 1.0166, 0.9969, 0.9754, 1.0489, 0.8786,
        1.0023, 0.9539, 0.9843, 1.1116, 0.9037, 1.0865, 1.0955, 1.0865, 1.1529,
        1.0507
    ]
    checkpoint_file = 'https://download.openmmlab.com/mmsegmentation/v0.5/pretrain/pidnet/pidnet-s_imagenet1k_20230306-715e6273.pth'  # noqa
    crop_size = (1024, 1024)
    data_preprocessor = dict(
        type='SegDataPreProcessor',
        mean=[123.675, 116.28, 103.53],
        std=[58.395, 57.12, 57.375],
        bgr_to_rgb=True,
        pad_val=0,
        seg_pad_val=255,
        size=crop_size)
    norm_cfg = dict(type='SyncBN', requires_grad=True)
    model = dict(
        type='EncoderDecoder',
        data_preprocessor=data_preprocessor,
        backbone=dict(
            type='PIDNet',
            in_channels=3,
            channels=32,
            ppm_channels=96,
            num_stem_blocks=2,
            num_branch_blocks=3,
            align_corners=False,
            norm_cfg=norm_cfg,
            act_cfg=dict(type='ReLU', inplace=True),
            init_cfg=dict(type='Pretrained', checkpoint=checkpoint_file)),
        decode_head=dict(
            type='PIDHead',
            in_channels=128,
            channels=128,
            num_classes=19,
            norm_cfg=norm_cfg,
            act_cfg=dict(type='ReLU', inplace=True),
            align_corners=True,
            loss_decode=[
                dict(
                    type='CrossEntropyLoss',
                    use_sigmoid=False,
                    class_weight=class_weight,
                    loss_weight=0.4),
                dict(
                    type='OhemCrossEntropy',
                    thres=0.9,
                    min_kept=131072,
                    class_weight=class_weight,
                    loss_weight=1.0),
                dict(type='BoundaryLoss', loss_weight=20.0),
                dict(
                    type='OhemCrossEntropy',
                    thres=0.9,
                    min_kept=131072,
                    class_weight=class_weight,
                    loss_weight=1.0)
            ]),
        train_cfg=dict(),
        test_cfg=dict(mode='whole'))

    train_pipeline = [
        dict(type='LoadImageFromFile'),
        dict(type='LoadAnnotations'),
        dict(
            type='RandomResize',
            scale=(2048, 1024),
            ratio_range=(0.5, 2.0),
            keep_ratio=True),
        dict(type='RandomCrop', crop_size=crop_size, cat_max_ratio=0.75),
        dict(type='RandomFlip', prob=0.5),
        dict(type='PhotoMetricDistortion'),
        dict(type='GenerateEdge', edge_width=4),
        dict(type='PackSegInputs')
    ]
    train_dataloader = dict(batch_size=6, dataset=dict(pipeline=train_pipeline))

    iters = 120000
    # optimizer
    optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.0005)
    optim_wrapper = dict(type='OptimWrapper', optimizer=optimizer, clip_grad=None)
    # learning policy
    param_scheduler = [
        dict(
            type='PolyLR',
            eta_min=0,
            power=0.9,
            begin=0,
            end=iters,
            by_epoch=False)
    ]
    # training schedule for 120k
    train_cfg = dict(
        type='IterBasedTrainLoop', max_iters=iters, val_interval=iters // 10)
    val_cfg = dict(type='ValLoop')
    test_cfg = dict(type='TestLoop')
    default_hooks = dict(
        timer=dict(type='IterTimerHook'),
        logger=dict(type='LoggerHook', interval=50, log_metric_by_epoch=False),
        param_scheduler=dict(type='ParamSchedulerHook'),
        checkpoint=dict(
            type='CheckpointHook', by_epoch=False, interval=iters // 10),
        sampler_seed=dict(type='DistSamplerSeedHook'),
        visualization=dict(type='SegVisualizationHook'))

    randomness = dict(seed=304)

After adapting it to my dataset, the class count is set to my own number of classes, and class_weight is no longer passed to the losses because of the error described under Common problems. Every change is marked with a comment:

    _base_ = [
        '../datasets/road_cracks_512x512.py',
        '../default_runtime.py'
    ]

    # The class_weight is borrowed from https://github.com/openseg-group/OCNet.pytorch/issues/14 # noqa
    # Licensed under the MIT License
    # class_weight = [
    #     0.8373, 0.918, 0.866, 1.0345, 1.0166, 0.9969, 0.9754, 1.0489, 0.8786,
    #     1.0023, 0.9539, 0.9843, 1.1116, 0.9037, 1.0865, 1.0955, 1.0865, 1.1529,
    #     1.0507
    # ]
    class_weight = [
        1, 1
    ]
    checkpoint_file = 'https://download.openmmlab.com/mmsegmentation/v0.5/pretrain/pidnet/pidnet-s_imagenet1k_20230306-715e6273.pth'  # noqa
    crop_size = (512, 512)
    data_preprocessor = dict(
        type='SegDataPreProcessor',
        mean=[123.675, 116.28, 103.53],
        std=[58.395, 57.12, 57.375],
        bgr_to_rgb=True,
        pad_val=0,
        seg_pad_val=255,
        size=crop_size)
    norm_cfg = dict(type='SyncBN', requires_grad=True)
    model = dict(
        type='EncoderDecoder',
        data_preprocessor=data_preprocessor,
        backbone=dict(
            type='PIDNet',
            in_channels=3,
            channels=32,
            ppm_channels=96,
            num_stem_blocks=2,
            num_branch_blocks=3,
            align_corners=False,
            norm_cfg=norm_cfg,
            act_cfg=dict(type='ReLU', inplace=True),
            init_cfg=dict(type='Pretrained', checkpoint=checkpoint_file)),
        decode_head=dict(
            type='PIDHead',
            in_channels=128,
            channels=128,
            num_classes=2,  ### changed to my own number of classes
            norm_cfg=norm_cfg,
            act_cfg=dict(type='ReLU', inplace=True),
            align_corners=True,
            loss_decode=[
                dict(
                    type='CrossEntropyLoss',
                    use_sigmoid=False,
                    ### class_weight removed here
                    loss_weight=0.4),
                dict(
                    type='OhemCrossEntropy',
                    thres=0.9,
                    min_kept=131072,
                    ### class_weight removed here
                    loss_weight=1.0),
                dict(type='BoundaryLoss', loss_weight=20.0),
                dict(
                    type='OhemCrossEntropy',
                    thres=0.9,
                    min_kept=131072,
                    ### class_weight removed here
                    loss_weight=1.0)
            ]),
        train_cfg=dict(),
        test_cfg=dict(mode='whole'))

    train_pipeline = [
        dict(type='LoadImageFromFile'),
        dict(type='LoadAnnotations'),
        dict(
            type='RandomResize',
            scale=(512, 512),
            ratio_range=(0.5, 2.0),
            keep_ratio=True),
        dict(type='RandomCrop', crop_size=crop_size, cat_max_ratio=0.75),
        dict(type='RandomFlip', prob=0.5),
        dict(type='PhotoMetricDistortion'),
        dict(type='GenerateEdge', edge_width=4),
        dict(type='PackSegInputs')
    ]
    train_dataloader = dict(batch_size=6, dataset=dict(pipeline=train_pipeline))

    iters = 120000
    # optimizer
    optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.0005)
    optim_wrapper = dict(type='OptimWrapper', optimizer=optimizer, clip_grad=None)
    # learning policy
    param_scheduler = [
        dict(
            type='PolyLR',
            eta_min=0,
            power=0.9,
            begin=0,
            end=iters,
            by_epoch=False)
    ]
    # training schedule for 120k
    train_cfg = dict(
        type='IterBasedTrainLoop', max_iters=iters, val_interval=1000)  ## validate once every 1000 iterations
    val_cfg = dict(type='ValLoop')
    test_cfg = dict(type='TestLoop')
    default_hooks = dict(
        timer=dict(type='IterTimerHook'),
        logger=dict(type='LoggerHook', interval=50, log_metric_by_epoch=False),
        param_scheduler=dict(type='ParamSchedulerHook'),
        checkpoint=dict(
            type='CheckpointHook', by_epoch=False, interval=iters // 10),
        sampler_seed=dict(type='DistSamplerSeedHook'),
        visualization=dict(type='SegVisualizationHook'))

    randomness = dict(seed=304)
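Because the training loop is iteration-based, it is easy to lose track of how many passes over the data `iters = 120000` represents for a small custom dataset. The sketch below does the arithmetic; the function name `iters_to_epochs` is hypothetical, and the figure of 1200 training images is made up purely for illustration.

```python
def iters_to_epochs(max_iters: int, batch_size: int, num_images: int,
                    num_gpus: int = 1) -> float:
    """Approximate number of passes over the training set."""
    return max_iters * batch_size * num_gpus / num_images


# 1200 training images is a made-up figure; substitute your own dataset size.
demo_epochs = iters_to_epochs(max_iters=120000, batch_size=6, num_images=1200)
print(demo_epochs)
```

If the result is far larger than needed, lowering `iters` shortens training, and the `PolyLR` schedule follows automatically because its `end` is tied to the same variable.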

Create a custom dataset class

Reference: Add New Datasets (MMSegmentation 1.2.2 documentation)

Create a new file, mmseg/datasets/roadcracks.py:

    # Copyright (c) OpenMMLab. All rights reserved.
    import os.path as osp

    import mmengine.fileio as fileio

    from mmseg.registry import DATASETS
    from .basesegdataset import BaseSegDataset


    @DATASETS.register_module()
    class RoadCracksDataset(BaseSegDataset):
        """Road crack dataset stored in the Pascal VOC layout.

        Args:
            ann_file (str): Split txt file listing the sample names.
        """
        METAINFO = dict(
            classes=['background', 'crack'],
            palette=[[0, 0, 0], [128, 64, 128]])

        def __init__(self,
                     ann_file,
                     img_suffix='.jpg',
                     seg_map_suffix='.png',
                     **kwargs) -> None:
            super().__init__(
                img_suffix=img_suffix,
                seg_map_suffix=seg_map_suffix,
                ann_file=ann_file,
                **kwargs)
            assert fileio.exists(self.data_prefix['img_path'],
                                 self.backend_args) and osp.isfile(self.ann_file)

Then import the module in mmseg/datasets/__init__.py:

    # Copyright (c) OpenMMLab. All rights reserved.
    # yapf: disable
    from .ade import ADE20KDataset
    from .basesegdataset import BaseCDDataset, BaseSegDataset
    from .bdd100k import BDD100KDataset
    from .chase_db1 import ChaseDB1Dataset
    from .cityscapes import CityscapesDataset
    from .coco_stuff import COCOStuffDataset
    from .dark_zurich import DarkZurichDataset
    from .dataset_wrappers import MultiImageMixDataset
    from .decathlon import DecathlonDataset
    from .drive import DRIVEDataset
    from .dsdl import DSDLSegDataset
    from .hrf import HRFDataset
    from .isaid import iSAIDDataset
    from .isprs import ISPRSDataset
    from .levir import LEVIRCDDataset
    from .lip import LIPDataset
    from .loveda import LoveDADataset
    from .mapillary import MapillaryDataset_v1, MapillaryDataset_v2
    from .night_driving import NightDrivingDataset
    from .nyu import NYUDataset
    from .pascal_context import PascalContextDataset, PascalContextDataset59
    from .potsdam import PotsdamDataset
    from .refuge import REFUGEDataset
    from .stare import STAREDataset
    from .synapse import SynapseDataset
    # yapf: disable
    from .transforms import (CLAHE, AdjustGamma, Albu, BioMedical3DPad,
                             BioMedical3DRandomCrop, BioMedical3DRandomFlip,
                             BioMedicalGaussianBlur, BioMedicalGaussianNoise,
                             BioMedicalRandomGamma, ConcatCDInput, GenerateEdge,
                             LoadAnnotations, LoadBiomedicalAnnotation,
                             LoadBiomedicalData, LoadBiomedicalImageFromFile,
                             LoadImageFromNDArray, LoadMultipleRSImageFromFile,
                             LoadSingleRSImageFromFile, PackSegInputs,
                             PhotoMetricDistortion, RandomCrop, RandomCutOut,
                             RandomMosaic, RandomRotate, RandomRotFlip, Rerange,
                             ResizeShortestEdge, ResizeToMultiple, RGB2Gray,
                             SegRescale)
    from .voc import PascalVOCDataset
    from .roadcracks import RoadCracksDataset  #### newly added

    # yapf: enable
    __all__ = [
        'BaseSegDataset', 'BioMedical3DRandomCrop', 'BioMedical3DRandomFlip',
        'CityscapesDataset', 'PascalVOCDataset', 'ADE20KDataset',
        'PascalContextDataset', 'PascalContextDataset59', 'ChaseDB1Dataset',
        'DRIVEDataset', 'HRFDataset', 'STAREDataset', 'DarkZurichDataset',
        'NightDrivingDataset', 'COCOStuffDataset', 'LoveDADataset',
        'MultiImageMixDataset', 'iSAIDDataset', 'ISPRSDataset', 'PotsdamDataset',
        'LoadAnnotations', 'RandomCrop', 'SegRescale', 'PhotoMetricDistortion',
        'RandomRotate', 'AdjustGamma', 'CLAHE', 'Rerange', 'RGB2Gray',
        'RandomCutOut', 'RandomMosaic', 'PackSegInputs', 'ResizeToMultiple',
        'LoadImageFromNDArray', 'LoadBiomedicalImageFromFile',
        'LoadBiomedicalAnnotation', 'LoadBiomedicalData', 'GenerateEdge',
        'DecathlonDataset', 'LIPDataset', 'ResizeShortestEdge',
        'BioMedicalGaussianNoise', 'BioMedicalGaussianBlur',
        'BioMedicalRandomGamma', 'BioMedical3DPad', 'RandomRotFlip',
        'SynapseDataset', 'REFUGEDataset', 'MapillaryDataset_v1',
        'MapillaryDataset_v2', 'Albu', 'LEVIRCDDataset',
        'LoadMultipleRSImageFromFile', 'LoadSingleRSImageFromFile',
        'ConcatCDInput', 'BaseCDDataset', 'DSDLSegDataset', 'BDD100KDataset',
        'NYUDataset', 'RoadCracksDataset'
    ]
    ### The class name RoadCracksDataset is added here. It must match the class
    ### name in mmseg/datasets/roadcracks.py and the dataset_type value in
    ### road_cracks_512x512.py.

Add the class labels

In mmseg/utils/class_names.py, add the dataset meta information: define roadcracks_classes() and roadcracks_palette(), and extend dataset_aliases. Note: given how the code in that file works, the _classes() and _palette() suffixes must not be changed, and the prefix is best kept identical to the file name of mmseg/datasets/roadcracks.py.

    def roadcracks_classes():
        """Roadcracks class names for external use."""
        return [
            'background', 'crack'
        ]


    def roadcracks_palette():
        """Roadcracks palette for external use."""
        return [
            [0, 0, 0], [128, 64, 128]
        ]


    dataset_aliases = {
        'cityscapes': ['cityscapes'],
        'ade': ['ade', 'ade20k'],
        'voc': ['voc', 'pascal_voc', 'voc12', 'voc12aug'],
        'pcontext': ['pcontext', 'pascal_context', 'voc2010'],
        'loveda': ['loveda'],
        'potsdam': ['potsdam'],
        'vaihingen': ['vaihingen'],
        'cocostuff': [
            'cocostuff', 'cocostuff10k', 'cocostuff164k', 'coco-stuff',
            'coco-stuff10k', 'coco-stuff164k', 'coco_stuff', 'coco_stuff10k',
            'coco_stuff164k'
        ],
        'isaid': ['isaid', 'iSAID'],
        'stare': ['stare', 'STARE'],
        'lip': ['LIP', 'lip'],
        'mapillary_v1': ['mapillary_v1'],
        'mapillary_v2': ['mapillary_v2'],
        'bdd100k': ['bdd100k'],
        'roadcracks': ['roadcracks']  ## newly added
    }
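The reason the suffixes matter can be illustrated with a small sketch of a name-based lookup: an alias is mapped to its canonical dataset name, and the function to call is found by concatenating that name with the suffix. This is a simplified stand-in written for illustration, not the library's code; the helper `lookup` and the `_FUNCTIONS` table are hypothetical.

```python
def roadcracks_classes():
    """Class names, mirroring the function added to class_names.py."""
    return ['background', 'crack']


def roadcracks_palette():
    """RGB colours, mirroring the function added to class_names.py."""
    return [[0, 0, 0], [128, 64, 128]]


dataset_aliases = {'roadcracks': ['roadcracks']}

# Explicit name -> function table used by this sketch for the string lookup.
_FUNCTIONS = {f.__name__: f for f in (roadcracks_classes, roadcracks_palette)}


def lookup(dataset: str, suffix: str):
    """Resolve an alias to its canonical name, then call `<name><suffix>()`."""
    alias2name = {
        alias: name
        for name, aliases in dataset_aliases.items() for alias in aliases
    }
    if dataset not in alias2name:
        raise ValueError(f'Unrecognized dataset: {dataset}')
    return _FUNCTIONS[alias2name[dataset] + suffix]()


print(lookup('roadcracks', '_classes'))
print(lookup('roadcracks', '_palette'))
```

Renaming `roadcracks_classes` to anything that does not end in `_classes`, or using a prefix that differs from the key in `dataset_aliases`, would make the concatenated name miss and the lookup fail.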

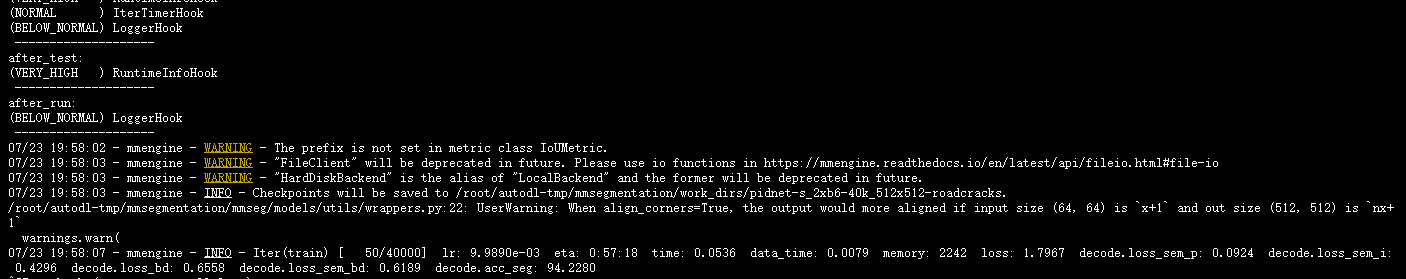

Train the model. By default, logs are saved under ./work_dirs.

    python tools/train.py configs/_my_base_/models/pidnet-s_2xb6-120k_512x512-roadcracks.py
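After a run, the work directory fills up with periodic checkpoints. The sketch below picks the most recent one. It assumes iteration-based checkpoints are saved as `iter_<N>.pth`, which is an assumption about the naming to verify against your own work directory; the function name `latest_iter_checkpoint` is hypothetical, and the demo uses empty placeholder files in a temporary directory.

```python
import re
import tempfile
from pathlib import Path
from typing import Optional


def latest_iter_checkpoint(work_dir) -> Optional[Path]:
    """Return the iter_<N>.pth file with the largest N, or None if there is none."""
    best, best_iter = None, -1
    for path in Path(work_dir).glob("iter_*.pth"):
        match = re.fullmatch(r"iter_(\d+)\.pth", path.name)
        # Compare numerically so that 108000 ranks above 24000.
        if match and int(match.group(1)) > best_iter:
            best, best_iter = path, int(match.group(1))
    return best


# Demo with empty placeholder files standing in for real checkpoints.
with tempfile.TemporaryDirectory() as tmp:
    for n in (12000, 24000, 108000):
        (Path(tmp) / f"iter_{n}.pth").touch()
    demo_latest = latest_iter_checkpoint(tmp).name
    demo_empty = latest_iter_checkpoint(Path(tmp) / "missing_subdir")

print(demo_latest)
```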

Common problems

RuntimeError: weight tensor should be defined either for all or no classes

Full traceback:

    Traceback (most recent call last):
      File "tools/train.py", line 104, in <module>
        main()
      File "tools/train.py", line 100, in main
        runner.train()
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/runner/runner.py", line 1777, in train
        model = self.train_loop.run() # type: ignore
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/runner/loops.py", line 287, in run
        self.run_iter(data_batch)
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/runner/loops.py", line 311, in run_iter
        outputs = self.runner.model.train_step(
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/model/base_model/base_model.py", line 114, in train_step
        losses = self._run_forward(data, mode='loss') # type: ignore
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/model/base_model/base_model.py", line 361, in _run_forward
        results = self(**data, mode=mode)
      File "/root/miniconda3/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
        return forward_call(*args, **kwargs)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/segmentors/base.py", line 94, in forward
        return self.loss(inputs, data_samples)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/segmentors/encoder_decoder.py", line 178, in loss
        loss_decode = self._decode_head_forward_train(x, data_samples)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/segmentors/encoder_decoder.py", line 139, in _decode_head_forward_train
        loss_decode = self.decode_head.loss(inputs, data_samples,
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/decode_heads/decode_head.py", line 262, in loss
        losses = self.loss_by_feat(seg_logits, batch_data_samples)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/decode_heads/pid_head.py", line 173, in loss_by_feat
        loss['loss_sem_p'] = self.loss_decode[0](
      File "/root/miniconda3/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
        return forward_call(*args, **kwargs)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/losses/cross_entropy_loss.py", line 286, in forward
        loss_cls = self.loss_weight * self.cls_criterion(
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/losses/cross_entropy_loss.py", line 45, in cross_entropy
        loss = F.cross_entropy(
      File "/root/miniconda3/lib/python3.8/site-packages/torch/nn/functional.py", line 3029, in cross_entropy
        return torch._C._nn.cross_entropy_loss(input, target, weight, _Reduction.get_enum(reduction), ignore_index, label_smoothing)
    RuntimeError: weight tensor should be defined either for all or no classes

Cause

The length of the class_weight list does not match num_classes. For example:

    class_weight = [ 1 ]
    #.....
    num_classes=2

Fix: make the two consistent.

    class_weight = [ 1,1 ]
    #.....
    num_classes=2
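The mismatch only surfaces once training reaches the first loss computation, so a cheap check before launching can catch it earlier. This is a minimal sketch; the function name `check_class_weight` is hypothetical and is not part of MMSegmentation.

```python
def check_class_weight(class_weight, num_classes: int) -> None:
    """Raise ValueError if class_weight is given but has the wrong length."""
    if class_weight is not None and len(class_weight) != num_classes:
        raise ValueError(
            f"class_weight has {len(class_weight)} entries "
            f"but num_classes is {num_classes}")


check_class_weight([1, 1], num_classes=2)  # consistent: passes silently
check_class_weight(None, num_classes=2)    # no weights at all is also fine

try:
    check_class_weight([1], num_classes=2)  # the failing case from above
except ValueError as err:
    print(err)
```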

RuntimeError: CUDA error: device-side assert triggered

Full traceback:

    Traceback (most recent call last):
      File "tools/train.py", line 104, in <module>
        main()
      File "tools/train.py", line 100, in main
        runner.train()
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/runner/runner.py", line 1777, in train
        model = self.train_loop.run() # type: ignore
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/runner/loops.py", line 287, in run
        self.run_iter(data_batch)
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/runner/loops.py", line 311, in run_iter
        outputs = self.runner.model.train_step(
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/model/base_model/base_model.py", line 114, in train_step
        losses = self._run_forward(data, mode='loss') # type: ignore
      File "/root/miniconda3/lib/python3.8/site-packages/mmengine/model/base_model/base_model.py", line 361, in _run_forward
        results = self(**data, mode=mode)
      File "/root/miniconda3/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
        return forward_call(*args, **kwargs)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/segmentors/base.py", line 94, in forward
        return self.loss(inputs, data_samples)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/segmentors/encoder_decoder.py", line 178, in loss
        loss_decode = self._decode_head_forward_train(x, data_samples)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/segmentors/encoder_decoder.py", line 139, in _decode_head_forward_train
        loss_decode = self.decode_head.loss(inputs, data_samples,
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/decode_heads/decode_head.py", line 262, in loss
        losses = self.loss_by_feat(seg_logits, batch_data_samples)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/decode_heads/pid_head.py", line 175, in loss_by_feat
        loss['loss_sem_i'] = self.loss_decode[1](i_logit, sem_label)
      File "/root/miniconda3/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1501, in _call_impl
        return forward_call(*args, **kwargs)
      File "/root/autodl-tmp/mmsegmentation/mmseg/models/losses/ohem_cross_entropy_loss.py", line 64, in forward
        class_weight = score.new_tensor(self.class_weight)
    RuntimeError: CUDA error: device-side assert triggered
    CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.
    For debugging consider passing CUDA_LAUNCH_BLOCKING=1.
    Compile with `TORCH_USE_CUDA_DSA` to enable device-side assertions.

Cause

The traceback points at ohem_cross_entropy_loss.py, although the loss function itself is not at fault.

Solution

Remove the class_weight entries from loss_decode in the model config. Before:

    loss_decode=[
        dict(
            type='CrossEntropyLoss',
            use_sigmoid=False,
            class_weight=class_weight,
            loss_weight=0.4),
        dict(
            type='OhemCrossEntropy',
            thres=0.9,
            min_kept=131072,
            class_weight=class_weight,
            loss_weight=1.0),
        dict(type='BoundaryLoss', loss_weight=20.0),
        dict(
            type='OhemCrossEntropy',
            thres=0.9,
            min_kept=131072,
            class_weight=class_weight,
            loss_weight=1.0)
    ]),

After:

    loss_decode=[
        dict(
            type='CrossEntropyLoss',
            use_sigmoid=False,
            loss_weight=0.4),
        dict(
            type='OhemCrossEntropy',
            thres=0.9,
            min_kept=131072,
            loss_weight=1.0),
        dict(type='BoundaryLoss', loss_weight=20.0),
        dict(
            type='OhemCrossEntropy',
            thres=0.9,
            min_kept=131072,
            loss_weight=1.0)
    ]),

Modify mmsegmentation/mmseg/models/losses/cross_entropy_loss.py by commenting out part of the code. Before:

    if (avg_factor is None) and reduction == 'mean':
        if class_weight is None:
            if avg_non_ignore:
                avg_factor = label.numel() - (label
                                              == ignore_index).sum().item()
            else:
                avg_factor = label.numel()

        else:
            # the average factor should take the class weights into account
            label_weights = torch.stack([class_weight[cls] for cls in label
                                         ]).to(device=class_weight.device)

            if avg_non_ignore:
                label_weights[label == ignore_index] = 0
            avg_factor = label_weights.sum()

    if weight is not None:
        weight = weight.float()
    loss = weight_reduce_loss(
        loss, weight=weight, reduction=reduction, avg_factor=avg_factor)

    return loss

After:

    if (avg_factor is None) and reduction == 'mean':
        if class_weight is None:
            if avg_non_ignore:
                avg_factor = label.numel() - (label
                                              == ignore_index).sum().item()
            else:
                avg_factor = label.numel()

        # else:
        #     # the average factor should take the class weights into account
        #     label_weights = torch.stack([class_weight[cls] for cls in label
        #                                  ]).to(device=class_weight.device)

        #     if avg_non_ignore:
        #         label_weights[label == ignore_index] = 0
        #     avg_factor = label_weights.sum()

    if weight is not None:
        weight = weight.float()
    loss = weight_reduce_loss(
        loss, weight=weight, reduction=reduction, avg_factor=avg_factor)

    return loss
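Independently of the fix above, a device-side assert inside a segmentation loss is also commonly triggered by label values that fall outside the range [0, num_classes), for example a binary mask stored as 0 and 255 or as 0 and 128. Whether that applied to this dataset is not established here, so the sketch below is only a diagnostic aid. The function name `unexpected_label_values` is hypothetical, and treating 255 as the ignore index is an assumption based on `seg_pad_val=255` in the config.

```python
def unexpected_label_values(values, num_classes: int, ignore_index: int = 255):
    """Return the sorted label values that are neither a class id nor ignore_index."""
    allowed = set(range(num_classes)) | {ignore_index}
    return sorted(set(values) - allowed)


# Made-up pixel values standing in for one flattened annotation mask.
demo_pixels = [0, 0, 1, 255, 128, 1]
demo_bad = unexpected_label_values(demo_pixels, num_classes=2)
print(demo_bad)
```

In practice, `values` would be the flattened pixel values of each PNG under SegmentationClass, loaded with whatever image library is at hand; an empty result for every mask rules this cause out.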
