
1. YOLOv8 object detection

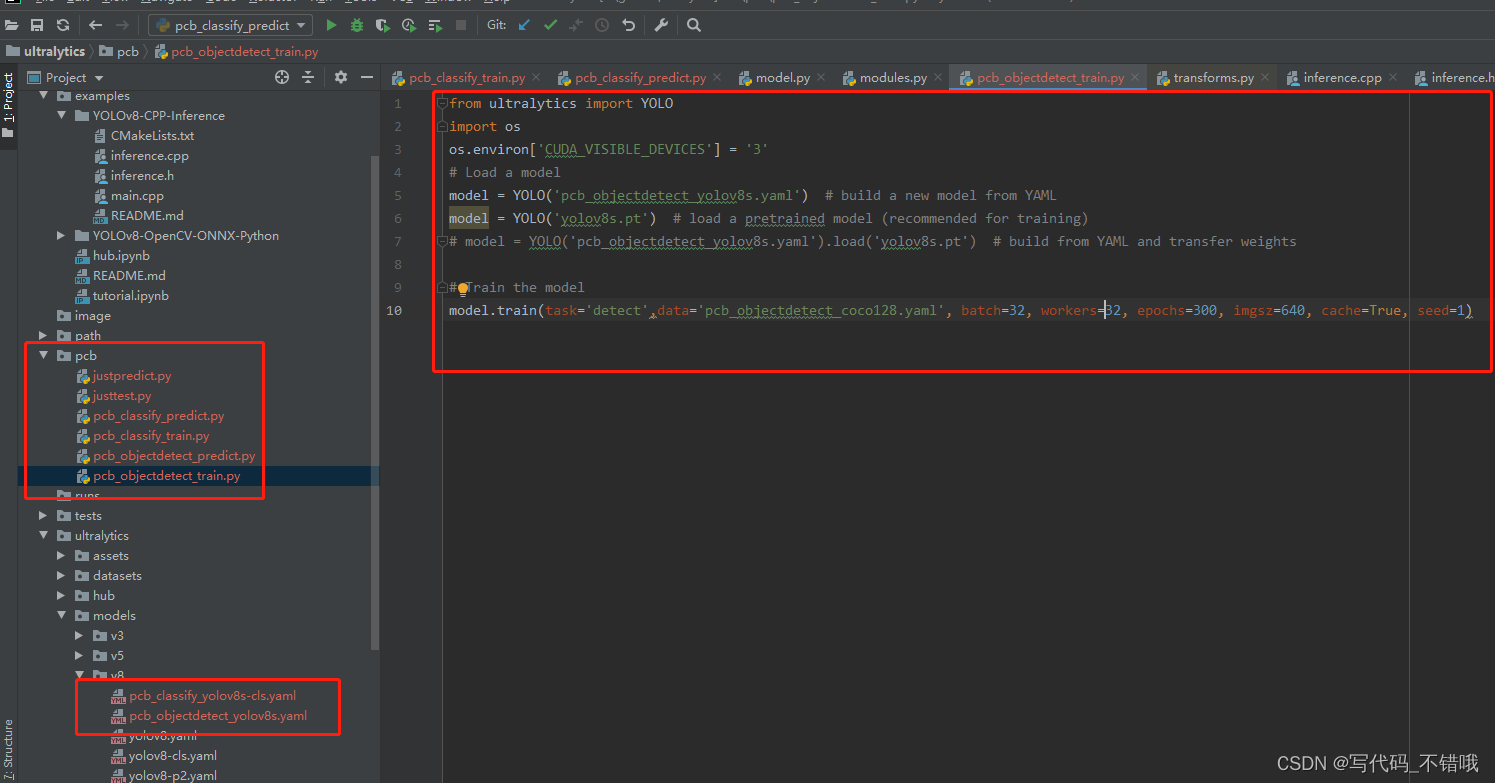

1. For object detection, train directly with the official code (version 8.0.81). Training produces a `.pt` file.

```python
from ultralytics import YOLO
import os

os.environ['CUDA_VISIBLE_DEVICES'] = '3'

# Load a model (each option replaces `model`, so keep only one)
# model = YOLO('pcb_objectdetect_yolov8s.yaml')  # build a new model from YAML
model = YOLO('yolov8s.pt')  # load a pretrained model (recommended for training)
# model = YOLO('pcb_objectdetect_yolov8s.yaml').load('yolov8s.pt')  # build from YAML and transfer weights

# Train the model
model.train(task='detect', data='pcb_objectdetect_coco128.yaml', batch=32, workers=32, epochs=300, imgsz=640, cache=True, seed=1)
```

First check that the trained model works. If it does, convert it with the official export.py. Following the reference, comment out lines 669-486 (the original `forward` of the `Detect` head) and replace them with the code below, then export in TorchScript format:

```python
# Original Detect.forward, commented out:
# def forward(self, x):
#     shape = x[0].shape  # BCHW
#     for i in range(self.nl):
#         x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
#     if self.training:
#         return x
#     elif self.dynamic or self.shape != shape:
#         self.anchors, self.strides = (x.transpose(0, 1) for x in make_anchors(x, self.stride, 0.5))
#         self.shape = shape
#
#     box, cls = torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2).split((self.reg_max * 4, self.nc), 1)
#     dbox = dist2bbox(self.dfl(box), self.anchors.unsqueeze(0), xywh=True, dim=1) * self.strides
#     y = torch.cat((dbox, cls.sigmoid()), 1)
#     return y if self.export else (y, x)

# Replacement, for export only (restore the original forward before training again):
def forward(self, x):
    z = []  # inference output
    for i in range(self.nl):
        # cv2[i]: box branch (4 * reg_max DFL logits); cv3[i]: class branch
        boxes = self.cv2[i](x[i]).permute(0, 2, 3, 1)  # BCHW -> BHWC
        scores = self.cv3[i](x[i]).sigmoid().permute(0, 2, 3, 1)
        feat = torch.cat((boxes, scores), -1)  # (B, H, W, 4 * reg_max + nc)
        z.append(feat)
    return tuple(z)
```

2. Next, use pnnx (linked from the ncnn GitHub) and follow the instructions in GitHub - pnnx/pnnx: PyTorch Neural Network eXchange to convert the TorchScript file into ncnn `.param` and `.bin` files, for example `pnnx yolov8s.torchscript inputshape=[1,3,640,640]`, which writes `yolov8s.ncnn.param` and `yolov8s.ncnn.bin`.
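With the modified `forward`, each stride level exports a tensor of shape (1, H, W, 4 × 16 + nc): 64 raw DFL logits (left, top, right, bottom, 16 bins each) followed by the sigmoid class scores. The box decoding that `make_anchors` and `dist2bbox` used to do inside the graph therefore moves into C++, and the letterbox applied before inference has to be undone afterwards. The stdlib-only sketch below spells out both pieces of arithmetic as the test program below performs them, assuming reg_max = 16, a 640 target size and a maximum stride of 32; the helper names (`dfl_expectation`, `decode_cell`, `letterbox_params`, `to_original`) are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Expected value of the softmax distribution over `bins` DFL logits,
// i.e. sum_i i * softmax(logits)_i: one box-side distance in grid units.
inline float dfl_expectation(const float* logits, int bins)
{
    assert(bins > 0);
    const float max_logit = *std::max_element(logits, logits + bins);
    std::vector<float> p(bins);
    float denom = 0.f;
    for (int i = 0; i < bins; ++i)
    {
        p[i] = std::exp(logits[i] - max_logit);  // subtract max for stability
        denom += p[i];
    }
    float expectation = 0.f;
    for (int i = 0; i < bins; ++i)
        expectation += i * (p[i] / denom);
    return expectation;
}

struct Box { float x0, y0, x1, y1; };

// Decode one grid cell (row i, column j) of one stride level.
// `cell` points at 4 * reg_max logits ordered left, top, right, bottom.
inline Box decode_cell(const float* cell, int i, int j, int stride, int reg_max = 16)
{
    const float cx = j + 0.5f;  // anchor centre in grid units (offset 0.5)
    const float cy = i + 0.5f;
    Box b;
    b.x0 = (cx - dfl_expectation(cell, reg_max)) * stride;
    b.y0 = (cy - dfl_expectation(cell + reg_max, reg_max)) * stride;
    b.x1 = (cx + dfl_expectation(cell + 2 * reg_max, reg_max)) * stride;
    b.y1 = (cy + dfl_expectation(cell + 3 * reg_max, reg_max)) * stride;
    return b;
}

// Resize-then-pad parameters, computed the same way as in detect_yolov8().
struct Letterbox { float scale; int w, h, pad_left, pad_top; };

inline Letterbox letterbox_params(int img_w, int img_h, int target_size = 640, int max_stride = 32)
{
    Letterbox lb;
    if (img_w > img_h)
    {
        lb.scale = (float)target_size / img_w;
        lb.w = target_size;
        lb.h = (int)(img_h * lb.scale);
    }
    else
    {
        lb.scale = (float)target_size / img_h;
        lb.h = target_size;
        lb.w = (int)(img_w * lb.scale);
    }
    // pad each side up to the next multiple of max_stride, split evenly
    const int wpad = (lb.w + max_stride - 1) / max_stride * max_stride - lb.w;
    const int hpad = (lb.h + max_stride - 1) / max_stride * max_stride - lb.h;
    lb.pad_left = wpad / 2;
    lb.pad_top = hpad / 2;
    return lb;
}

// Map a box from padded network-input pixels back to original-image pixels.
inline Box to_original(const Box& b, const Letterbox& lb)
{
    return { (b.x0 - lb.pad_left) / lb.scale, (b.y0 - lb.pad_top) / lb.scale,
             (b.x1 - lb.pad_left) / lb.scale, (b.y1 - lb.pad_top) / lb.scale };
}
```

Applied to every cell whose best class score passes `prob_threshold` at strides 8, 16 and 32, then followed by NMS and `to_original`, this is the pipeline `detect_yolov8` runs; clamping to the image bounds is left out here for brevity.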

3. Then test it with the code from the reference:

```cpp
#include "layer.h"
#include "net.h"

#include "opencv2/opencv.hpp"

#include <float.h>
#include <math.h>
#include <stdio.h>
#include <vector>

#define MAX_STRIDE 32 // if yolov8-p6 model modify to 64

struct Object
{
    cv::Rect_<float> rect;
    int label;
    float prob;
};

// Despite its name, this returns the expected value of the softmax distribution
// over `length` DFL bins, i.e. one box-side distance in grid units.
static float softmax(
    const float* src,
    float* dst,
    int length
)
{
    float alpha = -FLT_MAX;
    for (int c = 0; c < length; c++)
    {
        float score = src[c];
        if (score > alpha)
        {
            alpha = score;
        }
    }

    float denominator = 0;
    float dis_sum = 0;
    for (int i = 0; i < length; ++i)
    {
        dst[i] = expf(src[i] - alpha);
        denominator += dst[i];
    }
    for (int i = 0; i < length; ++i)
    {
        dst[i] /= denominator;
        dis_sum += i * dst[i];
    }
    return dis_sum;
}

// feat_blob layout after pnnx: c = grid rows, h = grid columns,
// w = 4 * reg_max box logits followed by num_class sigmoid scores.
static void generate_proposals(
    int stride,
    const ncnn::Mat& feat_blob,
    const float prob_threshold,
    std::vector<Object>& objects
)
{
    const int reg_max = 16;
    float dst[16];
    const int num_w = feat_blob.w;
    const int num_grid_y = feat_blob.c;
    const int num_grid_x = feat_blob.h;
    const int num_class = num_w - 4 * reg_max;

    for (int i = 0; i < num_grid_y; i++)
    {
        for (int j = 0; j < num_grid_x; j++)
        {
            const float* matat = feat_blob.channel(i).row(j);

            // best class for this grid cell
            int class_index = 0;
            float class_score = -FLT_MAX;
            for (int c = 0; c < num_class; c++)
            {
                float score = matat[4 * reg_max + c];
                if (score > class_score)
                {
                    class_index = c;
                    class_score = score;
                }
            }

            if (class_score >= prob_threshold)
            {
                // left, top, right, bottom distances from the cell centre
                float x0 = j + 0.5f - softmax(matat, dst, reg_max);
                float y0 = i + 0.5f - softmax(matat + reg_max, dst, reg_max);
                float x1 = j + 0.5f + softmax(matat + 2 * reg_max, dst, reg_max);
                float y1 = i + 0.5f + softmax(matat + 3 * reg_max, dst, reg_max);

                x0 *= stride;
                y0 *= stride;
                x1 *= stride;
                y1 *= stride;

                Object obj;
                obj.rect.x = x0;
                obj.rect.y = y0;
                obj.rect.width = x1 - x0;
                obj.rect.height = y1 - y0;
                obj.label = class_index;
                obj.prob = class_score;
                objects.push_back(obj);
            }
        }
    }
}

static float clamp(
    float val,
    float min = 0.f,
    float max = 1280.f
)
{
    return val > min ? (val < max ? val : max) : min;
}

static void non_max_suppression(
    std::vector<Object>& proposals,
    std::vector<Object>& results,
    int orin_h,
    int orin_w,
    float dh = 0,
    float dw = 0,
    float ratio_h = 1.0f,
    float ratio_w = 1.0f,
    float conf_thres = 0.25f,
    float iou_thres = 0.65f
)
{
    results.clear();
    std::vector<cv::Rect> bboxes;
    std::vector<float> scores;
    std::vector<int> labels;
    std::vector<int> indices;

    for (auto& pro : proposals)
    {
        bboxes.push_back(pro.rect);
        scores.push_back(pro.prob);
        labels.push_back(pro.label);
    }

    // class-agnostic NMS over all proposals
    cv::dnn::NMSBoxes(
        bboxes,
        scores,
        conf_thres,
        iou_thres,
        indices
    );

    for (auto i : indices)
    {
        auto& bbox = bboxes[i];
        float x0 = bbox.x;
        float y0 = bbox.y;
        float x1 = bbox.x + bbox.width;
        float y1 = bbox.y + bbox.height;
        float& score = scores[i];
        int& label = labels[i];

        // undo letterbox padding and scaling, then clip to the original image
        x0 = (x0 - dw) / ratio_w;
        y0 = (y0 - dh) / ratio_h;
        x1 = (x1 - dw) / ratio_w;
        y1 = (y1 - dh) / ratio_h;

        x0 = clamp(x0, 0.f, orin_w);
        y0 = clamp(y0, 0.f, orin_h);
        x1 = clamp(x1, 0.f, orin_w);
        y1 = clamp(y1, 0.f, orin_h);

        Object obj;
        obj.rect.x = x0;
        obj.rect.y = y0;
        obj.rect.width = x1 - x0;
        obj.rect.height = y1 - y0;
        obj.prob = score;
        obj.label = label;
        results.push_back(obj);
    }
}

static int detect_yolov8(const cv::Mat& bgr, std::vector<Object>& objects)
{
    ncnn::Net yolov8;

    yolov8.opt.use_vulkan_compute = true;
    // yolov8.opt.use_bf16_storage = true;

    // original pretrained model from https://github.com/ultralytics/ultralytics
    // the ncnn model https://github.com/nihui/ncnn-assets/tree/master/models
    if (yolov8.load_param("yolov8s.ncnn.param"))
        exit(-1);
    if (yolov8.load_model("yolov8s.ncnn.bin"))
        exit(-1);

    const int target_size = 640;
    const float prob_threshold = 0.25f;
    const float nms_threshold = 0.45f;

    int img_w = bgr.cols;
    int img_h = bgr.rows;

    // letterbox: scale the longer side to target_size
    int w = img_w;
    int h = img_h;
    float scale = 1.f;
    if (w > h)
    {
        scale = (float)target_size / w;
        w = target_size;
        h = h * scale;
    }
    else
    {
        scale = (float)target_size / h;
        h = target_size;
        w = w * scale;
    }

    ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, img_w, img_h, w, h);

    // pad each side up to a multiple of MAX_STRIDE (rectangular letterbox)
    // ultralytics/yolo/data/dataloaders/v5augmentations.py letterbox
    int wpad = (w + MAX_STRIDE - 1) / MAX_STRIDE * MAX_STRIDE - w;
    int hpad = (h + MAX_STRIDE - 1) / MAX_STRIDE * MAX_STRIDE - h;
    int top = hpad / 2;
    int bottom = hpad - hpad / 2;
    int left = wpad / 2;
    int right = wpad - wpad / 2;

    ncnn::Mat in_pad;
    ncnn::copy_make_border(in,
                           in_pad,
                           top,
                           bottom,
                           left,
                           right,
                           ncnn::BORDER_CONSTANT,
                           114.f);

    const float norm_vals[3] = { 1 / 255.f, 1 / 255.f, 1 / 255.f };
    in_pad.substract_mean_normalize(0, norm_vals);

    ncnn::Extractor ex = yolov8.create_extractor();
    ex.input("in0", in_pad);

    std::vector<Object> proposals;

    // stride 8
    {
        ncnn::Mat out;
        ex.extract("out0", out);
        std::vector<Object> objects8;
        generate_proposals(8, out, prob_threshold, objects8);
        proposals.insert(proposals.end(), objects8.begin(), objects8.end());
    }

    // stride 16
    {
        ncnn::Mat out;
        ex.extract("out1", out);
        std::vector<Object> objects16;
        generate_proposals(16, out, prob_threshold, objects16);
        proposals.insert(proposals.end(), objects16.begin(), objects16.end());
    }

    // stride 32
    {
        ncnn::Mat out;
        ex.extract("out2", out);
        std::vector<Object> objects32;
        generate_proposals(32, out, prob_threshold, objects32);
        proposals.insert(proposals.end(), objects32.begin(), objects32.end());
    }

    non_max_suppression(proposals, objects,
                        img_h, img_w, hpad / 2, wpad / 2,
                        scale, scale, prob_threshold, nms_threshold);

    return 0;
}

static void draw_objects(const cv::Mat& bgr, const std::vector<Object>& objects)
{
    // COCO class names from the reference; replace them with the classes in your
    // own dataset YAML (a label beyond this list would index out of bounds)
    static const char* class_names[] = {
        "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
        "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
        "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
        "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
        "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
        "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
        "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
        "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
        "hair drier", "toothbrush"
    };

    cv::Mat image = bgr.clone();

    for (size_t i = 0; i < objects.size(); i++)
    {
        const Object& obj = objects[i];

        fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob,
                obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

        cv::rectangle(image, obj.rect, cv::Scalar(255, 0, 0));

        char text[256];
        sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

        int baseLine = 0;
        cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

        int x = obj.rect.x;
        int y = obj.rect.y - label_size.height - baseLine;
        if (y < 0)
            y = 0;
        if (x + label_size.width > image.cols)
            x = image.cols - label_size.width;

        cv::rectangle(image, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)),
                      cv::Scalar(255, 255, 255), -1);

        cv::putText(image, text, cv::Point(x, y + label_size.height),
                    cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
    }

    cv::imshow("image", image);
    cv::waitKey(0);
}

int main(int argc, char** argv)
{
    if (argc != 2)
    {
        fprintf(stderr, "Usage: %s [imagepath]\n", argv[0]);
        return -1;
    }

    const char* imagepath = argv[1];

    cv::Mat m = cv::imread(imagepath, 1);
    if (m.empty())
    {
        fprintf(stderr, "cv::imread %s failed\n", imagepath);
        return -1;
    }

    std::vector<Object> objects;
    detect_yolov8(m, objects);

    draw_objects(m, objects);

    return 0;
}
```

2. Classification

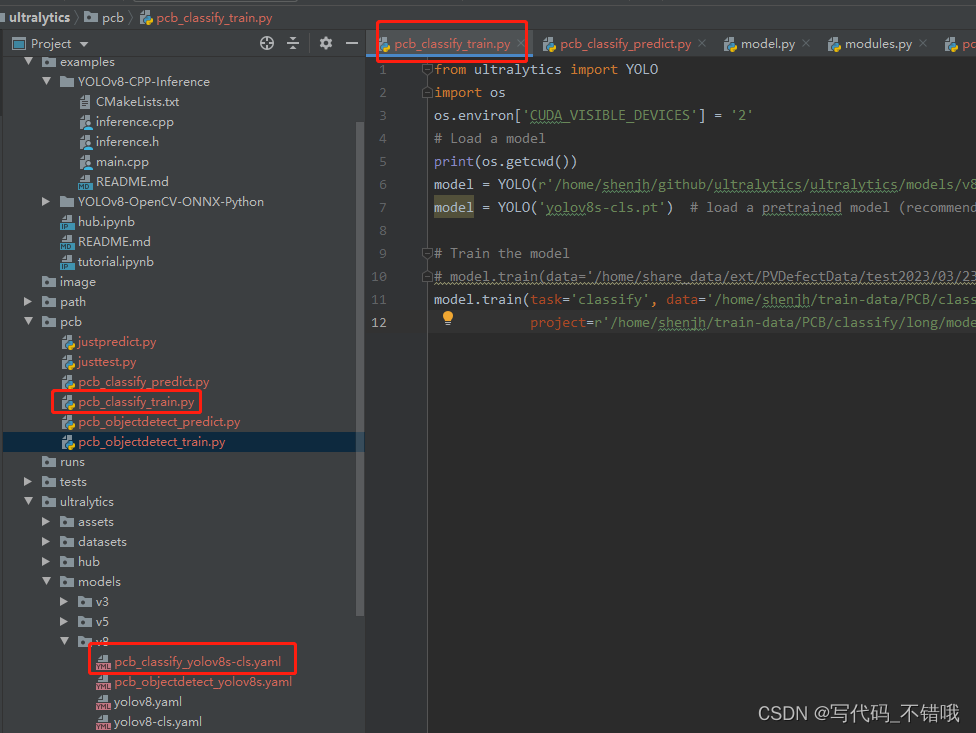

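1. Training the classifier also follows the official example. I created a PCB folder and put the classification training script in it, with the following content: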

```python
from ultralytics import YOLO
import os

os.environ['CUDA_VISIBLE_DEVICES'] = '2'

# Load a model (each option replaces `model`, so keep only one)
print(os.getcwd())
# model = YOLO(r'/home/shenjh/github/ultralytics/ultralytics/models/v8/pcb_classify_yolov8s-cls.yaml')  # build a new model from YAML
model = YOLO('yolov8s-cls.pt')  # load a pretrained model (recommended for training)

# Train the model
# model.train(data='/home/share_data/ext/PVDefectData/test2023/03/23/pcb/pcbclassify/long/', batch=32, workers=32, epochs=100, imgsz=224, cache=True, seed=2023)
model.train(task='classify', data='/home/shenjh/train-data/PCB/classify/long/', batch=32, workers=32, epochs=100, imgsz=224, cache=True, seed=2023,
            project=r'/home/shenjh/train-data/PCB/classify/long/models/')
```

2. Next, check the trained model. A quick way is to run it on the NG and OK training images and see whether the results are correct:

```python
from ultralytics import YOLO

model = YOLO("/home/shenjh/train-data/PCB/classify/long/models/train/weights/best.pt")
model.predict(task='classify', source='/home/share_data/ext/PVDefectData/test2023/04/23/long/NG/', imgsz=224)
```

3. If that looks right, convert the model to ONNX with the official export.py as shown below. I set `opset=9`, but I no longer remember where I read that it should be 9:

```python
from ultralytics import YOLO

model = YOLO("/home/shenjh/train-data/PCB/classify/long/models/train/weights/best.pt")
model.export(format="onnx", opset=9)
```

4. Then test the ONNX model as well:

```python
from ultralytics import YOLO

model = YOLO("/home/shenjh/train-data/PCB/classify/long/models/train/weights/best.onnx")
model.predict(task='classify', source='/home/share_data/ext/PVDefectData/test2023/04/23/long/NG/', imgsz=224)
```

5. If that works, load and test the ONNX model from C++ as below. This follows reference 3, except that I commented out the Normalize block and changed the original `cv::dnn::blobFromImage(crop_image, blob, 1, cv::Size(crop_image.cols, crop_image.rows), cv::Scalar(), true, false);` to `cv::dnn::blobFromImage(crop_image, blob, 1.0 / 255.0, cv::Size(crop_image.cols, crop_image.rows), cv::Scalar(), true, false);`, so the pixels are only scaled to [0, 1] without mean/std normalization.

```cpp
#include <iostream>
#include <fstream>
#include <string>
#include <vector>
#include <algorithm>
#include <ctime>
#include <opencv2/opencv.hpp>
#include <io.h>  // _findfirst / _findnext (Windows only)

// Preprocessing: centre crop to a square, resize, scale to [0, 1], BGR -> RGB
void pre_process(cv::Mat& image, cv::Mat& blob, int INPUT_WIDTH = 224, int INPUT_HEIGHT = 224)
{
    // CenterCrop
    int crop_size = std::min(image.cols, image.rows);
    int left = (image.cols - crop_size) / 2, top = (image.rows - crop_size) / 2;
    cv::Mat crop_image = image(cv::Rect(left, top, crop_size, crop_size));
    cv::resize(crop_image, crop_image, cv::Size(INPUT_WIDTH, INPUT_HEIGHT));

    // Normalize with ImageNet mean/std (from the reference, disabled here)
    //crop_image.convertTo(crop_image, CV_32FC3, 1. / 255.);
    //cv::subtract(crop_image, cv::Scalar(0.406, 0.456, 0.485), crop_image);
    //cv::divide(crop_image, cv::Scalar(0.225, 0.224, 0.229), crop_image);

    cv::dnn::blobFromImage(crop_image, blob, 1.0 / 255.0, cv::Size(crop_image.cols, crop_image.rows), cv::Scalar(), true, false);
}

// Inference
void process(cv::Mat& blob, cv::dnn::Net& net, std::vector<cv::Mat>& outputs)
{
    net.setInput(blob);
    net.forward(outputs, net.getUnconnectedOutLayersNames());
}

// Postprocessing: name of the class with the highest score
std::string post_process(std::vector<cv::Mat>& detections, std::vector<std::string>& class_name)
{
    std::vector<float> values;
    for (int i = 0; i < detections[0].cols; i++)
    {
        values.push_back(detections[0].at<float>(0, i));
    }
    int id = std::distance(values.begin(), std::max_element(values.begin(), values.end()));
    return class_name[id];
}

int main(int argc, char** argv)
{
    // Constants
    const int INPUT_WIDTH = 224;
    const int INPUT_HEIGHT = 224;
    std::ifstream ifs("Z:/temp/longlast.txt");  // class names, one per line
    std::string imgRoot = "Z:/temp/long/NG";
    cv::dnn::Net net = cv::dnn::readNet("Z:/temp/202304231600/long.onnx");

    std::vector<std::string> class_name;
    std::string line;
    while (getline(ifs, line))
    {
        class_name.push_back(line);
    }

    _finddata64i32_t fileInfo;
    intptr_t hFile = _findfirst((imgRoot + "\\*.bmp").c_str(), &fileInfo);
    if (hFile == -1) {
        std::cout << "no .bmp image found!\n";
        return -1;
    }

    static int count = 0;
    do
    {
        std::string imgPath = imgRoot + "/" + std::string(fileInfo.name);
        cv::Mat image = cv::imread(imgPath), blob;
        if (image.empty())
            continue;  // unreadable file: go on to the next one

        clock_t start = clock();
        pre_process(image, blob, INPUT_WIDTH, INPUT_HEIGHT);
        std::vector<cv::Mat> outputs;
        process(blob, net, outputs);
        std::string ret = post_process(outputs, class_name);
        clock_t ends = clock();

        std::cout << fileInfo.name << " pcb_time: " << (double)(ends - start) / CLOCKS_PER_SEC << " ret: " << ret << std::endl;
        count++;
    } while (_findnext(hFile, &fileInfo) == 0);
    _findclose(hFile);

    return 0;
}
```
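`pre_process` reproduces the classification preprocessing by hand: a square centre crop of side min(w, h), a resize to 224 × 224, then `blobFromImage` with scale 1/255, no mean subtraction and `swapRB = true`, which yields a planar RGB float blob in [0, 1]. Dropping the ImageNet mean/std block is consistent with `classify_transforms` in the ultralytics 8.0.x sources, which appears to default to mean 0 and std 1; that is worth checking against the installed version. The stdlib-only sketch below spells out the crop geometry and the per-pixel transform for an image that has already been resized; the names `center_crop_rect` and `bgr_hwc_to_rgb_chw` are hypothetical, and the resize itself is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Square centre-crop rectangle, as computed in pre_process().
struct CropRect { int left, top, size; };

inline CropRect center_crop_rect(int img_w, int img_h)
{
    assert(img_w > 0 && img_h > 0);
    const int size = std::min(img_w, img_h);
    return { (img_w - size) / 2, (img_h - size) / 2, size };
}

// What blobFromImage(img, blob, 1.0 / 255.0, size, cv::Scalar(), true, false)
// does to an already-resized 8-bit BGR image: interleaved HWC BGR bytes become
// planar CHW RGB floats in [0, 1]; no mean or std is applied.
inline std::vector<float> bgr_hwc_to_rgb_chw(const std::vector<std::uint8_t>& bgr, int w, int h)
{
    const std::size_t plane = static_cast<std::size_t>(w) * h;
    assert(bgr.size() == plane * 3);
    std::vector<float> chw(plane * 3);
    for (std::size_t p = 0; p < plane; ++p)
        for (std::size_t c = 0; c < 3; ++c)
            // swapRB: output plane 0 (R) reads BGR channel 2, plane 2 (B) reads channel 0
            chw[c * plane + p] = bgr[p * 3 + (2 - c)] / 255.0f;
    return chw;
}
```

If the ncnn or ONNX results disagree with the Python `predict` output on non-square images, comparing the crop rectangle and the channel order against this sketch is a cheap first check.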

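6. Once that test passes, convert the `.onnx` file to ncnn format with the onnx2ncnn tool that ships with ncnn, for example `onnx2ncnn long.onnx long.param long.bin`. I used the ncnn-20230223-windows-vs2019 release.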

7. Test the ncnn model with C++:

```cpp
#include <float.h>
#include <stdio.h>
#include <algorithm>
#include <ctime>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>
#include <io.h>  // _findfirst / _findnext (Windows only)

#include "opencv2/opencv.hpp"
#include "layer.h"
#include "net.h"

bool _long_load_model = false;
int _long_target_size;
std::string _long_param_path;
std::string _long_bin_path;

// Load the network on the first call and reuse it afterwards
void* pcb_classify_get_net(std::string& param_path, std::string& bin_path, int target_size)
{
    static ncnn::Net long_ptr;
    if (!_long_load_model)
    {
        _long_target_size = target_size;
        _long_load_model = true;
        long_ptr.opt.use_vulkan_compute = false;
        int ret_long_para = long_ptr.load_param(param_path.c_str());
        if (ret_long_para != 0)
            exit(-1);
        int ret_long_bin = long_ptr.load_model(bin_path.c_str());
        if (ret_long_bin != 0)
            exit(-1);
    }
    return &long_ptr;
}

// Returns the index of the predicted class
int pcb_classify_run(const cv::Mat& bgr, ncnn::Net* net)
{
    // Centre crop to a square before resizing, matching pre_process() in the ONNX test
    const int crop_size = std::min(bgr.cols, bgr.rows);
    const int roi_x = (bgr.cols - crop_size) / 2;
    const int roi_y = (bgr.rows - crop_size) / 2;
    ncnn::Mat in_pad = ncnn::Mat::from_pixels_roi_resize(bgr.data, ncnn::Mat::PIXEL_BGR2RGB, bgr.cols, bgr.rows,
                                                         roi_x, roi_y, crop_size, crop_size,
                                                         _long_target_size, _long_target_size);
    const float norm_vals[3] = { 1 / 255.f, 1 / 255.f, 1 / 255.f };
    in_pad.substract_mean_normalize(0, norm_vals);

    ncnn::Extractor ex = net->create_extractor();
    ex.input("images", in_pad);
    ncnn::Mat out;
    ex.extract("output0", out);

    // An extra Softmax layer is not needed: the YOLOv8 Classify head already applies
    // softmax outside training, so the exported output holds probabilities, and only
    // the argmax is used below. For a model without softmax, re-enable this block:
    // {
    //     ncnn::Layer* softmax = ncnn::create_layer("Softmax");
    //     ncnn::ParamDict pd;
    //     softmax->load_param(pd);
    //     softmax->forward_inplace(out, net->opt);
    //     delete softmax;
    // }

    out = out.reshape(out.h * out.w * out.c);

    // copy the scores out of the ncnn::Mat
    std::vector<float> predicted_scores(out.w);
    for (int j = 0; j < out.w; j++)
    {
        predicted_scores[j] = out[j];
    }

    int maxIndex = (int)(std::max_element(predicted_scores.begin(),
                                          predicted_scores.end()) - predicted_scores.begin());
    return maxIndex;
}

int main()
{
    std::vector<std::string> class_name;
    std::ifstream ifs("Z:/temp/longlast.txt");  // class names, one per line
    std::string line;
    std::string imgRoot = "Z:/temp/long/OK";
    std::string param_path = "Z:/temp/202304231600/long.param";
    std::string bin_path = "Z:/temp/202304231600/long.bin";
    int target_size = 224;

    while (getline(ifs, line))
    {
        class_name.push_back(line);
    }

    void* ptr = pcb_classify_get_net(param_path, bin_path, target_size);

    _finddata64i32_t fileInfo;
    intptr_t hFile = _findfirst((imgRoot + "\\*.bmp").c_str(), &fileInfo);
    if (hFile == -1) {
        std::cout << "no .bmp image found!\n";
        return -1;
    }

    static int count = 0;
    do
    {
        std::string imgPath = imgRoot + "/" + std::string(fileInfo.name);
        cv::Mat image = cv::imread(imgPath);
        if (image.empty())
            continue;  // unreadable file: go on to the next one

        clock_t start = clock();
        int ret = pcb_classify_run(image, (ncnn::Net*)ptr);
        clock_t ends = clock();

        std::cout << fileInfo.name << " pcb_time: " << (double)(ends - start) / CLOCKS_PER_SEC << " ret: " << class_name[ret] << std::endl;
        count++;
    } while (_findnext(hFile, &fileInfo) == 0);
    _findclose(hFile);

    return 0;
}
```
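`pcb_classify_run` returns only the index of the largest score. Softmax preserves the ordering of its inputs, so that index is the same whether the scores are raw logits, probabilities, or probabilities passed through softmax a second time; only the reported confidence values would change. A small stdlib-only sketch to check this; `stable_softmax` and `argmax` are hypothetical helper names, not ncnn APIs.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable softmax: subtract the maximum before exponentiating.
inline std::vector<float> stable_softmax(const std::vector<float>& x)
{
    assert(!x.empty());
    const float m = *std::max_element(x.begin(), x.end());
    std::vector<float> p(x.size());
    float sum = 0.f;
    for (std::size_t i = 0; i < x.size(); ++i)
    {
        p[i] = std::exp(x[i] - m);
        sum += p[i];
    }
    for (float& v : p)
        v /= sum;
    return p;
}

// Index of the first maximum, computed the same way as at the end of pcb_classify_run().
inline int argmax(const std::vector<float>& x)
{
    assert(!x.empty());
    return static_cast<int>(std::max_element(x.begin(), x.end()) - x.begin());
}
```

If a per-image confidence is ever printed alongside the class name, it matters whether the exported graph already ends in softmax; for the class index alone it does not.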

3. References

1. Tutorial - deploy YOLOv5 with ncnn · Tencent/ncnn · Discussion #4541 · GitHub