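I. Introduction to Phoenix

- Phoenix lets you operate on HBase data through standard-SQL-like statements.

- Phoenix can work with HBase in two ways: by creating a table or by creating a view.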

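The differences are as follows:

With a table, you can insert, query, and delete HBase data.

With a view, you can generally only run queries.

A table may seem more capable than a view, but, as with MySQL and other relational databases, dropping a view does not affect the structure of the underlying table. Phoenix views also support secondary indexes.

The distinction matters because a table created in Phoenix is automatically mapped to an HBase table. When you drop that table in Phoenix, removing the mapping, the HBase table is deleted as well, together with its associated index tables.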

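II. Common Phoenix commands

- Tip: the author's cluster has Kerberos authentication enabled, so the first step is to authenticate on the machine with Kerberos. If permissions on HBase tables are enforced, a user who queries HBase tables through Phoenix must also be granted access to the relevant HBase system tables.

Note: Phoenix converts unquoted table and column names to uppercase, so lowercase table or column names must be enclosed in double quotes.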

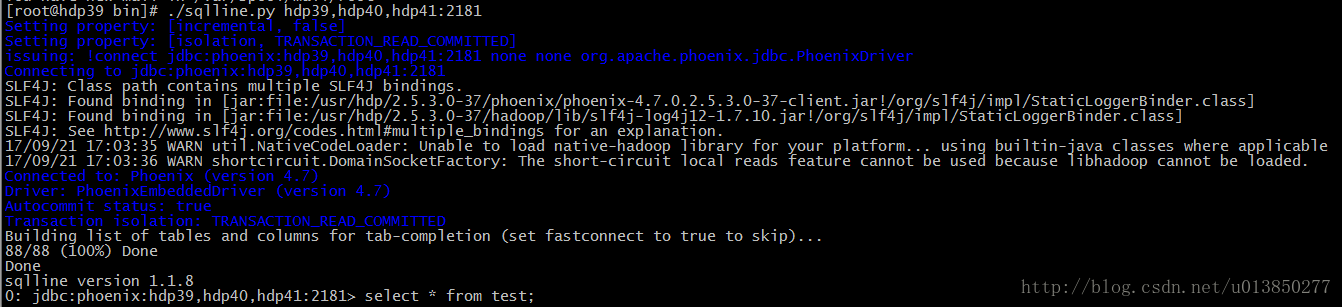
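The case rule can be modeled in a few lines of Python. This is a simplified illustration, not Phoenix's actual parser: `normalize_identifier` is a hypothetical name, and escaped quotes inside identifiers are not handled.

```python
def normalize_identifier(name: str) -> str:
    """Simplified model of how Phoenix resolves a single table or column name."""
    if len(name) >= 2 and name.startswith('"') and name.endswith('"'):
        # Double-quoted: the quotes are stripped and the case is kept as written.
        return name[1:-1]
    # Unquoted: the name is folded to uppercase.
    return name.upper()


# The lowercase table t_hbase1 can only be reached through the quoted form.
print(normalize_identifier('t_hbase1'))       # T_HBASE1 (no such table)
print(normalize_identifier('"t_hbase1"'))     # t_hbase1
print(normalize_identifier('us_population'))  # US_POPULATION
```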

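2.1 Entering the Phoenix command line

```
[root@hdp39 ~]# cd /usr/hdp/2.5.3.0-37/phoenix/bin
[root@hdp39 bin]# ./sqlline.py hdp39,hdp40,hdp41:2181
```

(The cluster runs in Kerberos secure mode, so the user who runs kinit before this command must have permission to operate on HBase.)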

2.2 help

Lists the built-in commands.

2.3 !tables

Lists all the tables in the database. To view a table's structure, use !desc tableName:

```
0: jdbc:phoenix:hdp40,hdp41,hdp39:2181> !desc "t_hbase1"
+------------+--------------+-------------+--------------+------------+------------+--------------+----------------+-----------------+-----------------+-----------+----------+-------------+
| TABLE_CAT  | TABLE_SCHEM  | TABLE_NAME  | COLUMN_NAME  | DATA_TYPE  | TYPE_NAME  | COLUMN_SIZE  | BUFFER_LENGTH  | DECIMAL_DIGITS  | NUM_PREC_RADIX  | NULLABLE  | REMARKS  | COLUMN_DEF  |
+------------+--------------+-------------+--------------+------------+------------+--------------+----------------+-----------------+-----------------+-----------+----------+-------------+
|            |              | t_hbase1    | ROW          | 12         | VARCHAR    | null         | null           | null            | null            | 0         |          |             |
|            |              | t_hbase1    | id           | 12         | VARCHAR    | null         | null           | null            | null            | 1         |          |             |
|            |              | t_hbase1    | salary       | 12         | VARCHAR    | null         | null           | null            | null            | 1         |          |             |
|            |              | t_hbase1    | url          | 12         | VARCHAR    | null         | null           | null            | null            | 1         |          |             |
|            |              | t_hbase1    | details      | 12         | VARCHAR    | null         | null           | null            | null            | 1         |          |             |
+------------+--------------+-------------+--------------+------------+------------+--------------+----------------+-----------------+-----------------+-----------+----------+-------------+
```

2.4 Creating tables

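- There are two cases: the corresponding table does not yet exist in HBase, or the table already exists in HBase.

- a. Creating a Phoenix table (no corresponding HBase table yet)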

```
[root@hdp07 bin]# ./sqlline.py hdp40,hdp41,hdp39:2181 /tmp/us_population.sql
```

The contents of us_population.sql:

```
[root@hdp07 tmp]# cat us_population.sql
CREATE TABLE IF NOT EXISTS us_population (
  state CHAR(2) NOT NULL,
  city VARCHAR NOT NULL,
  population BIGINT
  CONSTRAINT my_pk PRIMARY KEY (state, city));
```

Once the table has been created, it can be inspected in HBase, as shown below (note that Phoenix has added coprocessors to the table):

```
hbase(main):002:0> desc 'US_POPULATION'
Table US_POPULATION is ENABLED
US_POPULATION, {TABLE_ATTRIBUTES => {coprocessor$1 => '|org.apache.phoenix.coprocessor.ScanRegionObserver|805306366|', coprocessor$2 => '|org.apache.phoenix.coprocessor.UngroupedAggregateRegionObserver|805306366|', coprocessor$3 => '|org.apache.phoenix.coprocessor.GroupedAggregateRegionObserver|805306366|', coprocessor$4 => '|org.apache.phoenix.coprocessor.ServerCachingEndpointImpl|805306366|', coprocessor$5 => '|org.apache.phoenix.hbase.index.Indexer|805306366|org.apache.hadoop.hbase.index.codec.class=org.apache.phoenix.index.PhoenixIndexCodec,index.builder=org.apache.phoenix.index.PhoenixIndexBuilder'}
COLUMN FAMILIES DESCRIPTION
{NAME => '0', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'FAST_DIFF', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}
1 row(s) in 0.1020 seconds
```

- Importing data

```
[root@hdp07 bin]# ./psql.py -t US_POPULATION hdp40,hdp41,hdp39:2181 /tmp/us_population.csv
```

The CSV file contains:

```
[root@hdp07 tmp]# cat us_population.csv
NY,New York,8143197
CA,Los Angeles,3844829
IL,Chicago,2842518
TX,Houston,2016582
PA,Philadelphia,1463281
AZ,Phoenix,1461575
TX,San Antonio,1256509
CA,San Diego,1255540
TX,Dallas,1213825
CA,San Jose,912332
```
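Conceptually, loading this file amounts to one UPSERT per line. Because the CSV has no header row, the sketch assumes the fields map positionally onto the table's columns in declaration order (STATE, CITY, POPULATION). It only generates the statements as strings; `csv_to_upserts` is a hypothetical helper, not part of psql.py.

```python
import csv
import io

# First three rows of /tmp/us_population.csv, copied from above.
CSV_TEXT = "NY,New York,8143197\nCA,Los Angeles,3844829\nIL,Chicago,2842518\n"


def csv_to_upserts(table: str, text: str) -> list[str]:
    """Hypothetical helper: turn each CSV line into one UPSERT statement.

    Assumes the CSV columns are in the table's column order (STATE, CITY, POPULATION).
    """
    statements = []
    for state, city, population in csv.reader(io.StringIO(text)):
        # VARCHAR/CHAR values become single-quoted literals ('' escapes a quote).
        state_sql = "'" + state.replace("'", "''") + "'"
        city_sql = "'" + city.replace("'", "''") + "'"
        # POPULATION is BIGINT, so it is emitted as a bare integer.
        statements.append(
            f"UPSERT INTO {table} VALUES ({state_sql}, {city_sql}, {int(population)})"
        )
    return statements


for stmt in csv_to_upserts("US_POPULATION", CSV_TEXT):
    print(stmt)
```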

- Viewing the data

After the import, the data can be viewed in the Phoenix shell:

```
0: jdbc:phoenix:hdp40,hdp41,hdp39:2181> select * from us_population;
+--------+---------------+-------------+
| STATE  | CITY          | POPULATION  |
+--------+---------------+-------------+
| AZ     | Phoenix       | 1461575     |
| CA     | Los Angeles   | 3844829     |
| CA     | San Diego     | 1255540     |
| CA     | San Jose      | 912332      |
| IL     | Chicago       | 2842518     |
| NY     | New York      | 8143197     |
| PA     | Philadelphia  | 1463281     |
| TX     | Dallas        | 1213825     |
| TX     | Houston       | 2016582     |
| TX     | San Antonio   | 1256509     |
+--------+---------------+-------------+
```

The same data viewed in the HBase shell:

```
hbase(main):005:0> scan 'US_POPULATION'
 ROW              COLUMN+CELL
 AZPhoenix        column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00\x16MG
 AZPhoenix        column=0:_0, timestamp=1522308898804, value=x
 CALos Angeles    column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00:\xAA\xDD
 CALos Angeles    column=0:_0, timestamp=1522308898804, value=x
 CASan Diego      column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00\x13(t
 CASan Diego      column=0:_0, timestamp=1522308898804, value=x
 CASan Jose       column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00\x0D\xEB\xCC
 CASan Jose       column=0:_0, timestamp=1522308898804, value=x
 ILChicago        column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00+_\x96
 ILChicago        column=0:_0, timestamp=1522308898804, value=x
 NYNew York       column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00|A]
 NYNew York       column=0:_0, timestamp=1522308898804, value=x
 PAPhiladelphia   column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00\x16S\xF1
 PAPhiladelphia   column=0:_0, timestamp=1522308898804, value=x
 TXDallas         column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00\x12\x85\x81
 TXDallas         column=0:_0, timestamp=1522308898804, value=x
 TXHouston        column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00\x1E\xC5F
 TXHouston        column=0:_0, timestamp=1522308898804, value=x
 TXSan Antonio    column=0:POPULATION, timestamp=1522308898804, value=\x80\x00\x00\x00\x00\x13,=
 TXSan Antonio    column=0:_0, timestamp=1522308898804, value=x
```
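The raw scan can be decoded by hand. Based on my understanding of Phoenix's default storage format (treat this as an assumption, not a spec): the row key is the primary-key columns concatenated, so the CHAR(2) STATE takes the first two bytes and CITY, being the last key column, runs to the end (a VARCHAR in a non-final key position would be followed by a zero-byte separator). BIGINT values are stored as 8 big-endian bytes with the sign bit flipped, so byte order matches numeric order. The `_0` cell holding `x` is an empty marker column that guarantees every row has at least one cell. The helper names below are hypothetical.

```python
import struct


def decode_bigint(raw: bytes) -> int:
    """Decode an 8-byte Phoenix BIGINT cell (big-endian, sign bit flipped)."""
    if len(raw) != 8:
        raise ValueError("expected 8 bytes")
    # Flip the sign bit back, then read as a signed big-endian 64-bit integer.
    return struct.unpack(">q", bytes([raw[0] ^ 0x80]) + raw[1:])[0]


def split_row_key(row_key: bytes) -> tuple[str, str]:
    """Split a US_POPULATION row key into (state, city).

    STATE is CHAR(2), so it always occupies the first 2 bytes; CITY is the
    last primary-key column, so it runs to the end of the key.
    """
    return row_key[:2].decode("utf-8"), row_key[2:].decode("utf-8")


# Values copied from the hbase shell scan above.
print(split_row_key(b"AZPhoenix"), decode_bigint(b"\x80\x00\x00\x00\x00\x16MG"))
# ('AZ', 'Phoenix') 1461575
print(split_row_key(b"NYNew York"), decode_bigint(b"\x80\x00\x00\x00\x00|A]"))
# ('NY', 'New York') 8143197
```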

- As with table creation, queries can be run from a SQL file or typed directly at the Phoenix shell prompt:

```
[root@hdp07 bin]# ./sqlline.py hdp40,hdp41,hdp39:2181 /tmp/us_population_queries.sql
.....
Building list of tables and columns for tab-completion (set fastconnect to true to skip)...
106/106 (100%) Done
Done
1/1          SELECT state as "State",count(city) as "City Count",sum(population) as "Population Sum"
FROM us_population
GROUP BY state
ORDER BY sum(population) DESC;
+--------+-------------+-----------------+
| State  | City Count  | Population Sum  |
+--------+-------------+-----------------+
| NY     | 1           | 8143197         |
| CA     | 3           | 6012701         |
| TX     | 3           | 4486916         |
| IL     | 1           | 2842518         |
| PA     | 1           | 1463281         |
| AZ     | 1           | 1461575         |
+--------+-------------+-----------------+
6 rows selected (0.122 seconds)
```
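As a quick cross-check of the result above, the same GROUP BY can be reproduced in plain Python over the ten rows of us_population.csv. `population_by_state` is an illustrative helper, not a Phoenix API.

```python
from collections import defaultdict

# All ten rows of us_population.csv: (state, city, population).
ROWS = [
    ("NY", "New York", 8143197),
    ("CA", "Los Angeles", 3844829),
    ("IL", "Chicago", 2842518),
    ("TX", "Houston", 2016582),
    ("PA", "Philadelphia", 1463281),
    ("AZ", "Phoenix", 1461575),
    ("TX", "San Antonio", 1256509),
    ("CA", "San Diego", 1255540),
    ("TX", "Dallas", 1213825),
    ("CA", "San Jose", 912332),
]


def population_by_state(rows):
    """Mimic: SELECT state, count(city), sum(population) ... GROUP BY state
    ORDER BY sum(population) DESC."""
    counts = defaultdict(int)
    sums = defaultdict(int)
    for state, _city, population in rows:
        counts[state] += 1
        sums[state] += population
    groups = [(state, counts[state], sums[state]) for state in sums]
    return sorted(groups, key=lambda group: group[2], reverse=True)


for state, city_count, total in population_by_state(ROWS):
    print(state, city_count, total)
```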

The contents of us_population_queries.sql:

```
[root@hdp07 tmp]# cat us_population_queries.sql
SELECT state as "State",count(city) as "City Count",
sum(population) as "Population Sum"
FROM us_population
GROUP BY state
ORDER BY sum(population) DESC;
```

- Inserting data

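```
0: jdbc:phoenix:hdp40,hdp41,hdp39:2181> UPSERT INTO us_population VALUES('YY','PHOENIX_TEST',99999);
1 row affected (0.046 seconds)
```

Note: if the upserted row has the same rowkey as an existing row, the statement acts like an UPDATE in a relational database.

- Deleting data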

```
0: jdbc:phoenix:hdp40,hdp41,hdp39:2181> delete from us_population where CITY='PHOENIX_TEST';
1 row affected (0.05 seconds)
```
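A toy model of the two statements above, using a Python dict keyed by the primary key (state, city) in place of the table. The helpers are hypothetical and only mimic the visible effect, not how Phoenix executes the statements.

```python
# Toy model (hypothetical): the table as a dict keyed by its primary key
# (state, city), starting with one existing row.
table: dict[tuple[str, str], int] = {("NY", "New York"): 8143197}


def upsert(state: str, city: str, population: int) -> None:
    # New key: a row is inserted. Existing key: its value is overwritten.
    table[(state, city)] = population


def delete_where_city(city: str) -> int:
    # DELETE ... WHERE CITY = ?: drop every row whose key has that city.
    matching = [key for key in table if key[1] == city]
    for key in matching:
        del table[key]
    return len(matching)  # reported as "n rows affected"


upsert("YY", "PHOENIX_TEST", 99999)   # inserts a new row
upsert("YY", "PHOENIX_TEST", 12345)   # same key, so it updates in place
print(len(table), table[("YY", "PHOENIX_TEST")])  # 2 12345
print(delete_where_city("PHOENIX_TEST"))           # 1
```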

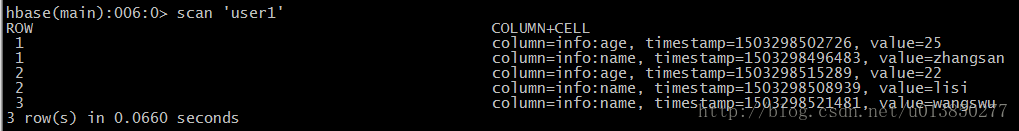

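- b. Creating a table or view for an existing HBase table

(To operate on HBase data with Phoenix, you first create a Phoenix table or view associated with the HBase table.) Creating a mapping table for an HBase table that already exists: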

```
hbase(main):006:0> scan 'user1'
 ROW    COLUMN+CELL
 1      column=info:age, timestamp=1503298502726, value=25
 1      column=info:name, timestamp=1503298496483, value=zhangsan
 2      column=info:age, timestamp=1503298515289, value=22
 2      column=info:name, timestamp=1503298508939, value=lisi
 3      column=info:name, timestamp=1503298521481, value=wangswu
```

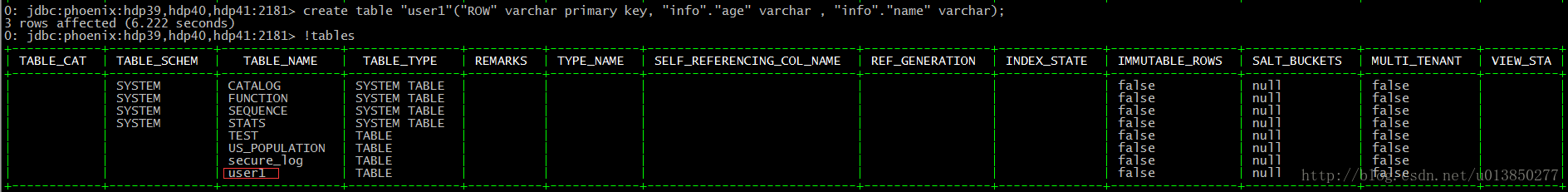

- The CREATE TABLE statement in the Phoenix shell:

```
0: jdbc:phoenix:hdp39,hdp40,hdp41:2181> create table "user1"(
"ROW" varchar primary key,
"info"."age" varchar,
"info"."name" varchar);
```

Query the table (a LIMIT clause can be appended):

```
0: jdbc:phoenix:hdp39,hdp40,hdp41:2181> select * from "user1";
+------+------+-----------+
| ROW  | age  | name      |
+------+------+-----------+
| 1    | 25   | zhangsan  |
| 2    | 22   | lisi      |
| 3    |      | wangswu   |
+------+------+-----------+
3 rows selected (0.083 seconds)
```
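The mapping can be pictured as a pivot: each HBase cell (row key, family:qualifier, value) becomes one field of a Phoenix row, the row key fills the primary-key column "ROW", and a cell that does not exist reads as NULL, which is why row 3 has an empty age. The sketch below is a conceptual model with hypothetical names, not how Phoenix is implemented.

```python
# Cells from the `scan 'user1'` output above: (row key, "family:qualifier", value).
CELLS = [
    ("1", "info:age", "25"),
    ("1", "info:name", "zhangsan"),
    ("2", "info:age", "22"),
    ("2", "info:name", "lisi"),
    ("3", "info:name", "wangswu"),
]

# Mapping declared by CREATE TABLE "user1": Phoenix column -> HBase family:qualifier.
COLUMNS = {"age": "info:age", "name": "info:name"}


def to_rows(cells):
    """Pivot (row key, column, value) cells into one dict per row, ordered by row key."""
    by_row = {}
    for row_key, column, value in cells:
        by_row.setdefault(row_key, {})[column] = value
    rows = []
    for row_key in sorted(by_row):
        row = {"ROW": row_key}
        for phoenix_col, hbase_col in COLUMNS.items():
            # A cell missing in HBase (row 3 has no info:age) reads as NULL/None.
            row[phoenix_col] = by_row[row_key].get(hbase_col)
        rows.append(row)
    return rows


for row in to_rows(CELLS):
    print(row)
```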

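**Note: when querying, lowercase table or column names must be enclosed in double quotes (single quotes cause an error); unquoted names are automatically converted to uppercase.**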

Next, create a Phoenix table mapped to t_hbase10. This HBase table already exists and holds 10 million rows:

```
create table "t_hbase10"("ROW" varchar primary key, "info"."id" varchar, "info"."salary" varchar);
```

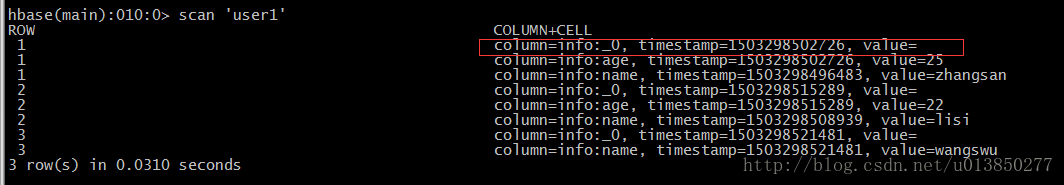

- Before creating the Phoenix table

- After creating the Phoenix table

- Run some queries and observe how fast they return:

```
0: jdbc:phoenix:hdp39,hdp40,hdp41:2181> select count("id") from "t_hbase10";
Error: Operation timed out. (state=TIM01,code=6000)
java.sql.SQLTimeoutException: Operation timed out.

0: jdbc:phoenix:hdp39,hdp40,hdp41:2181> select * from "t_hbase10" limit 2;
+----------+-----+---------+
| ROW      | id  | salary  |
+----------+-----+---------+
| rowKey0  | B0  | 0       |
| rowKey1  | B1  | 1       |
+----------+-----+---------+
2 rows selected (0.42 seconds)

0: jdbc:phoenix:hdp39,hdp40,hdp41:2181> select count(1) from "t_hbase10";
+-----------+
| COUNT(1)  |
+-----------+
| 10000000  |
+-----------+
1 row selected (5.571 seconds)

0: jdbc:phoenix:hdp39,hdp40,hdp41:2181> select * from "t_hbase10" where "id"='B100000';
+---------------+----------+---------+
| ROW           | id       | salary  |
+---------------+----------+---------+
| rowKey100000  | B100000  | 100000  |
+---------------+----------+---------+
1 row selected (51.416 seconds)
```

Filtering on "id", which is not part of the primary key, forces Phoenix to scan all 10 million rows, hence the 51-second response time.
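A toy in-memory model of the difference (not Phoenix code; every name is hypothetical): rows are kept sorted by row key, as HBase stores them, so a row-key lookup is a binary search, while a filter on a non-key column has to touch every row. The secondary index is modeled as a second sorted table keyed by (indexed value, row key), which is roughly how a Phoenix global index lays out its rows. Whether Phoenix actually uses an index for a given query depends on the index type and on whether it covers the selected columns, so checking the plan with EXPLAIN is advisable.

```python
import bisect

# Toy data set (hypothetical): 1,000 rows of (row key, id), kept sorted by
# row key the way HBase keeps rows sorted.
rows = sorted((f"rowKey{i}", f"B{i}") for i in range(1000))
row_keys = [key for key, _ in rows]


def get_by_row_key(key):
    """WHERE "ROW" = ?: binary search on the sorted row keys, O(log n)."""
    i = bisect.bisect_left(row_keys, key)
    if i < len(row_keys) and row_keys[i] == key:
        return rows[i]
    return None


def find_by_id_full_scan(id_value):
    """WHERE "id" = ? without an index: every row must be examined, O(n)."""
    return [row for row in rows if row[1] == id_value]


# Secondary index modeled as a second sorted table keyed by (id, row key).
index = sorted((id_value, key) for key, id_value in rows)


def find_by_id_with_index(id_value):
    """WHERE "id" = ? with the index: binary search the index, then fetch by row key."""
    i = bisect.bisect_left(index, (id_value,))
    matches = []
    while i < len(index) and index[i][0] == id_value:
        matches.append(get_by_row_key(index[i][1]))
        i += 1
    return matches


print(get_by_row_key("rowKey100"))    # ('rowKey100', 'B100')
print(find_by_id_with_index("B100"))  # [('rowKey100', 'B100')]
```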

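```
0: jdbc:phoenix:hdp39,hdp40,hdp41:2181> select * from "t_hbase10" where "ROW"='rowKey100000';
+---------------+----------+---------+
| ROW           | id       | salary  |
+---------------+----------+---------+
| rowKey100000  | B100000  | 100000  |
+---------------+----------+---------+
1 row selected (0.042 seconds)
```

Filtering on "ROW", the primary key mapped to the HBase row key, is a direct lookup and returns almost immediately.

To speed up lookups on "id", create an index on the table: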

```
CREATE INDEX id_name ON "t_hbase10"("info"."id");
```

References: