Is Artificial Intelligence (AI) making us lazy or efficient?

I think it is making us efficient. Because of COVID-19, people interact with their peers through social media and text messages more often. For instance, my push notifications are up by 37%, and on the positive side I have reconnected with old school friends. However, this created a problem: I was constantly glued to my phone and began suffering from nomophobia and phantom vibration syndrome.

Nomophobia is a term for a growing fear in today's world: the fear of being without a mobile device, or beyond mobile phone contact. The Post Office commissioned YouGov, a research organization, to look at anxieties suffered by mobile phone users. The study found that about 58 percent of men and 47 percent of women suffer from the phobia, and an additional 9 percent feel stressed when their mobile phones are off. The study sampled 2,163 people. Read more here.

Phantom vibration syndrome, where you think your phone is vibrating but it is not, has been around only since the mobile age. Nearly 90 percent of college undergrads in a 2012 study said they felt phantom vibrations. Get more insights here.

I was indeed almost always on the phone. Even while sleeping, I used to wake up hastily to check it, and as an introvert I love my sleep. So I decided to make AI work for me.

Using a Recurrent Neural Network (RNN), I decided to train my machine to generate automatic replies based on my personal chats, replies, forwards, and so on.

On the other hand, there are many fledgling chatbots trained on huge text corpora. However, they lack the human touch and the word and sentence formations people actually use while texting. In short messages, for instance, few people write "See you later"; my personal network uses "c u l8r". Both convey the same message, but in a very different written form.

Dataset

I have 881 text messages exchanged between 11 participants from India (most of them), Germany, and the USA. Because of the time difference, not everyone is active at once. Some are more gregarious, others more taciturn. So this data is a good mix of human interactions, including the sarcastic and sassy replies that are prominent in our conversations.

The main reason for training on this data is that it comes from the most active group in my network and the one closest to me, and I do not want to sound like a bot when I am "replying".

Let's talk

# importing necessary libraries
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import nltk
import string
import unidecode
import random
import torch
import torch.nn as nn  # needed for nn.Module, nn.Embedding, nn.GRU, nn.Linear below

After importing, we want a GPU, because an RNN, like any deep neural network, is computationally heavy and takes a long time to train on a CPU. GPUs have more computational units than CPUs and a higher bandwidth for retrieving data from memory.

train_on_gpu = torch.cuda.is_available()
if(train_on_gpu):
    print('Training on GPU!')
else:
    print('No GPU available, training on CPU; consider making n_epochs very small.')

This code tells you whether a GPU is available. Even if you don't have one, training will just take longer but will still give you results.

train_df = pd.read_csv("WhatsappChat.csv")
author = train_df["Content"]

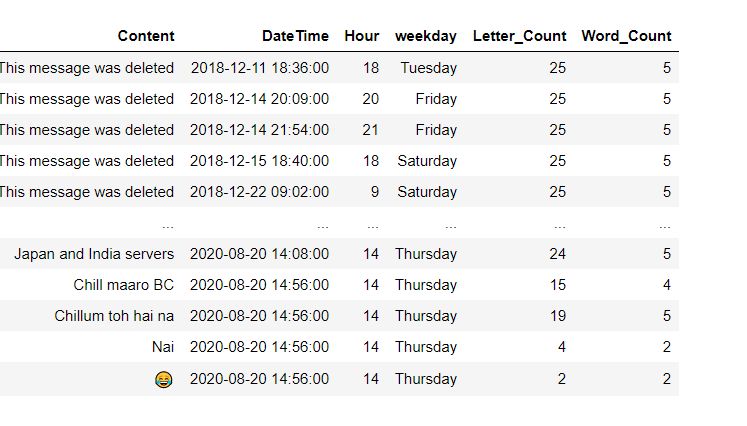

This is what the data frame looks like. I did some data processing and exploratory data analysis to bring it into this form. For the code that converts a WhatsApp chat into a similar pandas data frame, visit here. As I am training on the content of the chats, we will work only with that column.

text = list(author)

def joinStrings(text):
    # str() guards against non-string cells (e.g. NaN) in the Content column
    return ' '.join(str(s) for s in text)

text = joinStrings(text)
# text = [item for sublist in author[:5].values for item in sublist]
len(text.split())

test_sentence = text.lower().split()
trigrams = [([test_sentence[i], test_sentence[i + 1]], test_sentence[i + 2])
            for i in range(len(test_sentence) - 2)]
chunk_len = len(trigrams)
print(trigrams[:3])

After joining the content into one large text, I train on trigrams, since most replies, at least in my network, are about three words long.
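To make the trigram construction concrete, here is a minimal sketch on a made-up message (the sample sentence is an assumption for illustration and is not taken from the chat data). Each pair of consecutive words becomes the context, and the word that follows is the target the network learns to predict.

```python
# Toy illustration of the (context, target) trigram pairs; the sample text is made up.
sample_text = "c u l8r at the station"
words = sample_text.lower().split()

# Every two consecutive words form the context; the next word is the target.
sample_trigrams = [([words[i], words[i + 1]], words[i + 2])
                   for i in range(len(words) - 2)]

for context, target in sample_trigrams:
    print(context, "->", target)
```

A text of n words yields n - 2 such pairs, which is why chunk_len equals the number of trigrams.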

To train an RNN, I need a vocabulary size, so that the predicted replies never fall outside the set of known words.
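As a small illustration of the word-to-index mapping, the sketch below encodes a made-up word list and decodes it again. The names prefixed with sample_ are hypothetical, and the use of sorted() is an assumption added here so the mapping is the same on every run; iterating over a plain set gives an arbitrary order.

```python
# Toy illustration of mapping words to integer indices; the sample words are made up.
sample_words = "c u l8r c u soon".split()

# sorted() is an addition for reproducibility; a plain set has no fixed order.
sample_vocab = sorted(set(sample_words))
sample_word_to_ix = {word: i for i, word in enumerate(sample_vocab)}
sample_ix_to_word = {i: word for word, i in sample_word_to_ix.items()}

# Encode the words as indices and decode them back.
encoded = [sample_word_to_ix[w] for w in sample_words]
decoded = [sample_ix_to_word[i] for i in encoded]

print(len(sample_vocab), encoded)
```

Because the output layer has one score per vocabulary entry, the network can only ever predict an index that maps back to a known word.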

vocab = set(test_sentence)
voc_len = len(vocab)
word_to_ix = {word: i for i, word in enumerate(vocab)}

# making input and their respective replies
inp = []
tar = []
for context, target in trigrams:
    context_idxs = torch.tensor([word_to_ix[w] for w in context], dtype=torch.long)
    inp.append(context_idxs)
    targ = torch.tensor([word_to_ix[target]], dtype=torch.long)
    tar.append(targ)

RNN

It's time to define our neural network class and see what it can do for us.

class RNN(nn.Module):
    def __init__(self, input_size, hidden_size, output_size, n_layers=1):
        super(RNN, self).__init__()
        self.input_size = input_size
        self.hidden_size = hidden_size
        self.output_size = output_size
        self.n_layers = n_layers

        self.encoder = nn.Embedding(input_size, hidden_size)
        # the two context words are embedded and concatenated, hence hidden_size*2
        self.gru = nn.GRU(hidden_size*2, hidden_size, n_layers, batch_first=True,
                          bidirectional=False)
        self.decoder = nn.Linear(hidden_size, output_size)

    def forward(self, input, hidden):
        input = self.encoder(input.view(1, -1))
        output, hidden = self.gru(input.view(1, 1, -1), hidden)
        output = self.decoder(output.view(1, -1))
        return output, hidden

    def init_hidden(self):
        # a plain tensor replaces the deprecated Variable wrapper
        return torch.zeros(self.n_layers, 1, self.hidden_size)

Here the model is defined as a class, RNN, which subclasses nn.Module and bundles the layers (an embedding, a GRU, and a linear decoder) with the methods that use them.

The forward() method is the forward pass of the network: it embeds the two context words, feeds them through the GRU, and decodes the result into one score per vocabulary word. The init_hidden() method returns a zero tensor that serves as the initial hidden state.
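Why the GRU's input size is hidden_size*2 can be seen in a small pure-Python sketch. It uses nested lists as a stand-in for the embedding table; the sizes and values are made up, the toy_ names are hypothetical, and this is not how PyTorch stores embeddings internally.

```python
# Pure-Python stand-in for the embedding lookup; sizes and values are made up.
toy_hidden_size = 4
toy_vocab_size = 5

# One row of toy_hidden_size numbers per vocabulary word.
toy_embedding = [[float(10 * i + j) for j in range(toy_hidden_size)]
                 for i in range(toy_vocab_size)]

context_idxs = [3, 1]  # indices of the two context words

# Look up one row per context word, then flatten both rows into a single vector,
# mirroring the view(1, 1, -1) call in forward().
embedded = [toy_embedding[i] for i in context_idxs]
flat = [x for row in embedded for x in row]

print(len(flat))  # equals 2 * toy_hidden_size
```

The GRU therefore sees one time step whose feature vector is the two word embeddings placed side by side.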

def train(inp, target):
    # use the GPU only when one is available, so the code also runs on CPU
    device = torch.device('cuda' if train_on_gpu else 'cpu')
    hidden = decoder.init_hidden().to(device)
    decoder.zero_grad()
    loss = 0

    for c in range(chunk_len):
        output, hidden = decoder(inp[c].to(device), hidden)
        loss += criterion(output, target[c].to(device))

    loss.backward()
    decoder_optimizer.step()

    return loss.item() / chunk_len

Now we need to reduce the loss to get better replies. The function above accumulates the cross-entropy loss over all trigrams, backpropagates once, takes an optimizer step, and returns the average loss per trigram.
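For a sense of what the loss measures, the sketch below computes cross-entropy by hand for a single prediction: a softmax over the raw scores, followed by the negative log of the probability assigned to the correct word. The scores are made up, and cross_entropy is a hypothetical helper written for this illustration, not part of the training code.

```python
import math

def cross_entropy(scores, target_index):
    """Negative log-probability of the target after a softmax over raw scores."""
    # Subtract the max score for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return -math.log(exps[target_index] / total)

toy_scores = [2.0, 0.5, 0.1]  # made-up scores for a 3-word vocabulary

print(cross_entropy(toy_scores, 0))  # lower loss: the correct word has the top score
print(cross_entropy(toy_scores, 2))  # higher loss: the correct word scored lowest
```

When all scores are equal, the loss is the log of the vocabulary size, which is a useful baseline when reading the training output.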

import time, math

def time_since(since):
    s = time.time() - since
    m = math.floor(s / 60)
    s -= m * 60
    return '%dm %ds' % (m, s)

This is a simple helper that reports how much time has passed since a given start time, which we use to see how long training has taken so far.

n_epochs = 50
print_every = 10
plot_every = 10
hidden_size = 100
n_layers = 1
lr = 0.015

decoder = RNN(voc_len, hidden_size, voc_len, n_layers)
decoder_optimizer = torch.optim.Adam(decoder.parameters(), lr=lr)
criterion = nn.CrossEntropyLoss()

start = time.time()
all_losses = []
loss_avg = 0
if(train_on_gpu):
    decoder.cuda()

for epoch in range(1, n_epochs + 1):
    loss = train(inp, tar)
    loss_avg += loss

    if epoch % print_every == 0:
        # percentage of epochs completed (scaled by 100, not 50)
        print('[%s (%d %d%%) %.4f]' % (time_since(start), epoch, epoch / n_epochs * 100, loss))
        # print(evaluate('ge', 200), '\n')

    if epoch % plot_every == 0:
        all_losses.append(loss_avg / plot_every)
        loss_avg = 0

This is where the magic happens: the model passes over the chat 50 times (epochs), learning which word is the most likely reply to the previous two. The loop also prints the elapsed time and the loss every 10 epochs.

import matplotlib.pyplot as plt
import matplotlib.ticker as ticker
%matplotlib inline

plt.figure()
plt.plot(all_losses)

This plots the losses, for those who, like me, prefer visual representations to numbers.

def evaluate(prime_str='this process', predict_len=100, temperature=0.8):
    # use the GPU only when one is available, so the code also runs on CPU
    device = torch.device('cuda' if train_on_gpu else 'cpu')
    hidden = decoder.init_hidden().to(device)

    for p in range(predict_len):
        prime_input = torch.tensor([word_to_ix[w] for w in prime_str.split()], dtype=torch.long).to(device)
        inp = prime_input[-2:]  # last two words as input
        output, hidden = decoder(inp, hidden)

        # Sample from the network as a multinomial distribution
        output_dist = output.data.view(-1).div(temperature).exp()
        top_i = torch.multinomial(output_dist, 1)[0].item()

        # Add predicted word to string and use as next input
        predicted_word = list(word_to_ix.keys())[list(word_to_ix.values()).index(top_i)]
        prime_str += " " + predicted_word
        # inp = torch.tensor(word_to_ix[predicted_word], dtype=torch.long)

    return prime_str

We need an evaluation function to check whether the generated replies are sensible. It takes a priming string, the number of words to generate, and a temperature. The temperature controls how random the sampling is: lower values favor the most likely words, and higher values give more varied output. Note that every word in the priming string must already be in the vocabulary, otherwise the lookup in word_to_ix fails.
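The effect of temperature can be sketched without a trained model. The example below samples from made-up scores at three temperatures and reports how often the top-scoring word is picked. sample_with_temperature is a hypothetical helper that mirrors the div(temperature).exp() step followed by multinomial sampling, using only the standard library.

```python
import math
import random

def sample_with_temperature(scores, temperature, rng):
    """Pick an index with probability proportional to exp(score / temperature)."""
    weights = [math.exp(s / temperature) for s in scores]
    return rng.choices(range(len(scores)), weights=weights, k=1)[0]

toy_scores = [2.0, 1.0, 0.0]   # made-up scores for a 3-word vocabulary
rng = random.Random(0)         # fixed seed so repeated runs match

for temperature in (0.2, 1.0, 5.0):
    picks = [sample_with_temperature(toy_scores, temperature, rng) for _ in range(1000)]
    share_top = picks.count(0) / len(picks)
    print(temperature, share_top)
```

At a low temperature nearly every pick is the top-scoring word, while at a high temperature the picks spread out across the vocabulary, which makes replies more varied but also less coherent.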

print(evaluate('trip pe', 11, temperature=1))

# output
trip pe shuru ? dekh na😅. bumble to sanky bhi use kar sakta

Voila! A message is generated. It does not make much sense, but the words are real. Interestingly, it learned the smileys as well, and we do use a huge number of emoticons in our chats.

Future work

Now all I need is to work on the APIs to embed this code in WhatsApp, let it train over the span of a month, and have it generate the messages, so that I don't have to look at my phone. This should fix my sleep cycle and let me interact with the people around me rather than with my phone. Hopefully, with more epochs (say 100) and more data over time, it will make fewer errors and give more personalized replies, which will leave my friends wondering whether they are talking to a bot or to me.

If you are interested, you can get the code here.

Do let me know if you think this method is missing something, or how I can optimize it further to make the bot more humanlike and let this AI take over my communication.

Translated from: https://medium.com/the-innovation/chat-generator-d61cc5a1d1df