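This article records two ways to launch a Qwen model after LoRA fine-tuning: one loads the original (base) model together with the LoRA adapter, and the other merges the two into a single model, saves it, and then loads that merged model.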

Launching the LoRA model
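The LoRA checkpoint directory holds only the adapter weights. PEFT records the base model the adapter was trained on in `adapter_config.json` under `base_model_name_or_path`, and `AutoPeftModelForCausalLM` loads that base model first, so the path stored there must still be valid on the machine doing the loading. A minimal sketch for checking it, assuming the checkpoint was written by PEFT's `save_pretrained`; `read_base_model_path` is a hypothetical helper, not part of PEFT:

```python
import json
from pathlib import Path


def read_base_model_path(adapter_dir: str) -> str:
    """Return the base model path recorded in a PEFT adapter directory.

    Assumes adapter_dir contains adapter_config.json as written by PEFT;
    the key base_model_name_or_path comes from PEFT's config format.
    """
    config_file = Path(adapter_dir) / "adapter_config.json"
    with config_file.open(encoding="utf-8") as f:
        config = json.load(f)
    return config["base_model_name_or_path"]


# Usage (hypothetical path):
# print(read_base_model_path("/home/<username>/nlp/Qwen/finetune/output_qwen_medical/checkpoint-1000/"))
```

If the base model has been moved since training, this is the field that points to the old location.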

The LoRA model can be loaded with `AutoPeftModelForCausalLM` from the peft package:

```python
from peft import AutoPeftModelForCausalLM

# Path where the LoRA fine-tuned model (checkpoint) is stored
model = AutoPeftModelForCausalLM.from_pretrained(
    "/home/<username>/nlp/Qwen/finetune/output_qwen_medical/checkpoint-1000/",
    device_map="auto",
    trust_remote_code=True,
).eval()
```

Once the LoRA model is loaded, you still need `AutoTokenizer` from transformers:

```python
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("/home/<username>/nlp/Qwen/model/qwen/Qwen-1_8B-Chat", trust_remote_code=True)
```

Then call the model's `chat` method:

```python
# First turn of the conversation
response, history = model.chat(tokenizer, "....", history=None)
print(response)
```

Loading the model with the LoRA parameters merged into the original model

Save the model parameters:

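```python
# The LoRA parameters can be merged into the original parameters and loaded as one model
from peft import AutoPeftModelForCausalLM

model = AutoPeftModelForCausalLM.from_pretrained(
    "/home/renjintao/nlp/Qwen/finetune/output_qwen_medical/checkpoint-1000/",
    device_map="auto",
    trust_remote_code=True,
).eval()

# Fold the LoRA weights into the base weights and remove the adapter layers
merged_model = model.merge_and_unload()
```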
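`merge_and_unload` folds each LoRA update into the weight it adapts. For a linear layer with base weight W, LoRA matrices A (r × d_in) and B (d_out × r), rank r and `lora_alpha`, the adapted layer computes W x + (lora_alpha / r) · B A x, so the merged weight is W + (lora_alpha / r) · B A. The toy pure-Python sketch below checks that both forms give the same output; the matrices are made up, and PEFT's default (non-rsLoRA) scaling is assumed. LoRA dropout is inactive in eval mode, which is why the merged model reproduces the adapter's inference output.

```python
# Toy check that merging a LoRA update into W gives the same output as the
# unmerged adapter path. Pure Python; all matrices are made-up examples.


def matmul(a, b):
    """Matrix product of two lists-of-rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def matvec(m, v):
    """Matrix-vector product."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]


def lora_forward(W, A, B, x, lora_alpha, r):
    """Unmerged LoRA layer: W x + (lora_alpha / r) * B (A x)."""
    scaling = lora_alpha / r
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + scaling * d for b, d in zip(base, delta)]


def merge_lora(W, A, B, lora_alpha, r):
    """Merged weight: W + (lora_alpha / r) * B A."""
    scaling = lora_alpha / r
    BA = matmul(B, A)
    return [
        [W[i][j] + scaling * BA[i][j] for j in range(len(W[0]))]
        for i in range(len(W))
    ]


W = [[1, 0, 2], [0, 1, 1]]  # base weight, d_out=2 x d_in=3
A = [[1, 2, 0]]             # r=1 x d_in=3
B = [[0.5], [1.0]]          # d_out=2 x r=1
x = [1, 1, 1]

merged = merge_lora(W, A, B, lora_alpha=2, r=1)
print(lora_forward(W, A, B, x, lora_alpha=2, r=1))  # [6.0, 8.0]
print(matvec(merged, x))                            # [6.0, 8.0]
```

After merging, the extra A and B multiplications disappear from the forward pass, which is the practical reason to merge before deployment.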

Then save the merged model:

```python
merged_model.save_pretrained("/home/renjintao/nlp/Qwen/model/qwen/qwen_1_8B_lora_medical")
```

Save the tokenizer configuration as well:

```python
# The tokenizer configuration must be saved to the same directory too
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained(
    "/home/renjintao/nlp/Qwen/finetune/output_qwen_medical/checkpoint-1000/",
    trust_remote_code=True,
)
tokenizer.save_pretrained("/home/renjintao/nlp/Qwen/model/qwen/qwen_1_8B_lora_medical")
```

Load and call the model in the usual way:

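```python
# From here on, the merged model can be used the usual way
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers.generation import GenerationConfig

# Relative path: it matches the directory saved above only when run from /home/renjintao/nlp/Qwen
model_path = './model/qwen/qwen_1_8B_lora_medical'
```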
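A wrong working directory or an incomplete save usually surfaces later as a less direct error from `from_pretrained`. A small pre-flight check can catch it earlier. This is a sketch with a hypothetical helper `check_merged_dir`, assuming `save_pretrained` wrote `config.json`, `tokenizer_config.json` and at least one `*.safetensors` or `*.bin` weight file; exact file names can differ between library versions.

```python
from pathlib import Path


def check_merged_dir(model_dir: str) -> list:
    """Return a list of problems found in a saved model directory (empty if none).

    Heuristic only: looks for config.json, tokenizer_config.json and at
    least one weight file (*.safetensors or *.bin).
    """
    path = Path(model_dir)
    if not path.is_dir():
        # Relative paths are resolved against the current working directory
        return [f"{path.resolve()} is not a directory"]
    problems = []
    for name in ("config.json", "tokenizer_config.json"):
        if not (path / name).is_file():
            problems.append(f"{name} is missing")
    weights = list(path.glob("*.safetensors")) + list(path.glob("*.bin"))
    if not weights:
        problems.append("no *.safetensors or *.bin weight files found")
    return problems


# Usage: print(check_merged_dir(model_path))  # [] means nothing obvious is missing
```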

Then load the tokenizer, model and generation config from that directory:

```python
tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(model_path, device_map="auto", trust_remote_code=True)
model.generation_config = GenerationConfig.from_pretrained(model_path, trust_remote_code=True)
```

Let's try a conversation:

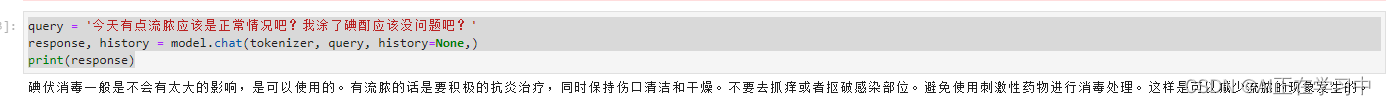

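```python
# "There's a little pus today, that's probably normal, right? I applied iodine tincture, so it should be fine?"
query = '今天有点流脓应该是正常情况吧?我涂了碘酊应该没问题吧?'
response, history = model.chat(tokenizer, query, history=None)
print(response)
```

The fine-tuned model has been launched successfully.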
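Both approaches call `model.chat` with `history=None`, which starts a fresh conversation. `chat` also returns an updated `history`, and passing it back on the next call lets the model see the earlier turns. A minimal sketch with a hypothetical helper `run_dialogue`, assuming `chat(tokenizer, query, history=...)` returns `(response, history)` as in the calls above:

```python
def run_dialogue(model, tokenizer, queries):
    """Send queries one after another, carrying the chat history between turns.

    Assumes model.chat(tokenizer, query, history=...) returns
    (response, history), as in the Qwen chat calls shown above.
    """
    history = None  # no earlier turns before the first query
    responses = []
    for query in queries:
        # Pass the history returned by the previous turn into the next one
        response, history = model.chat(tokenizer, query, history=history)
        responses.append(response)
    return responses, history
```

For example, `run_dialogue(model, tokenizer, [query, follow_up])`, where `follow_up` is a second question of your own, answers `follow_up` in the context of the first exchange.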