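spider: Web Crawler Technology Study Handbook

1. Introduction to Web Crawlers

Introduction:

In the age of big data, collecting information is an important task, and the amount of data on the Internet is enormous. Collecting it by hand is slow and tedious, and it also drives up the cost of searching. Obtaining the information we care about from the Internet automatically and efficiently, and putting it to use, is an important problem, and crawler technology exists to solve it.

A web crawler (also called a web spider or web robot) collects and organizes information on the Internet automatically in place of a person. It is a program or script that fetches information from the World Wide Web according to a set of rules, and it can automatically collect the content of every page it can reach in order to obtain the data of interest.

Functionally, a crawler is usually divided into three parts: data collection, processing, and storage. The crawler starts from the URLs of one or more seed pages, fetches those pages, and while fetching keeps extracting new URLs from the current page and adding them to a queue, until the system's stop condition is met.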
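The crawl loop just described (seed URLs, extract links, enqueue new ones, stop when a condition is met) can be sketched with a queue and a visited set. This is a minimal, JDK-only illustration: `UrlFrontier` is a hypothetical class, the `linkExtractor` function stands in for "download the page and pull out its links" (HttpClient plus Jsoup in the rest of this handbook), and a page budget `maxPages` is assumed as the stop condition.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;
import java.util.function.Function;

final class UrlFrontier {

    /**
     * Breadth-first crawl starting from the seed URLs.
     *
     * @param seeds         initial URLs
     * @param linkExtractor returns the URLs found on a page (stand-in for fetch + parse)
     * @param maxPages      stop condition: maximum number of pages to visit
     * @return URLs in the order they were visited
     */
    static List<String> crawl(List<String> seeds,
                              Function<String, List<String>> linkExtractor,
                              int maxPages) {
        Queue<String> queue = new ArrayDeque<>(seeds);  // URLs waiting to be fetched
        Set<String> seen = new HashSet<>(seeds);        // every URL ever enqueued
        List<String> visited = new ArrayList<>();
        while (!queue.isEmpty() && visited.size() < maxPages) {
            String url = queue.poll();
            visited.add(url);
            for (String link : linkExtractor.apply(url)) {
                if (seen.add(link)) {   // add() returns false if the URL was already seen
                    queue.add(link);
                }
            }
        }
        return visited;
    }

    public static void main(String[] args) {
        // A tiny in-memory "web": page -> links found on that page
        Map<String, List<String>> web = new HashMap<>();
        web.put("a", Arrays.asList("b", "c"));
        web.put("b", Arrays.asList("a", "d"));
        web.put("c", Arrays.asList("d"));
        Function<String, List<String>> links =
                u -> web.getOrDefault(u, Collections.<String>emptyList());
        System.out.println(crawl(Arrays.asList("a"), links, 10)); // [a, b, c, d]
    }
}
```

The visited set is what keeps the queue from filling with duplicates when pages link back to each other, as page b links back to a in the sample.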

2. Getting Started with Web Crawlers

2.1 Environment Setup

- JDK 1.8

- IntelliJ IDEA

- Maven

pom.xml

<dependencies>

<!-- https://mvnrepository.com/artifact/org.apache.httpcomponents/httpclient -->

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>4.5.2</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.slf4j/slf4j-log4j12 -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.25</version>

<!--<scope>test</scope>-->

</dependency>

</dependencies>

log4j.properties

log4j.rootLogger=DEBUG,A1

log4j.logger.cn.cfit=DEBUG

log4j.appender.A1=org.apache.log4j.ConsoleAppender

log4j.appender.A1.layout=org.apache.log4j.PatternLayout

log4j.appender.A1.layout.ConversionPattern=%-d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c]-[%p] %m%n

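2.2 A Simple First Program

import org.apache.http.HttpEntity;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

import java.io.IOException;

public class CrawlerFirst {  // the class name is arbitrary

    public static void main(String[] args) throws IOException {
        // "Open the browser": create an HttpClient object
        // (try-with-resources closes the client and the response when done)
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // "Type the address": create an HttpGet object
            HttpGet httpGet = new HttpGet("https://www.csdn.net/");
            // "Press Enter": send the request with HttpClient and receive the response
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                // Parse the response: only read the body if the status code is 200
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity httpEntity = response.getEntity();
                    String content = EntityUtils.toString(httpEntity, "UTF-8");
                    System.out.println(content);
                }
            }
        }
    }
}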

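3. Fetching Data with HttpClient

A web crawler is simply a program that accesses resources on the web for us. We have always used the HTTP protocol to access web pages, so a crawler program must use the same HTTP protocol to access them.

Here we mainly use HttpClient to fetch web page data.

3.1 GET Request

Accessing the CSDN home page: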

package cn.cfit.spider;

import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

import java.io.IOException;

/**
 * @Description: GET request without parameters
 * @Author: SunJie
 * @Date: 2021/7/8
 * @Version: V1.0
 */
public class HttpGetTest {

    public static void main(String[] args) {
        // 1. Create the HttpClient object
        // try-with-resources closes the client and the response even if the request fails
        // (the original finally block threw a NullPointerException when execute() failed)
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // 2. Create the HttpGet object and set the URL
            HttpGet httpGet = new HttpGet("https://www.csdn.net/");
            // 3. Send the request with HttpClient and get the response
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                // 4. Parse the response
                if (response.getStatusLine().getStatusCode() == 200) {
                    String content = EntityUtils.toString(response.getEntity(), "UTF-8");
                    System.out.println(content.length());
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

3.2 GET Request with Parameters

package cn.cfit.spider;

import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.client.utils.URIBuilder;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

import java.io.IOException;
import java.net.URISyntaxException;

/**
 * @Description: GET request with parameters
 * @Author: SunJie
 * @Date: 2021/7/8
 * @Version: V1.0
 */
public class HttpGetParamTest {

    public static void main(String[] args) throws URISyntaxException {
        // 1. Create the HttpClient object (closed automatically by try-with-resources)
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // Create the URIBuilder
            URIBuilder uriBuilder = new URIBuilder("https://blog.csdn.net/qq_34474324");
            // Add a query parameter (setParameter returns the builder, so calls can be chained)
            uriBuilder.setParameter("t", "1");
            // 2. Create the HttpGet object with the resulting URI
            HttpGet httpGet = new HttpGet(uriBuilder.build());
            System.out.println("Request: " + httpGet);
            // 3. Send the request with HttpClient and get the response
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                // 4. Parse the response
                if (response.getStatusLine().getStatusCode() == 200) {
                    String content = EntityUtils.toString(response.getEntity(), "UTF-8");
                    System.out.println(content.length());
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
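URIBuilder appends the parameters to the URL and percent-encodes them. To see what ends up in the URL, here is a JDK-only sketch doing a similar job with `java.net.URLEncoder`; `QueryString.build` is a hypothetical helper, not part of HttpClient, and URIBuilder's exact encoding rules may differ in edge cases. `URLEncoder` follows `application/x-www-form-urlencoded` rules, so a space becomes `+`. Encoding the keyword 手机 ("mobile phone") this way gives `%E6%89%8B%E6%9C%BA`, the same bytes that appear in the JD search URL used in section 5.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;

final class QueryString {

    /** Appends the parameters to base as ?k1=v1&k2=v2, percent-encoded as UTF-8. */
    static String build(String base, Map<String, String> params) {
        StringBuilder sb = new StringBuilder(base);
        char sep = base.contains("?") ? '&' : '?';   // keep any query already in the URL
        for (Map.Entry<String, String> e : params.entrySet()) {
            sb.append(sep).append(encode(e.getKey())).append('=').append(encode(e.getValue()));
            sep = '&';
        }
        return sb.toString();
    }

    private static String encode(String s) {
        try {
            return URLEncoder.encode(s, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException("UTF-8 is always supported", e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>();   // preserves insertion order
        params.put("t", "1");
        System.out.println(build("https://blog.csdn.net/qq_34474324", params));
        // https://blog.csdn.net/qq_34474324?t=1
    }
}
```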

3.3 POST Request

package cn.cfit.spider;

import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpPost;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

import java.io.IOException;

/**
 * @Description: POST request without parameters
 * @Author: SunJie
 * @Date: 2021/7/8
 * @Version: V1.0
 */
public class HttpPostTest {

    public static void main(String[] args) {
        // 1. Create the HttpClient object (closed automatically by try-with-resources)
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // 2. Create the HttpPost object and set the URL
            HttpPost httpPost = new HttpPost("https://www.csdn.net/");
            // 3. Send the request with HttpClient and get the response
            try (CloseableHttpResponse response = httpClient.execute(httpPost)) {
                // 4. Parse the response
                if (response.getStatusLine().getStatusCode() == 200) {
                    String content = EntityUtils.toString(response.getEntity(), "UTF-8");
                    System.out.println(content.length());
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

3.4 POST Request with Parameters

package cn.cfit.spider;

import org.apache.http.NameValuePair;
import org.apache.http.client.entity.UrlEncodedFormEntity;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpPost;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.message.BasicNameValuePair;
import org.apache.http.util.EntityUtils;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

/**
 * @Description: POST request with parameters
 * @Author: SunJie
 * @Date: 2021/7/8
 * @Version: V1.0
 */
public class HttpPostParamTest {

    public static void main(String[] args) {
        // 1. Create the HttpClient object (closed automatically by try-with-resources)
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // 2. Create the HttpPost object and set the URL
            HttpPost httpPost = new HttpPost("https://blog.csdn.net/qq_34474324");
            // Declare a List holding the form parameters
            List<NameValuePair> params = new ArrayList<>();
            params.add(new BasicNameValuePair("t", "1"));
            // Create the form entity (URL-encoded request body)
            UrlEncodedFormEntity formEntity = new UrlEncodedFormEntity(params, "UTF-8");
            // Attach the form entity to the POST request
            httpPost.setEntity(formEntity);
            // 3. Send the request with HttpClient and get the response
            try (CloseableHttpResponse response = httpClient.execute(httpPost)) {
                // 4. Parse the response
                if (response.getStatusLine().getStatusCode() == 200) {
                    String content = EntityUtils.toString(response.getEntity(), "UTF-8");
                    System.out.println(content.length());
                }
            }
        } catch (IOException e) {
            // also covers UnsupportedEncodingException from UrlEncodedFormEntity
            e.printStackTrace();
        }
    }
}
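With a POST form the parameters travel in the request body instead of the URL, and the body is described by a `Content-Type: application/x-www-form-urlencoded` header. The JDK-only sketch below renders a minimal HTTP/1.1 POST message of that shape so the difference from a GET is visible; `FormPost.render` is a hypothetical helper, and the headers HttpClient really sends will differ (it adds its own User-Agent, connection headers and a charset parameter, for example).

```java
import java.net.URI;
import java.nio.charset.StandardCharsets;

final class FormPost {

    /** Renders a minimal HTTP/1.1 POST with an already URL-encoded form body (illustration only). */
    static String render(String url, String encodedBody) {
        URI uri = URI.create(url);
        String target = uri.getRawPath().isEmpty() ? "/" : uri.getRawPath();
        if (uri.getRawQuery() != null) {
            target += "?" + uri.getRawQuery();        // a query in the URL stays in the request line
        }
        String host = uri.getPort() == -1 ? uri.getHost() : uri.getHost() + ":" + uri.getPort();
        int length = encodedBody.getBytes(StandardCharsets.UTF_8).length; // Content-Length is in bytes
        return "POST " + target + " HTTP/1.1\r\n"
                + "Host: " + host + "\r\n"
                + "Content-Type: application/x-www-form-urlencoded\r\n"
                + "Content-Length: " + length + "\r\n"
                + "\r\n"                                  // blank line separates headers from body
                + encodedBody;
    }

    public static void main(String[] args) {
        // Roughly what HttpPostParamTest sends: the form data t=1 is in the body, not the URL
        System.out.println(render("https://blog.csdn.net/qq_34474324", "t=1"));
    }
}
```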

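3.5 HttpClient Connection Pool

package cn.cfit.spider;

import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.client.methods.HttpPost;
import org.apache.http.client.methods.HttpUriRequest;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
import org.apache.http.util.EntityUtils;

import java.io.IOException;

/**
 * @Description: HttpClient connection pool
 * @Author: SunJie
 * @Date: 2021/7/8
 * @Version: V1.0
 */
public class HttpClientPoolTest {

    public static void main(String[] args) {
        // Create the connection pool manager
        PoolingHttpClientConnectionManager cm = new PoolingHttpClientConnectionManager();
        // Maximum total number of connections in the pool
        cm.setMaxTotal(100);
        // Maximum number of connections per route (per target host)
        cm.setDefaultMaxPerRoute(10);
        // Send requests through clients that share the pool manager
        doGet(cm);
        doPost(cm);
        // Shut the pool down once it is no longer needed
        cm.close();
    }

    private static void doGet(PoolingHttpClientConnectionManager cm) {
        execute(cm, new HttpGet("https://www.csdn.net/"));
    }

    private static void doPost(PoolingHttpClientConnectionManager cm) {
        execute(cm, new HttpPost("https://www.csdn.net/"));
    }

    private static void execute(PoolingHttpClientConnectionManager cm, HttpUriRequest request) {
        // Instead of opening new connections every time, the client borrows them from the shared pool
        CloseableHttpClient httpClient = HttpClients.custom().setConnectionManager(cm).build();
        // Closing the response returns the connection to the pool
        try (CloseableHttpResponse response = httpClient.execute(request)) {
            if (response.getStatusLine().getStatusCode() == 200) {
                String content = EntityUtils.toString(response.getEntity(), "UTF-8");
                System.out.println(content.length());
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        // Do not close httpClient here: that would also shut down the shared connection manager
    }
}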
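`setMaxTotal` and `setDefaultMaxPerRoute` impose two limits at once: the whole pool may hold at most N connections, and any single route (roughly, one target host) at most M of them. The toy model below is not how `PoolingHttpClientConnectionManager` is implemented (it has no waiting queue, lease timeout or connection reuse); it is a hypothetical `ToyPool` class that only illustrates how the two limits interact.

```java
import java.util.HashMap;
import java.util.Map;

final class ToyPool {
    private final int maxTotal;
    private final int maxPerRoute;
    private final Map<String, Integer> leasedPerRoute = new HashMap<>();
    private int leasedTotal;

    ToyPool(int maxTotal, int maxPerRoute) {
        this.maxTotal = maxTotal;
        this.maxPerRoute = maxPerRoute;
    }

    /** Tries to lease a connection for the route; false means a real pool would make the caller wait. */
    boolean tryLease(String route) {
        int forRoute = leasedPerRoute.getOrDefault(route, 0);
        if (leasedTotal >= maxTotal || forRoute >= maxPerRoute) {
            return false;
        }
        leasedPerRoute.put(route, forRoute + 1);
        leasedTotal++;
        return true;
    }

    /** Returns a leased connection for the route to the pool. */
    void release(String route) {
        Integer forRoute = leasedPerRoute.get(route);
        if (forRoute == null || forRoute == 0) {
            throw new IllegalStateException("nothing leased for " + route);
        }
        leasedPerRoute.put(route, forRoute - 1);
        leasedTotal--;
    }

    public static void main(String[] args) {
        ToyPool pool = new ToyPool(100, 10);   // same limits as HttpClientPoolTest
        int granted = 0;
        for (int i = 0; i < 15; i++) {
            if (pool.tryLease("www.csdn.net")) {
                granted++;
            }
        }
        System.out.println(granted);                        // 10: the per-route limit is reached
        System.out.println(pool.tryLease("blog.csdn.net")); // true: another route still has room
    }
}
```

In a crawler that hits one site heavily, the per-route limit is usually the one you run into first, which is why it is configured separately from the total.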

3.6 Request Configuration

package cn.cfit.spider;

import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

import java.io.IOException;

/**
 * @Description: request configuration
 * @Author: SunJie
 * @Date: 2021/7/8
 * @Version: V1.0
 */
public class HttpConfigTest {

    public static void main(String[] args) {
        // 1. Create the HttpClient object (closed automatically by try-with-resources)
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // 2. Create the HttpGet object and set the URL
            HttpGet httpGet = new HttpGet("https://www.csdn.net/");
            // Configure the request
            RequestConfig config = RequestConfig.custom()
                    .setConnectTimeout(1000)           // max time to establish the connection, ms
                    .setConnectionRequestTimeout(500)  // max time to wait for a connection from the connection manager, ms
                    .setSocketTimeout(10 * 1000)       // max inactivity between two data packets (SO_TIMEOUT), ms
                    .build();
            // Apply the configuration to this request
            httpGet.setConfig(config);
            // 3. Send the request with HttpClient and get the response
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                // 4. Parse the response
                if (response.getStatusLine().getStatusCode() == 200) {
                    String content = EntityUtils.toString(response.getEntity(), "UTF-8");
                    System.out.println(content.length());
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
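The JDK's own `java.net.URLConnection` exposes comparable connect and read timeouts, which can help when reasoning about what the `RequestConfig` values mean. There is no direct JDK counterpart to `setConnectionRequestTimeout`, because `URLConnection` has no configurable pool to wait on. The sketch only configures a connection: `openConnection()` creates the object without contacting the server, so it runs offline. `TimeoutDemo.configure` is a hypothetical helper.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.URI;
import java.net.URLConnection;

final class TimeoutDemo {

    /** Opens (but does not connect) a URLConnection with timeouts analogous to HttpConfigTest. */
    static URLConnection configure(String url) {
        try {
            URLConnection conn = URI.create(url).toURL().openConnection(); // no network I/O yet
            conn.setConnectTimeout(1000);   // compare setConnectTimeout(1000): limit for connecting, ms
            conn.setReadTimeout(10 * 1000); // compare setSocketTimeout(10*1000): max wait for data while reading, ms
            return conn;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        URLConnection conn = configure("https://www.csdn.net/");
        System.out.println(conn.getConnectTimeout() + " " + conn.getReadTimeout()); // 1000 10000
    }
}
```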

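4. Parsing Data with Jsoup

After fetching a page we still need to parse it. We could parse it with string-handling utilities or with regular expressions, but both approaches carry a high development cost, so we want a tool built specifically for parsing HTML.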
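To see why hand-rolled regex parsing gets expensive, consider pulling the `<title>` text out with `java.util.regex`. The sketch (JDK only; `RegexTitle.extract` is a hypothetical helper) works on a plain tag, but every variation the pattern did not anticipate, such as an attribute on the tag or an HTML entity in the text, needs another special case. An HTML parser handles those for you.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class RegexTitle {
    // (?is): case-insensitive, and '.' also matches line breaks
    private static final Pattern TITLE = Pattern.compile("(?is)<title>(.*?)</title>");

    /** Returns the text of the first <title> element, or null if the pattern does not match. */
    static String extract(String html) {
        Matcher m = TITLE.matcher(html);
        return m.find() ? m.group(1).trim() : null;
    }

    public static void main(String[] args) {
        System.out.println(extract("<head><title>Hello</title></head>"));             // Hello
        System.out.println(extract("<head><title lang=\"en\">Hello</title></head>")); // null: attribute not anticipated
        System.out.println(extract("<title>Tom &amp; Jerry</title>"));               // Tom &amp; Jerry: entity not decoded
    }
}
```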

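4.1 Introduction to Jsoup

Jsoup is an HTML parser for Java that can parse a URL or HTML text directly. It provides a very convenient API for extracting and manipulating data through the DOM, CSS selectors, and jQuery-like methods.

Jsoup's main features are:

- Parsing HTML from a URL, a file, or a string;

- Finding and extracting data with DOM traversal or CSS selectors;

- Manipulating HTML elements, attributes, and text.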

Add the following POM dependencies (Jsoup, plus the test and utility libraries used by the examples):

<!-- https://mvnrepository.com/artifact/org.jsoup/jsoup -->

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.13.1</version>

</dependency>

<!-- https://mvnrepository.com/artifact/junit/junit -->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

<scope>test</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/commons-io/commons-io -->

<dependency>

<groupId>commons-io</groupId>

<artifactId>commons-io</artifactId>

<version>2.6</version>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.commons/commons-lang3 -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.7</version>

</dependency>

4.1.1 Parsing a URL with Jsoup

@Test
public void testUrl() throws Exception {
    // Parse the URL; first argument: the URL to fetch, second argument: timeout in milliseconds
    Document doc = Jsoup.parse(new URL("https://www.csdn.net/"), 10000);
    // Use the tag name to get the text of the <title> element
    String title = doc.getElementsByTag("title").first().text();
    System.out.println(title);
}

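**PS:** Although Jsoup can replace HttpClient and send requests itself, it is rarely used that way. Real projects need multithreading, connection pools, proxies and so on, which Jsoup supports poorly, so Jsoup is generally used only as an HTML parser.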

4.1.2 Parsing a String with Jsoup

Create an empty file named csdn.html and paste in the following content:

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>菜鸟教程(runoob.com)</title>

</head>

<body>

<h1>我的第一个标题</h1>

<p>我的第一个段落。</p>

<h3 id = "city_bj" class = "sname sss" acc="213">北京</h3>

<h3 id = "city_tj" acc="123">天津</h3>

<h3 id = "city_sz" acc="323">深圳</h3>

<span class = "sname">内蒙古</span>

<span class = "sname">赤峰市</span>

<span class = "sname">克什克腾旗</span>

</body>

</html>

@Test
public void testString() throws Exception {
    // Read the file into a string with Commons IO
    String content = FileUtils.readFileToString(new File("C:\\Users\\Pactera\\Desktop\\csdn.html"), "utf8");
    // Parse the string
    Document document = Jsoup.parse(content);
    System.out.println(document.getElementsByTag("title").first().text());
}

4.1.3 Parsing a File with Jsoup

@Test
public void testFile() throws Exception {
    // Parse the file
    Document document = Jsoup.parse(new File("C:\\Users\\Pactera\\Desktop\\csdn.html"), "utf8");
    System.out.println(document.getElementsByTag("h1").first().text());
}

4.1.4 Traversing the Document with DOM Methods

Getting elements:

- Get an element by id: getElementById

- Get elements by tag name: getElementsByTag

- Get elements by class: getElementsByClass

- Get elements by attribute: getElementsByAttribute

@Test
public void testDom() throws Exception {
    // Parse the file
    Document dom = Jsoup.parse(new File("C:\\Users\\Pactera\\Desktop\\csdn.html"), "utf8");
    // 1. Get an element by id: getElementById
    Element cityTj = dom.getElementById("city_tj");
    System.out.println(cityTj.text());
    // 2. Get elements by tag name: getElementsByTag
    Element span = dom.getElementsByTag("span").first();
    System.out.println(span.text());
    // 3. Get elements by class: getElementsByClass
    Elements names = dom.getElementsByClass("sname");
    names.forEach(name -> System.out.println(name.text()));
    // 4. Get elements by attribute: getElementsByAttribute
    Elements accs = dom.getElementsByAttribute("acc");
    accs.forEach(acc -> System.out.println(acc.text()));
    // ... or by attribute name and value: getElementsByAttributeValue
    Elements accs1 = dom.getElementsByAttributeValue("acc", "323");
    accs1.forEach(acc -> System.out.println(acc.text()));
}

Getting data from an element:

- Get the id: id

- Get the class name: className

- Get the value of an attribute: attr

- Get all attributes: attributes

- Get the text content: text

@Test
public void testData() throws Exception {
    // Parse the file
    Document dom = Jsoup.parse(new File("C:\\Users\\Pactera\\Desktop\\csdn.html"), "utf8");
    // Get the element with id "city_bj"
    Element cityBj = dom.getElementById("city_bj");
    // 1. Get the id
    System.out.println(cityBj.id());
    // 2. Get the class name: className() returns the whole class attribute, classNames() a Set of names
    System.out.println(cityBj.className());
    System.out.println(cityBj.classNames());
    // 3. Get the value of an attribute
    System.out.println(cityBj.attr("acc"));
    // 4. Get all attributes
    System.out.println(cityBj.attributes());
    // 5. Get the text content
    System.out.println(cityBj.text());
}

4.1.5 Traversing the Document with Selectors

- tagname: find elements by tag, e.g. span

- #id: find elements by id, e.g. #city_bj

- .class: find elements by class name, e.g. .sss

- [attribute]: find elements that have an attribute, e.g. [acc]

- [attr=value]: find elements by attribute value, e.g. [class=sname] (the whole value must match, so the h3 with class="sname sss" is not selected)

@Test
public void testSelector() throws Exception {
    // Parse the file
    Document dom = Jsoup.parse(new File("C:\\Users\\Pactera\\Desktop\\csdn.html"), "utf8");
    // tagname: find elements by tag, e.g. span
    Elements spans = dom.select("span");
    spans.forEach(span -> System.out.println(span.text()));
    // #id: find elements by id, e.g. #city_bj
    Elements selects = dom.select("#city_bj");
    selects.forEach(select -> System.out.println(select.text()));
    // .class: find elements by class name, e.g. .sss
    Elements classes = dom.select(".sss");
    classes.forEach(cl -> System.out.println(cl.text()));
    // [attribute]: find elements that have an attribute, e.g. [acc]
    Elements accs = dom.select("[acc]");
    accs.forEach(acc -> System.out.println(acc.text()));
    // [attr=value]: find elements by attribute value, e.g. [class=sname]
    Elements select = dom.select("[class=sname]");
    select.forEach(se -> System.out.println(se.text()));
}

4.1.6 Combining Selectors

- el#id: element + id, e.g. h3#city_bj

- el.class: element + class, e.g. li.aaa

- Any combination, e.g. span[abc].sname

- ancestor child: descendants of an element, e.g. .city_con li finds all li elements under .city_con

- parent>child: direct children of a parent element, e.g. .city_con>ul>li finds the ul elements that are direct children of .city_con, then the li elements that are direct children of those ul elements

- parent>*: all direct children of a parent element

Note that csdn.html as given above contains no .city_con, li.aaa or span[abc] elements, so those selectors print nothing until you add matching markup.

@Test
public void testSelectorAll() throws Exception {
    // Parse the file
    Document dom = Jsoup.parse(new File("C:\\Users\\Pactera\\Desktop\\csdn.html"), "utf8");
    // el#id: element + id, e.g. h3#city_bj
    Elements select1 = dom.select("h3#city_bj");
    select1.forEach(se -> System.out.println("1" + se.text()));
    // el.class: element + class, e.g. li.aaa
    Elements select2 = dom.select("li.aaa");
    select2.forEach(se -> System.out.println("2" + se.text()));
    // Any combination, e.g. span[abc].sname
    Elements select3 = dom.select("span[abc].sname");
    select3.forEach(se -> System.out.println("3" + se.text()));
    // ancestor child: all li descendants of .city_con
    Elements select4 = dom.select(".city_con li");
    select4.forEach(se -> System.out.println("4" + se.text()));
    // parent>child: li elements that are direct children of .city_con
    Elements select5 = dom.select(".city_con>li");
    select5.forEach(se -> System.out.println("5" + se.text()));
    // .city_con>ul>li: direct-child ul of .city_con, then the direct-child li of each ul
    Elements select6 = dom.select(".city_con>ul>li");
    select6.forEach(se -> System.out.println("6" + se.text()));
    // parent>*: all direct children of .city_con
    Elements select7 = dom.select(".city_con > *");
    select7.forEach(se -> System.out.println("7" + se.text()));
}

五、爬虫案例

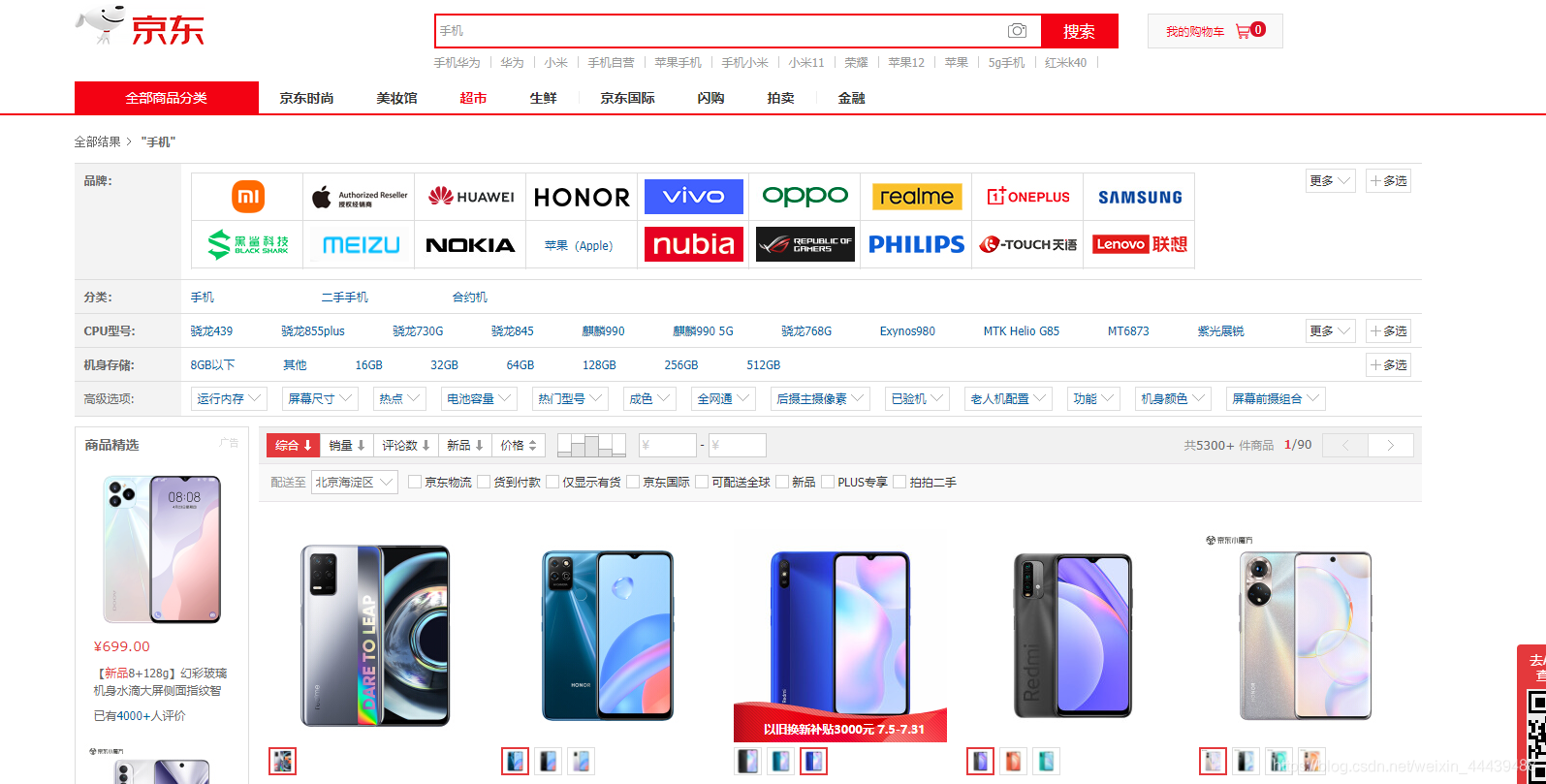

学习了HttpClient的Jsoup,就掌握了如何抓取数据和如何解析数据,接下来,我们做一个小练习,把京东的手机数据抓取下来。

5.1需求分析

首先访问京东,搜索手机,分析页面,我们抓取以下商品数据:

商品图片、价格、标题、商品详情页等。

5.1.2 SPU和SKU

除了以上的四个属性外,我们发现上图中的部分手机有多种产品,我们应该每一种都抓取,那么这里就必须要了解SPU和SKU的概念。

SPU = Standard Product Unit(标准产品单位)

SPU是商品信息聚合的最小单位,是一组可复用、易检索的标准化信息的采合,该集合描述了一个产品的特性。即:属性值、特性相同的商品就可以成为一个SPU。

SKU = stock kepping unti(库存计量单位)

SKU 即库存进出计量单位,可以是以件、盒、托盘等为单位。SKU是物理上不可分割的最小存货单元。在使用时,要根据不同业态,不同管理类模式来处理。在服装、鞋类商品中使用最多最普遍。
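In the crawler in 5.3.6, each search-result `<li>` carries a `data-spu` attribute (the code falls back to `data-sku` when it is empty) and contains one `li.ps-item` per SKU, so one SPU maps to many SKUs. This JDK-only sketch models that relationship with a map; `SpuSku` is a hypothetical helper and the IDs are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class SpuSku {

    /** Mirrors the crawler's rule: use data-spu, or data-sku when data-spu is empty. */
    static long resolveSpu(String dataSpu, String dataSku) {
        return Long.parseLong(dataSpu.isEmpty() ? dataSku : dataSpu);
    }

    /** Groups SKU ids under their SPU id, preserving first-seen order. */
    static Map<Long, List<Long>> group(List<long[]> skuSpuPairs) {
        Map<Long, List<Long>> bySpu = new LinkedHashMap<>();
        for (long[] pair : skuSpuPairs) {   // pair[0] = sku, pair[1] = spu
            bySpu.computeIfAbsent(pair[1], k -> new ArrayList<>()).add(pair[0]);
        }
        return bySpu;
    }

    public static void main(String[] args) {
        // Hypothetical IDs: two color variants of one phone, plus a phone with a single SKU
        List<long[]> pairs = Arrays.asList(
                new long[]{1001L, 1000L},
                new long[]{1002L, 1000L},
                new long[]{2001L, resolveSpu("", "2001")});
        System.out.println(group(pairs)); // {1000=[1001, 1002], 2001=[2001]}
    }
}
```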

5.2 Development Preparation

5.2.1 Create the table:

CREATE TABLE `jd_item` (
  `id` bigint(10) NOT NULL AUTO_INCREMENT COMMENT 'primary key id',
  `spu` bigint(15) NULL DEFAULT NULL COMMENT 'product group (SPU) id',
  `sku` bigint(15) NULL DEFAULT NULL COMMENT 'smallest product unit (SKU) id',
  `title` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL COMMENT 'product title',
  `price` bigint(10) NULL DEFAULT NULL COMMENT 'product price',
  `pic` varchar(200) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL COMMENT 'product image',
  `url` varchar(100) CHARACTER SET utf8 COLLATE utf8_general_ci NULL DEFAULT NULL COMMENT 'product detail URL',
  `created` datetime NULL DEFAULT NULL COMMENT 'created at',
  `updated` datetime NULL DEFAULT NULL COMMENT 'updated at',
  PRIMARY KEY (`id`) USING BTREE
) ENGINE = InnoDB AUTO_INCREMENT = 1 CHARACTER SET = utf8 COLLATE = utf8_general_ci COMMENT = 'JD product table' ROW_FORMAT = Dynamic;
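The `price` column is a `bigint`, and the crawler reads the price with Jackson's `asLong()`. If the price endpoint returns a decimal string such as "1999.50" (an assumption about its format), the fractional part cannot survive that conversion. One common way to keep integer storage without losing precision is to store the amount in the smallest currency unit (fen, 1/100 yuan). The hypothetical `PriceCents` helper below sketches that conversion with `BigDecimal`; it is an alternative, not part of the original design.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

final class PriceCents {

    /** Converts a decimal yuan string such as "1999.50" to a whole number of fen. */
    static long toCents(String yuan) {
        return new BigDecimal(yuan.trim())
                .movePointRight(2)                  // yuan -> fen
                .setScale(0, RoundingMode.HALF_UP)  // round any sub-fen digits
                .longValueExact();                  // throws ArithmeticException if it overflows a long
    }

    /** Formats fen back to a yuan string with two decimals. */
    static String toYuan(long cents) {
        return BigDecimal.valueOf(cents, 2).toPlainString();
    }

    public static void main(String[] args) {
        System.out.println(toCents("1999.50")); // 199950
        System.out.println(toYuan(199950));     // 1999.50
    }
}
```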

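5.2.2 Add the dependencies:

The project is built with Spring Boot, MyBatis-Plus, and Spring scheduled tasks.

Create a Maven project and add the following to pom.xml (the <dependency> entries go inside the <dependencies> element):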

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.3.4.RELEASE</version>

<relativePath/>

</parent>

<!--SpringMVC-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<!--MyBatisPlus-->

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-boot-starter</artifactId>

<version>3.1.2</version>

</dependency>

<!-- MyBatis-Plus code generator -->

<dependency>

<groupId>com.baomidou</groupId>

<artifactId>mybatis-plus-generator</artifactId>

<version>3.0.7.1</version>

</dependency>

<!-- Velocity template engine (the default) -->

<dependency>

<groupId>org.apache.velocity</groupId>

<artifactId>velocity-engine-core</artifactId>

<version>2.0</version>

</dependency>

<!-- FreeMarker template engine -->

<dependency>

<groupId>org.freemarker</groupId>

<artifactId>freemarker</artifactId>

<version>2.3.31</version>

</dependency>

<!-- Beetl template engine -->

<dependency>

<groupId>com.ibeetl</groupId>

<artifactId>beetl</artifactId>

<version>2.2.5</version>

</dependency>

<!-- MySQL driver -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

</dependency>

<!--HttpClient-->

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

</dependency>

<!--Jsoup-->

<dependency>

<groupId>org.jsoup</groupId>

<artifactId>jsoup</artifactId>

<version>1.13.1</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<!-- Utilities -->

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

</dependency>

5.2.3 Add the configuration file

application.properties

# Datasource

spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver

spring.datasource.url=jdbc:mysql://192.168.8.32/spider-demo1?characterEncoding=UTF-8&useSSL=false

spring.datasource.username=root

spring.datasource.password=123456

5.3 Implementation

5.3.1 Generate the entity class with MyBatis-Plus

(Omitted.)

5.3.2 Define the service interface and implementation

ItemService

/**
 * @Description: product information
 * @Author: SunJie
 * @Date: 2021/7/12
 * @Version: V1.0
 */
public interface ItemService {

    /**
     * Save a product.
     * @param item the product to save
     */
    void save(Item item);

    /**
     * Query products by the given conditions.
     * @param item query conditions
     * @return the matching products
     */
    List<Item> findAll(Item item);
}

ItemServiceImpl

/**
 * @Description: product information implementation
 * @Author: SunJie
 * @Date: 2021/7/12
 * @Version: V1.0
 */
@Service
public class ItemServiceImpl implements ItemService {

    @Resource
    private JdItemMapper itemMapper;

    /**
     * Save a product.
     * @param item the product to save
     */
    @Override
    @Transactional // run inside a transaction
    public void save(Item item) {
        itemMapper.insert(item);
    }

    /**
     * Query products by the given conditions.
     * @param item query conditions (the entity is used as the WHERE condition)
     * @return the matching products
     */
    @Override
    public List<Item> findAll(Item item) {
        QueryWrapper<Item> queryWrapper = new QueryWrapper<>(item);
        return itemMapper.selectList(queryWrapper);
    }
}

5.3.3 Add the application class

Application

/**
 * @Description: application entry point
 * @Author: SunJie
 * @Date: 2021/7/14
 * @Version: V1.0
 */
@MapperScan("cn.cfit.spider.dao")
@EnableScheduling // scheduling must be enabled for @Scheduled methods to run
@SpringBootApplication
public class Application {

    public static void main(String[] args) {
        SpringApplication.run(Application.class, args);
    }
}

5.3.4 Wrap the HttpClient connection pool

/**
 * @Description: HttpClient connection pool
 * @Author: SunJie
 * @Date: 2021/7/14
 * @Version: V1.0
 */
@Configuration
public class HttpClientPoolConfig {

    @Bean
    public CloseableHttpClient getHttpClient() {
        PoolingHttpClientConnectionManager cm = new PoolingHttpClientConnectionManager();
        // Maximum total number of connections
        cm.setMaxTotal(200);
        // Maximum concurrent connections per route (per host)
        cm.setDefaultMaxPerRoute(20);
        return HttpClients.custom().setConnectionManager(cm).build();
    }
}

5.3.5 Wrap HttpClient in a utility class

/**
 * @Description: HTTP utility class
 * @Author: SunJie
 * @Date: 2021/7/14
 * @Version: V1.0
 */
@Slf4j
@Component
public class HttpUtils {

    @Resource
    private HttpClientPoolConfig httpClientConfig;

    /**
     * Fetch the page content at the given URL.
     *
     * @param url the URL to fetch
     * @return the page content, or "" if the request failed or the status was not 200
     */
    public String doGetHtml(String url) {
        // Get the pooled HttpClient (calls through the @Configuration proxy return the same bean)
        CloseableHttpClient httpClient = httpClientConfig.getHttpClient();
        // Create the HttpGet object and set the URL
        HttpGet httpGet = new HttpGet(url);
        // Apply the request configuration
        httpGet.setConfig(getConfig());
        // Send the request; closing the response returns the connection to the pool
        try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
            // Parse the response and return the result
            if (response.getStatusLine().getStatusCode() == 200) {
                log.info("Fetched {} successfully, parsing...", url);
                return EntityUtils.toString(Objects.requireNonNull(response.getEntity()), "utf8");
            }
            log.warn("Unexpected status {} for {}", response.getStatusLine().getStatusCode(), url);
        } catch (IOException e) {
            log.error("Failed to fetch {}", url, e);
        }
        return "";
    }

    /**
     * Download an image.
     *
     * @param url        the image URL
     * @param outFileUrl output directory, ending with a path separator
     * @return the path of the saved file, or "" on failure
     */
    public String doGetImage(String url, String outFileUrl) {
        // Get the pooled HttpClient
        CloseableHttpClient httpClient = httpClientConfig.getHttpClient();
        // Create the HttpGet object and set the URL
        HttpGet httpGet = new HttpGet(url);
        // Apply the request configuration
        httpGet.setConfig(getConfig());
        // Send the request and get the response
        try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
            if (response.getStatusLine().getStatusCode() == 200) {
                log.info("Fetched image {} successfully, saving...", url);
                // Rename the file with a random UUID, keeping the original extension
                String picName = UUID.randomUUID().toString() + url.substring(url.lastIndexOf("."));
                // Download the image; try-with-resources closes the file stream
                try (OutputStream outputStream = new FileOutputStream(outFileUrl + picName)) {
                    Objects.requireNonNull(response.getEntity()).writeTo(outputStream);
                }
                return outFileUrl + picName;
            }
            log.warn("Unexpected status {} for {}", response.getStatusLine().getStatusCode(), url);
        } catch (IOException e) {
            log.error("Failed to download image {}", url, e);
        }
        return "";
    }

    /**
     * @return the request configuration
     */
    private static RequestConfig getConfig() {
        return RequestConfig.custom()
                .setConnectTimeout(1000)           // max time to establish the connection, ms
                .setConnectionRequestTimeout(500)  // max time to wait for a connection from the pool, ms
                .setSocketTimeout(10000)           // max inactivity between two data packets, ms
                .build();
    }
}
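`doGetImage` derives the file suffix with `url.substring(url.lastIndexOf("."))`. That works for URLs ending in something like `abc.jpg`, but it returns the wrong suffix when a query string contains a dot, and it throws `StringIndexOutOfBoundsException` when the URL has no dot at all. A slightly more defensive variant, sketched with `java.net.URI` (the `ImageNames` class is hypothetical, and the URLs in the examples are made up), looks only at the last path segment:

```java
import java.net.URI;
import java.util.UUID;

final class ImageNames {

    /** Returns the extension (including the dot) of the URL's last path segment, or "" if none. */
    static String extension(String url) {
        String path = URI.create(url).getPath();                 // excludes ?query and #fragment
        String lastSegment = path.substring(path.lastIndexOf('/') + 1);
        int dot = lastSegment.lastIndexOf('.');
        return dot >= 0 ? lastSegment.substring(dot) : "";
    }

    /** Random file name that keeps the original extension, as doGetImage intends. */
    static String randomName(String url) {
        return UUID.randomUUID().toString() + extension(url);
    }

    public static void main(String[] args) {
        System.out.println(extension("https://img.example.com/n1/abc.jpg"));       // .jpg
        System.out.println(extension("https://img.example.com/n1/abc.jpg?v=1.2")); // .jpg
        System.out.println(extension("https://img.example.com/v1.0/abc"));        // prints an empty line
    }
}
```

Note that `URI.create` rejects URLs containing characters such as unencoded spaces, so a real crawler may still want to catch `IllegalArgumentException` around it.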

5.3.6 Implement data fetching

Use a scheduled task to fetch the latest data periodically.

/**
 * @Description: product crawling task
 * @Author: SunJie
 * @Date: 2021/7/14
 * @Version: V1.0
 */
@Slf4j
@Component
public class ItemTask {

    /** HTTP utility */
    @Resource
    private HttpUtils httpUtils;

    /** Thread pool configuration */
    @Resource
    private ThreadPoolConfig threadPoolConfig;

    /** Product service */
    @Resource
    private ItemService itemService;

    /** JSON parser */
    private static final ObjectMapper MAPPER = new ObjectMapper();

    // fixedDelay: wait 100 s after the previous invocation returns before triggering the next one.
    // The crawl itself runs on a thread pool, so this method returns right after submitting it
    // and the delay is measured from submission, not from the end of the crawl.
    @Scheduled(fixedDelay = 100 * 1000)
    public void itemTask() {
        // Initial search URL; the page number is appended
        String url = "https://search.jd.com/Search?keyword=%E6%89%8B%E6%9C%BA&suggest=1.his.0.0&wq=%E6%89%8B%E6%9C%BA&pvid=9188f8b1523e4c73b7bd9972b05ccf1e&s=57&click=0&page=";
        // Get the thread pool
        ThreadPoolTaskExecutor executor = threadPoolConfig.asyncServiceExecutor();
        // Run the crawl on the thread pool
        executor.submit(() -> {
            // Walk through the result pages of the phone search
            for (int i = 1; i < 10; i = i + 2) {
                String html = httpUtils.doGetHtml(url + i);
                // Parse the page, extract the product data, and store it
                try {
                    parse(html);
                } catch (Exception e) {
                    log.error("Failed to parse page {}", i, e);
                }
            }
            // Logged here, not after submit(), because the work finishes asynchronously
            log.info("Crawling finished!");
        });
    }

    /**
     * Parse a search results page.
     *
     * @param html page content
     */
    private void parse(String html) throws Exception {
        // Parse the HTML into a Document
        Document parse = Jsoup.parse(html);
        // Get the product <li> elements
        Elements elements = parse.select("div#J_goodsList > ul > li");
        for (Element element : elements) {
            // Get the SPU; fall back to data-sku when data-spu is empty
            long spu = Long.parseLong(element.attr("data-spu").isEmpty()
                    ? element.attr("data-sku") : element.attr("data-spu"));
            // Get the SKU entries
            Elements skuLis = element.select("li.ps-item");
            for (Element skus : skuLis) {
                long sku = Long.parseLong(skus.select("[data-sku]").attr("data-sku"));
                // Look up the product by SKU; if it already exists, skip to the next SKU
                Item item = new Item();
                item.setSku(sku);
                if (!CollectionUtils.isEmpty(itemService.findAll(item))) {
                    continue;
                }
                // Get the product price
                String priceJson = httpUtils.doGetHtml("https://p.3.cn/prices/mgets?skuIds=J_" + sku);
                long price = MAPPER.readTree(priceJson).get(0).get("p").asLong();
                // Get the product image URL (switch the /n7/ path segment to /n1/)
                String picUrl = "https:" + skus.select("img[data-sku]").first().attr("data-lazy-img");
                picUrl = picUrl.replace("/n7/", "/n1/");
                // Download the image
                picUrl = httpUtils.doGetImage(picUrl, "C:\\Users\\Pactera\\Desktop\\images\\");
                // Build the product detail URL
                String itemUrl = "https://item.jd.com/" + sku + ".html";
                // Get the product title from the detail page
                String itemHtml = httpUtils.doGetHtml(itemUrl);
                String title = Jsoup.parse(itemHtml).select("div.sku-name").text();
                // Assemble the item
                item.setSpu(spu);
                item.setUrl(itemUrl);
                item.setTitle(title);
                item.setPrice(price);
                item.setPic(picUrl);
                item.setCreated(LocalDateTime.now());
                item.setUpdated(item.getCreated());
                // Store the item
                itemService.save(item);
            }
        }
    }
}
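`itemTask` requests `page=1, 3, 5, 7, 9` because its loop starts at 1 and steps by 2. The handbook does not explain the step, and the JD URL scheme may change over time, so it can help to keep the page list in one small, testable place. `JdPages.pageUrls` below is a hypothetical JDK-only helper that reproduces the loop's bounds.

```java
import java.util.ArrayList;
import java.util.List;

final class JdPages {

    /** Returns baseUrl + page for page = first, first + step, ... while page < endExclusive. */
    static List<String> pageUrls(String baseUrl, int first, int endExclusive, int step) {
        if (step <= 0) {
            throw new IllegalArgumentException("step must be positive");
        }
        List<String> urls = new ArrayList<>();
        for (int page = first; page < endExclusive; page += step) {
            urls.add(baseUrl + page);
        }
        return urls;
    }

    public static void main(String[] args) {
        // Same bounds as itemTask: i = 1; i < 10; i = i + 2
        for (String u : pageUrls("https://search.jd.com/Search?keyword=%E6%89%8B%E6%9C%BA&page=", 1, 10, 2)) {
            System.out.println(u);
        }
    }
}
```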