环境准备

1、主机准备

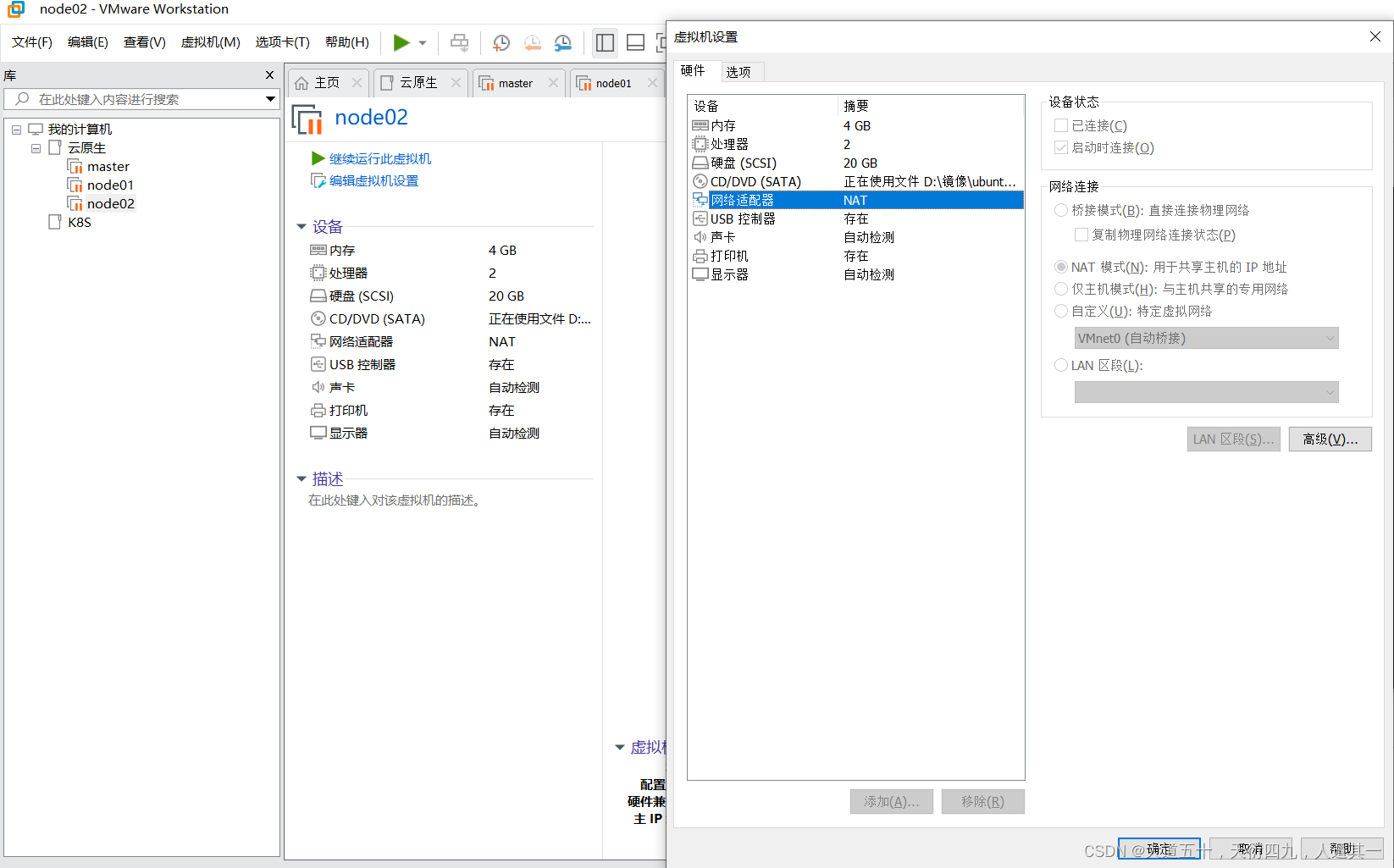

VMware Workstation安装3台虚拟机

网卡模式为NAT模式

2. Versions

Operating system: ubuntu-22.04.3-desktop-amd64

Kubernetes: v1.26.3

Container runtime: containerd v1.6.20

Client: nerdctl-1.3.0

CNI plugins: cni-plugins-linux-amd64-v1.2.0

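System Initialization (All Nodes)

1. Disable the firewall

Ubuntu ships with a firewall configuration tool called UFW. Disable it and confirm that its status is inactive:

```
# ufw disable
# ufw status
Status: inactive
```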

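2. Disable swap

When a cluster is deployed with kubeadm, the preflight checks verify that swap is disabled on the host, and by default the kubelet refuses to start while swap is on; otherwise the deployment fails.

```
# swapoff -a
# free
               total        used        free      shared  buff/cache   available
Mem:         3964464     1420180     1345660       15060     1198624     2280516
Swap:              0           0           0
```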
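swapoff -a only lasts until the next reboot: swap listed in /etc/fstab is turned back on at boot. A minimal sketch for making the change persistent, assuming swap entries in /etc/fstab carry swap in their third (filesystem type) field; disable_swap_in_fstab and disable_swap_permanently are hypothetical helper names, and a .bak copy of the file is kept:

```sh
#!/bin/sh
# Hypothetical helper: comment out every active swap entry in an fstab-format file.
# An entry counts as swap when its third field (filesystem type) is "swap".
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "${fstab}.bak" || return 1     # keep a backup copy
  # Rewrite the original file in place from the backup; comment lines are left alone.
  awk '$1 !~ /^#/ && $3 == "swap" { print "# " $0; next } { print }' \
    "${fstab}.bak" > "$fstab"
}

# Turn swap off now and keep it off after a reboot (run as root).
disable_swap_permanently() {
  swapoff -a && disable_swap_in_fstab /etc/fstab
}

# Usage:
#   disable_swap_permanently
```

Running it twice is harmless, because entries that are already commented out are skipped.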

3. Configure time synchronization

```
# apt install chrony
# systemctl start chrony.service
```

Check that the clock is synchronized:

```
# chronyc tracking
Reference ID    : CA760182 (time.neu.edu.cn)
Stratum         : 2
Ref time (UTC)  : Mon Dec 18 11:13:49 2023
System time     : 0.000578838 seconds slow of NTP time
Last offset     : -0.000388255 seconds
RMS offset      : 0.000761426 seconds
Frequency       : 5.403 ppm fast
Residual freq   : -0.105 ppm
Skew            : 14.889 ppm
Root delay      : 0.023036918 seconds
Root dispersion : 0.003026418 seconds
Update interval : 64.8 seconds
Leap status     : Normal
```
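chrony should also start at boot, and the synchronization check can be scripted instead of read by eye. A minimal sketch, assuming the chronyc tracking output format shown above, where the Leap status line reads Not synchronised while the clock is unsynchronized; chrony_synced is a hypothetical helper name:

```sh
#!/bin/sh
# Hypothetical helper: read `chronyc tracking` output on stdin and succeed only
# when a "Leap status" line is present and is not "Not synchronised".
chrony_synced() {
  awk -F' *: *' '
    $1 == "Leap status" { found = 1; status = $2 }
    END { exit !(found && status != "Not synchronised") }
  '
}

# Usage (as root):
#   systemctl enable --now chrony.service
#   chronyc tracking | chrony_synced && echo "clock synchronized"
```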

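4. Set the hostname and the hosts mappings

On each node, set that node's own hostname (master on the master node, node1 and node2 on the workers), then add the same mappings to /etc/hosts on every node:

```
# hostnamectl set-hostname master
# cat >> /etc/hosts << EOF
192.168.0.174 master
192.168.0.175 node1
192.168.0.176 node2
EOF
```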
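Running the cat >> /etc/hosts command a second time appends duplicate entries. A minimal idempotent sketch, assuming the same IP/hostname pairs; add_host_entry is a hypothetical helper, and the file path is a parameter so it can be tried on a copy first:

```sh
#!/bin/sh
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the same
# mapping (identical first two fields) is already present.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if ! awk -v ip="$ip" -v n="$name" \
      '$1 == ip && $2 == n { found = 1 } END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage (as root, on every node):
#   add_host_entry 192.168.0.174 master
#   add_host_entry 192.168.0.175 node1
#   add_host_entry 192.168.0.176 node2
```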

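Installing the Container Runtime, Client Tool, and CNI Plugins

1. This example installs the binary packages with the script below. The packages and the script can be downloaded from:

Link: https://pan.baidu.com/s/1r9wS8OUj-OQxtSId-KN3pQ?pwd=hd3i

Extraction code: hd3i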

```bash
#!/bin/bash
# runtime-install.sh: install docker (CentOS or Ubuntu) or containerd + runc +
# CNI plugins + nerdctl (Ubuntu) from the binary packages in the current directory.
# Usage: bash runtime-install.sh containerd|docker

DIR=$(pwd)
PACKAGE_NAME="docker-20.10.19.tgz"
DOCKER_FILE="${DIR}/${PACKAGE_NAME}"

#read -p "Enter the regular user that will use the docker server (default: docker): " USERNAME
if [ -z "${USERNAME}" ]; then
  USERNAME=docker
fi

centos_install_docker(){
  # CentOS's /etc/issue contains the word "Kernel"
  if grep -q "Kernel" /etc/issue; then
    /bin/echo "Current system: $(cat /etc/redhat-release). Starting system initialization, docker-compose setup and docker installation" && sleep 1
    systemctl stop firewalld && systemctl disable firewalld && echo "firewalld disabled" && sleep 1
    systemctl stop NetworkManager && systemctl disable NetworkManager && echo "NetworkManager disabled" && sleep 1
    # /etc/sysconfig/selinux is a symlink; edit the real file so sed -i does not replace the link
    sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config && setenforce 0 && echo "SELinux disabled" && sleep 1
    \cp "${DIR}/limits.conf" /etc/security/limits.conf
    \cp "${DIR}/sysctl.conf" /etc/sysctl.conf
    /bin/tar xvf "${DOCKER_FILE}"
    \cp docker/* /usr/local/bin
    mkdir -p /etc/docker && \cp daemon.json /etc/docker
    \cp containerd.service /lib/systemd/system/containerd.service
    \cp docker.service /lib/systemd/system/docker.service
    \cp docker.socket /lib/systemd/system/docker.socket
    \cp "${DIR}/docker-compose-Linux-x86_64_1.28.6" /usr/bin/docker-compose
    groupadd docker && useradd docker -s /sbin/nologin -g docker
    # create the user if needed, then add it to the docker group (-a keeps its other groups)
    id -u "${USERNAME}" &> /dev/null || useradd "${USERNAME}"
    usermod -aG docker "${USERNAME}"
    docker_install_success_info
  fi
}

ubuntu_install_docker(){
  if grep -q "Ubuntu" /etc/issue; then
    /bin/echo "Current system: $(cat /etc/issue). Starting system initialization, docker-compose setup and docker installation" && sleep 1
    \cp "${DIR}/limits.conf" /etc/security/limits.conf
    \cp "${DIR}/sysctl.conf" /etc/sysctl.conf
    /bin/tar xvf "${DOCKER_FILE}"
    \cp docker/* /usr/local/bin
    mkdir -p /etc/docker && \cp daemon.json /etc/docker
    \cp containerd.service /lib/systemd/system/containerd.service
    \cp docker.service /lib/systemd/system/docker.service
    \cp docker.socket /lib/systemd/system/docker.socket
    \cp "${DIR}/docker-compose-Linux-x86_64_1.28.6" /usr/bin/docker-compose
    groupadd docker && useradd docker -r -m -s /sbin/nologin -g docker
    if ! id -u "${USERNAME}" &> /dev/null; then
      groupadd -r "${USERNAME}"
      useradd -r -m -s /bin/bash -g "${USERNAME}" "${USERNAME}"
    fi
    usermod -aG docker "${USERNAME}"
    docker_install_success_info
  fi
}

ubuntu_install_containerd(){
  DIR=$(pwd)
  PACKAGE_NAME="containerd-1.6.20-linux-amd64.tar.gz"
  CONTAINERD_FILE="${DIR}/${PACKAGE_NAME}"
  NERDCTL="nerdctl-1.3.0-linux-amd64.tar.gz"
  CNI="cni-plugins-linux-amd64-v1.2.0.tgz"
  RUNC="runc.amd64"

  mkdir -p /etc/containerd /etc/nerdctl
  # containerd binaries (containerd, ctr, shims) go to /usr/local/bin
  tar xvf "${CONTAINERD_FILE}" && \cp bin/* /usr/local/bin/
  \cp "${RUNC}" /usr/bin/runc && chmod a+x /usr/bin/runc
  \cp config.toml /etc/containerd/config.toml
  \cp containerd.service /lib/systemd/system/containerd.service

  # CNI plugins
  mkdir -p /opt/cni/bin
  tar xvf "${CNI}" -C /opt/cni/bin/

  # nerdctl client
  tar xvf "${NERDCTL}" -C /usr/local/bin/
  \cp nerdctl.toml /etc/nerdctl/nerdctl.toml

  containerd_install_success_info
}

containerd_install_success_info(){
  /bin/echo "Starting the containerd server and enabling it at boot"
  systemctl daemon-reload && systemctl restart containerd && systemctl enable containerd
  /bin/echo "containerd is:" "$(systemctl is-active containerd)"
  sleep 0.5 && /bin/echo "containerd server installed. Welcome to the world of containerd!" && sleep 1
}

docker_install_success_info(){
  /bin/echo "Starting the docker server and enabling it at boot"
  systemctl daemon-reload    # pick up the unit files copied above
  systemctl enable containerd.service && systemctl restart containerd.service
  systemctl enable docker.service && systemctl restart docker.service
  systemctl enable docker.socket && systemctl restart docker.socket
  sleep 0.5 && /bin/echo "docker server installed. Welcome to the world of docker!" && sleep 1
}

usage(){
  echo "Usage: bash $0 containerd|docker"
}

main(){
  RUNTIME=$1
  case "${RUNTIME}" in
    docker)
      # each function checks /etc/issue and only acts on its own distribution
      centos_install_docker
      ubuntu_install_docker
      ;;
    containerd)
      ubuntu_install_containerd
      ;;
    *)
      usage
      ;;
  esac
}

main "$1"
```

2. Install and verify

```
# tar xvf runtime-docker20.10.19-containerd1.6.20-binary-install.tar.gz
# bash runtime-install.sh containerd
# containerd -v
containerd github.com/containerd/containerd v1.6.20 2806fc1057397dbaeefbea0e4e17bddfbd388f38
# nerdctl -v
nerdctl version 1.3.0
```
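Beyond the two version checks, it is worth confirming on every node that each binary the script installs is on the PATH and that the CNI plugins landed in /opt/cni/bin. A minimal sketch; missing_commands is a hypothetical helper, and the plugin names in the usage lines are assumptions based on the contents of the cni-plugins-linux-amd64-v1.2.0 archive:

```sh
#!/bin/sh
# Hypothetical helper: print every argument that is not found as a command on
# PATH (checked with the POSIX `command -v`); succeed only if none is missing.
missing_commands() {
  local rc=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on each node after running runtime-install.sh:
#   missing_commands containerd containerd-shim-runc-v2 ctr runc nerdctl
#   ls /opt/cni/bin/bridge /opt/cni/bin/host-local /opt/cni/bin/loopback
```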

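3. Modify the containerd configuration file

Switch the cgroup driver to systemd. Since v1.22, kubeadm configures the kubelet to use the systemd cgroup driver by default, and the container runtime must use the same driver, while containerd's runc runtime defaults to cgroupfs (SystemdCgroup = false).

Point the pause (sandbox) image at a mirror reachable from mainland China, using the pause:3.9 tag that kubeadm v1.26.3 expects (the same image that appears in the image list below), and add a registry mirror for docker.io.

containerd config default regenerates the full default configuration, replacing the config.toml copied by the install script; then edit the entries shown:

```
# mkdir -p /etc/containerd
# containerd config default > /etc/containerd/config.toml
# vim /etc/containerd/config.toml
    sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9"

          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
            SystemdCgroup = true

      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
          endpoint = ["https://9916w1ow.mirror.aliyuncs.com"]

# containerd config dump | grep -i -E "systemd|mirror"
    systemd_cgroup = false
            SystemdCgroup = true
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
        [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
          endpoint = ["https://9916w1ow.mirror.aliyuncs.com"]
# systemctl restart containerd && systemctl enable containerd
```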
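The two vim edits can also be scripted, which helps when they have to be repeated on every node. A minimal sketch, assuming the containerd config default layout where sandbox_image and SystemdCgroup each sit on their own line; patch_containerd_config is a hypothetical name, and the docker.io mirror block is still added by hand:

```sh
#!/bin/sh
# Hypothetical helper: set sandbox_image and enable the systemd cgroup driver in a
# config.toml produced by `containerd config default`, keeping indentation.
# A .bak copy of the original file is left next to it.
patch_containerd_config() {
  local file="$1" image="$2"
  sed -i.bak \
    -e "s|^\([[:space:]]*sandbox_image = \).*|\1\"${image}\"|" \
    -e 's|^\([[:space:]]*SystemdCgroup = \)false|\1true|' \
    "$file"
}

# Usage (as root):
#   containerd config default > /etc/containerd/config.toml
#   patch_containerd_config /etc/containerd/config.toml \
#     registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9
#   systemctl restart containerd
```

The deprecated systemd_cgroup key is left untouched, because the match is case-sensitive and only targets SystemdCgroup.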

Installing kubeadm, kubectl, and kubelet (All Nodes)

Run the following on every node (k8s-master1, k8s-node1, and k8s-node2). apt-key is deprecated on Ubuntu 22.04 and prints a warning, but it still works here. apt-cache madison lists the available kubeadm versions, and the final command pins 1.26.3-00.

```
# apt-get update && apt-get install -y apt-transport-https
# curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
# cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF
# apt-get update && apt-cache madison kubeadm
# apt-get install -y kubeadm=1.26.3-00 kubectl=1.26.3-00 kubelet=1.26.3-00
```
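Because the same pinned install must run on every node, the package list can be built from a single version string. Holding the packages keeps a routine apt upgrade from moving a node to a different Kubernetes version; apt-mark hold is the approach the upstream kubeadm installation guide uses. A minimal sketch; k8s_pkgs and install_k8s_packages are hypothetical helpers:

```sh
#!/bin/sh
# Hypothetical helper: print the pinned apt package specs for one version string.
k8s_pkgs() {
  echo "kubeadm=$1 kubectl=$1 kubelet=$1"
}

# Install the pinned packages, then hold them so `apt upgrade` leaves them alone.
install_k8s_packages() {
  # $(k8s_pkgs ...) is intentionally unquoted so each spec becomes its own argument
  apt-get install -y $(k8s_pkgs "$1") && apt-mark hold kubeadm kubectl kubelet
}

# Usage (as root, on every node):
#   install_k8s_packages 1.26.3-00
```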

Pulling the Kubernetes Images

List the images kubeadm v1.26.3 needs, then pull them from the Aliyun mirror. The -n k8s.io option stores them in the containerd namespace the kubelet reads through CRI; without it, nerdctl uses the default namespace unless nerdctl.toml sets another one.

```
root@k8s-master1:~# kubeadm config images list --kubernetes-version v1.26.3
root@k8s-master1:~# vim images-down.sh
#!/bin/bash
nerdctl -n k8s.io pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.26.3
nerdctl -n k8s.io pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.26.3
nerdctl -n k8s.io pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.26.3
nerdctl -n k8s.io pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.26.3
nerdctl -n k8s.io pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9
nerdctl -n k8s.io pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.6-0
nerdctl -n k8s.io pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3
root@k8s-master1:~# bash images-down.sh
```
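Instead of hard-coding the image names, the list printed by kubeadm config images list can be piped into a pull loop, so the script stays correct if the version changes. A minimal sketch, assuming kubeadm config images list is given the same --image-repository as kubeadm init; pull_images and the PULLER override (for a dry run) are hypothetical:

```sh
#!/bin/sh
# Hypothetical helper: read one image reference per line on stdin and pull each
# into containerd's k8s.io namespace. Set PULLER=echo for a dry run.
pull_images() {
  local image
  while IFS= read -r image; do
    [ -n "$image" ] || continue                          # skip blank lines
    ${PULLER:-nerdctl -n k8s.io pull} "$image" || return 1
  done
}

# Usage (as root):
#   kubeadm config images list --kubernetes-version v1.26.3 \
#     --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers | pull_images
```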

Kernel Parameter Tuning

1. Kernel parameter configuration file (/etc/sysctl.conf)

```
root@k8s-master1:~# cat /etc/sysctl.conf
net.ipv4.ip_forward=1
vm.max_map_count=262144
kernel.pid_max=4194303
fs.file-max=1000000
net.ipv4.tcp_max_tw_buckets=6000
net.netfilter.nf_conntrack_max=2097152
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
vm.swappiness=0
```

2. Load kernel modules at boot

List the modules in /etc/modules-load.d/modules.conf, one per line:

```
root@k8s-master1:~# vim /etc/modules-load.d/modules.conf
ip_vs
ip_vs_lc
ip_vs_lblc
ip_vs_lblcr
ip_vs_rr
ip_vs_wrr
ip_vs_sh
ip_vs_dh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
ip_tables
ip_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
xt_set
br_netfilter
nf_conntrack
overlay
```
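The modules-load.d file is only read at boot, and sysctl keys such as net.netfilter.nf_conntrack_max and net.bridge.bridge-nf-call-iptables only exist once nf_conntrack and br_netfilter are loaded. To apply everything without rebooting, load the listed modules first and then reload the kernel parameters. A minimal sketch; load_modules and the MODPROBE override (for a dry run) are hypothetical:

```sh
#!/bin/sh
# Hypothetical helper: modprobe every module named in a modules-load.d style file,
# skipping blank lines and lines starting with # or ; (the file's comment markers).
load_modules() {
  local file="${1:-/etc/modules-load.d/modules.conf}" mod
  while IFS= read -r mod || [ -n "$mod" ]; do
    mod=$(printf '%s' "$mod" | tr -d '[:space:]')
    case "$mod" in
      ''|'#'*|';'*) continue ;;
    esac
    # keep going if one module is unavailable on this kernel
    ${MODPROBE:-modprobe} "$mod" || echo "failed to load $mod" >&2
  done < "$file"
}

# Usage (as root):
#   load_modules /etc/modules-load.d/modules.conf
#   sysctl -p        # re-read /etc/sysctl.conf now that the modules are loaded
```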

3. Verify the kernel modules and kernel parameters

```
root@k8s-master1:~# lsmod | grep br_netfilter
br_netfilter           32768  0
bridge                307200  1 br_netfilter
root@k8s-master1:~# sysctl -a | grep bridge-nf-call-iptables
net.bridge.bridge-nf-call-iptables = 1
```

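Kubernetes Cluster Initialization (Master Node)

Run kubeadm init on the master node. --apiserver-advertise-address must be the master node's own IP address (the address mapped to master in /etc/hosts), so replace 11.0.1.131 with it. Then copy the admin kubeconfig so kubectl can reach the cluster:

```
root@k8s-master1:~# kubeadm init --apiserver-advertise-address=11.0.1.131 --apiserver-bind-port=6443 --kubernetes-version=v1.26.3 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=cluster.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap
root@k8s-master1:~# mkdir -p $HOME/.kube
root@k8s-master1:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
root@k8s-master1:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config
```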

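kubeadm init parameter reference:

--apiserver-advertise-address string: The IP address the API server advertises it is listening on. If not set, the default network interface is used.

--apiserver-bind-port int32: Port the API server binds to (default 6443).

--apiserver-cert-extra-sans strings: Extra Subject Alternative Names (SANs) for the API server certificate; each can be an IP address or a DNS name. The certificate is only valid for its SANs.

--cert-dir string: Directory where certificates are stored (default "/etc/kubernetes/pki").

--certificate-key string: Key used to encrypt the control-plane certificates in the kubeadm-certs Secret.

--config string: Path to a kubeadm configuration file.

--cri-socket string: Path to the CRI socket. If empty, kubeadm auto-detects it; set it only when the machine has several CRI sockets or a non-standard one.

--dry-run: Do not apply any changes; only print what would be done.

--feature-gates string: Additional feature gates to enable, as key=value pairs.

--help, -h: Show help.

--ignore-preflight-errors strings: Preflight check errors to ignore; ignored errors are shown as warnings. Example: 'IsPrivilegedUser,Swap'. The value 'all' ignores errors from all checks.

--image-repository string: Container registry to pull control-plane images from (default "registry.k8s.io").

--kubernetes-version string: Kubernetes version to install (default "stable-1").

--node-name string: Name of this node; defaults to the node's hostname.

--pod-network-cidr string: IP address range for the Pod network. If set, the control plane automatically allocates a Pod CIDR from this range to every node.

--service-cidr string: IP address range for Service virtual IPs (default "10.96.0.0/12").

--service-dns-domain string: DNS suffix for Services, e.g. "myorg.internal" (default "cluster.local").

--skip-certificate-key-print: Do not print the key used to encrypt the control-plane certificates.

--skip-phases strings: Phases to skip.

--skip-token-print: Do not print the default bootstrap token generated by kubeadm init.

--token string: Token used to establish mutual trust between nodes and the control plane, in the format [a-z0-9]{6}\.[a-z0-9]{16}, e.g. abcdef.0123456789abcdef.

--token-ttl duration: How long before the token is automatically deleted (e.g. 1s, 2m, 3h). '0' means the token never expires (default 24h0m0s).

--upload-certs: Upload the control-plane certificates to the kubeadm-certs Secret.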
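The same settings can be kept in a file and passed with --config, which is easier to review and reuse than a long command line. A minimal sketch that writes such a file, assuming the kubeadm.k8s.io/v1beta3 configuration API (the version kubeadm v1.26 reads) and the containerd socket at its default path; write_kubeadm_config is a hypothetical helper, and the advertise address is a parameter because it must be the master node's own IP:

```sh
#!/bin/sh
# Hypothetical helper: write a kubeadm config mirroring the kubeadm init flags above.
# $1 = output path, $2 = API server advertise address.
write_kubeadm_config() {
  cat > "$1" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: $2
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.26.3
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: 10.100.0.0/16
  serviceSubnet: 10.200.0.0/16
  dnsDomain: cluster.local
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}

# Usage (as root, on the master node):
#   write_kubeadm_config kubeadm-init.yaml 11.0.1.131
#   kubeadm init --config kubeadm-init.yaml
```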

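Installing a Network Plugin

Common network plugins include flannel, calico, canal, and weave; choose one that fits your needs and install only one of them.

1. Install flannel

kube-flannel.yml uses 10.244.0.0/16 as its Pod network by default. Because this cluster was initialized with --pod-network-cidr=10.100.0.0/16, download the manifest first and change the Network value in its net-conf.json to 10.100.0.0/16 before applying it:

```
# curl -LO https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
# vim kube-flannel.yml
# kubectl apply -f kube-flannel.yml
```

2. Install calico

This cluster uses calico, as the Pod list below shows. On a kubeadm cluster, calico should pick up the Pod CIDR from the cluster configuration as long as CALICO_IPV4POOL_CIDR stays commented out in calico.yaml.

```
# curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.4/manifests/calico.yaml -O
# kubectl apply -f calico.yaml
```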
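For flannel, the Network value in kube-flannel.yml (10.244.0.0/16 by default) has to match --pod-network-cidr, and the edit can be scripted. A minimal sketch, assuming the downloaded manifest still contains the default "Network": "10.244.0.0/16" entry; patch_flannel_cidr is a hypothetical helper:

```sh
#!/bin/sh
# Hypothetical helper: replace flannel's default Pod network in a downloaded
# kube-flannel.yml with the CIDR given to kubeadm init --pod-network-cidr.
patch_flannel_cidr() {
  local file="$1" cidr="$2"
  if ! grep -q '"Network": "10\.244\.0\.0/16"' "$file"; then
    echo "default Network entry not found in $file" >&2
    return 1
  fi
  sed -i.bak "s|\"Network\": \"10\.244\.0\.0/16\"|\"Network\": \"${cidr}\"|" "$file"
}

# Usage:
#   curl -LO https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
#   patch_flannel_cidr kube-flannel.yml 10.100.0.0/16
#   kubectl apply -f kube-flannel.yml
```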

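Adding Worker Nodes

1. Once the network plugin is installed, the master node reports Ready

```
root@master:~# kubectl get nodes
NAME     STATUS   ROLES           AGE     VERSION
master   Ready    control-plane   3d18h   v1.26.3
```

2. Join the worker nodes

kubeadm init prints a kubeadm join command when it initializes the master node; record it at that point. Run that command as root on each worker node to join it to the cluster:

```
kubeadm join 11.0.1.131:6443 --token znxygv.852vaiqg9ldsq4vn --discovery-token-ca-cert-hash sha256:995f8cd06cd503ce5e365852781de0911b63d88cb50ae04b6ccc7df070246748
```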
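The bootstrap token in the join command expires after 24 hours by default (see --token-ttl in the parameter reference). If it has expired, running kubeadm token create --print-join-command on the master prints a fresh join command. A small sketch for sanity-checking a token copied by hand against the documented format [a-z0-9]{6}\.[a-z0-9]{16}; valid_bootstrap_token is a hypothetical helper:

```sh
#!/bin/sh
# Hypothetical helper: succeed if $1 matches the bootstrap token format
# [a-z0-9]{6}.[a-z0-9]{16} described for kubeadm --token.
valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Usage:
#   valid_bootstrap_token znxygv.852vaiqg9ldsq4vn && echo "token format OK"
#   kubeadm token create --print-join-command    # on the master, if the token expired
```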

3. Verify the result

In the final state, all three nodes are Ready and all Pods are Running:

```
root@master:~# kubectl get nodes
NAME     STATUS   ROLES           AGE     VERSION
master   Ready    control-plane   3d18h   v1.26.3
node01   Ready    <none>          3d14h   v1.26.3
node02   Ready    <none>          3d14h   v1.26.3
root@master:~# kubectl get pod -A
NAMESPACE     NAME                                               READY   STATUS    RESTARTS        AGE
kube-system   calico-kube-controllers-79bfdd4898-j5r2v           1/1     Running   3 (2d21h ago)   3d13h
kube-system   calico-node-p4b96                                  1/1     Running   1 (3d ago)      3d13h
kube-system   calico-node-tx9tx                                  1/1     Running   1 (3d ago)      3d13h
kube-system   calico-node-w479t                                  1/1     Running   0               3d13h
kube-system   coredns-567c556887-55wl2                           1/1     Running   1 (3d ago)      3d18h
kube-system   coredns-567c556887-9dgds                           1/1     Running   1 (3d ago)      3d18h
kube-system   etcd-master                                        1/1     Running   1 (3d ago)      3d18h
kube-system   kube-apiserver-master                              1/1     Running   1 (3d ago)      3d18h
kube-system   kube-controller-manager-master                     1/1     Running   4 (13h ago)     3d18h
kube-system   kube-proxy-9c4qf                                   1/1     Running   1 (3d ago)      3d14h
kube-system   kube-proxy-dfp6p                                   1/1     Running   1 (3d ago)      3d14h
kube-system   kube-proxy-plq9r                                   1/1     Running   1 (3d ago)      3d18h
kube-system   kube-scheduler-master                              1/1     Running   5 (13h ago)     3d18h
myserver      myserver-nginx-deployment-596d5d9799-hrfsv         1/1     Running   0               2d23h
myserver      myserver-tomcat-app1-deployment-6bb596979f-n29nq   1/1     Running   0               2d23h
```
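Instead of scanning these tables by eye, the same check can be scripted against kubectl's --no-headers output. A minimal sketch, assuming the column layout shown above (STATUS is the second column of kubectl get nodes and the fourth of kubectl get pod -A); both helper names are hypothetical. For nodes, kubectl wait --for=condition=Ready node --all is a built-in alternative.

```sh
#!/bin/sh
# Hypothetical helpers that read kubectl output (without headers) on stdin.

# Print nodes whose STATUS (column 2) is not exactly "Ready"; fail if there are any.
nodes_not_ready() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit (bad ? 1 : 0) }'
}

# Print NAMESPACE/NAME of pods whose STATUS (column 4 of `kubectl get pod -A`)
# is neither "Running" nor "Completed"; fail if there are any.
pods_not_running() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}

# Usage:
#   kubectl get nodes --no-headers | nodes_not_ready && echo "all nodes Ready"
#   kubectl get pod -A --no-headers | pods_not_running && echo "all Pods Running"
```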

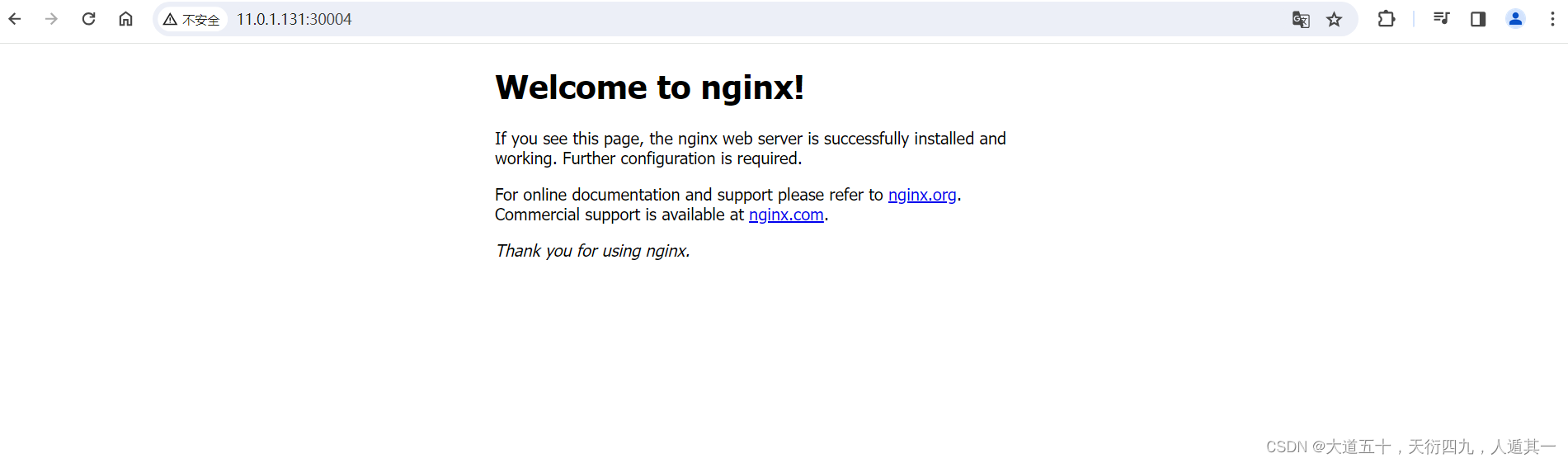

Deploying an nginx Workload to Verify the Cluster

1. Deploy the workload declaratively from a YAML file (nginx.yaml):

```yaml
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: myserver-nginx-deployment-label
  name: myserver-nginx-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myserver-nginx-selector
  template:
    metadata:
      labels:
        app: myserver-nginx-selector
    spec:
      containers:
      - name: myserver-nginx-container
        image: nginx
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        #resources:
        #  limits:
        #    cpu: 2
        #    memory: 2Gi
        #  requests:
        #    cpu: 500m
        #    memory: 1Gi
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: myserver-nginx-service-label
  name: myserver-nginx-service
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30004
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
    nodePort: 30443
  selector:
    app: myserver-nginx-selector
```

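2. Create the myserver namespace (the manifest does not create it, and kubectl apply fails if it is missing), then apply the manifest and check the resources:

```
# kubectl create namespace myserver
# kubectl apply -f nginx.yaml
# kubectl get pod -n myserver
NAME                                         READY   STATUS    RESTARTS   AGE
myserver-nginx-deployment-596d5d9799-hrfsv   1/1     Running   0          2d23h
root@master:~# kubectl get svc -n myserver
NAME                     TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
myserver-nginx-service   NodePort   10.200.211.39   <none>        80:30004/TCP,443:30443/TCP   3d12h
```

3. Access nginx through a cluster node's IP address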
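Because the Service is of type NodePort, nginx should answer on port 30004 (HTTP) of every node's IP address. A minimal sketch that probes each node with curl and prints the HTTP status code; probe_nodeport is a hypothetical helper, and the IPs in the usage line are the ones from /etc/hosts earlier, so substitute your own node addresses:

```sh
#!/bin/sh
# Hypothetical helper: print "IP HTTP_STATUS" for http://IP:PORT/ on each node IP.
# curl reports 000 when it cannot connect at all.
probe_nodeport() {
  local port="$1" ip code
  shift
  for ip in "$@"; do
    code=$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "http://${ip}:${port}/" || true)
    echo "${ip} ${code}"
  done
}

# Usage:
#   probe_nodeport 30004 192.168.0.174 192.168.0.175 192.168.0.176
```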