2020全国高校计算机能力挑战赛——人工智能应用赛

样题

样本数据预处理

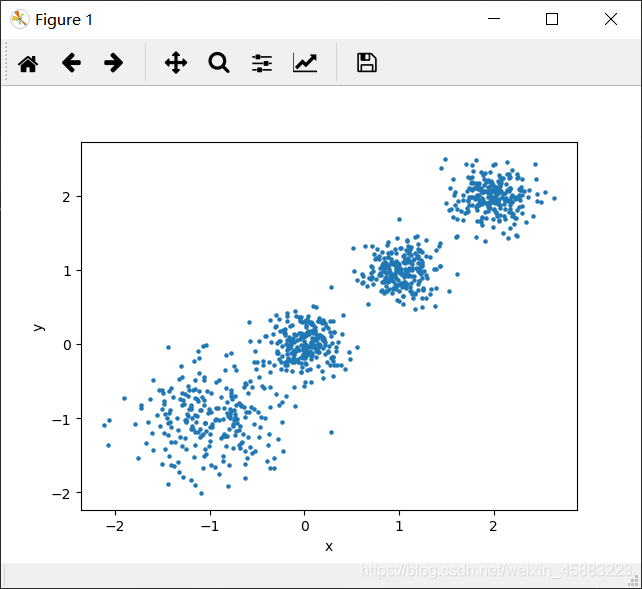

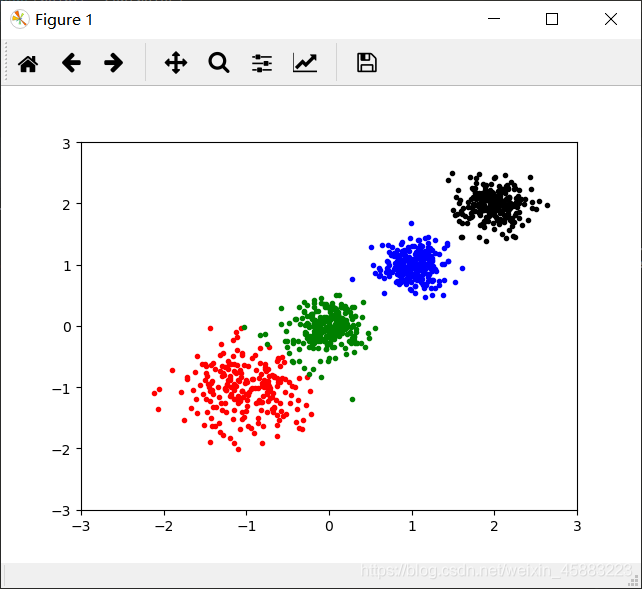

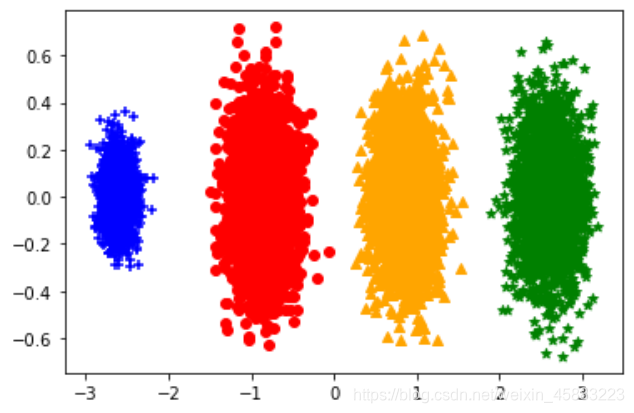

数据聚类并可视化

所给数据为 1000 个样本的特征数据,每一行表示一个样本的 2 维特征。现要求使用Python 语言编程实现(1)将原始数据读取并在二维坐标轴上可视化;(2)对数据进行聚类(聚为 4 类);(3)将聚类结果在二维坐标轴上可视化,每一类的数据需用不同颜色进行区分。

from pandas import *

from matplotlib import pyplot as plt

data = read_csv('data.csv', delimiter='\t', skiprows=2)

list_data = data.values.tolist()

s = []

x = []

y = []

data = []

for i in list_data:

s.append(i[0].split(','))

for i in range(len(s)):

a, b = s[i]

x.append(float(a))

y.append(float(b))

data.append([float(a),float(b)])

plt.xlabel('x')

plt.ylabel('y')

plt.scatter(x, y, s=5)

plt.show()

import numpy as np

import matplotlib.pyplot as plt

from data import data

import random

import pandas as pd

# 计算欧拉距离

def calcDis(dataSet, centroids, k):

clalist = []

for data in dataSet:

diff = np.tile(data, (k,

1)) - centroids # 相减 (np.tile(a,(2,1))就是把a先沿x轴复制1倍,即没有复制,仍然是 [0,1,2]。 再把结果沿y方向复制2倍得到array([[0,1,2],[0,1,2]]))

squaredDiff = diff ** 2 # 平方

squaredDist = np.sum(squaredDiff, axis=1) # 和 (axis=1表示行)

distance = squaredDist ** 0.5 # 开根号

clalist.append(distance)

clalist = np.array(clalist) # 返回一个每个点到质点的距离len(dateSet)*k的数组

return clalist

# 计算质心

def classify(dataSet, centroids, k):

# 计算样本到质心的距离

clalist = calcDis(dataSet, centroids, k)

# 分组并计算新的质心

minDistIndices = np.argmin(clalist, axis=1) # axis=1 表示求出每行的最小值的下标

newCentroids = pd.DataFrame(dataSet).groupby(

minDistIndices).mean() # DataFramte(dataSet)对DataSet分组,groupby(min)按照min进行统计分类,mean()对分类结果求均值

newCentroids = newCentroids.values

# 计算变化量

changed = newCentroids - centroids

return changed, newCentroids

# 使用k-means分类

def kmeans(dataSet, k):

# 随机取质心

centroids = random.sample(dataSet, k)

# 更新质心 直到变化量全为0

changed, newCentroids = classify(dataSet, centroids, k)

while np.any(changed != 0):

changed, newCentroids = classify(dataSet, newCentroids, k)

centroids = sorted(newCentroids.tolist()) # tolist()将矩阵转换成列表 sorted()排序

# 根据质心计算每个集群

cluster = []

clalist = calcDis(dataSet, centroids, k) # 调用欧拉距离

minDistIndices = np.argmin(clalist, axis=1)

for i in range(k):

cluster.append([])

for i, j in enumerate(minDistIndices): # enymerate()可同时遍历索引和遍历元素

cluster[j].append(dataSet[i])

return centroids, cluster

def get_distance(p1, p2):

diff = [x - y for x, y in zip(p1, p2)]

distance = np.sqrt(sum(map(lambda x: x ** 2, diff)))

return distance

# 计算多个点的中心

def calc_center_point(cluster):

N = len(cluster)

m = np.matrix(cluster).transpose().tolist()

center_point = [sum(x) / N for x in m]

return center_point

# 检查两个点是否有差别

def check_center_diff(center, new_center):

n = len(center)

for c, nc in zip(center, new_center):

if c != nc:

return False

return True

# K-means算法的实现

def K_means(points, center_points):

k = len(center_points) # k值大小

tot = 0

while True: # 迭代

temp_center_points = [] # 记录中心点

clusters = [] # 记录聚类的结果

for c in range(0, k):

clusters.append([]) # 初始化

# 针对每个点,寻找距离其最近的中心点(寻找组织)

for i, data in enumerate(points):

distances = []

for center_point in center_points:

distances.append(get_distance(data, center_point))

index = distances.index(min(distances)) # 找到最小的距离的那个中心点的索引,

clusters[index].append(data) # 那么这个中心点代表的簇,里面增加一个样本

tot += 1

print(tot, '次迭代 ', clusters)

k = len(clusters)

colors = ['r.', 'g.', 'b.', 'k.', 'y.'] # 颜色和点的样式

for i, cluster in enumerate(clusters):

data = np.array(cluster)

data_x = [x[0] for x in data]

data_y = [x[1] for x in data]

plt.plot(data_x, data_y, colors[i])

plt.axis([-3, 3, -3, 3])

# 重新计算中心点(该步骤可以与下面判断中心点是否发生变化这个步骤,调换顺序)

for cluster in clusters:

temp_center_points.append(calc_center_point(cluster))

# 在计算中心点的时候,需要将原来的中心点算进去

for j in range(0, k):

if len(clusters[j]) == 0:

temp_center_points[j] = center_points[j]

# 判断中心点是否发生变化:即,判断聚类前后样本的类别是否发生变化

for c, nc in zip(center_points, temp_center_points):

if not check_center_diff(c, nc):

center_points = temp_center_points[:] # 复制一份

break

else: # 如果没有变化,那么退出迭代,聚类结束

break

plt.show()

return clusters # 返回聚类的结果

if __name__ == '__main__':

dataset = data

centroids, cluster = kmeans(dataset, 4)

points = data

clusters = K_means(points, centroids)

print('#######最终结果##########')

for i, cluster in enumerate(clusters):

print('cluster ', i, ' ', cluster)

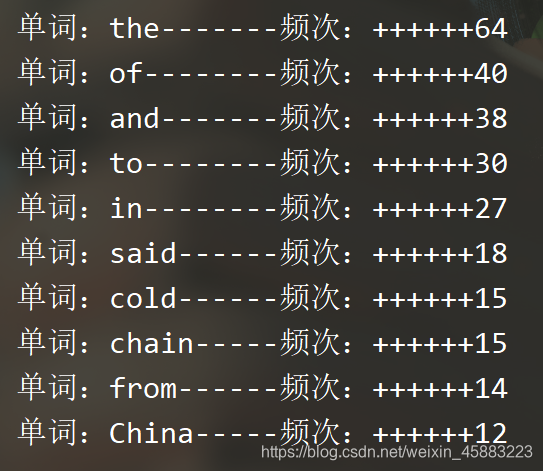

统计词频并输出高频词汇

所给数据为某日中国日报英文版的一篇新闻报道,现要求使用 Python 语言编写程序统计其中出线频率最高的十个单词,输出对应的单词内容和频率(以字典形式呈现)。

import jieba

file = open("data.txt", "r", encoding='utf-8') # 此处需打开txt格式且编码为UTF-8的文本

txt = file.read()

words = jieba.lcut(txt) # 使用jieba进行分词,将文本分成词语列表

count = {}

for word in words: # 使用 for 循环遍历每个词语并统计个数

if len(word) < 2: # 排除单个字的干扰,使得输出结果为词语

continue

else:

count[word] = count.get(word, 0) + 1 # 如果字典里键为 word 的值存在,则返回键的值并加一,如果不存在键word,则返回0再加上1

list = list(count.items()) # 将字典的所有键值对转化为列表

list.sort(key=lambda x: x[1], reverse=True) # 对列表按照词频从大到小的顺序排序

for i in range(10): # 此处统计排名前十的单词,所以range(10)

word, number = list[i]

print("单词:{:-<10}频次:{:+>8}".format(word, number))

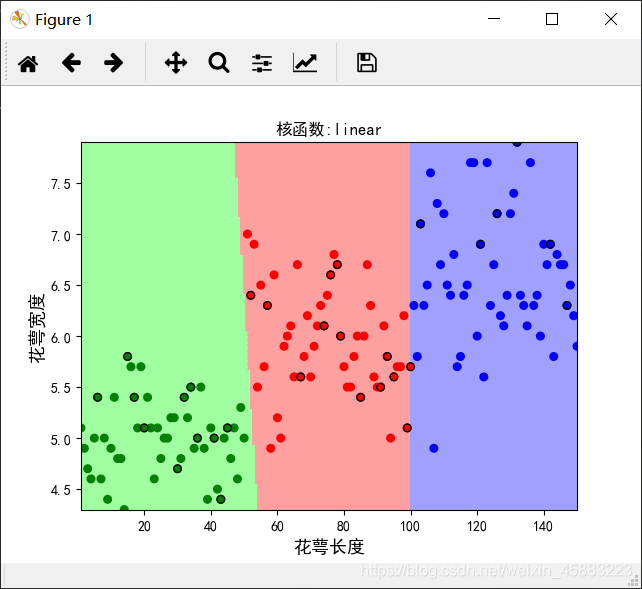

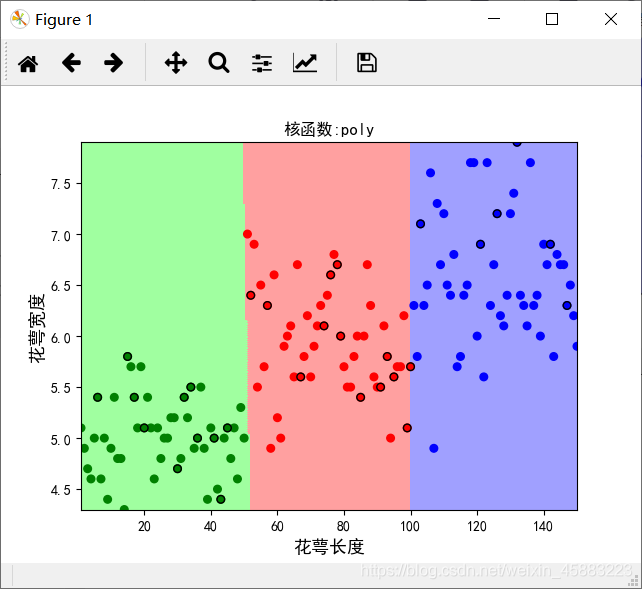

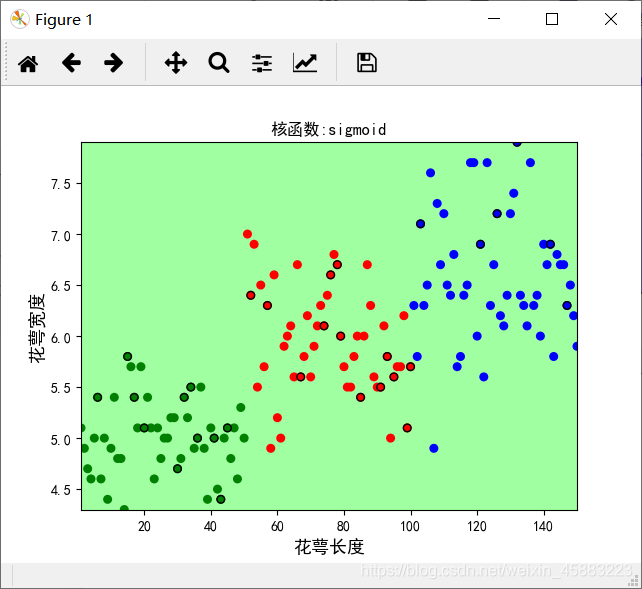

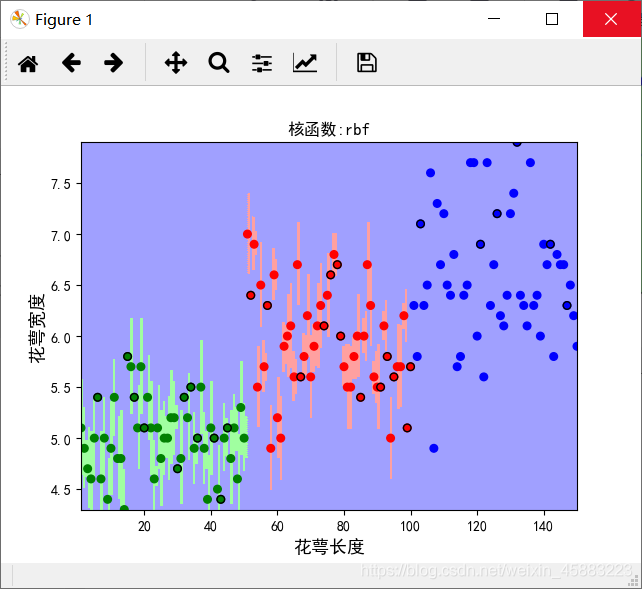

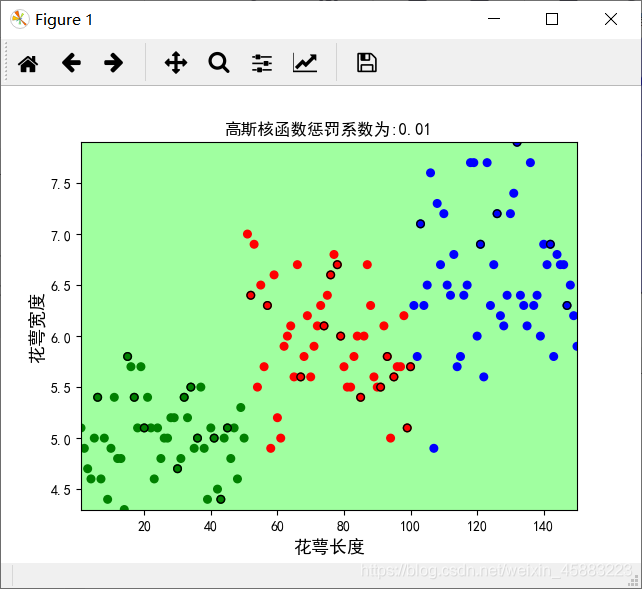

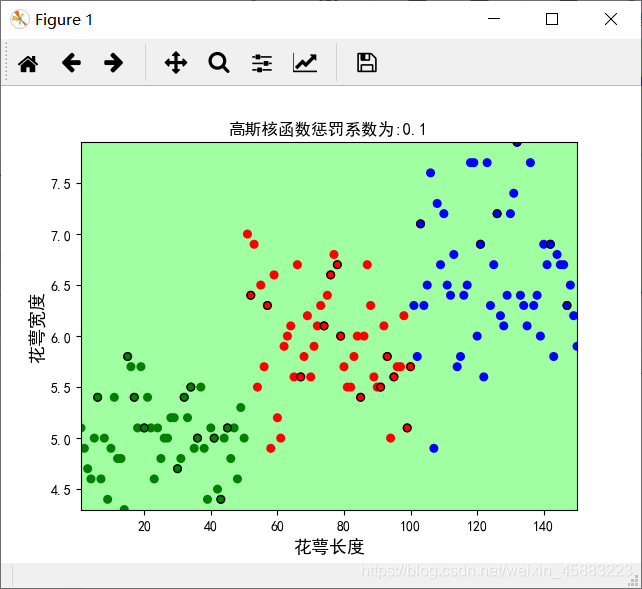

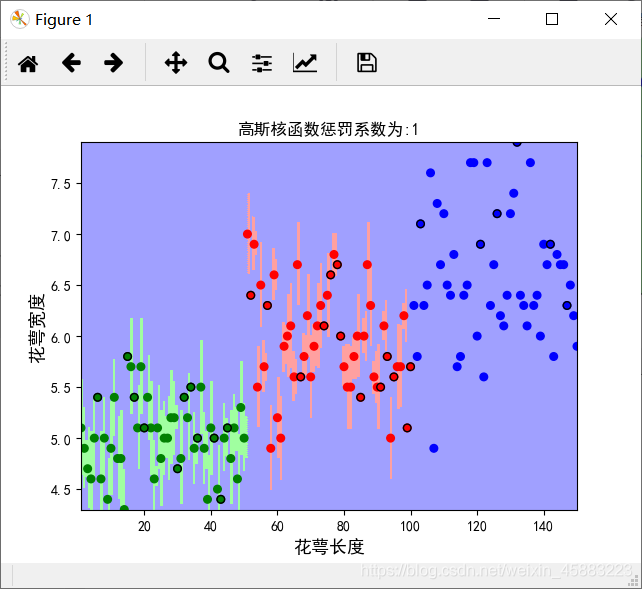

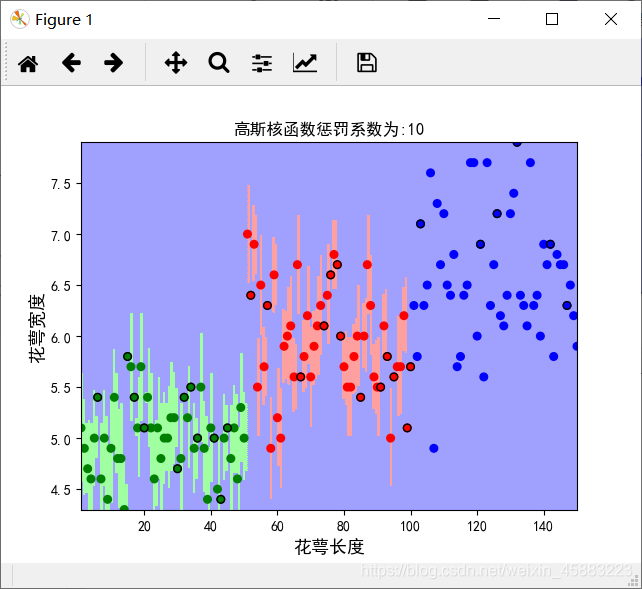

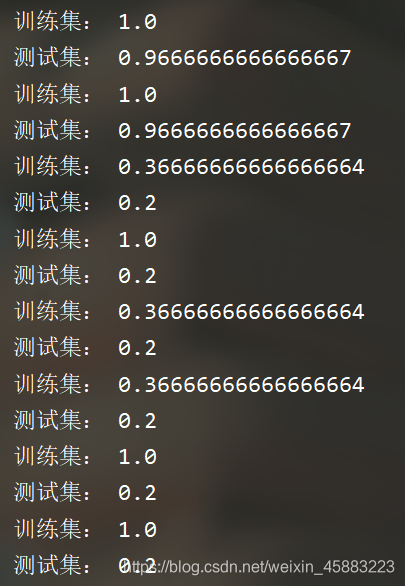

传统机器学习算法应用

任务: 使用 SVM 模型训练分类器。数据集内包含 3 类鸢尾花,分别为山鸢尾(Iris-setosa)、变色鸢尾(Iris-versicolor)和维吉尼亚鸢尾(Iris-virginica)。每类各 50 个数据,每条记录有 4 项特征:花萼长度、花萼宽度、花瓣长度、花瓣宽度。

要求:

(1)80%数据用于训练,20%数据用于测试。

(2)输出错误项的惩罚系数为 1 时,不同核函数训练得到的模型的测试准确率。

(3)输出核函数固定为高斯核函数时,惩罚系数分别为 0.01,0.1,1,10 时2候的测试准确率。

from sklearn import svm

import numpy as np

import matplotlib.pyplot as plt

import matplotlib

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

# define converts(字典)

def Iris_label(s):

it = {b'Iris-setosa': 0, b'Iris-versicolor': 1, b'Iris-virginica': 2}

return it[s]

# 1.读取数据集

path = 'Iris.csv'

data = np.loadtxt(path, dtype=float, delimiter=',', converters={5: Iris_label}, skiprows=1)

# converters={5:Iris_label}中“5”指的是第6列:将第6列的str转化为label(number)

# print(data.shape)

# 2.划分数据与标签

x, y = np.split(data, indices_or_sections=(5,), axis=1) # x为数据,y为标签

x = x[:, 0:2]

train_data, test_data, train_label, test_label = train_test_split(x, y, random_state=1, train_size=0.8, test_size=0.2) # sklearn.model_selection.

# print(train_data.shape)

kernels = ['linear','poly','sigmoid','rbf']

for i in range(1, len(kernels)+1):

# 3.训练svm分类器

classifier = svm.SVC(C=1, kernel=kernels[i-1], gamma=10, decision_function_shape='ovo') # ovr:一对多策略

classifier.fit(train_data, train_label.ravel()) # ravel函数在降维时默认是行序优先

# 4.计算svc分类器的准确率

print("训练集:", classifier.score(train_data, train_label))

print("测试集:", classifier.score(test_data, test_label))

# 也可直接调用accuracy_score方法计算准确率

# tra_label = classifier.predict(train_data) # 训练集的预测标签

# tes_label = classifier.predict(test_data) # 测试集的预测标签

# print("训练集:", accuracy_score(train_label, tra_label))

# print("测试集:", accuracy_score(test_label, tes_label))

# 查看决策函数

# print('train_decision_function:\n', classifier.decision_function(train_data)) # (90,3)

# print('predict_result:\n', classifier.predict(train_data))

# 5.绘制图形

# 确定坐标轴范围

x1_min, x1_max = x[:, 0].min(), x[:, 0].max() # 第0维特征的范围

x2_min, x2_max = x[:, 1].min(), x[:, 1].max() # 第1维特征的范围

x1, x2 = np.mgrid[x1_min:x1_max:200j, x2_min:x2_max:200j] # 生成网络采样点

grid_test = np.stack((x1.flat, x2.flat), axis=1) # 测试点

# 指定默认字体

matplotlib.rcParams['font.sans-serif'] = ['SimHei']

# 设置颜色

cm_light = matplotlib.colors.ListedColormap(['#A0FFA0', '#FFA0A0', '#A0A0FF'])

cm_dark = matplotlib.colors.ListedColormap(['g', 'r', 'b'])

grid_hat = classifier.predict(grid_test) # 预测分类值

grid_hat = grid_hat.reshape(x1.shape) # 使之与输入的形状相同

plt.pcolormesh(x1, x2, grid_hat, cmap=cm_light) # 预测值的显示

plt.scatter(x[:, 0], x[:, 1], c=y[:, 0], s=30, cmap=cm_dark) # 样本

plt.scatter(test_data[:, 0], test_data[:, 1], c=test_label[:, 0], s=30, edgecolors='k', zorder=2,

cmap=cm_dark) # 圈中测试集样本点

plt.xlabel('花萼长度', fontsize=13)

plt.ylabel('花萼宽度', fontsize=13)

plt.xlim(x1_min, x1_max)

plt.ylim(x2_min, x2_max)

plt.title('核函数:{}'.format(kernels[i-1]))

plt.show()

c = [0.01, 0.1, 1, 10]

for i in range(1, len(c)+1):

classifier = svm.SVC(C=c[i-1], kernel='rbf', gamma=10, decision_function_shape='ovo') # ovr:一对多策略

classifier.fit(train_data, train_label.ravel()) #

print("训练集:", classifier.score(train_data, train_label))

print("测试集:", classifier.score(test_data, test_label))

x1_min, x1_max = x[:, 0].min(), x[:, 0].max() # 第0维特征的范围

x2_min, x2_max = x[:, 1].min(), x[:, 1].max() # 第1维特征的范围

x1, x2 = np.mgrid[x1_min:x1_max:200j, x2_min:x2_max:200j] # 生成网络采样点

grid_test = np.stack((x1.flat, x2.flat), axis=1) # 测试点

matplotlib.rcParams['font.sans-serif'] = ['SimHei']

cm_light = matplotlib.colors.ListedColormap(['#A0FFA0', '#FFA0A0', '#A0A0FF'])

cm_dark = matplotlib.colors.ListedColormap(['g', 'r', 'b'])

grid_hat = classifier.predict(grid_test) # 预测分类值

grid_hat = grid_hat.reshape(x1.shape) # 使之与输入的形状相同

plt.pcolormesh(x1, x2, grid_hat, cmap=cm_light) # 预测值的显示

plt.scatter(x[:, 0], x[:, 1], c=y[:, 0], s=30, cmap=cm_dark) # 样本

plt.scatter(test_data[:, 0], test_data[:, 1], c=test_label[:, 0], s=30, edgecolors='k', zorder=2,

cmap=cm_dark) # 圈中测试集样本点

plt.xlabel('花萼长度', fontsize=13)

plt.ylabel('花萼宽度', fontsize=13)

plt.xlim(x1_min, x1_max)

plt.ylim(x2_min, x2_max)

plt.title('高斯核函数惩罚系数为:{}'.format(c[i-1]))

plt.show()

深度学习算法应用

图片分类模型训练

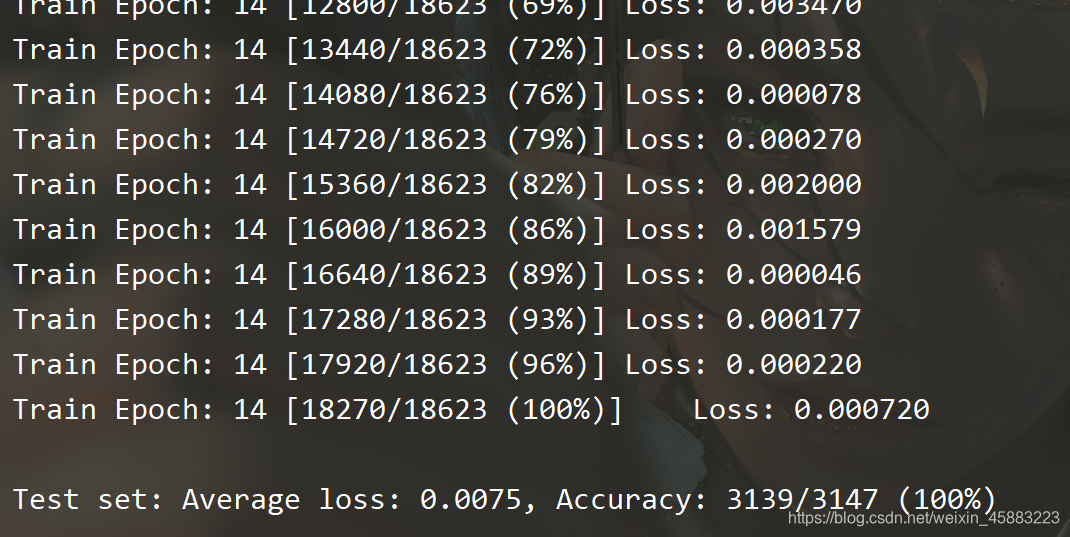

数据中给出了手写数字识别 mnist 数据集的 0,1 和 2 的全部数据,包括训练和测试。

现要求利用卷积神经网络或全连接网络在训练集进行训练,并输出测试集的准确率。需提交所有代码及运行截图。

#from __future__ import print_function

import argparse

import torch

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torchvision import datasets, transforms

from torch.optim.lr_scheduler import StepLR

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(3, 32, 3, 1)

self.conv2 = nn.Conv2d(32, 64, 3, 1)

self.dropout1 = nn.Dropout2d(0.25)

self.dropout2 = nn.Dropout2d(0.5)

self.fc1 = nn.Linear(9216, 128)

self.fc2 = nn.Linear(128, 10)

def forward(self, x):

x = self.conv1(x)

x = F.relu(x)

x = self.conv2(x)

x = F.relu(x)

x = F.max_pool2d(x, 2)

x = self.dropout1(x)

x = torch.flatten(x, 1)

x = self.fc1(x)

x = F.relu(x)

x = self.dropout2(x)

x = self.fc2(x)

output = F.log_softmax(x, dim=1)

return output

def train(args, model, device, train_loader, optimizer, epoch):

model.train()

for batch_idx, (data, target) in enumerate(train_loader):

data, target = data.to(device), target.to(device)

optimizer.zero_grad()

output = model(data)

loss = F.nll_loss(output, target)

loss.backward()

optimizer.step()

if batch_idx % args.log_interval == 0:

print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

epoch, batch_idx * len(data), len(train_loader.dataset), 100. * batch_idx / len(train_loader), loss.item()))

if args.dry_run:

break

def test(model, device, test_loader):

model.eval()

test_loss = 0

correct = 0

with torch.no_grad():

for data, target in test_loader:

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += F.nll_loss(output, target, reduction='sum').item() # sum up batch loss

pred = output.argmax(dim=1, keepdim=True) # get the index of the max log-probability

correct += pred.eq(target.view_as(pred)).sum().item()

test_loss /= len(test_loader.dataset)

print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(

test_loss, correct, len(test_loader.dataset),

100. * correct / len(test_loader.dataset)))

def main():

# Training settings

parser = argparse.ArgumentParser(description='PyTorch MNIST Example')

parser.add_argument('--batch-size', type=int, default=64, metavar='N',

help='input batch size for training (default: 64)')

parser.add_argument('--test-batch-size', type=int, default=1000, metavar='N',

help='input batch size for testing (default: 1000)')

parser.add_argument('--epochs', type=int, default=14, metavar='N',

help='number of epochs to train (default: 14)')

parser.add_argument('--lr', type=float, default=1.0, metavar='LR',

help='learning rate (default: 1.0)')

parser.add_argument('--gamma', type=float, default=0.7, metavar='M',

help='Learning rate step gamma (default: 0.7)')

parser.add_argument('--no-cuda', action='store_true', default=False,

help='disables CUDA training')

parser.add_argument('--dry-run', action='store_true', default=False,

help='quickly check a single pass')

parser.add_argument('--seed', type=int, default=1, metavar='S',

help='random seed (default: 1)')

parser.add_argument('--log-interval', type=int, default=10, metavar='N',

help='how many batches to wait before logging training status')

parser.add_argument('--save-model', action='store_true', default=False,

help='For Saving the current Model')

args = parser.parse_args()

use_cuda = not args.no_cuda and torch.cuda.is_available()

torch.manual_seed(args.seed)

device = torch.device("cuda" if use_cuda else "cpu")

kwargs = {'batch_size': args.batch_size}

if use_cuda:

kwargs.update({'num_workers': 1,

'pin_memory': True,

'shuffle': True},

)

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))

])

train_loader = torch.utils.data.DataLoader(

datasets.ImageFolder('train',

transform=transform), **kwargs)

test_loader = torch.utils.data.DataLoader(

datasets.ImageFolder('test', transform=transform), **kwargs)

model = Net().to(device)

optimizer = optim.Adadelta(model.parameters(), lr=args.lr)

scheduler = StepLR(optimizer, step_size=1, gamma=args.gamma)

for epoch in range(1, args.epochs + 1):

train(args, model, device, train_loader, optimizer, epoch)

test(model, device, test_loader)

scheduler.step()

if args.save_model:

torch.save(model.state_dict(), "mnist_cnn.pt")

if __name__ == '__main__':

main()

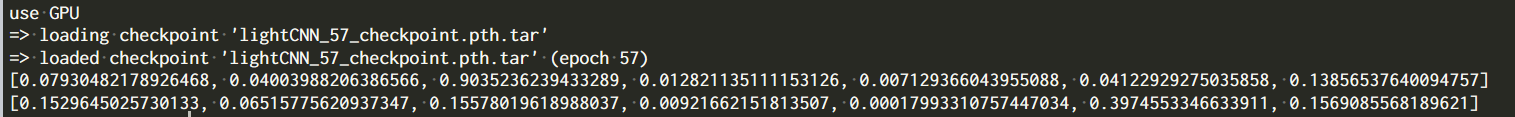

使用已训练好模型对给定数据进行预测

所给数据是一个用于图片车型分类的完整文件(包括已训练的模型,及相关代码),现要求根据所给定的文件,预测所给两张图片属于每一个车型的概率。

注:输出的概率对应的七种车型为:

‘JiaoChe’‘MianBao’‘HuoChe’‘KeChe’‘GuaChe’‘MoTuo’‘SanLun’

from models import *

import csv

import os

import os.path

import torchvision.transforms as transforms

import torch.backends.cudnn as cudnn

import torch

import torch.nn as nn

import numpy as np

from PIL import Image, ImageEnhance

import onnxruntime as rt

use_gpu = torch.cuda.is_available()

num_classes = 7

model = ml_net(num_classes=num_classes)

if use_gpu:

print('use GPU')

model = model.cuda()

model.eval()

resume ='lightCNN_57_checkpoint.pth.tar'

if resume:

if os.path.isfile(resume):

print("=> loading checkpoint '{}'".format(resume))

checkpoint = torch.load(resume)

start_epoch = checkpoint['epoch']

model.load_state_dict(checkpoint['state_dict'])

print("=> loaded checkpoint '{}' (epoch {})"

.format(resume, checkpoint['epoch']))

else:

print("=> no checkpoint found at '{}'".format(resume))

transform = transforms.Compose([

transforms.Resize((200, 312)),

transforms.ToTensor(),

])

imgsrc1 = Image.open('test1.jpg').convert('RGB')

img1 = transform(imgsrc1)

input1 = img1.unsqueeze(0).to('cuda')

output1,_ = model(input1)

outputlist1 = output1.data.cpu().numpy()[0].tolist()

print(outputlist1)

imgsrc2 = Image.open('test2.jpg').convert('RGB')

img2 = transform(imgsrc2)

input2 = img2.unsqueeze(0).to('cuda')

output2,_ = model(input2)

outputlist2 = output2.data.cpu().numpy()[0].tolist()

print(outputlist2)

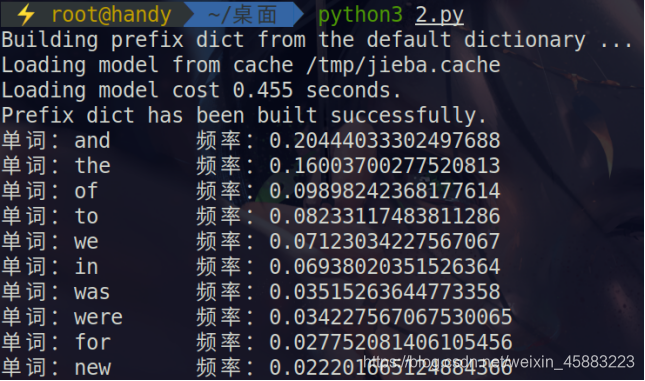

真题

样本数据预处理

任务:统计词频并输出高频词汇。

所给数据为某日中国日报英文版的一篇新闻报道,现要求使用Python语言编写程序统计其中出线频率最高的十个单词,输出对应的单词内容和频率(以字典

形式呈现)。 提交代码与运行结果截图。

说明: (1) 需在代码中排除标点符号干扰,即标点符号不被当作单词或单词的一部分。

(2)若单词中有大写字母需转换为小写字母进行统计,即大小写不敏感。

(3)空格不记为单词。

(4)不限制使用其他第三方自然语言处理库,但需注明。

import jieba

file = open("data.txt", "r", encoding='utf-8')

txt = file.read()

words = jieba.lcut(txt)

count = {}

for word in words:

word = word.lower()

if len(word) < 2:

continue

else:

count[word] = count.get(word, 0) + 1

list = list(count.items())

list.sort(key=lambda x: x[1], reverse=True)

for i in range(10):

word, number = list[i]

frequency = number / len(list)

print("单词:{: <10}频率:{: >8}".format(word, frequency))

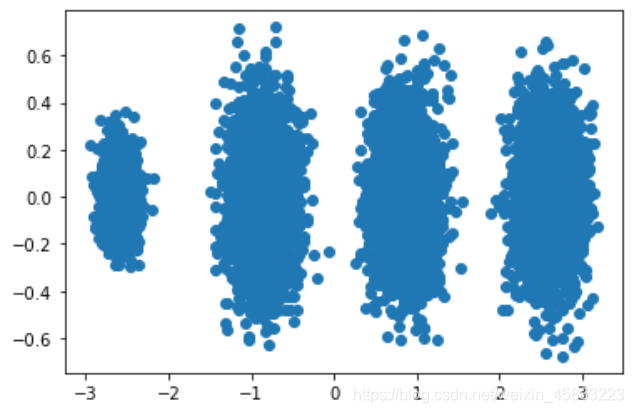

传统机器学习算法应用

任务:数据降维、聚类井可视化。 所给数据为10000个样本的特征数据,每-行表示一个样本的3维特征。现要求使用Python语言编程实现:

(1)将原始数据利用PCA算法降为二维并在二维坐标轴上可视化;

(2)对降维后的数据利用K-means算法进行聚类(聚为4类) ;

(3)将聚类结果在二维坐标轴上可视化,每类的数据需用不同颜色进行区分。

import numpy as np

from sklearn.cluster import KMeans

from sklearn.decomposition import PCA

import pandas as pd

import matplotlib.pyplot as plt

raw_csv = pd.read_csv("test.csv", header=None)

raw_data = raw_csv.values.tolist()

X = np.array(raw_data)

pca = PCA(n_components=2)

pca.fit(X)

newX = pca.fit_transform(X)

x = []

y = []

for i in newX:

x.append(i[0])

y.append(i[1])

plt.scatter(x, y)

plt.show()

estimator = KMeans(n_clusters=4)

estimator.fit(newX)

label_pred = estimator.labels_

x0 = newX[label_pred == 0]

x1 = newX[label_pred == 1]

x2 = newX[label_pred == 2]

x3 = newX[label_pred == 3]

plt.scatter(x0[:, 0], x0[:, 1], c="red", marker='o')

plt.scatter(x1[:, 0], x1[:, 1], c="green", marker='*')

plt.scatter(x2[:, 0], x2[:, 1], c="blue", marker='+')

plt.scatter(x3[:, 0], x3[:, 1], c="orange", marker='^')

plt.xlabel('x')

plt.ylabel('y')

plt.show()

深度学习算法应用

任务:图分类模型训练。

数据中给出了猫狗两类图片数据,包括训练集和测试集。现要求利用卷积神经网络或全连接网络在训练集进行训练,并输出测试集的准确率。需提交所有代

码及运行截图。

说明: (1)本体旨在考察参赛者使用深度学习库解决具体计算机视觉问题的完整流程,不以测试集准确率作为评分标准;

(2)PyTorch或TensorFlow均可。

(3)所有代码和答题卡打包为一个文件。

Dataload.py

import os

from torchvision import transforms,datasets

from torch.utils.data import DataLoader

def Datalod(mode="train"):

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

vgg_format = transforms.Compose([

transforms.CenterCrop(224),

transforms.ToTensor(),

normalize,

])

data_dir = './Data'

if(mode=="train"):

train_data = datasets.ImageFolder(os.path.join(data_dir, "train"), vgg_format)

loader_train = DataLoader(train_data, batch_size=16, shuffle=True, num_workers=4)

return loader_train,len(train_data)

else:

test_data = datasets.ImageFolder(os.path.join(data_dir, "test"), vgg_format)

loader_test = DataLoader(test_data, batch_size=5, shuffle=False, num_workers=4)

return loader_test ,len(test_data)

model.py

import torch

import torch.nn as nn

from torchvision import models

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

def Model():

model_vgg = models.vgg16(pretrained=True)

model_vgg_new = model_vgg

for param in model_vgg_new.parameters():

param.requires_grad = False

model_vgg_new.classifier._modules['6'] = nn.Linear(4096, 2)

model_vgg_new.classifier._modules['7'] = torch.nn.LogSoftmax(dim = 1)

model_vgg_new = model_vgg_new.to(device)

return model_vgg_new

train.py

import numpy as np

import torch

import torch.nn as nn

from model import Model

from Dataload import Datalod

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

train_loder,train_size=Datalod("train")

model=Model()

criterion = nn.NLLLoss()

optimizer_vgg = torch.optim.SGD(model.classifier[6].parameters(), lr=0.001)

def train_model(model, dataloader, size, epochs=1, optimizer=None):

model.train()

max_acc = 0

for epoch in range(epochs):

running_loss = 0.0

running_corrects = 0

count = 0

for inputs, classes in dataloader:

inputs = inputs.to(device)

classes = classes.to(device)

outputs = model(inputs)

loss = criterion(outputs, classes)

optimizer = optimizer

optimizer.zero_grad()

loss.backward()

optimizer.step()

_, preds = torch.max(outputs.data, 1)

# statistics

running_loss += loss.data.item()

running_corrects += torch.sum(preds == classes.data)

count += len(inputs)

epoch_loss = running_loss / size

epoch_acc = running_corrects.data.item() / size

print('Loss: {:.4f} Acc: {:.4f}'.format(

epoch_loss, epoch_acc))

if epoch_acc > max_acc:

max_acc = epoch_acc

path = './Model/model.pth'

torch.save(model, path)

def test_model(model, dataloader, size):

model.eval()

predictions = np.zeros(size)

all_classes = np.zeros(size)

all_proba = np.zeros((size, 2))

i = 0

running_loss = 0.0

running_corrects = 0

for inputs, classes in dataloader:

inputs = inputs.to(device)

classes = classes.to(device)

outputs = model(inputs)

loss = criterion(outputs, classes)

_, preds = torch.max(outputs.data, 1)

# statistics

running_loss += loss.data.item()

running_corrects += torch.sum(preds == classes.data)

predictions[i:i + len(classes)] = preds.to('cpu').numpy()

all_classes[i:i + len(classes)] = classes.to('cpu').numpy()

all_proba[i:i + len(classes), :] = outputs.data.to('cpu').numpy()

i += len(classes)

print('Testing: No. ', i, ' process ... total: ', size)

epoch_loss = running_loss / size

epoch_acc = running_corrects.data.item() / size

print('Loss: {:.4f} Acc: {:.4f}'.format(

epoch_loss, epoch_acc))

return predictions, all_proba, all_classes

if __name__ == '__main__':

train_model(model, train_loder, size=train_size, epochs=20,optimizer=optimizer_vgg)

test.py

import numpy as np

import torch

from Dataload import Datalod

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

test_loder,test_size=Datalod("test")

model_vgg_new = torch.load('./Model/model.pth')

model_vgg_new = model_vgg_new.to(device)

def test(model ,dataloader,size):

model.eval()

count=0;

for inputs ,classes in dataloader:

inputs = inputs.to(device)

outputs = model(inputs)

_ ,preds = torch.max(outputs.data ,1)

pred=preds.cpu().data.numpy()

real=classes.cpu().data.numpy()

for i in range(len(pred)):

if(pred[i]==real[i]):

count=count+1

print("Acc: ",count/size)

if __name__ == '__main__':

test(model_vgg_new,test_loder,test_size)