This post is a hands-on write-up based on notes from fellow bloggers, so please go easy on it.

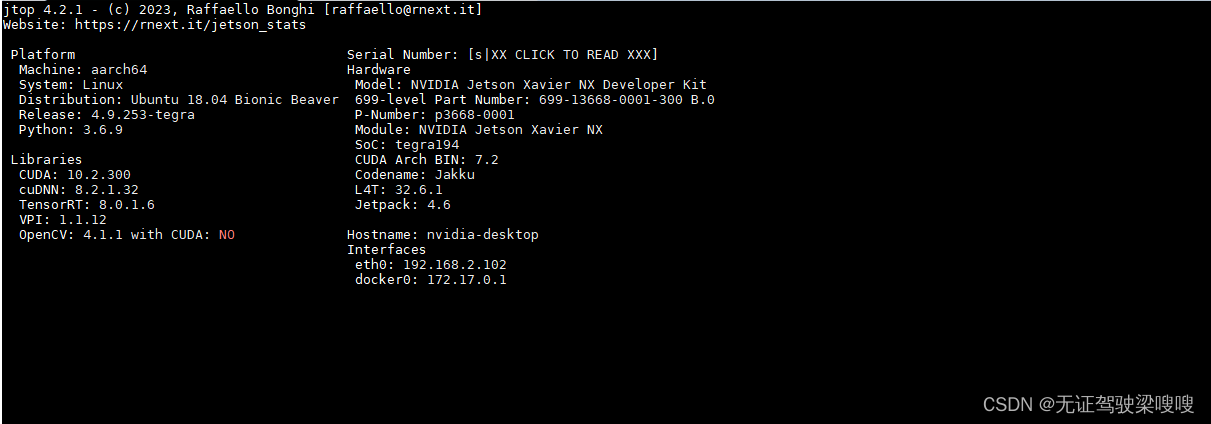

Hardware: NVIDIA Jetson TX2 NX

Software: BSP 32.7.3 (JetPack 4.6.3). The flashing guide is shared again here.

Jetson Linux R32.7.3

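The NVIDIA® Jetson Linux Driver Package is the board support package for Jetson™. It includes the Linux kernel, the UEFI bootloader, NVIDIA drivers, flashing utilities, a sample filesystem based on Ubuntu, and more for the Jetson platform.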

NVIDIA Jetson Linux 32.7.3

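Jetson Linux 32.7.3 is a minor release on top of Jetson Linux 32.7.1 that includes security fixes. The remaining features are the same as in Jetson Linux 32.7.2. It supports all Jetson modules: the Jetson AGX Xavier series, the Jetson Xavier NX series, the Jetson TX2 series, Jetson TX1, and Jetson Nano. All Jetson developer kits are supported as well.

Jetson Linux 32.7.3 is included in JetPack 4.6.3.

For detailed documentation, see the online Jetson Linux Developer Guide.

Supported features

- Support for Jetson TX2 modules with Hynix memory: SKH 161-0434-100 H9HCNNNBKUMLXR-NEE

Vulkan support on Jetson Linux

Downloads and links

To reach other Jetson Linux release pages, visit the Jetson Linux Archive.

Previous Jetson Linux versions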

| Jetson Linux version | Jetson AGX Xavier | Jetson AGX Xavier Industrial | Jetson Xavier NX | Jetson TX2 NX | Jetson TX2 | Jetson TX2i | Jetson TX2 4GB | Jetson TX1 | Jetson Nano |
|---|---|---|---|---|---|---|---|---|---|
| 32.7.3 (November 2022) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| 32.7.2 (April 2022) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| 32.7.1 (February 2022) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| 32.6.1 (August 2021) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| 32.5.2 (July 2021) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | |
| 32.5.1 (February 2021) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | |
| 32.5 (January 2021) | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |

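For an official developer kit, flashing the matching JetPack and OS image with SDK Manager is enough. For third-party carrier boards, follow the vendor's flashing manual to reach this point.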

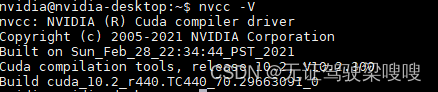

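After the system and JetPack are installed, the command for checking the CUDA version, nvcc -V, produces no version output. Adding the following lines to ~/.bashrc fixes this.

export PATH=/usr/local/cuda-10.2/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-10.2/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

export CUDA_ROOT=/usr/local/cuda

Save and exit, then run source ~/.bashrc.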
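To make the `${PATH:+:${PATH}}` expansion in the exports above less opaque, here is a minimal Python sketch of the same logic. The function name `with_cuda_paths` is made up for this illustration, and the CUDA directory is simply the one used in this post; adjust it if your installation differs.

```python
import os
import shutil


def with_cuda_paths(env, cuda_home="/usr/local/cuda-10.2"):
    """Return a copy of env with the CUDA bin/lib64 directories prepended."""
    new_env = dict(env)
    for var, sub in (("PATH", "bin"), ("LD_LIBRARY_PATH", "lib64")):
        entry = "{}/{}".format(cuda_home, sub)
        current = new_env.get(var, "")
        # ${VAR:+:${VAR}} appends ":<old value>" only when VAR is set and non-empty,
        # which avoids a dangling ":" when the variable did not exist before.
        new_env[var] = entry + (":" + current if current else "")
    return new_env


if __name__ == "__main__":
    env = with_cuda_paths(os.environ)
    nvcc = shutil.which("nvcc", path=env["PATH"])
    print("nvcc:", nvcc if nvcc else "not found, check the CUDA install path")
```

If the script reports that nvcc is not found even with the CUDA directory prepended, the toolkit is probably installed under a different path than the one assumed here.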

sudo apt-get install python3-pip

sudo -H pip3 install jetson-stats

sudo jtop

II. Install pip3 and the required libraries

sudo apt-get update

sudo apt-get install python3-pip python3-dev -y

sudo apt-get install build-essential make cmake cmake-curses-gui -y

sudo apt-get install git g++ pkg-config curl -y

sudo apt-get install libatlas-base-dev gfortran libcanberra-gtk-module libcanberra-gtk3-module -y

sudo apt-get install libhdf5-serial-dev hdf5-tools -y

sudo apt-get install nano locate screen -y

III. Install the required dependencies

sudo apt-get install libfreetype6-dev -y

sudo apt-get install protobuf-compiler libprotobuf-dev openssl -y

sudo apt-get install libssl-dev libcurl4-openssl-dev -y

sudo apt-get install cython3 -y

sudo apt-get install curl

sudo apt-get install libssl-dev libcurl4-openssl-dev

IV. Install the system-level dependencies for OpenCV (codec libraries)

sudo apt-get install build-essential -y

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev -y

sudo apt-get install python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff5-dev libdc1394-22-dev -y

sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev libv4l-dev liblapacke-dev -y

sudo apt-get install libxvidcore-dev libx264-dev -y

sudo apt-get install libatlas-base-dev gfortran -y

sudo apt-get install ffmpeg -y

V. Update CMake

This step is required, because on ARM many packages have to be built from source. The commands below use CMake 3.13; 3.20 also works.

wget http://www.cmake.org/files/v3.13/cmake-3.13.0.tar.gz

tar xpvf cmake-3.13.0.tar.gz cmake-3.13.0/ # extract

cd cmake-3.13.0/

./bootstrap --system-curl # slow

make -j4 # slow

echo 'export PATH=~/cmake-3.13.0/bin/:$PATH' >> ~/.bashrc

source ~/.bashrc # reload .bashrc
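After reloading .bashrc it is worth confirming that the shell now picks up the new CMake. The following is a small sketch that parses the output of `cmake --version`; the helper names are invented for this example, and the 3.13 minimum simply mirrors the version built above.

```python
import re
import subprocess


def parse_cmake_version(output):
    """Extract (major, minor, patch) from `cmake --version` output, or None."""
    match = re.search(r"cmake version (\d+)\.(\d+)\.(\d+)", output)
    return tuple(int(part) for part in match.groups()) if match else None


def cmake_is_new_enough(output, minimum=(3, 13, 0)):
    """True when the reported version is at least `minimum`."""
    version = parse_cmake_version(output)
    return version is not None and version >= minimum


if __name__ == "__main__":
    try:
        # stdout=PIPE / universal_newlines keep this compatible with Python 3.6
        out = subprocess.run(["cmake", "--version"], stdout=subprocess.PIPE,
                             universal_newlines=True).stdout
    except FileNotFoundError:
        out = ""
    print("cmake OK" if cmake_is_new_enough(out) else "cmake missing or older than 3.13")
```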

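If CMake reports that it could not find CURL (missing: CURL_LIBRARY CURL_INCLUDE_DIR):

This simply means the two packages below were not installed during the dependency steps above. Install them and retry: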

sudo apt-get install curl

sudo apt-get install libssl-dev libcurl4-openssl-dev

A small tip:

Jetson devices sometimes fail to mount large-capacity storage devices. One install command fixes it:

sudo apt-get install exfat-utils

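VI. Install PyTorch

Note: why not use the install command from the PyTorch website? In these notes, whenever the CPU is an ARM chip the official command cannot be used, whether on Windows, Linux, or macOS.

Download the CUDA-enabled PyTorch whl file from the NVIDIA website (the commands below use the 1.10.0 wheel).

The aarch64 build of this whl file cannot be found on the PyTorch website. GitHub also hosts offline aarch64 whl files for various other versions, but none of them include CUDA; they are all CPU builds. If you really cannot find the file, message me and I will send it to you.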
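Before installing a downloaded wheel, its file name already tells you whether it targets this board. The sketch below splits a wheel name into its tags and compares them with the running interpreter. The helper names are invented for illustration, and the check is deliberately rough (it only compares the CPython tag and the CPU architecture).

```python
import platform
import sys


def parse_wheel_name(filename):
    """Split a wheel file name into its name, version and compatibility tags."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    parts = filename[:-len(".whl")].split("-")
    if len(parts) not in (5, 6):  # an optional build tag adds a sixth field
        raise ValueError("unexpected wheel name: " + filename)
    return {
        "name": parts[0],
        "version": parts[1],
        "python": parts[-3],
        "abi": parts[-2],
        "platform": parts[-1],
    }


def matches_interpreter(tags, version_info=None, machine=None):
    """Rough check: CPython tag and CPU architecture match this machine."""
    version_info = version_info or sys.version_info
    machine = machine or platform.machine()
    expected_python = "cp{}{}".format(version_info[0], version_info[1])
    return tags["python"] == expected_python and tags["platform"].endswith(machine)


if __name__ == "__main__":
    tags = parse_wheel_name("torch-1.10.0-cp36-cp36m-linux_aarch64.whl")
    print(tags, "compatible:", matches_interpreter(tags))
```

On the Jetson used here (Python 3.6 on aarch64) the wheel name above should be reported as compatible; on an x86 desktop it will not be.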

PyTorch

sudo apt-get install python3-pip libopenblas-base libopenmpi-dev libomp-dev

pip3 install Cython

pip3 install numpy torch-1.10.0-cp36-cp36m-linux_aarch64.whl

torchvision

$ sudo apt-get install libjpeg-dev zlib1g-dev libpython3-dev libavcodec-dev libavformat-dev libswscale-dev

$ git clone --branch v0.11.1 https://github.com/pytorch/vision torchvision # see below for version of torchvision to download

$ cd torchvision

$ export BUILD_VERSION=0.11.1 # where 0.x.0 is the torchvision version

$ python3 setup.py install --user

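If an illegal instruction (core dumped) error appears at this point:

The cause is a missing environment variable, because the core architecture of Jetson boards is unconventional.

Open a terminal and edit .bashrc:

vi ~/.bashrc

Add this line at the very bottom:

export OPENBLAS_CORETYPE=ARMV8

Then run source ~/.bashrc and repeat the build:

cd torchvision # enter the package directory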

export BUILD_VERSION=0.11.1

python3 setup.py install --user

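The build may automatically install Pillow 9.5.0, which is too new for this environment.

Importing torchvision in python3 then fails with the error below. Python versions older than 3.7, such as the Python 3.6 used here, raise it when an imported module relies on `from __future__ import annotations`, so an older Pillow release that still supports Python 3.6 is needed.

SyntaxError: future feature annotations is not defined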
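As a quick diagnostic for this error, the sketch below reports whether the running interpreter supports postponed annotations (PEP 563, available from Python 3.7) and which Pillow version, if any, can be imported. The function names are made up for this example, and it does not identify which installed package triggered the error.

```python
import sys


def supports_future_annotations(version_info=None):
    """`from __future__ import annotations` (PEP 563) needs Python 3.7 or newer."""
    version_info = version_info or sys.version_info
    return tuple(version_info[:2]) >= (3, 7)


def installed_pillow_version():
    """Return the importable Pillow version string, or None if the import fails."""
    try:
        import PIL
    except (ImportError, SyntaxError):
        return None
    return getattr(PIL, "__version__", None)


if __name__ == "__main__":
    print("Python:", sys.version.split()[0])
    print("supports future annotations:", supports_future_annotations())
    print("Pillow:", installed_pillow_version())
```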

Check whether the installation succeeded:

python3

import torch

import torchvision

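print(torch.cuda.is_available()) # if this prints True, the installation succeeded

exit() # finally, exit the Python interpreter

Or simply save the script below and run it (the output is shown first, followed by the script):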

nvidia@nvidia-desktop:~$ python3 pytorch_ceck.py

1.10.0

CUDA available: True

cuDNN version: 8201

Tensor a = tensor([0., 0.], device='cuda:0')

Tensor b = tensor([0.4357, 1.5914], device='cuda:0')

Tensor c = tensor([0.4357, 1.5914], device='cuda:0')

nvidia@nvidia-desktop:~$ cat pytorch_ceck.py

import torch

print(torch.__version__)

print('CUDA available: ' + str(torch.cuda.is_available()))

print('cuDNN version: ' + str(torch.backends.cudnn.version()))

a = torch.cuda.FloatTensor(2).zero_()

print('Tensor a = ' + str(a))

b = torch.randn(2).cuda()

print('Tensor b = ' + str(b))

c = a + b

print('Tensor c = ' + str(c))

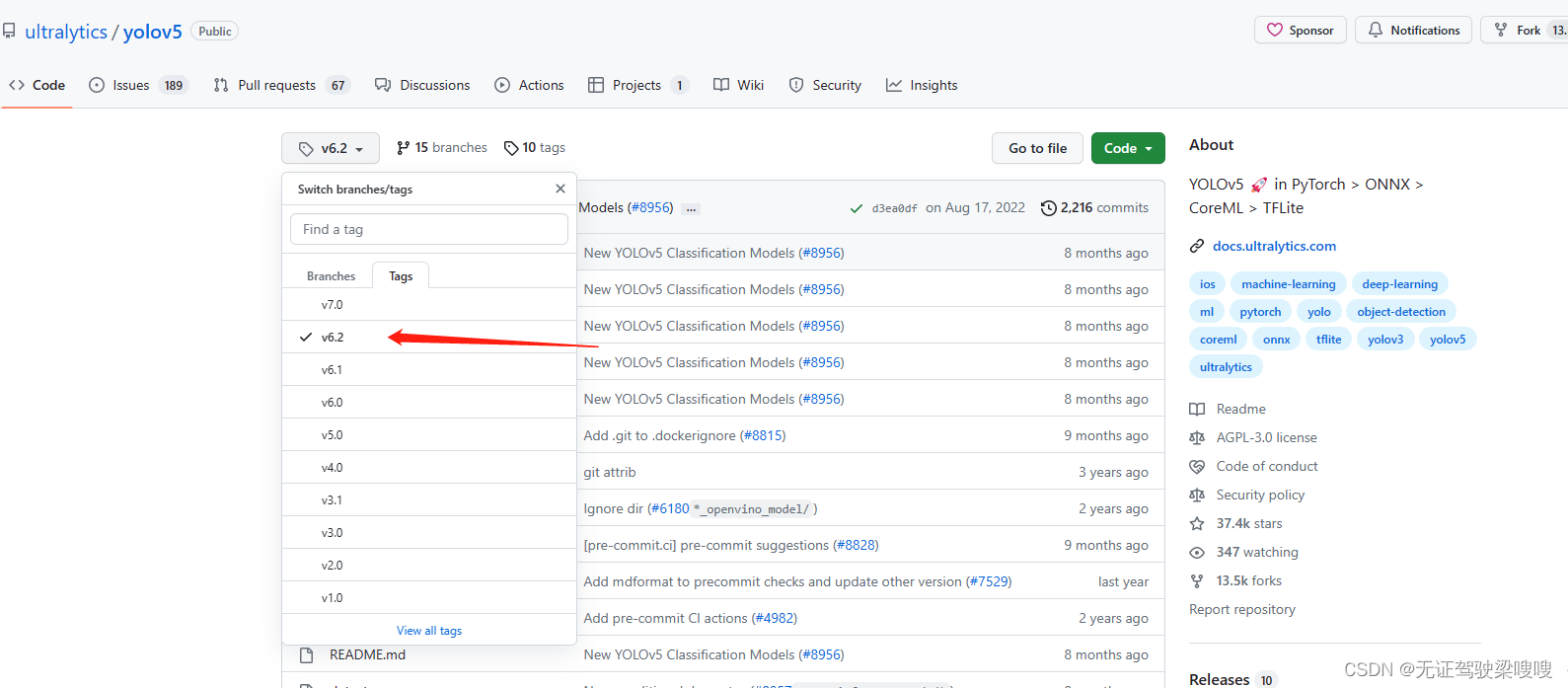

VIII. Download the yolov5 v6.2 source

GitHub - ultralytics/yolov5 at v6.2

git clone --branch v6.2 https://github.com/ultralytics/yolov5.git

cd yolov5

git clone --branch yolov5-v6.2 https://github.com/wang-xinyu/tensorrtx.git

If in doubt, check the version first:

nvidia@nvidia-desktop:~/yolov5$ git log

commit d3ea0df8b9f923685ce5f2555c303b8eddbf83fd (HEAD, tag: v6.2)

Author: Glenn Jocher <[email protected]>

Date: Wed Aug 17 11:59:01 2022 +0200

New YOLOv5 Classification Models (#8956)

* Update

* Logger step fix: Increment step with epochs (#8654)

* enhance

* revert

* allow training from scratch

* [pre-commit.ci] auto fixes from pre-commit.com hooks

for more information, see https://pre-commit.ci

* Update --img argument from train.py

single line

* fix image size from 640 to 128

* suport custom dataloader and augmentation

* [pre-commit.ci] auto fixes from pre-commit.com hooks

for more information, see https://pre-commit.ci

* format

* Update dataloaders.py

* Single line return, single line comment, remove unused argument

* address PR comments

* fix spelling

* don't augment eval set

* use fstring

* update augmentations.py

* new maning convention for transforms

nvidia@nvidia-desktop:~/yolov5$ git clone -b yolov5-v6.2 https://github.com/wang-xinyu/tensorrtx.git

Cloning into 'tensorrtx'...

remote: Enumerating objects: 2317, done.

remote: Counting objects: 100% (84/84), done.

remote: Compressing objects: 100% (67/67), done.

remote: Total 2317 (delta 33), reused 51 (delta 17), pack-reused 2233

Receiving objects: 100% (2317/2317), 1.94 MiB | 4.40 MiB/s, done.

Resolving deltas: 100% (1464/1464), done.

Note: checking out '165b0a428c356ab9e2cb8d2c8f3d4aae3ea74eac'.

You are in 'detached HEAD' state. You can look around, make experimental

changes and commit them, and you can discard any commits you make in this

state without impacting any branches by performing another checkout.

If you want to create a new branch to retain commits you create, you may

do so (now or later) by using -b with the checkout command again. Example:

git checkout -b <new-branch-name>

Install the required dependencies:

pip3 install scikit-build

pip3 install -r requirements.txt

Before running the second command, comment out torch and torchvision inside requirements.txt:

# YOLOv5 requirements

# Usage: pip install -r requirements.txt

# Base ----------------------------------------

matplotlib>=3.2.2

numpy>=1.18.5

opencv-python>=4.1.1

Pillow>=7.1.2

PyYAML>=5.3.1

requests>=2.23.0

scipy>=1.4.1

#torch>=1.7.0

#torchvision>=0.8.1

tqdm>=4.64.0

protobuf<=3.20.1 # https://github.com/ultralytics/yolov5/issues/8012

# Logging -------------------------------------

tensorboard>=2.4.1

# wandb

# clearml

# Plotting ------------------------------------

pandas>=1.1.4

seaborn>=0.11.0

# Export --------------------------------------

# coremltools>=5.2 # CoreML export

# onnx>=1.9.0 # ONNX export

# onnx-simplifier>=0.4.1 # ONNX simplifier

# nvidia-pyindex # TensorRT export

# nvidia-tensorrt # TensorRT export

# scikit-learn==0.19.2 # CoreML quantization

# tensorflow>=2.4.1 # TFLite export (or tensorflow-cpu, tensorflow-aarch64)

# tensorflowjs>=3.9.0 # TF.js export

# openvino-dev # OpenVINO export

# Extras --------------------------------------

ipython # interactive notebook

psutil # system utilization

thop>=0.1.1 # FLOPs computation

# albumentations>=1.0.3

# pycocotools>=2.0 # COCO mAP

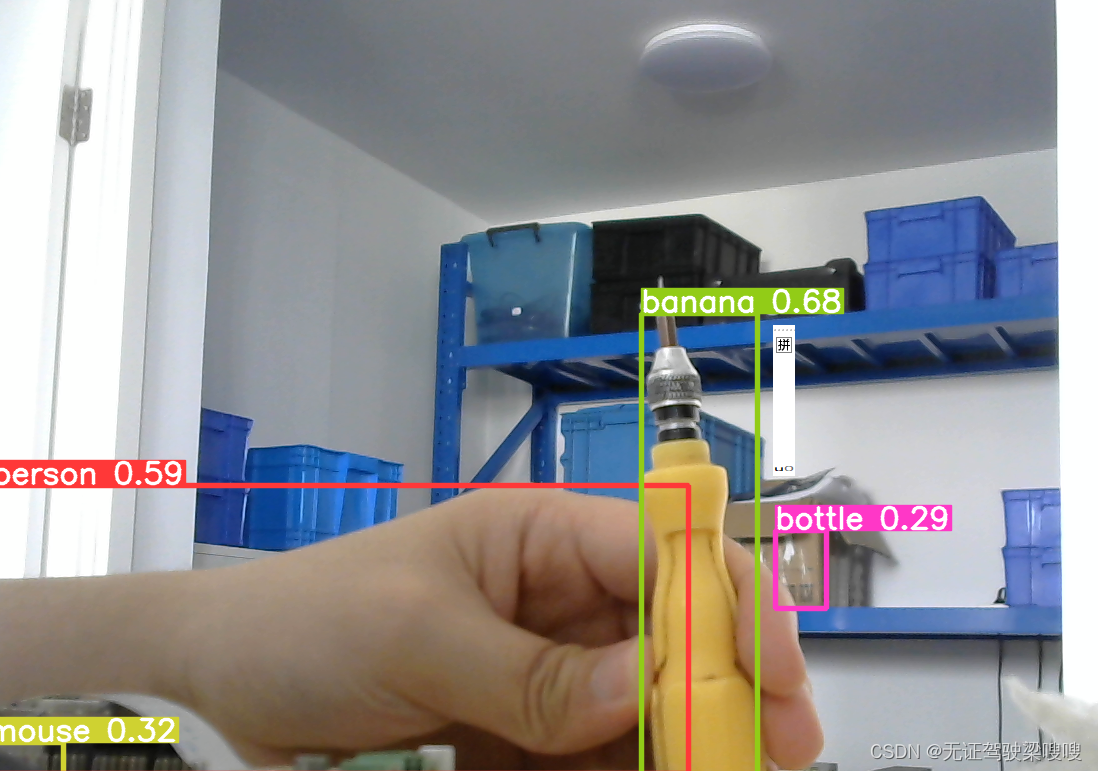

# roboflow

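python3 detect.py --source 0

The command fails with the following error:

TypeError: unlink() got an unexpected keyword argument 'missing_ok'

I did not know the proper fix, so I disabled the offending line for now.

nvidia@nvidia-desktop:~/yolov5$ sudo vi /home/nvidia/yolov5/utils/downloads.py

File "/home/nvidia/yolov5/utils/downloads.py", line 47, in safe_download

file.unlink(missing_ok=True) # remove partial downloads

TypeError: unlink() got an unexpected keyword argument 'missing_ok'

After commenting out line 47, detection ran. The missing_ok parameter of Path.unlink() was added in Python 3.8, while this system runs Python 3.6.9, which is why the call fails.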
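Instead of commenting the line out, the same behavior can be had on Python 3.6 with a small helper. This is a minimal sketch, not the upstream fix; `unlink_if_exists` and the file name are hypothetical names chosen for this example.

```python
from pathlib import Path
import tempfile


def unlink_if_exists(path):
    """Python 3.6-friendly stand-in for Path.unlink(missing_ok=True)."""
    try:
        Path(path).unlink()
    except FileNotFoundError:
        pass  # nothing to remove, which is what missing_ok=True would tolerate


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        partial = Path(tmp) / "partial_download.pt"  # hypothetical file name
        partial.write_bytes(b"incomplete")
        unlink_if_exists(partial)  # removes the file
        unlink_if_exists(partial)  # second call is a no-op instead of an error
        print(partial.exists())
```

In downloads.py the call `file.unlink(missing_ok=True)` could then be replaced by an equivalent try/except around `file.unlink()`, which keeps the cleanup of partial downloads working.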

Hmm, the detection error is quite large.

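Source configuration notes

Choose the model with a command-line argument: n/s/m/l/x/n6/s6/m6/l6/x6

The input size is defined in yololayer.h

The number of classes is defined in yololayer.h; do not forget to change it for a custom model

INT8/FP16/FP32 can be selected with a macro in yolov5.cpp

The GPU ID can be set with a macro in yolov5.cpp

The NMS threshold is set in yolov5.cpp

The bounding-box confidence threshold is set in yolov5.cpp

The batch size is set in yolov5.cpp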

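3. Run

3.1 Convert .pt to .wts

The tensorrtx project builds the model layer by layer with the TensorRT Layer API and loads the weights in its own custom way. The gen_wts.py script saves the yolov5 model weights (yolov5.pt) as yolov5.wts, the author's custom weight file format, which is convenient to load later.

The weights can be generated locally; copy the resulting yolov5.wts file back into the yolov5 folder.

The commands for generating yolov5s.wts are as follows (gen_wts.py comes from tensorrtx/yolov5 and is run here from the yolov5 directory):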

nvidia@nvidia-desktop:~/yolov5$ mkdir weights

nvidia@nvidia-desktop:~/yolov5$ cp yolov5s.pt weights/

nvidia@nvidia-desktop:~/yolov5$ python3 gen_wts.py -w weights/yolov5s.pt yolov5s.wts

usage: gen_wts.py [-h] -w WEIGHTS [-o OUTPUT] [-t {detect,cls}]

gen_wts.py: error: unrecognized arguments: yolov5s.wts

nvidia@nvidia-desktop:~/yolov5$ python3 gen_wts.py -w weights/yolov5s.pt -o yolov5s.wts

Generating .wts for detect model

YOLOv5 🚀 v6.2-0-gd3ea0df Python-3.6.9 torch-1.10.0 CPU

Loading weights/yolov5s.pt

Writing into yolov5s.wts

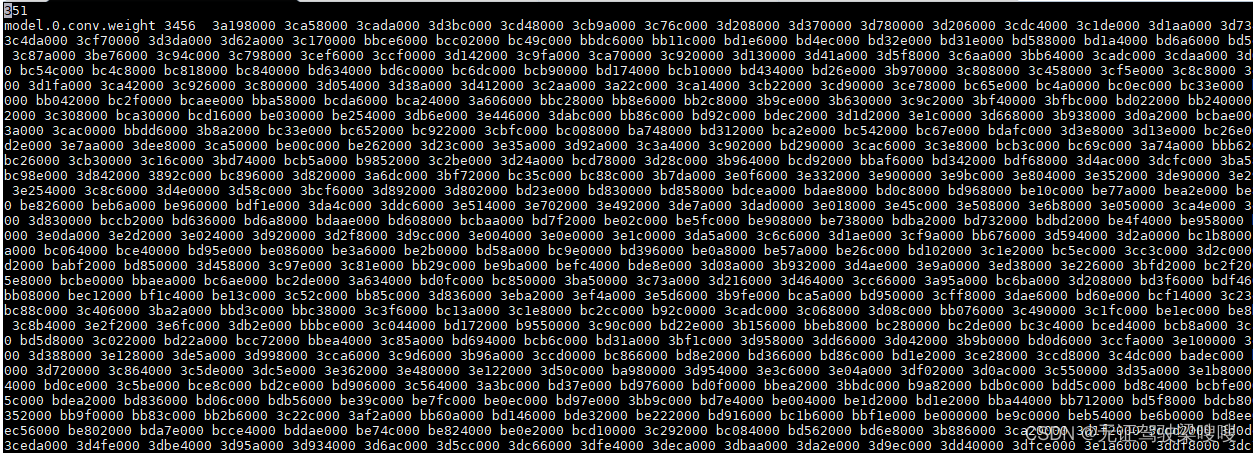

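Part of the generated .wts weight file is shown in the figure.

A .wts file is a plain-text file.

351 is the number of keys in the model, that is, the number of lines that follow (not counting this first line).

Each line has the form [weight name] [value count = N] [value1] [value2] … [valueN]

model.0.conv.weight is the name of the first key stored in the model weights.

3456 is the total number of values stored for that first key.

The numbers after it are the values of that first key, stored in hexadecimal form.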
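The layout described above can be sketched as a small encoder and decoder. This assumes each hexadecimal value is a big-endian IEEE-754 float32, which is an assumption of this sketch rather than something stated in the post; `encode_wts` and `decode_wts` are names invented for the example.

```python
import struct


def encode_wts(weights):
    """Serialise {name: [float, ...]} into the text layout described above."""
    lines = [str(len(weights))]  # first line: number of entries that follow
    for name, values in weights.items():
        # Assumption: each value is written as the hex of a big-endian float32.
        hex_values = [struct.pack(">f", float(v)).hex() for v in values]
        lines.append(" ".join([name, str(len(values))] + hex_values))
    return "\n".join(lines) + "\n"


def decode_wts(text):
    """Parse .wts text back into {name: [float, ...]}."""
    lines = text.strip().splitlines()
    count = int(lines[0])
    if len(lines) - 1 != count:
        raise ValueError("header says {} entries, found {}".format(count, len(lines) - 1))
    weights = {}
    for line in lines[1:]:
        fields = line.split()
        name, size, hex_values = fields[0], int(fields[1]), fields[2:]
        if len(hex_values) != size:
            raise ValueError("value count mismatch for " + name)
        weights[name] = [struct.unpack(">f", bytes.fromhex(h))[0] for h in hex_values]
    return weights


if __name__ == "__main__":
    sample = {"model.0.conv.weight": [0.5, -1.25, 0.0]}
    text = encode_wts(sample)
    print(text, end="")
    print(decode_wts(text))
```

Storing the raw float bits as hex keeps the file plain text while avoiding any rounding from decimal formatting, which is presumably why the format takes this shape.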

3.2 build

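Load the yolov5s.wts weight file and serialize it with TensorRT into an engine file. First change CLASS_NUM in yololayer.h to the number of classes of your own trained model. For example, a model trained with a single class needs CLASS_NUM set to 1.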
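Editing CLASS_NUM by hand is easy to forget, so the change can also be scripted. The sketch below reads and rewrites the value in the header text. It assumes the declaration looks like `static constexpr int CLASS_NUM = 80;` or `#define CLASS_NUM 80`; check the actual yololayer.h first, since that form is an assumption here, and the function names are invented for the example.

```python
import re

# Assumed declaration forms; verify against the real yololayer.h before use.
_CLASS_NUM_PATTERN = re.compile(
    r"^(?P<prefix>\s*(?:static\s+constexpr\s+int\s+CLASS_NUM\s*=\s*"
    r"|#define\s+CLASS_NUM\s+))(?P<value>\d+)",
    re.MULTILINE,
)


def get_class_num(header_text):
    """Return the current CLASS_NUM value, or None if no declaration is found."""
    match = _CLASS_NUM_PATTERN.search(header_text)
    return int(match.group("value")) if match else None


def set_class_num(header_text, class_num):
    """Return header_text with the first CLASS_NUM declaration set to class_num."""
    new_text, replaced = _CLASS_NUM_PATTERN.subn(
        lambda m: m.group("prefix") + str(class_num), header_text, count=1)
    if replaced == 0:
        raise ValueError("CLASS_NUM declaration not found")
    return new_text


if __name__ == "__main__":
    header = "namespace Yolo\n{\n    static constexpr int CLASS_NUM = 80;\n}\n"
    print(get_class_num(header))
    print(set_class_num(header, 1))
```

To apply it to the real file, read yololayer.h into a string, pass it through `set_class_num`, and write the result back before rebuilding.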

After the change, build and generate the engine file with the following commands:

mkdir build

cd build/

cp ../../../yolov5s.wts . # copy the weights generated in 3.1

cmake .. && make -j6

sudo ./yolov5 -s yolov5s.wts yolov5s.engine s

cd ..

vi yololayer.h # adjust CLASS_NUM if needed

cd build/

cmake .. && make -j6 # rebuild after editing yololayer.h

sudo ./yolov5 -s yolov5s.wts yolov5s.engine s # regenerate the engine

When the commands finish, the yolov5s.engine file is generated in the build directory.

3.3 run

Run inference with the engine file generated by TensorRT:

sudo ./yolov5 -d yolov5s.engine ../../../data/images/

3.4 USB camera test (yolov5.cpp code link)

mv yolov5.cpp yolov5.cpp1 # keep the original as a backup

vi yolov5.cpp # create the camera version

cd build/

make -j6

sudo ./yolov5 -d yolov5s.engine ../samples

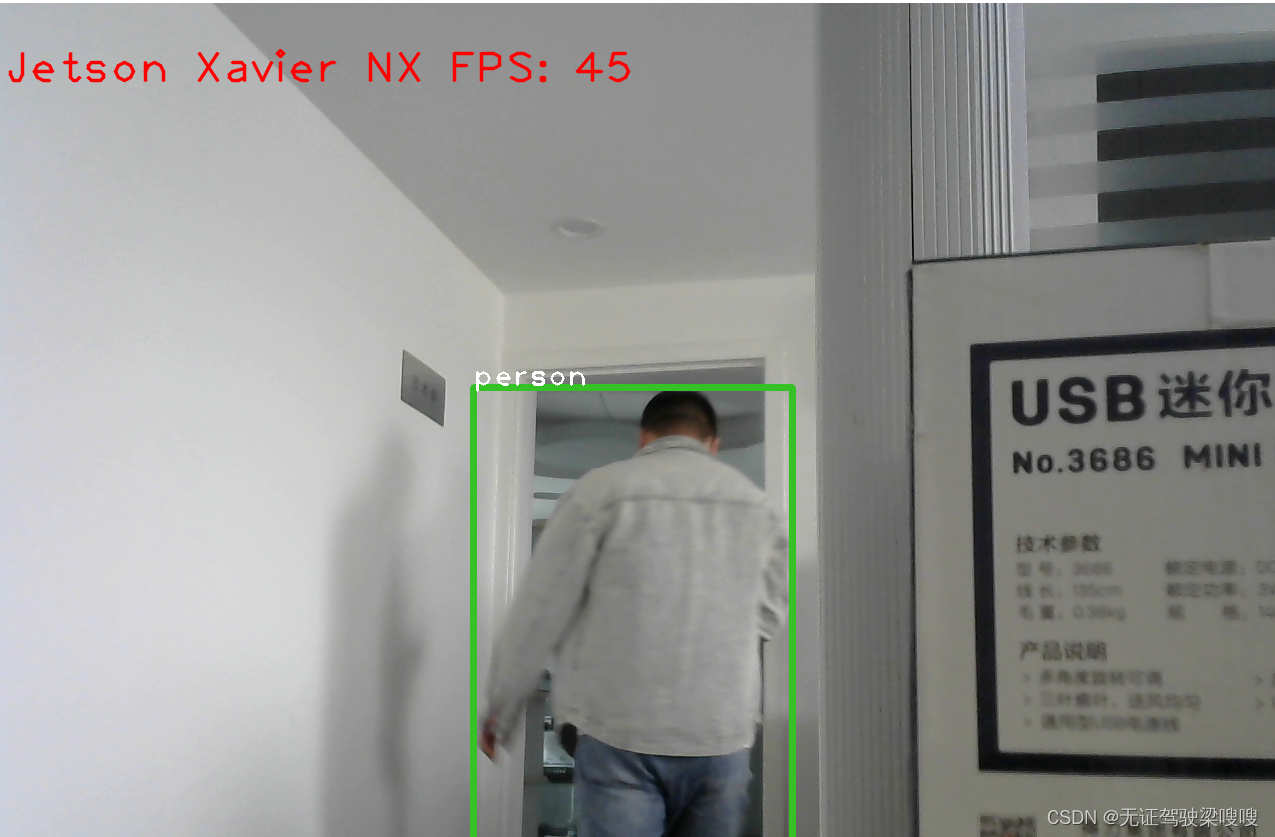

3.5 CSI camera test

For now the CSI camera is tested with the help of DeepStream; a C++ version will follow later.

cd /opt/nvidia/deepstream/deepstream-6.0/samples/configs/deepstream-app/

deepstream-app -c source8_1080p_dec_infer-resnet_tracker_tiled_display_fp16_nano.txt

Use an existing project from an experienced developer:

nvidia@nvidia-desktop:~/yolov5$ git clone https://github.com/DanaHan/Yolov5-in-Deepstream-5.0.git

Cloning into 'Yolov5-in-Deepstream-5.0'...

remote: Enumerating objects: 93, done.

remote: Counting objects: 100% (93/93), done.

remote: Compressing objects: 100% (73/73), done.

remote: Total 93 (delta 26), reused 86 (delta 20), pack-reused 0

Unpacking objects: 100% (93/93), done.

nvidia@nvidia-desktop:~/yolov5$ cd Yolov5-in-Deepstream-5.0/

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0/nvdsinfer_custom_impl_Yolo$ vi nvdsparsebbox_Yolo.cpp

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0/nvdsinfer_custom_impl_Yolo$ make

Note: check your CUDA version here. You can edit the Makefile first so that it matches the CUDA version of the current environment.

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0/nvdsinfer_custom_impl_Yolo$ cd ..

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ cp /home/nvidia/yolov5/tensorrtx/yolov5/build/libmyplugins.so .

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ cp /home/nvidia/yolov5/tensorrtx/yolov5/build/yolov5 .

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ cp /home/nvidia/yolov5/tensorrtx/yolov5/build/yolov5s.engine .

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ ls

config_infer_primary_yoloV5.txt deepstream_app_config_yoloV5.txt includes libmyplugins.so nvdsinfer_custom_impl_Yolo yolov5 yolov5s.engine

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ touch labels.txt

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ la labels.txt

labels.txt

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ vi labels.txt

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ vi deepstream_app_config_yoloV5.txt

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ vi config_infer_primary_yoloV5.txt

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ vi deepstream_app_config_yoloV5.txt

nvidia@nvidia-desktop:~/yolov5/Yolov5-in-Deepstream-5.0/Deepstream 5.0$ ls

config_infer_primary_yoloV5.txt deepstream_app_config_yoloV5.txt includes labels.txt libmyplugins.so nvdsinfer_custom_impl_Yolo yolov5 yolov5s.engine

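Test with an mp4 video

Run the command below from the Yolov5-in-Deepstream-5.0/Deepstream 5.0 directory. Remember to update the paths in the config files before running.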

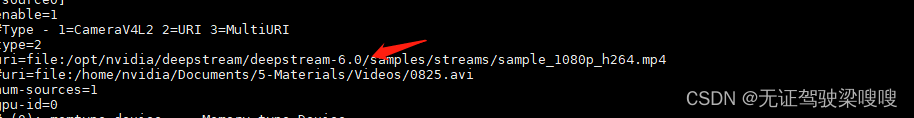

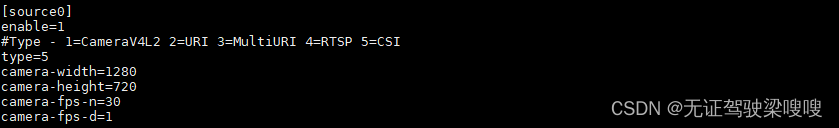
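Since wrong paths in the config are an easy mistake at this step, a quick check before launching can save a failed run. The sketch below parses an nvinfer-style config and lists referenced files that do not exist. It assumes the file has a [property] section with the keys model-engine-file, labelfile-path and custom-lib-path; whether this particular project's config uses all of them is an assumption, and `missing_files` is a name invented for the example.

```python
import configparser
import os

# Keys assumed to hold file paths in the inference config.
PATH_KEYS = ("model-engine-file", "labelfile-path", "custom-lib-path")


def missing_files(config_text, base_dir, section="property"):
    """Return (key, path) pairs whose referenced file does not exist."""
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(config_text)
    missing = []
    if not parser.has_section(section):
        return missing
    for key in PATH_KEYS:
        if parser.has_option(section, key):
            # Relative paths are resolved against the directory of the config file.
            path = os.path.join(base_dir, parser.get(section, key))
            if not os.path.exists(path):
                missing.append((key, path))
    return missing


if __name__ == "__main__":
    config_path = "config_infer_primary_yoloV5.txt"
    if os.path.exists(config_path):
        with open(config_path) as handle:
            for key, path in missing_files(handle.read(), os.path.dirname(config_path) or "."):
                print("missing:", key, "->", path)
```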

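LD_PRELOAD=./libmyplugins.so deepstream-app -c deepstream_app_config_yoloV5.txt

Test with a CSI camera

Modify deepstream_app_config_yoloV5.txt and add the following content

Video test

This is still a rough draft. I need to sort out Docker first, so I will stop here and fill in the rest later.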