MobileNetV2 Network Model: Detailed Explanation and Reproduction

1. First, a brief review of the MobileNetV2 network structure

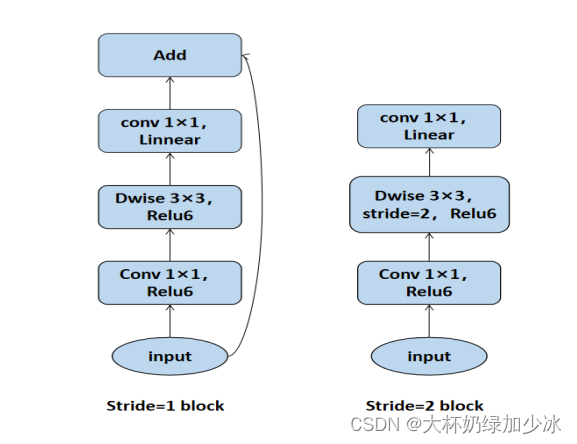

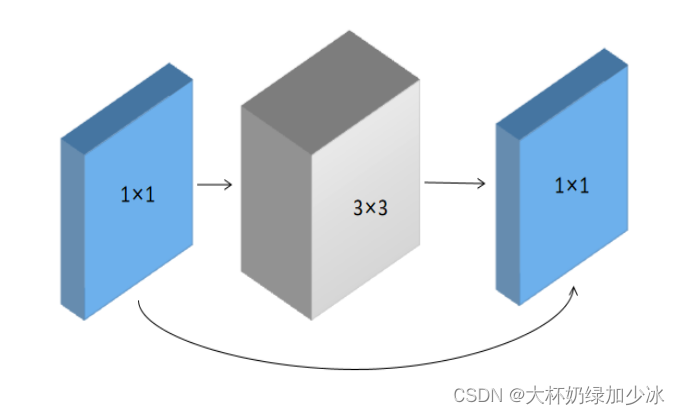

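In this section we mainly explain the structure of the improved MobileNetV2 network. Before introducing the improvement, let us review the original MobileNetV2 network. The original MobileNetV2 introduced two different blocks, whose structures are shown in the figures above: a stride-1 block with a shortcut connection and a stride-2 block without one. The first difference between the residual structure and the inverted residual structure is how the channel dimension changes. A residual block first reduces the number of channels and then expands it again, so it is wide at both ends and narrow in the middle. An inverted residual block does the opposite: a 1×1 convolution first expands the channels, a 3×3 depthwise convolution filters the expanded features, and a linear 1×1 convolution (with no ReLU6 after it) projects them back down, so the block is narrow at both ends and wide in the middle. A comparison of the two structures is shown in the figure. The inverted residual design saves computation because the spatial filtering in the wide middle is depthwise (one 3×3 filter per channel), and the expansion convolution increases the information exchange between channels, which helps the network capture features.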
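To make the cost argument concrete, here is a small sketch that counts multiply-adds for one stride-1 inverted residual block. The example sizes (a 56×56 feature map with 24 input and output channels and expansion ratio t = 6, as in the second block of the 24-channel stage in the model code) are illustrative assumptions, and bias and BatchNorm costs are ignored.

```python
def inverted_residual_macs(h, w, c_in, c_out, t, k=3):
    """Multiply-adds of one stride-1 inverted residual block (bias and BN ignored)."""
    hidden = c_in * t                   # channels after the 1x1 expansion
    expand = h * w * c_in * hidden      # 1x1 expansion conv
    depthwise = h * w * hidden * k * k  # k x k depthwise conv, one filter per channel
    project = h * w * hidden * c_out    # 1x1 linear projection
    return expand, depthwise, project


def standard_conv_macs(h, w, c_in, c_out, k=3):
    """Multiply-adds of an ordinary k x k convolution (stride 1, 'SAME' padding)."""
    return h * w * k * k * c_in * c_out


# example sizes: 56x56 feature map, 24 channels in and out, expansion ratio t = 6
expand, depthwise, project = inverted_residual_macs(56, 56, 24, 24, t=6)
print(expand, depthwise, project, expand + depthwise + project)
# 10838016 4064256 10838016 25740288
print(standard_conv_macs(56, 56, 144, 144))  # full 3x3 conv on the 144 expanded channels
# 585252864
```

The three terms add up to h·w·c_in·t·(c_in + k² + c_out), the cost expression given in the MobileNetV2 paper. A full 3×3 convolution on the 144-channel expanded tensor would cost 144 times more than the depthwise step, which is why the wide middle of the block stays affordable.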

2. Reproducing the MobileNetV2 network model

(1) Training script

```python
import os
import sys
import glob  # used by the (commented-out) pretrained-weights check below
import json

import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tqdm import tqdm

from model import MobileNetV2


def main():
    # Alternatively, point image_path straight at an absolute folder, e.g. r"E:\study\MobileNet\data"
    data_root = os.path.abspath(os.path.join(os.getcwd(), "../.."))  # get data root path
    # os.path.join inserts the separator that plain string concatenation was missing
    image_path = os.path.join(data_root, "study", "mobilenet", "data")
    train_dir = os.path.join(image_path, "train")
    validation_dir = os.path.join(image_path, "test")
    assert os.path.exists(train_dir), "cannot find {}".format(train_dir)
    assert os.path.exists(validation_dir), "cannot find {}".format(validation_dir)

    im_height = 224
    im_width = 224
    batch_size = 16
    epochs = 3
    num_classes = 8  # must equal the number of class folders in train_dir

    def pre_function(img):
        # scale pixel values from [0, 255] to [-1, 1]
        img = img / 255.
        img = (img - 0.5) * 2.0
        return img

    # data generator with data augmentation
    train_image_generator = ImageDataGenerator(horizontal_flip=True,
                                               preprocessing_function=pre_function)
    validation_image_generator = ImageDataGenerator(preprocessing_function=pre_function)

    train_data_gen = train_image_generator.flow_from_directory(directory=train_dir,
                                                               batch_size=batch_size,
                                                               shuffle=True,
                                                               target_size=(im_height, im_width),
                                                               class_mode='categorical')
    total_train = train_data_gen.n

    # get class dict
    class_indices = train_data_gen.class_indices
    # transform value and key of dict
    inverse_dict = dict((val, key) for key, val in class_indices.items())
    # write dict into json file
    json_str = json.dumps(inverse_dict, indent=8)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    val_data_gen = validation_image_generator.flow_from_directory(directory=validation_dir,
                                                                  batch_size=batch_size,
                                                                  shuffle=False,
                                                                  target_size=(im_height, im_width),
                                                                  class_mode='categorical')
    total_val = val_data_gen.n
    print("using {} images for training, {} images for validation.".format(total_train,
                                                                           total_val))

    # create model except fc layer
    feature = MobileNetV2(include_top=False)
    # pretrained weights download link: https://pan.baidu.com/s/1YgFoIKHqooMrTQg_IqI2hA  password: 2qht
    # pre_weights_path = './pretrain_weights.ckpt'
    # assert len(glob.glob(pre_weights_path + "*")), "cannot find {}".format(pre_weights_path)
    # feature.load_weights(pre_weights_path)
    # feature.trainable = False
    feature.summary()

    # add last fc layer
    model = tf.keras.Sequential([feature,
                                 tf.keras.layers.GlobalAvgPool2D(),
                                 tf.keras.layers.Dropout(rate=0.5),
                                 tf.keras.layers.Dense(num_classes),
                                 tf.keras.layers.Softmax()])
    # model.summary()

    # using keras low level api for training
    # the model ends in Softmax, so the loss receives probabilities (from_logits=False)
    loss_object = tf.keras.losses.CategoricalCrossentropy(from_logits=False)
    optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)

    train_loss = tf.keras.metrics.Mean(name='train_loss')
    train_accuracy = tf.keras.metrics.CategoricalAccuracy(name='train_accuracy')
    val_loss = tf.keras.metrics.Mean(name='val_loss')
    val_accuracy = tf.keras.metrics.CategoricalAccuracy(name='val_accuracy')

    @tf.function
    def train_step(images, labels):
        with tf.GradientTape() as tape:
            output = model(images, training=True)
            loss = loss_object(labels, output)
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))

        train_loss(loss)
        train_accuracy(labels, output)

    @tf.function
    def val_step(images, labels):
        output = model(images, training=False)
        loss = loss_object(labels, output)

        val_loss(loss)
        val_accuracy(labels, output)

    best_val_acc = 0.
    os.makedirs("./save_weights", exist_ok=True)  # make sure the output folder exists
    for epoch in range(epochs):
        train_loss.reset_states()  # clear history info
        train_accuracy.reset_states()  # clear history info
        val_loss.reset_states()  # clear history info
        val_accuracy.reset_states()  # clear history info

        # train
        train_bar = tqdm(range(total_train // batch_size), file=sys.stdout)
        for step in train_bar:
            images, labels = next(train_data_gen)
            train_step(images, labels)

            # print train process
            train_bar.desc = "train epoch[{}/{}] loss:{:.3f}, acc:{:.3f}".format(epoch + 1,
                                                                                 epochs,
                                                                                 train_loss.result(),
                                                                                 train_accuracy.result())

        # validate
        val_bar = tqdm(range(total_val // batch_size), file=sys.stdout)
        for step in val_bar:
            val_images, val_labels = next(val_data_gen)
            val_step(val_images, val_labels)

            # print val process
            val_bar.desc = "valid epoch[{}/{}] loss:{:.3f}, acc:{:.3f}".format(epoch + 1,
                                                                               epochs,
                                                                               val_loss.result(),
                                                                               val_accuracy.result())

        # only save best weights
        if val_accuracy.result() > best_val_acc:
            best_val_acc = val_accuracy.result()
            model.save_weights("./save_weights/resMobileNetV2.ckpt", save_format="tf")


if __name__ == '__main__':
    main()
```
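Both preprocessing paths in this article map pixel values into [-1, 1]: pre_function in the training script above, and convert_image_dtype followed by (image - 0.5) / 0.5 in the utils module of part (3). The plain-Python sketch below (no TensorFlow needed) checks that the two formulas agree for 8-bit pixel values; utils_normalize is a hypothetical helper that approximates the TensorFlow ops with ordinary Python floats.

```python
def pre_function(img):
    # same arithmetic as pre_function in the training script
    img = img / 255.
    img = (img - 0.5) * 2.0
    return img


def utils_normalize(pixel):
    # hypothetical helper: convert_image_dtype maps 8-bit values to [0, 1],
    # then generate_ds applies (image - 0.5) / 0.5
    image = pixel / 255.
    return (image - 0.5) / 0.5


for pixel in (0, 127.5, 255):
    print(pixel, pre_function(pixel), utils_normalize(pixel))
# 0 -1.0 -1.0
# 127.5 0.0 0.0
# 255 1.0 1.0
```

Keeping the same input range matters if you switch between the ImageDataGenerator pipeline and the tf.data pipeline, or load pretrained weights that were trained on [-1, 1] inputs.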

(2) Network model (model)

```python
from tensorflow.keras import layers, Model, Sequential


def _make_divisible(ch, divisor=8, min_ch=None):
    """
    This function is taken from the original tf repo.
    It ensures that all layers have a channel number that is divisible by 8
    It can be seen here:
    https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py
    """
    if min_ch is None:
        min_ch = divisor
    new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)
    # Make sure that round down does not go down by more than 10%.
    if new_ch < 0.9 * ch:
        new_ch += divisor
    return new_ch


class ConvBNReLU(layers.Layer):
    """Conv2D -> BatchNorm -> ReLU6."""

    def __init__(self, out_channel, kernel_size=3, stride=1, **kwargs):
        super(ConvBNReLU, self).__init__(**kwargs)
        self.conv = layers.Conv2D(filters=out_channel, kernel_size=kernel_size,
                                  strides=stride, padding='SAME', use_bias=False, name='Conv2d')
        self.bn = layers.BatchNormalization(momentum=0.9, epsilon=1e-5, name='BatchNorm')
        self.activation = layers.ReLU(max_value=6.0)

    def call(self, inputs, training=False):
        x = self.conv(inputs)
        x = self.bn(x, training=training)
        x = self.activation(x)
        return x


class InvertedResidual(layers.Layer):
    def __init__(self, in_channel, out_channel, stride, expand_ratio, **kwargs):
        super(InvertedResidual, self).__init__(**kwargs)
        self.hidden_channel = in_channel * expand_ratio
        # the shortcut is only used when the input and output shapes match
        self.use_shortcut = stride == 1 and in_channel == out_channel

        layer_list = []
        if expand_ratio != 1:
            # 1x1 pointwise conv (expansion)
            layer_list.append(ConvBNReLU(out_channel=self.hidden_channel, kernel_size=1, name='expand'))

        layer_list.extend([
            # 3x3 depthwise conv
            layers.DepthwiseConv2D(kernel_size=3, padding='SAME', strides=stride,
                                   use_bias=False, name='depthwise'),
            layers.BatchNormalization(momentum=0.9, epsilon=1e-5, name='depthwise/BatchNorm'),
            layers.ReLU(max_value=6.0),
            # 1x1 pointwise conv (linear: no activation after it)
            layers.Conv2D(filters=out_channel, kernel_size=1, strides=1,
                          padding='SAME', use_bias=False, name='project'),
            layers.BatchNormalization(momentum=0.9, epsilon=1e-5, name='project/BatchNorm')
        ])
        self.main_branch = Sequential(layer_list, name='expanded_conv')

    def call(self, inputs, training=False, **kwargs):
        if self.use_shortcut:
            return inputs + self.main_branch(inputs, training=training)
        else:
            return self.main_branch(inputs, training=training)


def MobileNetV2(im_height=224,
                im_width=224,
                num_classes=1000,
                alpha=1.0,
                round_nearest=8,
                include_top=True):
    block = InvertedResidual
    input_channel = _make_divisible(32 * alpha, round_nearest)
    last_channel = _make_divisible(1280 * alpha, round_nearest)
    inverted_residual_setting = [
        # t, c, n, s
        [1, 16, 1, 1],
        [6, 24, 2, 2],
        [6, 32, 3, 2],
        [6, 64, 4, 2],
        [6, 96, 3, 1],
        [6, 160, 3, 2],
        [6, 320, 1, 1],
    ]

    input_image = layers.Input(shape=(im_height, im_width, 3), dtype='float32')
    # conv1
    x = ConvBNReLU(input_channel, stride=2, name='Conv')(input_image)
    # building inverted residual blocks
    for idx, (t, c, n, s) in enumerate(inverted_residual_setting):
        output_channel = _make_divisible(c * alpha, round_nearest)
        for i in range(n):
            # only the first block of each stage downsamples
            stride = s if i == 0 else 1
            x = block(x.shape[-1],
                      output_channel,
                      stride,
                      expand_ratio=t)(x)
    # building last several layers
    x = ConvBNReLU(last_channel, kernel_size=1, name='Conv_1')(x)

    if include_top is True:
        # building classifier
        x = layers.GlobalAveragePooling2D()(x)  # pool + flatten
        x = layers.Dropout(0.2)(x)
        output = layers.Dense(num_classes, name='Logits')(x)
    else:
        output = x

    model = Model(inputs=input_image, outputs=output)
    return model
```

(3) Dataset image processing (utils)

```python
import os
import json
import random

import tensorflow as tf
import matplotlib.pyplot as plt


def read_split_data(root: str, val_rate: float = 0.2):
    random.seed(0)  # make the random split reproducible
    assert os.path.exists(root), "dataset root: {} does not exist.".format(root)

    # iterate over the folders; each folder corresponds to one class
    flower_class = [cla for cla in os.listdir(root) if os.path.isdir(os.path.join(root, cla))]
    # sort so that the class order is always the same
    flower_class.sort()
    # map each class name to a numeric index
    class_indices = dict((k, v) for v, k in enumerate(flower_class))
    json_str = json.dumps(dict((val, key) for key, val in class_indices.items()), indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    train_images_path = []  # paths of all training images
    train_images_label = []  # class index of each training image
    val_images_path = []  # paths of all validation images
    val_images_label = []  # class index of each validation image
    every_class_num = []  # total number of samples in each class
    supported = [".jpg", ".JPG", ".jpeg", ".JPEG"]  # supported file extensions
    # iterate over the files in each class folder
    for cla in flower_class:
        cla_path = os.path.join(root, cla)
        # collect the paths of all files with a supported extension
        images = [os.path.join(root, cla, i) for i in os.listdir(cla_path)
                  if os.path.splitext(i)[-1] in supported]
        # os.listdir order is arbitrary; sort so the seeded split is reproducible
        images.sort()
        # index of this class
        image_class = class_indices[cla]
        # record the number of samples in this class
        every_class_num.append(len(images))
        # randomly sample validation images at the given ratio (a set makes the lookup below fast)
        val_path = set(random.sample(images, k=int(len(images) * val_rate)))

        for img_path in images:
            if img_path in val_path:  # sampled for validation: put it in the validation set
                val_images_path.append(img_path)
                val_images_label.append(image_class)
            else:  # otherwise put it in the training set
                train_images_path.append(img_path)
                train_images_label.append(image_class)

    print("{} images were found in the dataset.\n{} for training, {} for validation".format(sum(every_class_num),
                                                                                            len(train_images_path),
                                                                                            len(val_images_path)
                                                                                            ))

    plot_image = False
    if plot_image:
        # bar chart of the number of images in each class
        plt.bar(range(len(flower_class)), every_class_num, align='center')
        # replace the x ticks 0, 1, 2, 3, 4 with the class names
        plt.xticks(range(len(flower_class)), flower_class)
        # add a count label above each bar
        for i, v in enumerate(every_class_num):
            plt.text(x=i, y=v + 5, s=str(v), ha='center')
        # x-axis label
        plt.xlabel('image class')
        # y-axis label
        plt.ylabel('number of images')
        # chart title
        plt.title('flower class distribution')
        plt.show()

    return train_images_path, train_images_label, val_images_path, val_images_label


def generate_ds(data_root: str,
                im_height: int,
                im_width: int,
                batch_size: int,
                val_rate: float = 0.1):
    """
    Read and split the dataset, then build the training and validation datasets.
    :param data_root: root directory of the data
    :param im_height: height of the images fed to the network
    :param im_width: width of the images fed to the network
    :param batch_size: batch size used for training
    :param val_rate: fraction of the data assigned to the validation set
    :return: train_ds, val_ds
    """
    train_img_path, train_img_label, val_img_path, val_img_label = read_split_data(data_root, val_rate=val_rate)
    AUTOTUNE = tf.data.experimental.AUTOTUNE

    def process_train_info(img_path, label):
        image = tf.io.read_file(img_path)
        image = tf.image.decode_jpeg(image, channels=3)
        image = tf.image.convert_image_dtype(image, tf.float32)  # uint8 -> float32 in [0, 1]
        # image = tf.cast(image, tf.float32)
        # image = tf.image.resize(image, [im_height, im_width])
        # center-crop or zero-pad to the target size (the image is not rescaled)
        image = tf.image.resize_with_crop_or_pad(image, im_height, im_width)
        image = tf.image.random_flip_left_right(image)
        image = (image - 0.5) / 0.5  # [0, 1] -> [-1, 1]
        return image, label

    def process_val_info(img_path, label):
        image = tf.io.read_file(img_path)
        image = tf.image.decode_jpeg(image, channels=3)
        image = tf.image.convert_image_dtype(image, tf.float32)  # uint8 -> float32 in [0, 1]
        # image = tf.cast(image, tf.float32)
        # image = tf.image.resize(image, [im_height, im_width])
        image = tf.image.resize_with_crop_or_pad(image, im_height, im_width)
        image = (image - 0.5) / 0.5  # [0, 1] -> [-1, 1]
        return image, label

    # Configure dataset for performance
    def configure_for_performance(ds,
                                  shuffle_size: int,
                                  shuffle: bool = False):
        ds = ds.cache()  # cache the decoded data in memory after the first read
        if shuffle:
            ds = ds.shuffle(buffer_size=shuffle_size)  # shuffle the sample order
        ds = ds.batch(batch_size)  # group samples into batches
        ds = ds.prefetch(buffer_size=AUTOTUNE)  # prepare the next step's data while training
        return ds

    train_ds = tf.data.Dataset.from_tensor_slices((tf.constant(train_img_path),
                                                   tf.constant(train_img_label)))
    total_train = len(train_img_path)

    # Use Dataset.map to create a dataset of image, label pairs
    train_ds = train_ds.map(process_train_info, num_parallel_calls=AUTOTUNE)
    train_ds = configure_for_performance(train_ds, total_train, shuffle=True)

    val_ds = tf.data.Dataset.from_tensor_slices((tf.constant(val_img_path),
                                                 tf.constant(val_img_label)))
    total_val = len(val_img_path)
    # Use Dataset.map to create a dataset of image, label pairs
    val_ds = val_ds.map(process_val_info, num_parallel_calls=AUTOTUNE)
    val_ds = configure_for_performance(val_ds, total_val)

    return train_ds, val_ds
```
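read_split_data samples validation images separately inside each class folder, so every class keeps roughly the same train/validation ratio. The sketch below reproduces that logic on an in-memory dictionary instead of real folders, so it runs without a dataset; split_per_class, the class names and the file names are all hypothetical.

```python
import random


def split_per_class(files_by_class, val_rate=0.2, seed=0):
    """Per-class random split, following the logic of read_split_data."""
    random.seed(seed)
    class_names = sorted(files_by_class)
    class_indices = {name: idx for idx, name in enumerate(class_names)}
    train, val = [], []
    for name in class_names:
        images = files_by_class[name]
        # sample int(len * val_rate) files of this class for validation
        val_set = set(random.sample(images, k=int(len(images) * val_rate)))
        for path in images:
            target = val if path in val_set else train
            target.append((path, class_indices[name]))
    return train, val


# hypothetical file lists standing in for two class folders
files = {
    "cat": ["cat/{}.jpg".format(i) for i in range(10)],
    "dog": ["dog/{}.jpg".format(i) for i in range(25)],
}
train, val = split_per_class(files, val_rate=0.2)
print(len(train), len(val))  # 28 7
```

Because int() truncates, a class with fewer than 1 / val_rate images (fewer than 5 at val_rate = 0.2) contributes no validation images at all, which is worth checking on small or unbalanced datasets.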

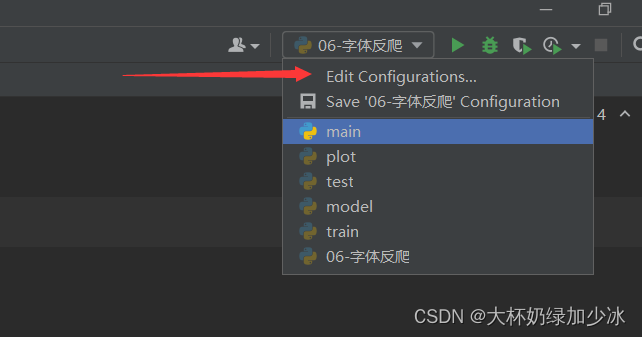

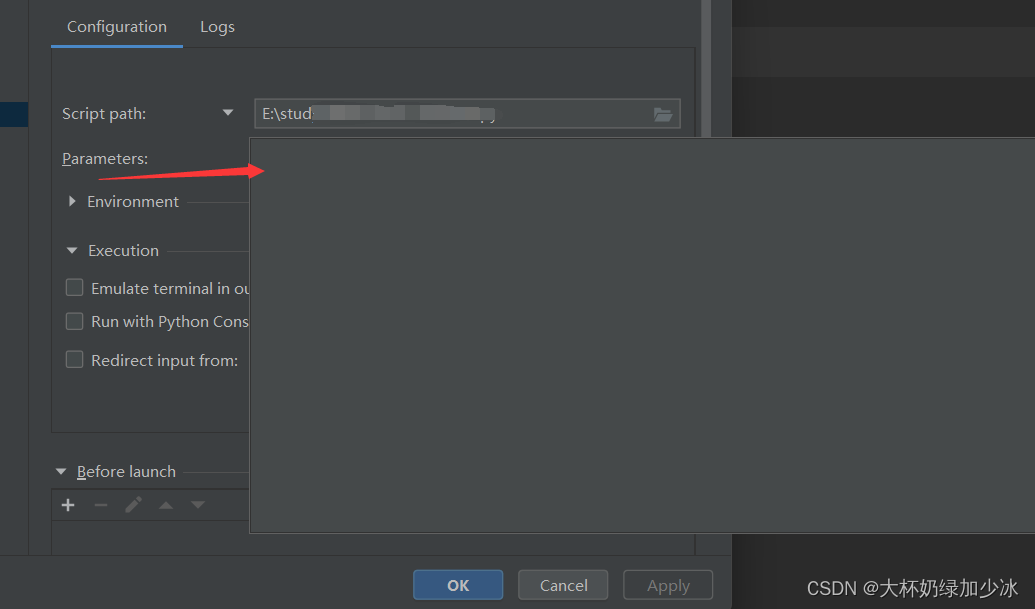

3. Notes

3.1 The file paths must be changed to match your own setup. There are two ways to do this:

Option 1: configure the path directly in the code

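```python
import os

data_root = os.path.abspath(os.path.join(os.getcwd(), "../.."))  # get data root path
# use os.path.join: abspath returns no trailing separator, so "+" would glue the names together
image_path = os.path.join(data_root, "study", "mobilenet", "data")
train_dir = os.path.join(image_path, "train")
validation_dir = os.path.join(image_path, "test")
```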
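The reason for preferring os.path.join over string concatenation: os.path.abspath normalizes the path and never returns a trailing separator (except for a root such as /), so appending a relative path with + glues two folder names together. The sketch uses posixpath, the POSIX flavour of os.path, so the output is the same on every OS; /home/user is a placeholder value.

```python
import posixpath

# placeholder for what os.path.abspath(...) might return; note: no trailing "/"
data_root = "/home/user"

# plain concatenation glues the two folder names together
print(data_root + "study/mobilenet/data/")
# /home/userstudy/mobilenet/data/

# join inserts exactly one separator between components
print(posixpath.join(data_root, "study", "mobilenet", "data", "train"))
# /home/user/study/mobilenet/data/train
```

On Windows, os.path.join uses \ as the separator instead, which is another reason to let it build the path rather than hard-coding "/".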

Option 2: configure the path in the system, as shown in the figure below.

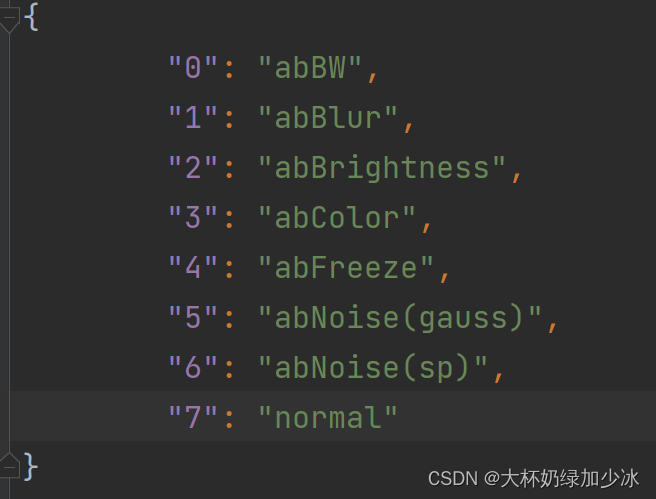

3.2 A .json file needs to be created manually

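Create one entry in the json file for each image class in your dataset, and make sure each entry matches the name of the corresponding class folder exactly. For example, the dataset I used has 8 classes, so the file has 8 entries (and num_classes = 8 in the training script). Note that the training script above also writes class_indices.json automatically from the class folder names of the training set.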
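If you do build the file by hand, it is safer to generate it from the folder names than to type them. The sketch below writes the same {index: class name} mapping that the training script derives from train_data_gen.class_indices, assuming (as flow_from_directory does) that class indices follow the alphabetical order of the sub-folders; write_class_indices and the folder names are hypothetical.

```python
import json
import os
import tempfile


def write_class_indices(data_dir, json_path):
    """Write {index: class_name} for every sub-folder of data_dir, sorted by name."""
    class_names = sorted(d for d in os.listdir(data_dir)
                         if os.path.isdir(os.path.join(data_dir, d)))
    inverse_dict = {idx: name for idx, name in enumerate(class_names)}
    with open(json_path, "w") as f:
        json.dump(inverse_dict, f, indent=4)
    return inverse_dict


# demo on a throw-away directory with hypothetical class folders
with tempfile.TemporaryDirectory() as tmp:
    train_dir = os.path.join(tmp, "train")
    for name in ["roses", "daisy", "tulips"]:
        os.makedirs(os.path.join(train_dir, name))
    json_path = os.path.join(tmp, "class_indices.json")
    write_class_indices(train_dir, json_path)
    with open(json_path) as f:
        print(json.load(f))  # {'0': 'daisy', '1': 'roses', '2': 'tulips'}
```

json turns the integer keys into strings, so code that reads the file back should look classes up with str(index).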