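Docker Networking -- Multi-Host Communication -- 4 -- Ingress

I. Introduction

I have read a lot of material on Docker's ingress network. To reinforce my understanding and make it easier to apply in practice, I want to summarize what I learned from the following three angles.

Part 1: "what", "create", "delete", "modify", "query"

Part 2: experiments that illustrate how it works (iptables chain analysis, IPVS analysis, and tcpdump packet-flow analysis)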

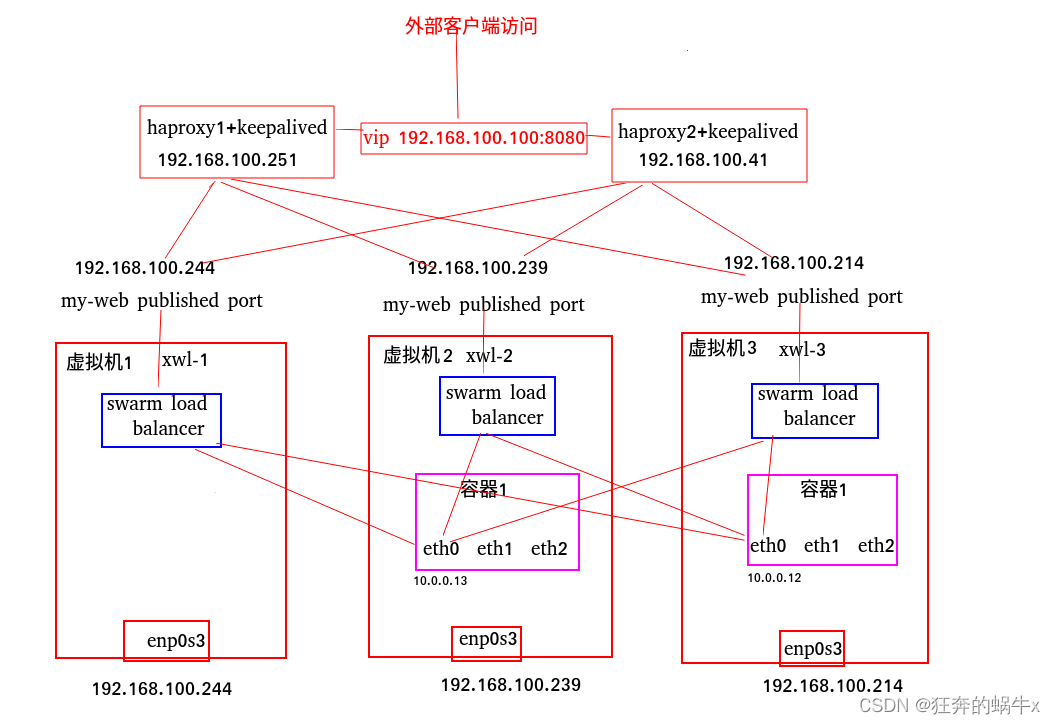

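Part 3: with the ingress network, a single node can load-balance requests. How, then, do we put all the nodes behind one highly available entry point? The Docker documentation mentions that HAProxy can be used as a load balancer in front of the nodes. While researching, I found that HAProxy is commonly paired with keepalived, which provides high availability: if one HAProxy machine goes down, another takes over.

Most of Part 1 is covered by the Docker documentation article "Manage swarm service networks".

Part 1 is also touched on in the blog post "Swarm's ingress network".

Part 2 is partly covered by the Docker documentation article "Use swarm mode routing mesh".

The experiments in Part 2 are simple and quick to complete. The hard part is understanding the logic and principles behind them, which requires the following background: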

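1. First, understand how iptables works; see the article "iptables (1): principles" (or search online for other material).

2. Understand the iptables mangle table, which can be used for QoS, packet modification, and so on, and is very useful; see the article "The iptables mangle table".

3. The ingress routing mesh uses IPVS for load balancing, and IPVS is essentially LVS. For how LVS works, I find this article more detailed and clearer than the LVS official site: "How Linux LVS works".

4. LVS is configured with the ipvsadm command; for how to use it, see the article "The ipvsadm command explained".

5. Understanding Docker's ingress network also requires understanding the Docker CNM network model; see the article "Introduction to Docker networking (CNM, Libnetwork, and drivers)".

6. Understand Docker's DNS; see the article "Docker Service Discovery".

7. You need the nsenter command to enter a network namespace; see the article "Introduction to the nsenter command".

8. You need tcpdump to capture and analyze packets; see the article "tcpdump capture commands explained".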

PS:

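1. Docker ingress load balancing works when the service endpoint mode is the default, vip. For load balancing in Docker Swarm, see the articles "Service discovery and LB in docker swarm mode explained" and "LB and service discovery in Docker swarm explained" (the latter differs in places from current Docker versions, but is good overall).

2. Docker ingress load balancing only balances the requests that arrive at a single node; it does not balance across the nodes themselves. For load balancing across the cluster nodes, the Docker documentation recommends HAProxy, and for high availability you also need keepalived. For HAProxy + keepalived, see the article "Deploying the haproxy and keepalived services". HAProxy + keepalived can also be run in Docker; see the article "High-availability load-balancing cluster with keepalived + HAProxy on Docker".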

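II. The ingress network

1. What

The Docker Swarm ingress network (which is essentially still an overlay network) is also called the Ingress Routing Mesh.

Its main purpose is to publish a service's port externally so that the service can be reached from outside networks.

The ingress routing mesh is the most complex part of Docker Swarm networking and involves several things:

1. iptables Destination NAT for traffic forwarding

2. Linux bridge, network namespace

3. IPVS for load balancing

4. Container-to-container communication (overlay) and port forwarding for inbound traffic

2. Create

There are two main ways to create the ingress network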

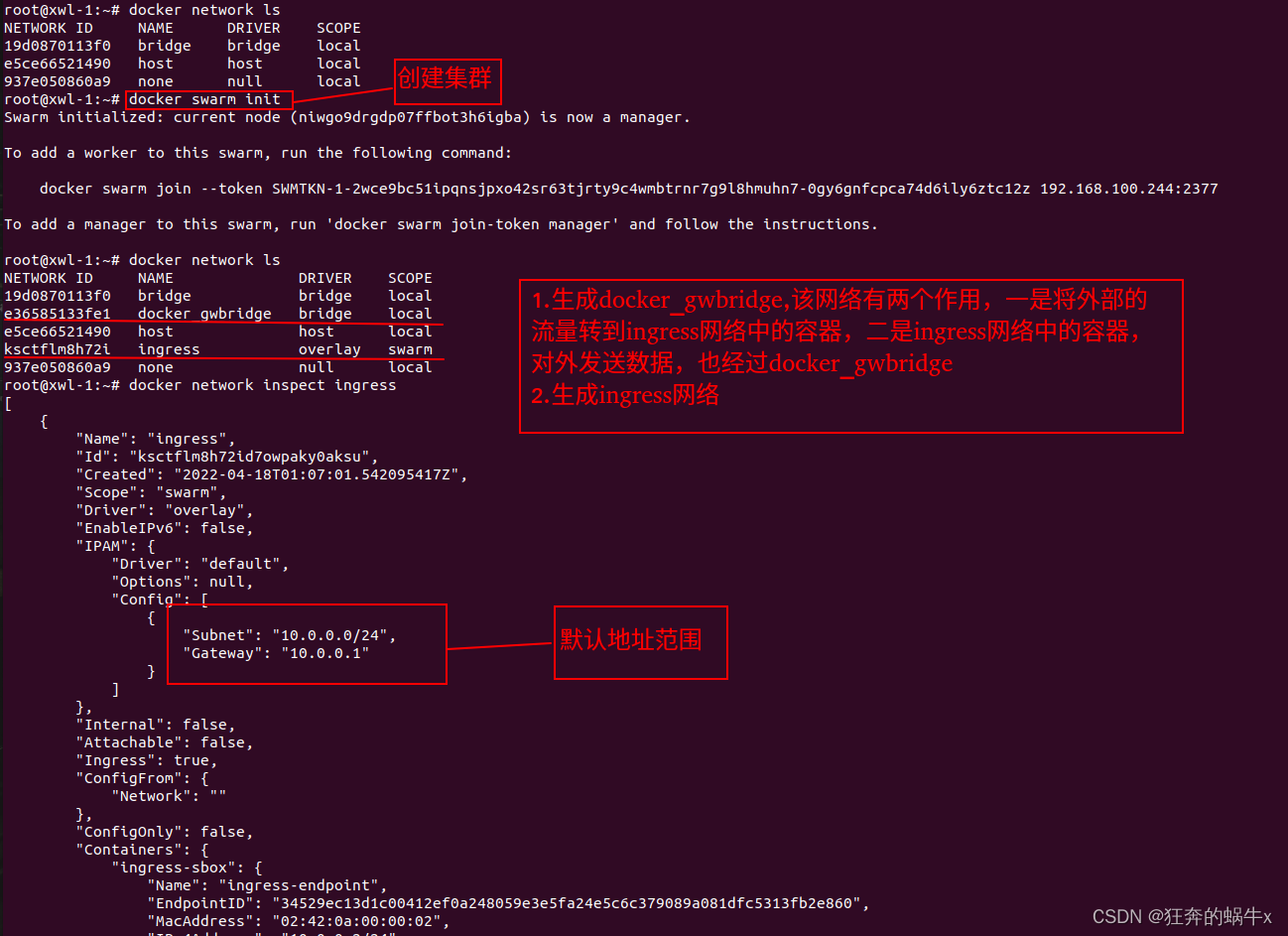

1.使用命令创建集群或者加入的时候,会自动生成ingress网络和docker_bridge网络,默认方式

在主节点

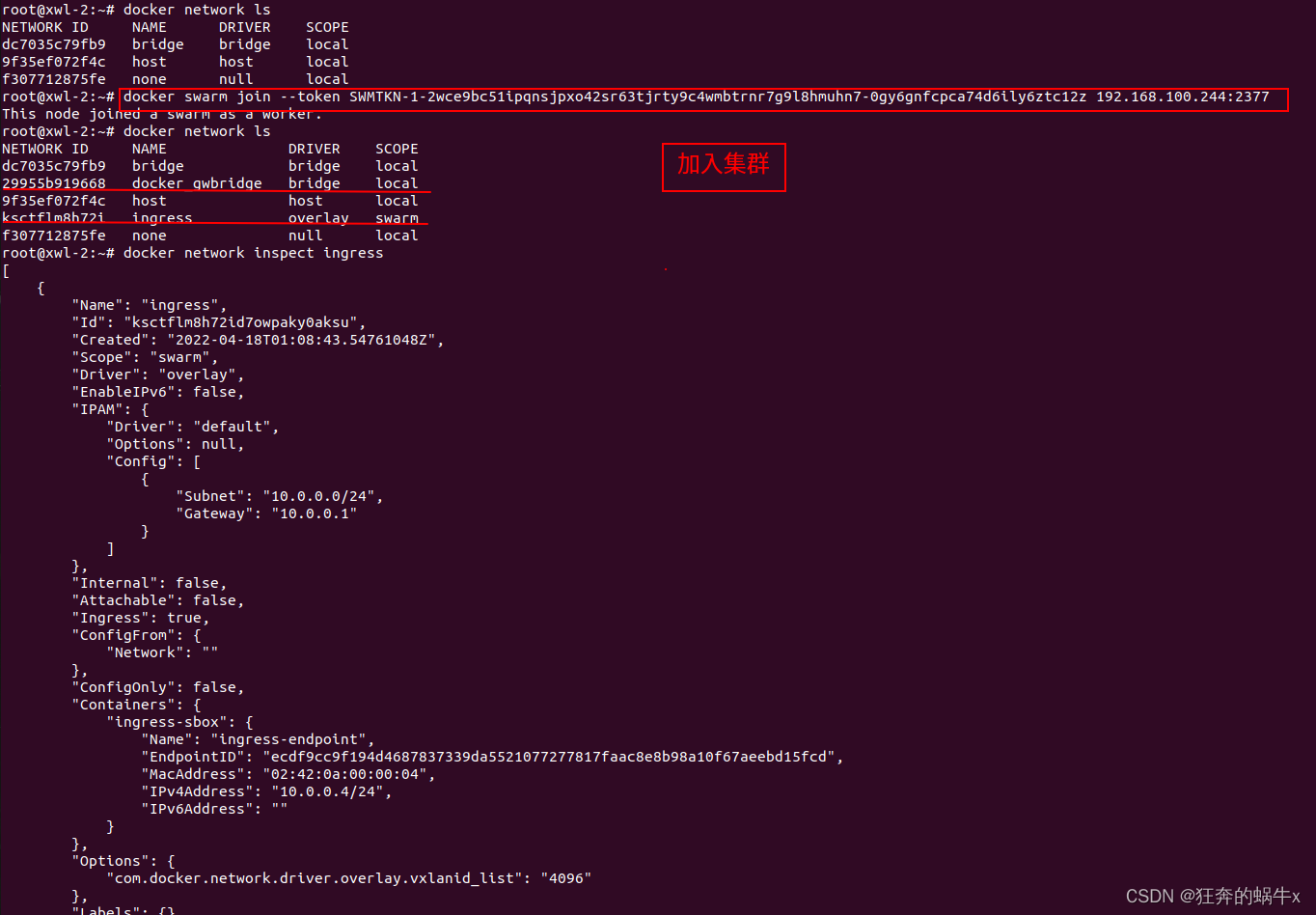

在从节点上

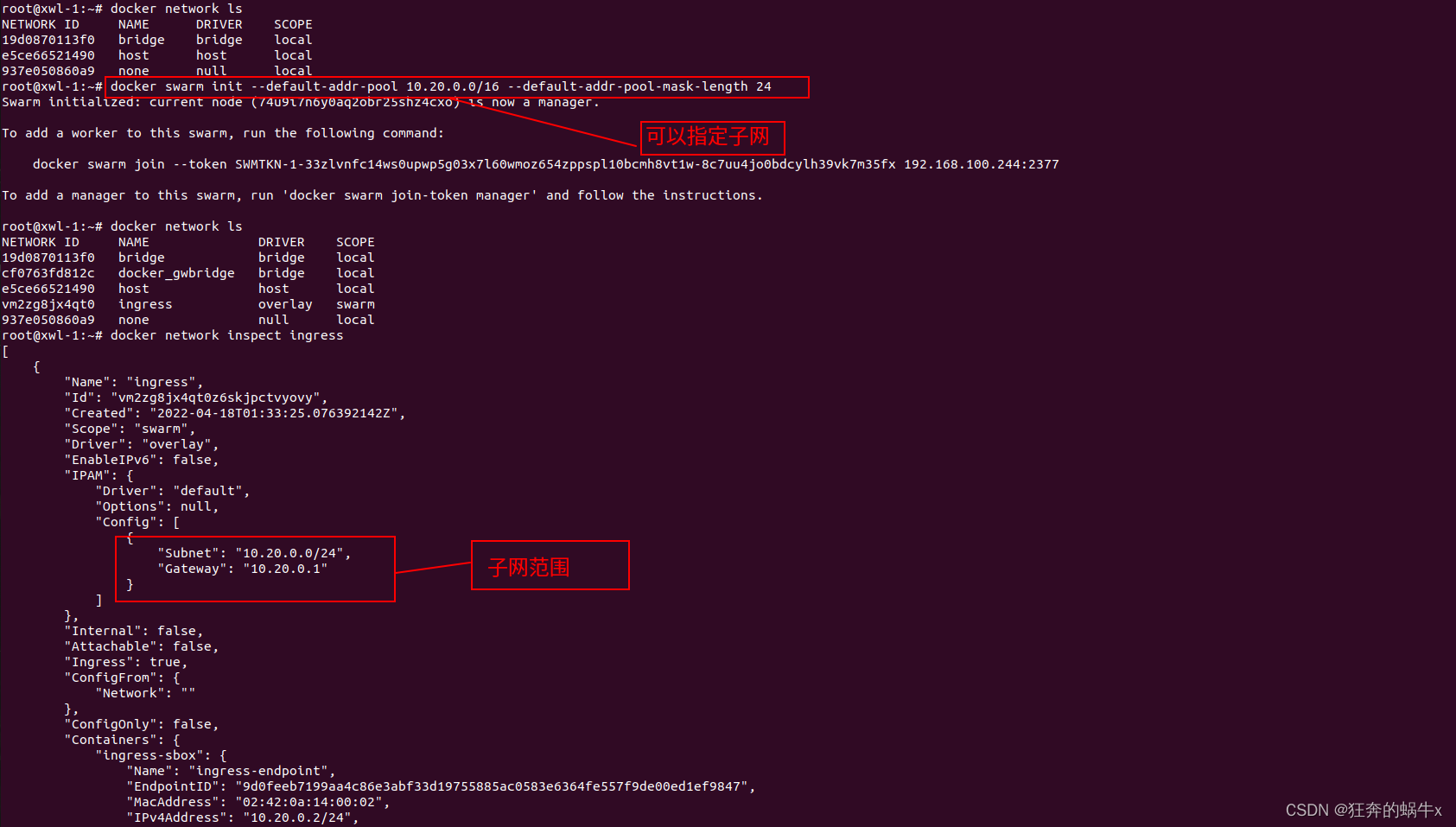

2.在创建集群的时候,可以定义ingress的子网

在主节点上(从节点的ingress始终和主节点的保持一致,其实他们就是同一个)

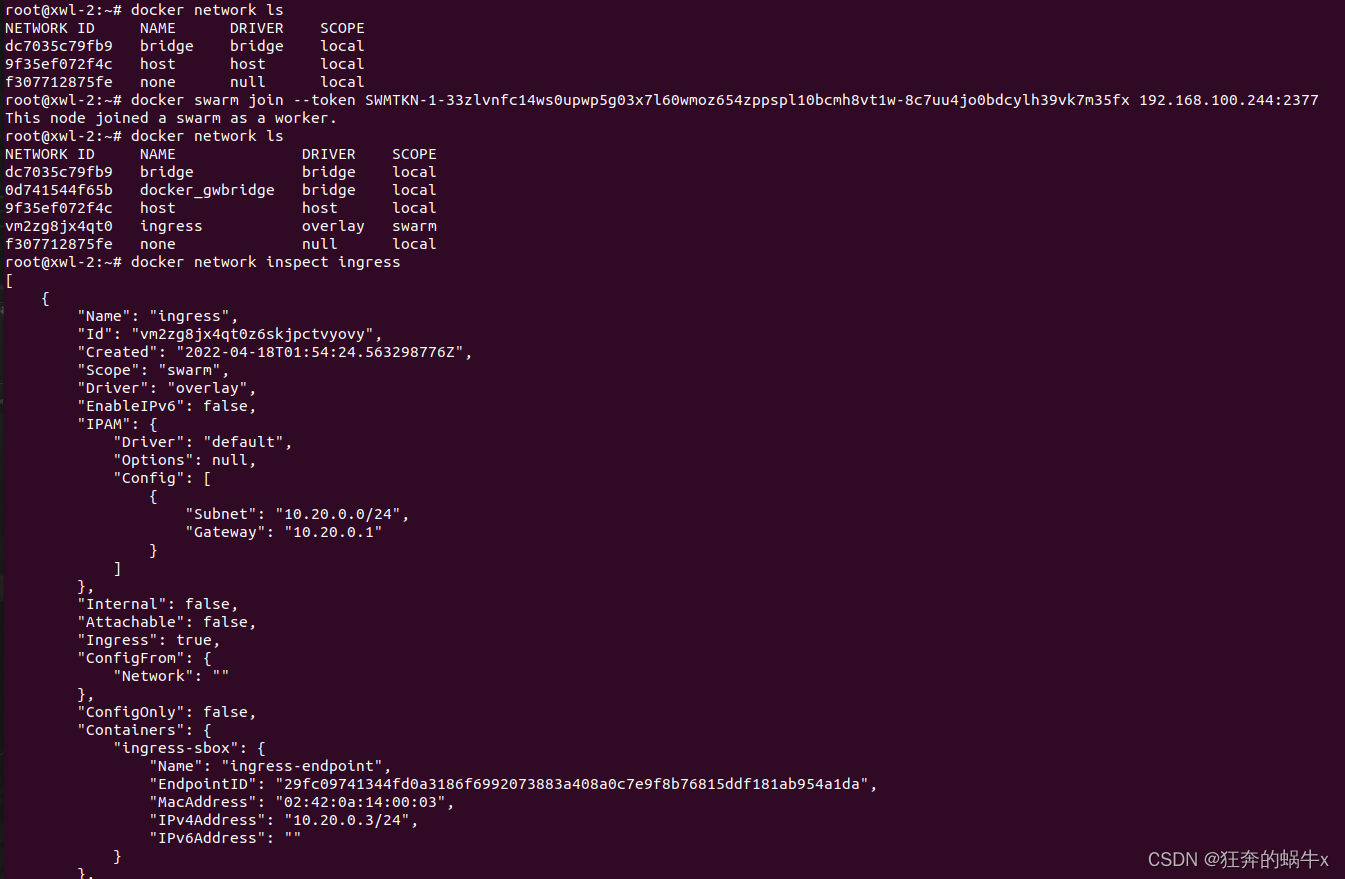

从节点

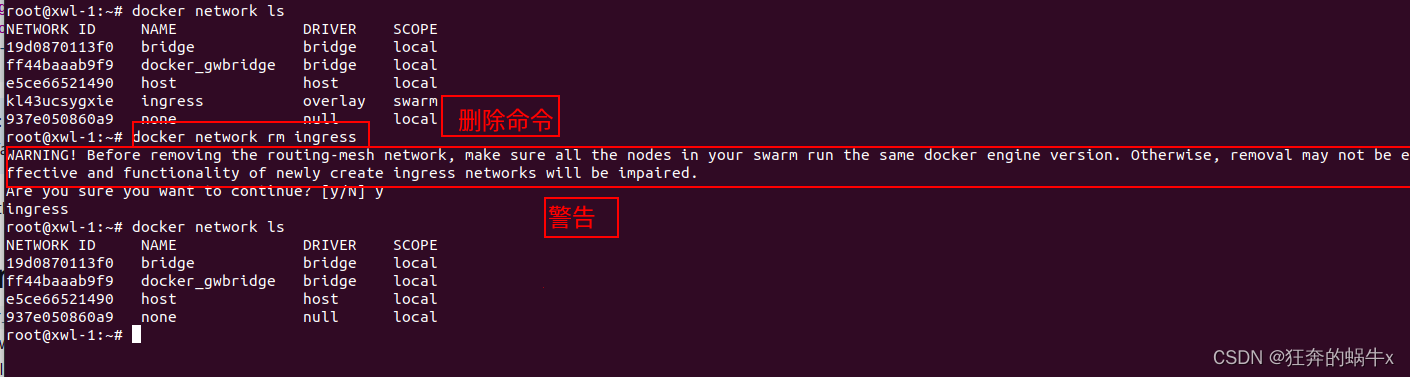

3.删

一般情况下,不能删除ingress网络,如果真要删除,可以使用如下命令

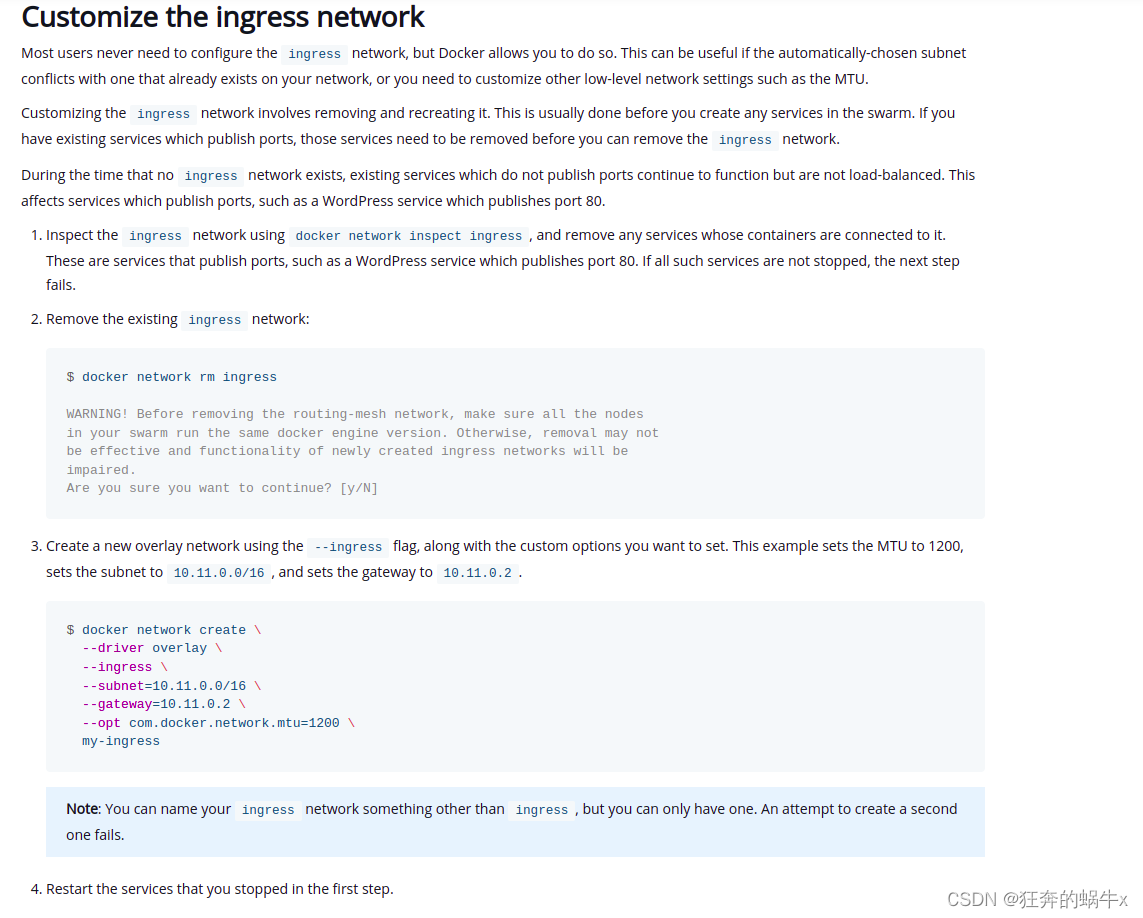

4.改

关于如何修改docker的ingress网络,docker的官网的步骤很详细,可以参考一下。该文章具体地址,Manage swarm service networks

如下的截图,就是摘抄自上述链接,亲测可行

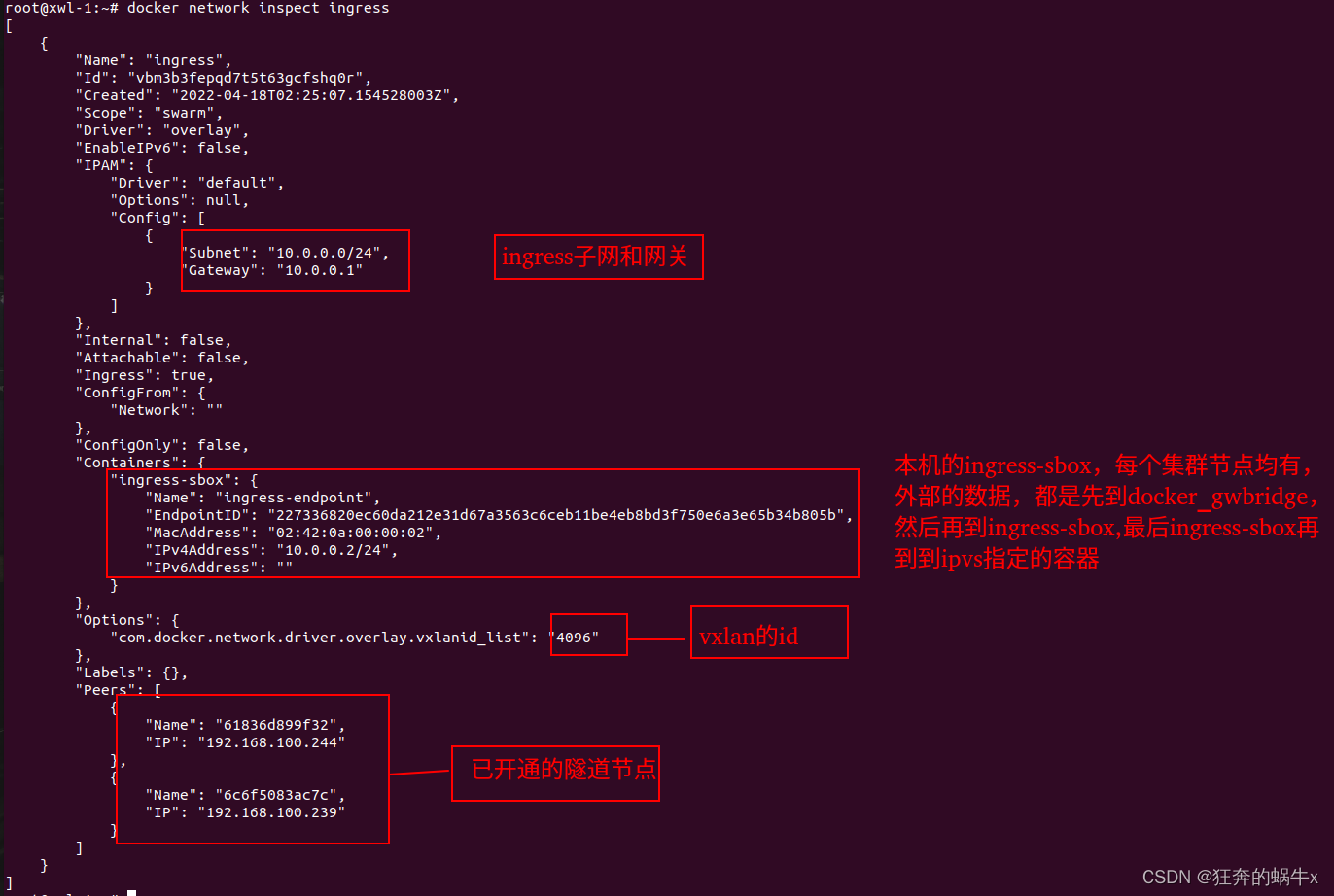

5.查

查询ingress的网络,可以直接使用命令docker inspect

三.ingress实验

1.说明

该实验主要说明ingress网络是如何使用的,实验主要是参考官网,Use swarm mode routing mesh文章做的实验,我在实验步骤,会详细解释负载均衡的原理。routing mesh说的就是ingress,同一个东西。

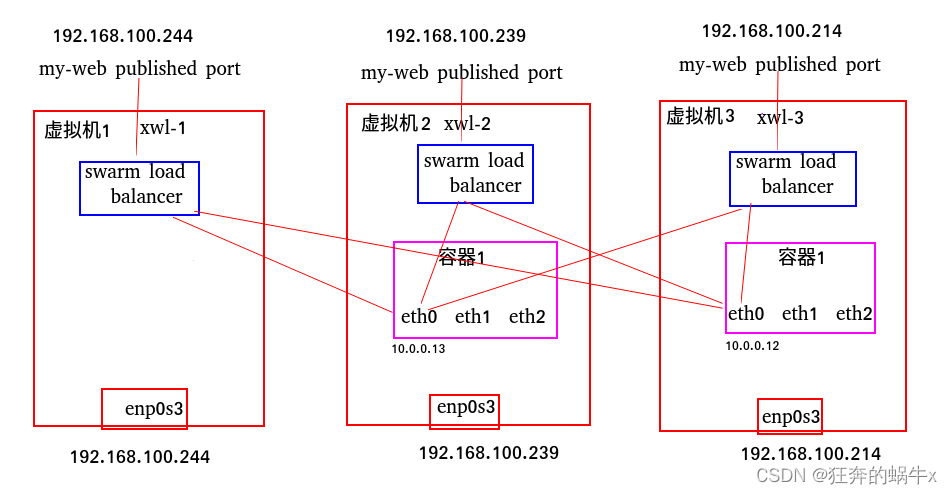

2. Overall topology

3. Experiment steps

1. Prerequisites

| Machine | OS | IP | Docker version |
|---|---|---|---|
| xwl-1 | ubuntu-server 20.04 | 192.168.100.244 | 20.10.7 |
| xwl-2 | ubuntu-server 20.04 | 192.168.100.239 | 20.10.7 |
| xwl-3 | ubuntu-server 20.04 | 192.168.100.214 | 20.10.7 |

2. Steps

1. Create the swarm on the manager node and join both worker nodes to it

On the manager node xwl-1:

root@xwl-1:~# docker swarm init

Swarm initialized: current node (yik2tfaq9zzhw87v72vq9k1d3) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-4qhprzxics5pdoc6w1fncsoqpgxkoom7056xb4j7k4y50arud1-250zwrdzspkzq4bayp41l6c36 192.168.100.244:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

On the worker node xwl-2

root@xwl-2:~# docker swarm join --token SWMTKN-1-4qhprzxics5pdoc6w1fncsoqpgxkoom7056xb4j7k4y50arud1-250zwrdzspkzq4bayp41l6c36 192.168.100.244:2377

This node joined a swarm as a worker.

root@xwl-2:~#

On the worker node xwl-3

root@xwl-3:~# docker swarm join --token SWMTKN-1-4qhprzxics5pdoc6w1fncsoqpgxkoom7056xb4j7k4y50arud1-250zwrdzspkzq4bayp41l6c36 192.168.100.244:2377

This node joined a swarm as a worker.

root@xwl-3:~#

On the manager node xwl-1, list the nodes

root@xwl-1:~# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

yik2tfaq9zzhw87v72vq9k1d3 * xwl-1 Ready Active Leader 20.10.7

ptd9v78k67qllu9m5st3k9fur xwl-2 Ready Active 20.10.7

oiq4zxuv7svsoow2ryz3epm87 xwl-3 Ready Active 20.10.7

root@xwl-1:~#

2. Create an overlay network named my-network

root@xwl-1:~# docker network create --driver overlay my-network

zdrudytj0iwvo7fce1nawpzxy

root@xwl-1:~# docker network inspect my-network

[

{

"Name": "my-network",

"Id": "zdrudytj0iwvo7fce1nawpzxy",

"Created": "2022-04-18T03:28:10.152028784Z",

"Scope": "swarm",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "10.0.1.0/24",

"Gateway": "10.0.1.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": null,

"Options": {

"com.docker.network.driver.overlay.vxlanid_list": "4097"

},

"Labels": null

}

]

root@xwl-1:~#
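As a small illustration of reading this output programmatically, the sketch below parses a trimmed copy of the JSON above with Python's standard library and extracts the subnet, gateway, and VXLAN ID. The constant `INSPECT_OUTPUT` and the helper `summarize_network` are names made up for this example and are not part of Docker; in practice the JSON would come from running `docker network inspect my-network`.

```python
import ipaddress
import json

# Trimmed copy of the inspect output shown above (only the fields used here).
INSPECT_OUTPUT = """
[
  {
    "Name": "my-network",
    "Scope": "swarm",
    "Driver": "overlay",
    "IPAM": {
      "Driver": "default",
      "Options": null,
      "Config": [{"Subnet": "10.0.1.0/24", "Gateway": "10.0.1.1"}]
    },
    "Ingress": false,
    "Options": {"com.docker.network.driver.overlay.vxlanid_list": "4097"}
  }
]
"""


def summarize_network(raw):
    """Hypothetical helper: pull the key fields out of `docker network inspect` JSON."""
    net = json.loads(raw)[0]          # inspect returns a list with one object per network
    ipam = net["IPAM"]["Config"][0]   # first (and here only) IPAM config entry
    return {
        "name": net["Name"],
        "driver": net["Driver"],
        "ingress": net["Ingress"],
        "subnet": ipaddress.ip_network(ipam["Subnet"]),
        "gateway": ipaddress.ip_address(ipam["Gateway"]),
        "vxlan_id": int(net["Options"]["com.docker.network.driver.overlay.vxlanid_list"]),
    }


summary = summarize_network(INSPECT_OUTPUT)
print(summary["name"], summary["driver"], summary["subnet"], summary["vxlan_id"])
# Sanity check: the gateway address should lie inside the subnet.
print(summary["gateway"] in summary["subnet"])
```

The same helper could presumably be pointed at the inspect output of the ingress network itself, where the `Ingress` field is what distinguishes it from an ordinary overlay network such as my-network.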

3. Create the service and check which nodes it runs on

root@xwl-1:~# docker service create --name my-web -p 8080:8000 --replicas 2 --network my-network jwilder/whoami

pu90mzyutp09ur7xo1mz0thck

overall progress: 2 out of 2 tasks

1/2: running [==================================================>]

2/2: running [==================================================>]

verify: Service converged

root@xwl-1:~# docker service ps my-web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

y1ajwgzckyvl my-web.1 jwilder/whoami:latest xwl-3 Running Running 20 seconds ago

oka866geessz my-web.2 jwilder/whoami:latest xwl-2 Running Running 10 seconds ago

root@xwl-1:~#

4. Access port 8080 on xwl-2 from an external machine

Look up the container IDs on the two worker nodes

On the worker node xwl-2

root@xwl-2:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7b55ca3a71ea jwilder/whoami:latest "/app/http" 5 minutes ago Up 5 minutes 8000/tcp my-web.2.oka866geesszx7p8s3udknjth

root@xwl-2:~#

On the worker node xwl-3

root@xwl-3:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

15fd7585fa70 jwilder/whoami:latest "/app/http" 5 minutes ago Up 5 minutes 8000/tcp my-web.1.y1ajwgzckyvlppprksu929ste

root@xwl-3:~#

Accessing port 8080 on xwl-2 from an external machine shows that the requests are scheduled round-robin across the two containers

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.239:8080

I'm 15fd7585fa70

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.239:8080

I'm 7b55ca3a71ea

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.239:8080

I'm 15fd7585fa70

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.239:8080

I'm 7b55ca3a71ea

(base) root@test-SATELLITE-PRO-C40-H:~#

4. How it works

1. Check the IP addresses on node xwl-2, paying particular attention to the address of docker_gwbridge

root@xwl-2:~# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:78ff:fe9e:677f prefixlen 64 scopeid 0x20<link>

ether 02:42:78:9e:67:7f txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 5 bytes 526 (526.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker_gwbridge: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.23.0.1 netmask 255.255.0.0 broadcast 172.23.255.255

inet6 fe80::42:55ff:fe49:c86b prefixlen 64 scopeid 0x20<link>

ether 02:42:55:49:c8:6b txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 12 bytes 1240 (1.2 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

enp0s3: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.100.239 netmask 255.255.255.0 broadcast 192.168.100.255

inet6 fe80::a00:27ff:feb6:346 prefixlen 64 scopeid 0x20<link>

ether 08:00:27:b6:03:46 txqueuelen 1000 (Ethernet)

RX packets 2837215 bytes 2142871201 (2.1 GB)

RX errors 0 dropped 14 overruns 0 frame 0

TX packets 902543 bytes 110036132 (110.0 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

2. Check the iptables nat table on node xwl-2

root@xwl-2:~# iptables -t nat -vnL

Chain PREROUTING (policy ACCEPT 555 packets, 54394 bytes)

pkts bytes target prot opt in out source destination

29051 2210K DOCKER-INGRESS all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

29095 2214K DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 555 packets, 54394 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 124 packets, 7719 bytes)

pkts bytes target prot opt in out source destination

181 13491 DOCKER-INGRESS all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

0 0 DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT 124 packets, 7719 bytes)

pkts bytes target prot opt in out source destination

0 0 MASQUERADE all -- * !docker_gwbridge 172.23.0.0/16 0.0.0.0/0

5 200 MASQUERADE all -- * docker_gwbridge 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match src-type LOCAL

0 0 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

Chain DOCKER (2 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- docker_gwbridge * 0.0.0.0/0 0.0.0.0/0

0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

Chain DOCKER-INGRESS (2 references)

pkts bytes target prot opt in out source destination

0 0 DNAT tcp -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:8080 to:172.23.0.2:8080

29231 2224K RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

root@xwl-2:~#

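From this nat table we can tell that when external traffic hits port 8080 on xwl-2, it is sent on to 172.23.0.2:8080: the packet is DNATed, which changes its destination IP

PREROUTING -> DOCKER-INGRESS (DNAT) -> FORWARD (the new destination 172.23.0.2 is not a local address of the host, so the packet is forwarded over docker_gwbridge rather than delivered to INPUT)

3. Enter the ingress_sbox namespace and check its IP addresses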

root@xwl-2:~# nsenter --net=/var/run/docker/netns/ingress_sbox sh

# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.0.0.3 netmask 255.255.255.0 broadcast 10.0.0.255

ether 02:42:0a:00:00:03 txqueuelen 0 (Ethernet)

RX packets 27 bytes 2400 (2.4 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 32 bytes 2524 (2.5 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.23.0.2 netmask 255.255.0.0 broadcast 172.23.255.255

ether 02:42:ac:17:00:02 txqueuelen 0 (Ethernet)

RX packets 48 bytes 3848 (3.8 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 21 bytes 2072 (2.0 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

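The ingress_sbox namespace holds the address 172.23.0.2, so the traffic flows to that interface

3. Install ipvsadm on the virtual machine xwl-2; it will be used shortly to inspect the IPVS rules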

root@xwl-2:~# apt install ipvsadm

4. On the manager node xwl-1, look up the virtual IP of the service my-web

root@xwl-1:~# docker service inspect my-web

[

{

............omitted..............

"Endpoint": {

"Spec": {

"Mode": "vip",

"Ports": [

{

"Protocol": "tcp",

"TargetPort": 8000,

"PublishedPort": 8080,

"PublishMode": "ingress"

}

]

},

"Ports": [

{

"Protocol": "tcp",

"TargetPort": 8000,

"PublishedPort": 8080,

"PublishMode": "ingress"

}

],

"VirtualIPs": [

{

"NetworkID": "ehpbawhmww0tulyq6txip8b9o",

"Addr": "10.0.0.11/24"

#this is the VIP address in question

},

{

"NetworkID": "zdrudytj0iwvo7fce1nawpzxy",

"Addr": "10.0.1.12/24"

#this is the VIP used inside the cluster

}

]

}

}

]

root@xwl-1:~#

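After a service starts, every node updates its VIP information, associating the new service's port with the service. VIP mode is load balancing based on a virtual IP: a request to the virtual IP is resolved to a real IP, through which the service is reached.

5. Look at the mangle and nat tables of iptables inside ingress_sbox. My guess was that packets sent to 172.23.0.2:8080 have their destination changed to the VIP somewhere, with the port unchanged. I spent a long time looking and have not found where that would happen, so for now I will leave it at that understanding. If anyone knows, I would be very grateful to hear it. One possible explanation, consistent with the fwmark-based IPVS service shown in step 6: the packet may never be rewritten to the VIP at all, because IPVS selects the virtual service by the 0x2d1 mark rather than by destination address.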

root@xwl-2:~# nsenter --net=/var/run/docker/netns/ingress_sbox sh

# iptables -t mangle -vnL

#PREROUTING chain: every packet arriving in ingress_sbox for port 8080 is marked 0x2d1

Chain PREROUTING (policy ACCEPT 50 packets, 3770 bytes)

pkts bytes target prot opt in out source destination

30 2020 MARK tcp -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:8080 MARK set 0x2d1

#INPUT chain: every packet whose destination is the VIP 10.0.0.11 is marked 0x2d1

Chain INPUT (policy ACCEPT 30 packets, 2020 bytes)

pkts bytes target prot opt in out source destination

0 0 MARK all -- * * 0.0.0.0/0 10.0.0.11 MARK set 0x2d1

Chain FORWARD (policy ACCEPT 20 packets, 1750 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 30 packets, 2020 bytes)

pkts bytes target prot opt in out source destination

Chain POSTROUTING (policy ACCEPT 50 packets, 3770 bytes)

pkts bytes target prot opt in out source destination

#

#

#

# iptables -t nat -vnL

Chain PREROUTING (policy ACCEPT 5 packets, 300 bytes)

pkts bytes target prot opt in out source destination

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER_OUTPUT all -- * * 0.0.0.0/0 127.0.0.11

Chain POSTROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER_POSTROUTING all -- * * 0.0.0.0/0 127.0.0.11

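#SNAT: packets handled by IPVS and destined for 10.0.0.0/24 get their source address rewritten to 10.0.0.3, the ingress_sbox address; IPVS does the load balancing and picks one of the containers behind the VIP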

5 300 SNAT all -- * * 0.0.0.0/0 10.0.0.0/24 ipvs to:10.0.0.3

Chain DOCKER_OUTPUT (1 references)

pkts bytes target prot opt in out source destination

0 0 DNAT tcp -- * * 0.0.0.0/0 127.0.0.11 tcp dpt:53 to:127.0.0.11:37525

0 0 DNAT udp -- * * 0.0.0.0/0 127.0.0.11 udp dpt:53 to:127.0.0.11:60751

Chain DOCKER_POSTROUTING (1 references)

pkts bytes target prot opt in out source destination

0 0 SNAT tcp -- * * 127.0.0.11 0.0.0.0/0 tcp spt:37525 to::53

0 0 SNAT udp -- * * 127.0.0.11 0.0.0.0/0 udp spt:60751 to::53

#

6. Inspect IPVS. The load balancing uses LVS NAT mode, and the real servers are visible as well

root@xwl-2:~# ipvsadm -S

-A -f 721 -s rr

-a -f 721 -r 10.0.0.12:0 -m -w 1

-a -f 721 -r 10.0.0.13:0 -m -w 1

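-A --add-service: add a new virtual server record to the kernel's virtual server table, i.e. add a new virtual server.

-s --scheduler scheduler: the scheduling algorithm to use. The options are rr|wrr|lc|wlc|lblc|lblcr|dh|sh|sed|nq; the default is wlc.

The scheduler used here, rr, is round robin.

-f --fwmark-service fwmark: the service is identified by a firewall mark set by iptables.

The mark value is 721, which is the mark set in the mangle table: the mangle table shows it in hexadecimal (0x2d1), which is 721 in decimal.

-a --add-server: add a new real server record to a record in the kernel's virtual server table, i.e. add a new real server to a virtual server.

-r --real-server server-address: the real server [Real-Server:port]

-m --masquerading: set the LVS working mode to NAT

For the details of LVS NAT mode, see the LVS official site

-w --weight weight: the weight of the real server

Both servers here have weight 1, so each is equally likely to be chosen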
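To make the relationship between the mark, the scheduler, and the real servers concrete, here is a minimal Python sketch that parses the `ipvsadm -S` output shown above, checks that 0x2d1 and 721 are the same number, and imitates round-robin selection. The function `parse_ipvs_rules` is a made-up helper for this example, and `itertools.cycle` is only a toy stand-in for the kernel's rr scheduler, so the exact starting server may differ from what IPVS really picks.

```python
import itertools

# Output of `ipvsadm -S`, copied from the step above.
IPVSADM_SAVE = """\
-A -f 721 -s rr
-a -f 721 -r 10.0.0.12:0 -m -w 1
-a -f 721 -r 10.0.0.13:0 -m -w 1
"""


def parse_ipvs_rules(text):
    """Hypothetical helper: return {fwmark: {"scheduler": str, "servers": [(addr, weight)]}}."""
    services = {}
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        # After the first token, options come as flag/value pairs, except -m (no value).
        opts = {}
        i = 1
        while i < len(tokens):
            if tokens[i] == "-m":
                opts["-m"] = True
                i += 1
            else:
                opts[tokens[i]] = tokens[i + 1]
                i += 2
        mark = int(opts["-f"])
        if tokens[0] == "-A":      # virtual service definition
            services[mark] = {"scheduler": opts["-s"], "servers": []}
        elif tokens[0] == "-a":    # real server attached to that service
            services[mark]["servers"].append((opts["-r"], int(opts["-w"])))
    return services


services = parse_ipvs_rules(IPVSADM_SAVE)

mark = 0x2D1  # value shown by `iptables -t mangle -vnL`
print(mark, hex(721))

# Toy round robin: with equal weights the requests simply alternate between servers.
backends = itertools.cycle(addr for addr, _weight in services[mark]["servers"])
picks = [next(backends) for _ in range(4)]
print(picks)
```

With equal weights the four picks alternate between the two real servers, which matches the alternating container IDs returned by the curl requests earlier.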

7. Capture packets with tcpdump and observe the packet flow

From the machine 192.168.100.146, access xwl-2 (192.168.100.239:8080)

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.239:8080

I'm 7b55ca3a71ea

On xwl-2, listen on the eth1 interface (172.23.0.2) of ingress_sbox

We can see that iptables has indeed rewritten the destination address from 192.168.100.239 to 172.23.0.2, with the port unchanged

root@xwl-2:~# nsenter --net=/var/run/docker/netns/ingress_sbox

root@xwl-2:~# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.0.0.3 netmask 255.255.255.0 broadcast 10.0.0.255

ether 02:42:0a:00:00:03 txqueuelen 0 (Ethernet)

RX packets 55 bytes 4798 (4.7 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 69 bytes 5258 (5.2 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.23.0.2 netmask 255.255.0.0 broadcast 172.23.255.255

ether 02:42:ac:17:00:02 txqueuelen 0 (Ethernet)

RX packets 88 bytes 6792 (6.7 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 48 bytes 4396 (4.3 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 124 bytes 11812 (11.8 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 124 bytes 11812 (11.8 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

root@xwl-2:~# tcpdump -i eth1

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth1, link-type EN10MB (Ethernet), capture size 262144 bytes

07:14:38.969238 IP 192.168.100.146.36560 > 172.23.0.2.http-alt: Flags [S], seq 2834092680, win 64240, options [mss 1460,sackOK,TS val 4279367965 ecr 0,nop,wscale 7], length 0

07:14:38.969375 IP 172.23.0.2.http-alt > 192.168.100.146.36560: Flags [S.], seq 1099711283, ack 2834092681, win 64308, options [mss 1410,sackOK,TS val 1107544226 ecr 4279367965,nop,wscale 7], length 0

07:14:38.969721 IP 192.168.100.146.36560 > 172.23.0.2.http-alt: Flags [.], ack 1, win 502, options [nop,nop,TS val 4279367965 ecr 1107544226], length 0

07:14:38.969723 IP 192.168.100.146.36560 > 172.23.0.2.http-alt: Flags [P.], seq 1:85, ack 1, win 502, options [nop,nop,TS val 4279367965 ecr 1107544226], length 84: HTTP: GET / HTTP/1.1

07:14:38.969832 IP 172.23.0.2.http-alt > 192.168.100.146.36560: Flags [.], ack 85, win 502, options [nop,nop,TS val 1107544226 ecr 4279367965], length 0

07:14:38.970701 IP 172.23.0.2.http-alt > 192.168.100.146.36560: Flags [P.], seq 1:135, ack 85, win 502, options [nop,nop,TS val 1107544227 ecr 4279367965], length 134: HTTP: HTTP/1.1 200 OK

07:14:38.970879 IP 192.168.100.146.36560 > 172.23.0.2.http-alt: Flags [.], ack 135, win 501, options [nop,nop,TS val 4279367966 ecr 1107544227], length 0

07:14:38.971039 IP 192.168.100.146.36560 > 172.23.0.2.http-alt: Flags [F.], seq 85, ack 135, win 501, options [nop,nop,TS val 4279367967 ecr 1107544227], length 0

07:14:38.971121 IP 172.23.0.2.http-alt > 192.168.100.146.36560: Flags [F.], seq 135, ack 86, win 502, options [nop,nop,TS val 1107544228 ecr 4279367967], length 0

07:14:38.971283 IP 192.168.100.146.36560 > 172.23.0.2.http-alt: Flags [.], ack 136, win 501, options [nop,nop,TS val 4279367967 ecr 1107544228], length 0

07:14:43.984258 ARP, Request who-has 172.23.0.1 tell 172.23.0.2, length 28

07:14:43.984339 ARP, Request who-has 172.23.0.2 tell 172.23.0.1, length 28

07:14:43.984762 ARP, Reply 172.23.0.2 is-at 02:42:ac:17:00:02 (oui Unknown), length 28

07:14:43.984732 ARP, Reply 172.23.0.1 is-at 02:42:55:49:c8:6b (oui Unknown), length 28

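8. On xwl-2, listen on the eth0 interface (10.0.0.3) of ingress_sbox

Packets pass from eth1 to eth0 of ingress_sbox and, after IPVS, leave through eth0 for the local container 7b55ca3a71ea (10.0.0.13). If the request is run again, it goes to the container 15fd7585fa70 (10.0.0.12) on the other machine, xwl-3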

root@xwl-2:~# nsenter --net=/var/run/docker/netns/ingress_sbox

root@xwl-2:~# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.0.0.3 netmask 255.255.255.0 broadcast 10.0.0.255

ether 02:42:0a:00:00:03 txqueuelen 0 (Ethernet)

RX packets 55 bytes 4798 (4.7 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 69 bytes 5258 (5.2 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.23.0.2 netmask 255.255.0.0 broadcast 172.23.255.255

ether 02:42:ac:17:00:02 txqueuelen 0 (Ethernet)

RX packets 88 bytes 6792 (6.7 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 48 bytes 4396 (4.3 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 124 bytes 11812 (11.8 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 124 bytes 11812 (11.8 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

root@xwl-2:~# tcpdump -i eth0

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

07:14:38.969299 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [S], seq 2834092680, win 64240, options [mss 1460,sackOK,TS val 4279367965 ecr 0,nop,wscale 7], length 0

07:14:38.969367 IP 10.0.0.13.http-alt > 10.0.0.3.36560: Flags [S.], seq 1099711283, ack 2834092681, win 64308, options [mss 1410,sackOK,TS val 1107544226 ecr 4279367965,nop,wscale 7], length 0

07:14:38.969747 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [.], ack 1, win 502, options [nop,nop,TS val 4279367965 ecr 1107544226], length 0

07:14:38.969755 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [P.], seq 1:85, ack 1, win 502, options [nop,nop,TS val 4279367965 ecr 1107544226], length 84: HTTP: GET / HTTP/1.1

07:14:38.969823 IP 10.0.0.13.http-alt > 10.0.0.3.36560: Flags [.], ack 85, win 502, options [nop,nop,TS val 1107544226 ecr 4279367965], length 0

07:14:38.970690 IP 10.0.0.13.http-alt > 10.0.0.3.36560: Flags [P.], seq 1:135, ack 85, win 502, options [nop,nop,TS val 1107544227 ecr 4279367965], length 134: HTTP: HTTP/1.1 200 OK

07:14:38.970963 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [.], ack 135, win 501, options [nop,nop,TS val 4279367966 ecr 1107544227], length 0

07:14:38.971055 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [F.], seq 85, ack 135, win 501, options [nop,nop,TS val 4279367967 ecr 1107544227], length 0

07:14:38.971113 IP 10.0.0.13.http-alt > 10.0.0.3.36560: Flags [F.], seq 135, ack 86, win 502, options [nop,nop,TS val 1107544228 ecr 4279367967], length 0

07:14:38.971309 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [.], ack 136, win 501, options [nop,nop,TS val 4279367967 ecr 1107544228], length 0

07:14:43.984313 ARP, Request who-has 10.0.0.13 tell 10.0.0.3, length 28

07:14:43.984745 ARP, Request who-has 10.0.0.3 tell 10.0.0.13, length 28

07:14:43.984840 ARP, Reply 10.0.0.3 is-at 02:42:0a:00:00:03 (oui Unknown), length 28

07:14:43.984871 ARP, Reply 10.0.0.13 is-at 02:42:0a:00:00:0d (oui Unknown), length 28

Local container

root@xwl-2:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7b55ca3a71ea jwilder/whoami:latest "/app/http" 4 hours ago Up 4 hours 8000/tcp my-web.2.oka866geesszx7p8s3udknjth

root@xwl-2:~#

9. On xwl-2, listen on the eth0 interface of container 7b55ca3a71ea (10.0.0.13); the packets have reached the container

root@xwl-2:~# nsenter --net=/var/run/docker/netns/f4f915f5b6aa

root@xwl-2:~# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.0.0.13 netmask 255.255.255.0 broadcast 10.0.0.255

ether 02:42:0a:00:00:0d txqueuelen 0 (Ethernet)

RX packets 83 bytes 6382 (6.3 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 56 bytes 5208 (5.2 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.0.1.14 netmask 255.255.255.0 broadcast 10.0.1.255

ether 02:42:0a:00:01:0e txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.23.0.3 netmask 255.255.0.0 broadcast 172.23.255.255

ether 02:42:ac:17:00:03 txqueuelen 0 (Ethernet)

RX packets 21 bytes 1546 (1.5 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1000 (Local Loopback)

RX packets 32 bytes 2960 (2.9 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 32 bytes 2960 (2.9 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

root@xwl-2:~# tcpdump -i eth0

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

07:14:38.969317 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [S], seq 2834092680, win 64240, options [mss 1460,sackOK,TS val 4279367965 ecr 0,nop,wscale 7], length 0

07:14:38.969359 IP 10.0.0.13.http-alt > 10.0.0.3.36560: Flags [S.], seq 1099711283, ack 2834092681, win 64308, options [mss 1410,sackOK,TS val 1107544226 ecr 4279367965,nop,wscale 7], length 0

07:14:38.969760 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [.], ack 1, win 502, options [nop,nop,TS val 4279367965 ecr 1107544226], length 0

07:14:38.969761 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [P.], seq 1:85, ack 1, win 502, options [nop,nop,TS val 4279367965 ecr 1107544226], length 84: HTTP: GET / HTTP/1.1

07:14:38.969816 IP 10.0.0.13.http-alt > 10.0.0.3.36560: Flags [.], ack 85, win 502, options [nop,nop,TS val 1107544226 ecr 4279367965], length 0

07:14:38.970681 IP 10.0.0.13.http-alt > 10.0.0.3.36560: Flags [P.], seq 1:135, ack 85, win 502, options [nop,nop,TS val 1107544227 ecr 4279367965], length 134: HTTP: HTTP/1.1 200 OK

07:14:38.970970 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [.], ack 135, win 501, options [nop,nop,TS val 4279367966 ecr 1107544227], length 0

07:14:38.971060 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [F.], seq 85, ack 135, win 501, options [nop,nop,TS val 4279367967 ecr 1107544227], length 0

07:14:38.971107 IP 10.0.0.13.http-alt > 10.0.0.3.36560: Flags [F.], seq 135, ack 86, win 502, options [nop,nop,TS val 1107544228 ecr 4279367967], length 0

07:14:38.971314 IP 10.0.0.3.36560 > 10.0.0.13.http-alt: Flags [.], ack 136, win 501, options [nop,nop,TS val 4279367967 ecr 1107544228], length 0

07:14:43.984293 ARP, Request who-has 10.0.0.3 tell 10.0.0.13, length 28

07:14:43.984749 ARP, Request who-has 10.0.0.13 tell 10.0.0.3, length 28

07:14:43.984853 ARP, Reply 10.0.0.13 is-at 02:42:0a:00:00:0d (oui Unknown), length 28

07:14:43.984869 ARP, Reply 10.0.0.3 is-at 02:42:0a:00:00:03 (oui Unknown), length 28

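We can see that packets arriving at the container still use port 8080 (http-alt), while the container actually listens on port 8000. How is that handled? iptables redirects the port to 8000

Look at the container's iptables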

root@xwl-2:~# nsenter --net=/var/run/docker/netns/f4f915f5b6aa

root@xwl-2:~# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.0.0.13 netmask 255.255.255.0 broadcast 10.0.0.255

ether 02:42:0a:00:00:0d txqueuelen 0 (Ethernet)

RX packets 91 bytes 6954 (6.9 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 62 bytes 5698 (5.6 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.0.1.14 netmask 255.255.255.0 broadcast 10.0.1.255

ether 02:42:0a:00:01:0e txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.23.0.3 netmask 255.255.0.0 broadcast 172.23.255.255

ether 02:42:ac:17:00:03 txqueuelen 0 (Ethernet)

RX packets 21 bytes 1546 (1.5 KB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

............omitted.................

root@xwl-2:~# iptables -t nat -vnL

Chain PREROUTING (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

13 780 REDIRECT tcp -- * * 0.0.0.0/0 10.0.0.13 tcp dpt:8080 redir ports 8000

Chain INPUT (policy ACCEPT 13 packets, 780 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 20 packets, 1570 bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER_OUTPUT all -- * * 0.0.0.0/0 127.0.0.11

....................omitted..................

root@xwl-2:~#

10.总结

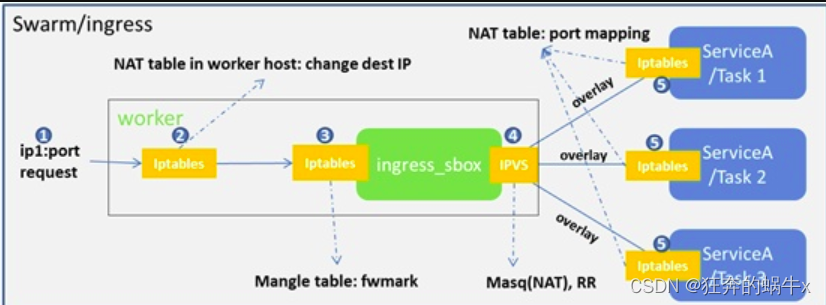

1.客户端请求ip和端口,到达其中任意1个节点,然后节点机器通过iptables修改目标ip地址为172.xx.0.2,进入到ingress_sbox中,然后将指定端口的数据包打标记,然后通过ipvs负载均衡到后端的容器,后端的容器重定向端口号

下图是摘抄这篇文章,Docker swarm中的LB和服务发现详解
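The path summarized above can be sketched as a chain of small address rewrites. The Python model below is only an illustration under simplifying assumptions: the `Packet` class and the four stage functions are invented for this example, the addresses, port numbers, and mark are the ones observed in this experiment, and real iptables and IPVS processing involves connection tracking and more chains than shown here.

```python
from dataclasses import dataclass, replace
from itertools import cycle


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    dport: int
    mark: int = 0


# Values taken from the experiment output above.
SBOX_GW_IP = "172.23.0.2"       # ingress_sbox eth1, attached to docker_gwbridge
SBOX_INGRESS_IP = "10.0.0.3"    # ingress_sbox eth0, attached to the ingress network
PUBLISHED_PORT, TARGET_PORT, FWMARK = 8080, 8000, 0x2D1
REAL_SERVERS = cycle(["10.0.0.12", "10.0.0.13"])  # toy stand-in for the IPVS rr scheduler


def host_dnat(p):
    """Host nat table, DOCKER-INGRESS chain: tcp dpt:8080 -> 172.23.0.2:8080."""
    return replace(p, dst=SBOX_GW_IP) if p.dport == PUBLISHED_PORT else p


def sbox_mark(p):
    """ingress_sbox mangle PREROUTING: mark packets for port 8080 with 0x2d1."""
    return replace(p, mark=FWMARK) if p.dport == PUBLISHED_PORT else p


def sbox_ipvs(p):
    """IPVS (NAT mode) picks a real server by mark; nat POSTROUTING SNATs to 10.0.0.3."""
    if p.mark != FWMARK:
        return p
    return replace(p, src=SBOX_INGRESS_IP, dst=next(REAL_SERVERS))


def container_redirect(p):
    """Container nat PREROUTING: REDIRECT tcp dpt:8080 -> 8000."""
    return replace(p, dport=TARGET_PORT) if p.dport == PUBLISHED_PORT else p


def trace(p):
    """Return the packet as seen before and after each stage."""
    hops = [p]
    for stage in (host_dnat, sbox_mark, sbox_ipvs, container_redirect):
        hops.append(stage(hops[-1]))
    return hops


hops = trace(Packet(src="192.168.100.146", dst="192.168.100.239", dport=8080))
for hop in hops:
    print(hop)
```

Each printed line corresponds to one of the capture points used in the tcpdump steps: the destination changes on the host, the source and destination change again inside ingress_sbox, and only the port changes inside the container.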

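IV. External load balancing (HAProxy + keepalived) + ingress

1. Overview

This experiment builds on the previous one and adds HAProxy + keepalived

HAProxy is used for load balancing. In the previous experiment the containers could be reached through any of the three node IPs and the published port; now HAProxy proxies those three nodes. keepalived provides high availability: if one HAProxy machine goes down, the other takes over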
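The failover idea can be pictured with a very small model. The sketch below is an assumption-laden simplification, not how keepalived is implemented: `VrrpNode` and `elect_master` are invented names, the priorities 100 and 50 and the virtual IP 192.168.100.100 are the values used in the keepalived configuration of this experiment, and VRRP details such as advertisement timers and the `nopreempt` option (which changes who holds the address after a failed node comes back) are ignored.

```python
from dataclasses import dataclass


@dataclass
class VrrpNode:
    name: str
    priority: int
    alive: bool = True


def elect_master(nodes):
    """Simplified rule: the live node with the highest priority holds the virtual IP."""
    live = [n for n in nodes if n.alive]
    return max(live, key=lambda n: n.priority, default=None)


VIP = "192.168.100.100"
nodes = [VrrpNode("haproxy1", 100), VrrpNode("haproxy2", 50)]

master = elect_master(nodes)
print(f"{VIP} is held by {master.name}")

# Simulate haproxy1 going down: the virtual IP moves to the remaining node.
nodes[0].alive = False
master_after_failure = elect_master(nodes)
print(f"{VIP} is now held by {master_after_failure.name}")
```

Clients keep using the same virtual IP throughout; only the machine answering for it changes.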

2. Overall topology

3. Experiment steps

1. Prerequisites

| Machine | OS | IP | Docker version |
|---|---|---|---|
| xwl-1 | ubuntu-server 20.04 | 192.168.100.244 | 20.10.7 |
| xwl-2 | ubuntu-server 20.04 | 192.168.100.239 | 20.10.7 |
| xwl-3 | ubuntu-server 20.04 | 192.168.100.214 | 20.10.7 |
| haproxy1 | ubuntu-server 20.04 | 192.168.100.251 | 20.10.7 |
| haproxy2 | ubuntu-server 20.04 | 192.168.100.41 | 20.10.7 |

2. Steps

1. Install haproxy and keepalived on the machines haproxy1 and haproxy2

root@haproxy1:~# apt-get -y install haproxy keepalived

Reading package lists... Done

Building dependency tree

Reading state information... Done

...........omitted................

root@haproxy2:~# apt-get -y install haproxy keepalived

Reading package lists... Done

Building dependency tree

Reading state information... Done

...........omitted................

2. On haproxy1, edit the keepalived configuration file and enable keepalived at boot

root@haproxy1:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.sample /etc/keepalived/keepalived.conf

root@haproxy1:~# nano /etc/keepalived/keepalived.conf

#After editing, review the file

root@haproxy1:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

interface enp0s3

virtual_router_id 50

nopreempt

priority 100

advert_int 1

virtual_ipaddress {

192.168.100.100 dev enp0s3 label enp0s3:0

}

}

virtual_server 10.10.10.2 1358 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

sorry_server 192.168.200.200 1358

real_server 192.168.200.2 1358 {

weight 1

HTTP_GET {

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}

#Start keepalived and enable it at boot

root@haproxy1:~# systemctl start keepalived

root@haproxy1:~# systemctl enable keepalived

3. Copy the keepalived.conf configuration file from haproxy1 to haproxy2

root@haproxy1:~# scp /etc/keepalived/keepalived.conf [email protected]:/etc/keepalived/keepalived.conf

The authenticity of host '192.168.100.41 (192.168.100.41)' can't be established.

ECDSA key fingerprint is SHA256:8KwMmYtM6BGEuhMIy8q7zK9WyARxjqRa7NDuQba8ZH8.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.100.41' (ECDSA) to the list of known hosts.

[email protected]'s password:

keepalived.conf

4. On haproxy2, change the priority in keepalived.conf, then enable the service at boot there as well

root@haproxy2:/etc/haproxy# nano /etc/keepalived/keepalived.conf

root@haproxy2:/etc/haproxy# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen

}

notification_email_from [email protected]

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_instance VI_1 {

interface enp0s3

virtual_router_id 50

nopreempt

priority 50

advert_int 1

virtual_ipaddress {

192.168.100.100 dev enp0s3 label enp0s3:0

}

}

virtual_server 10.10.10.2 1358 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

sorry_server 192.168.200.200 1358

real_server 192.168.200.2 1358 {

weight 1

HTTP_GET {

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}

root@haproxy2:/etc/haproxy# systemctl start keepalived

root@haproxy2:/etc/haproxy# systemctl enable keepalived

root@haproxy2:/etc/haproxy#

5. Configure the haproxy.cfg file on haproxy1

root@haproxy1:~# nano /etc/haproxy/haproxy.cfg

root@haproxy1:~# cat /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4096

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen ingress

bind 192.168.100.100:8080

balance roundrobin

server xwl-1 192.168.100.244:8080 check

server xwl-2 192.168.100.239:8080 check

server xwl-3 192.168.100.214:8080 check

root@haproxy1:~#

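6. After haproxy.cfg is configured, restart the haproxy service on haproxy1

root@haproxy1:~# systemctl restart haproxy

After the restart, the following error may appear; see the linked article "haproxy fails to start; a kernel parameter must be added" for the fix:

Apr 19 05:36:05 haproxy1 systemd[1]: Failed to start HAProxy Load Balancer.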

Apr 19 05:36:05 haproxy1 systemd[1]: haproxy.service: Scheduled restart job, restart counter is at 5.

Apr 19 05:36:05 haproxy1 systemd[1]: Stopped HAProxy Load Balancer.

Apr 19 05:36:05 haproxy1 systemd[1]: haproxy.service: Start request repeated too quickly.

Apr 19 05:36:05 haproxy1 systemd[1]: haproxy.service: Failed with result 'exit-code'.

7. On haproxy2, copy the haproxy.cfg file over from haproxy1 and restart the haproxy service

root@haproxy2:/etc/haproxy# scp [email protected]:/etc/haproxy/haproxy.cfg /etc/haproxy/

[email protected]'s password:

haproxy.cfg 100% 1302 1.4MB/s 00:00

root@haproxy2:/etc/haproxy# systemctl restart haproxy.service

root@haproxy2:/etc/haproxy#

8. Verify from an external machine

test@test-SATELLITE-PRO-C40-H:~$ sudo -i

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.100:8080

I'm 83384acb6d7d

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.100:8080

I'm fb754dc20225

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.100:8080

I'm 83384acb6d7d

(base) root@test-SATELLITE-PRO-C40-H:~# curl http://192.168.100.100:8080

I'm fb754dc20225

(base) root@test-SATELLITE-PRO-C40-H:~#

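That completes the setup and achieves the overall topology we set out to build

V. Open issues

1. Ingress load balancing is currently, in essence, LVS in NAT mode. A characteristic of this mode is that responses return along the same path as the requests, so all traffic passes through the director, which can put a heavy load on it. A later step is to investigate changing the LVS load-balancing mode to DR or TUN.

2. The ingress load balancing here uses the default endpoint mode, vip. If the endpoint mode is set to dnsrr, the ingress network cannot do the load balancing, and something like HAProxy has to be used instead. I have not yet experimented with that mode.

VI. References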

1. Docker documentation: the ingress network

https://docs.docker.com/engine/swarm/ingress/#bypass-the-routing-mesh

2. Docker documentation: Docker Swarm services

https://docs.docker.com/engine/swarm/networking/#configure-service-discovery

3. Explanation of the ingress network

https://blog.csdn.net/weixin_48447848/article/details/122678297

4. Article on running HAProxy in Docker

https://blog.csdn.net/easylife206/article/details/122374851

5. How iptables works

https://blog.csdn.net/wzj_110/article/details/108875481

6. The iptables mangle table

https://blog.csdn.net/lee244868149/article/details/45113585

7. Understanding Docker CNM networking

http://c.biancheng.net/view/3185.html

8. The nsenter command explained

https://www.jianshu.com/p/1a49116f00a9

9. DNS resolution in Docker Swarm

http://c.biancheng.net/view/3194.html

10. How LVS works, explained in more detail than the official site

https://www.cnblogs.com/yangjianbo/articles/8430034.html

11. How LVS works, official site

http://www.linuxvirtualserver.org/zh/lvs3.html

12. The ipvsadm command explained

https://blog.csdn.net/qq_34167423/article/details/117418700

13. LB and service discovery in Docker swarm explained

https://www.jianshu.com/p/c83a9173459f

14. Linux LVS principles

https://www.cnblogs.com/hongdada/p/9758939.html

16. The tcpdump command

https://blog.csdn.net/Romanticn_chu/article/details/116052964

17. Deploying HAProxy + keepalived

https://www.cnblogs.com/yinzhengjie/p/12174356.html

18. Deploying HAProxy + keepalived with Docker

https://blog.csdn.net/qq_21108311/article/details/82973763

https://blog.csdn.net/qq_21108311/article/details/82973763