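Without logging in, you can fetch at most one page of results; logging in gets you two pages. You can also buy a VIP account on Taobao for 1 or 2 yuan, which unlocks more results.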

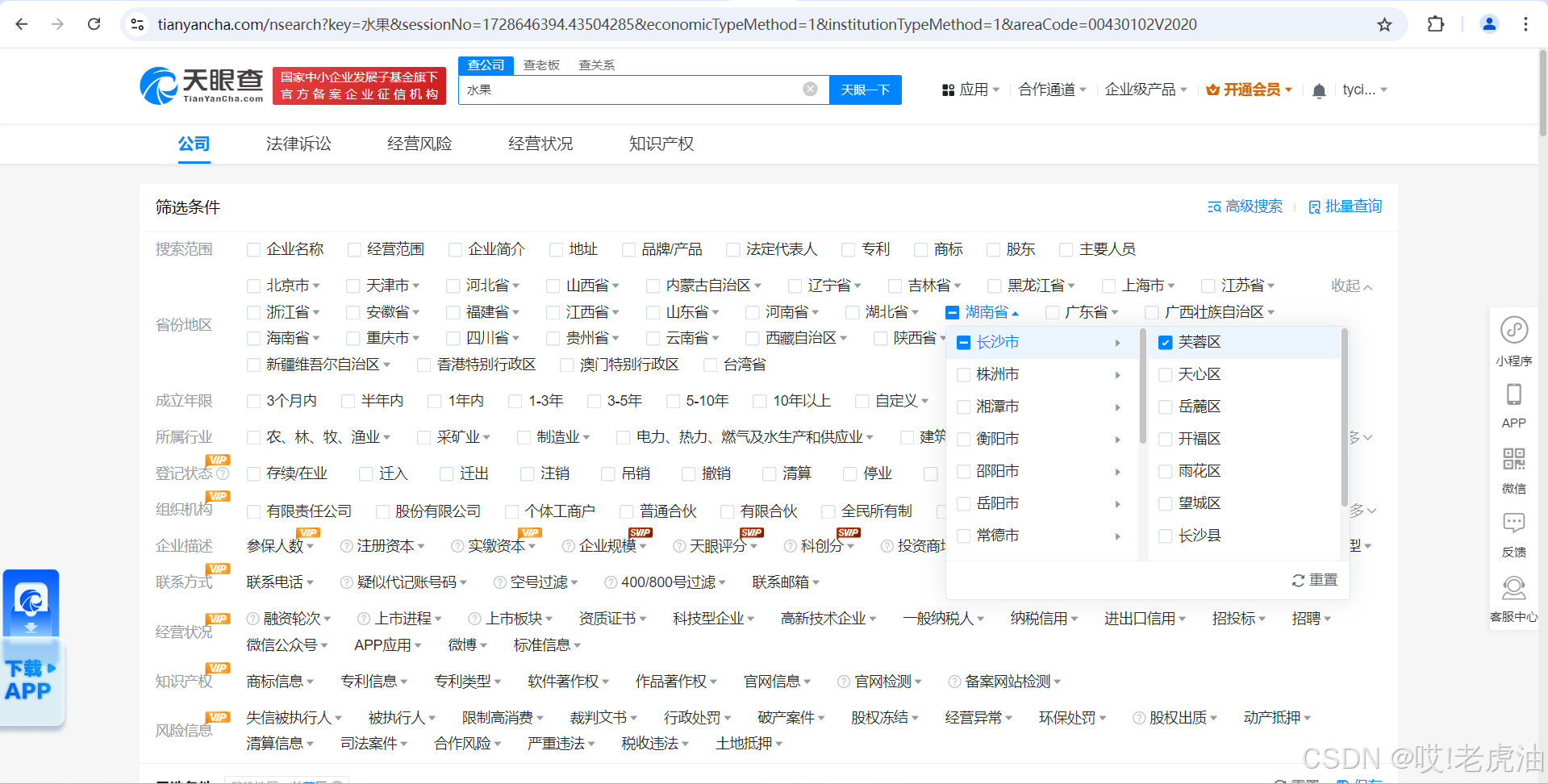

First, run a company search with any keyword.

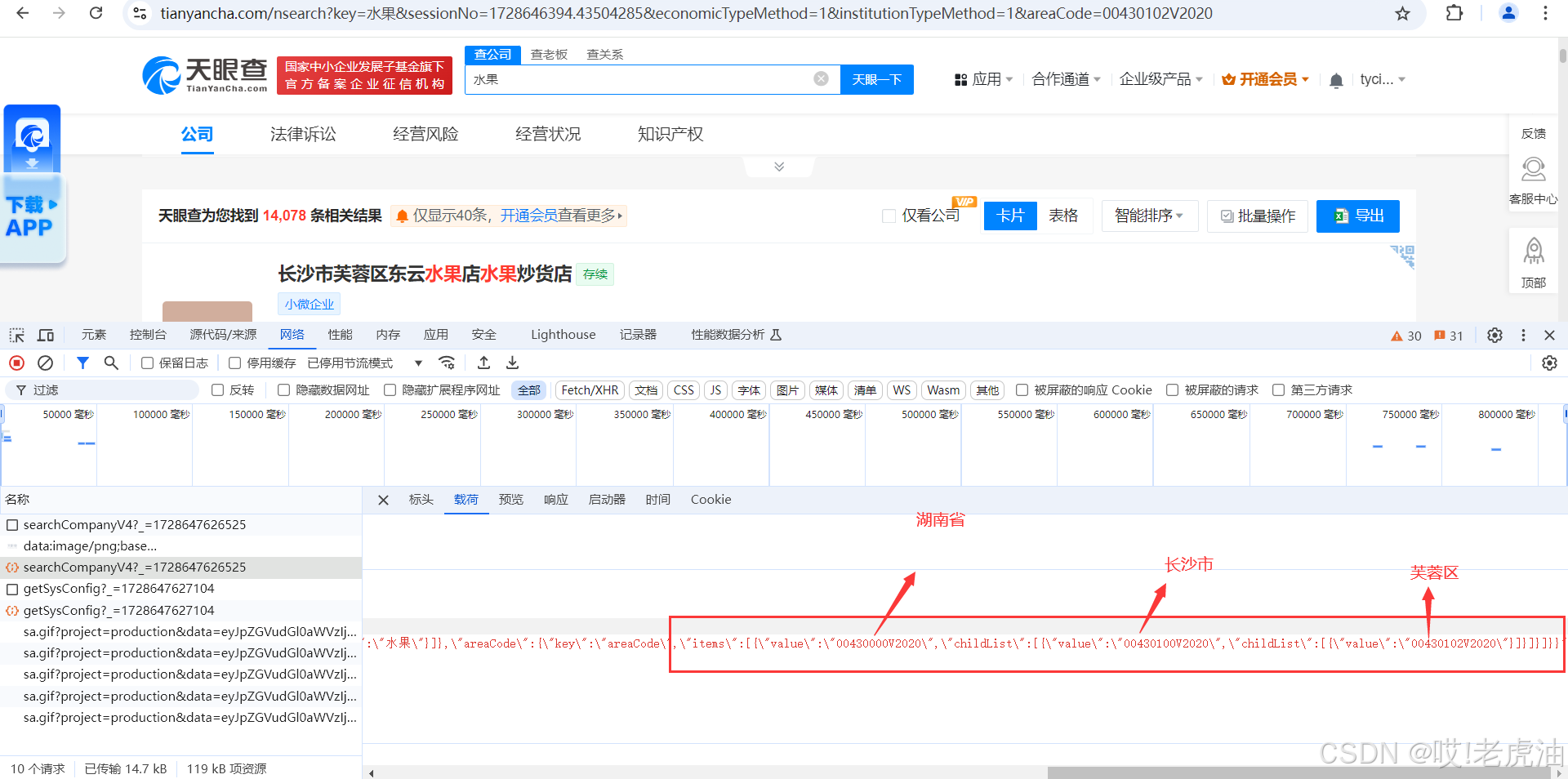

Then pick a district within a city.

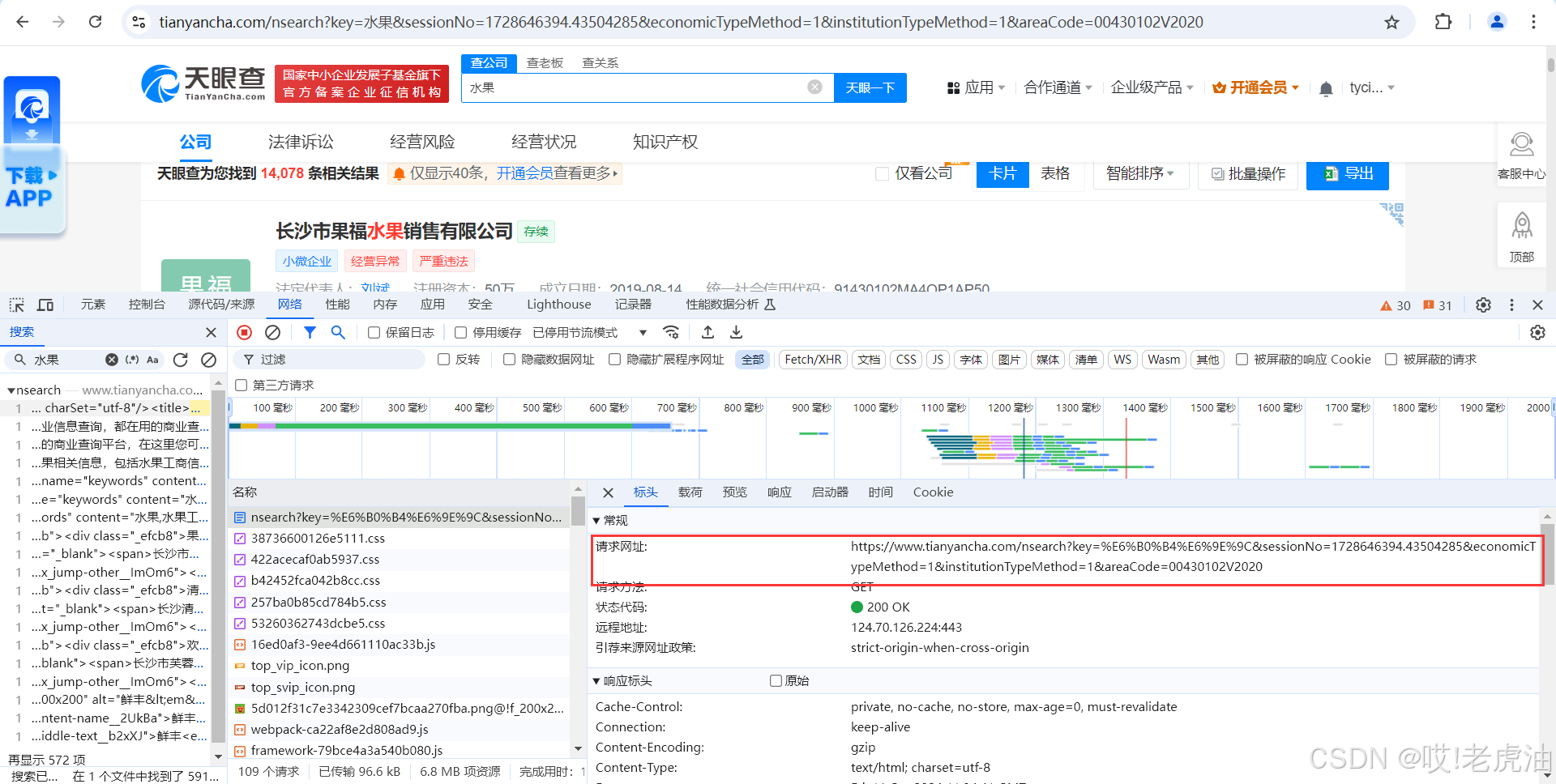

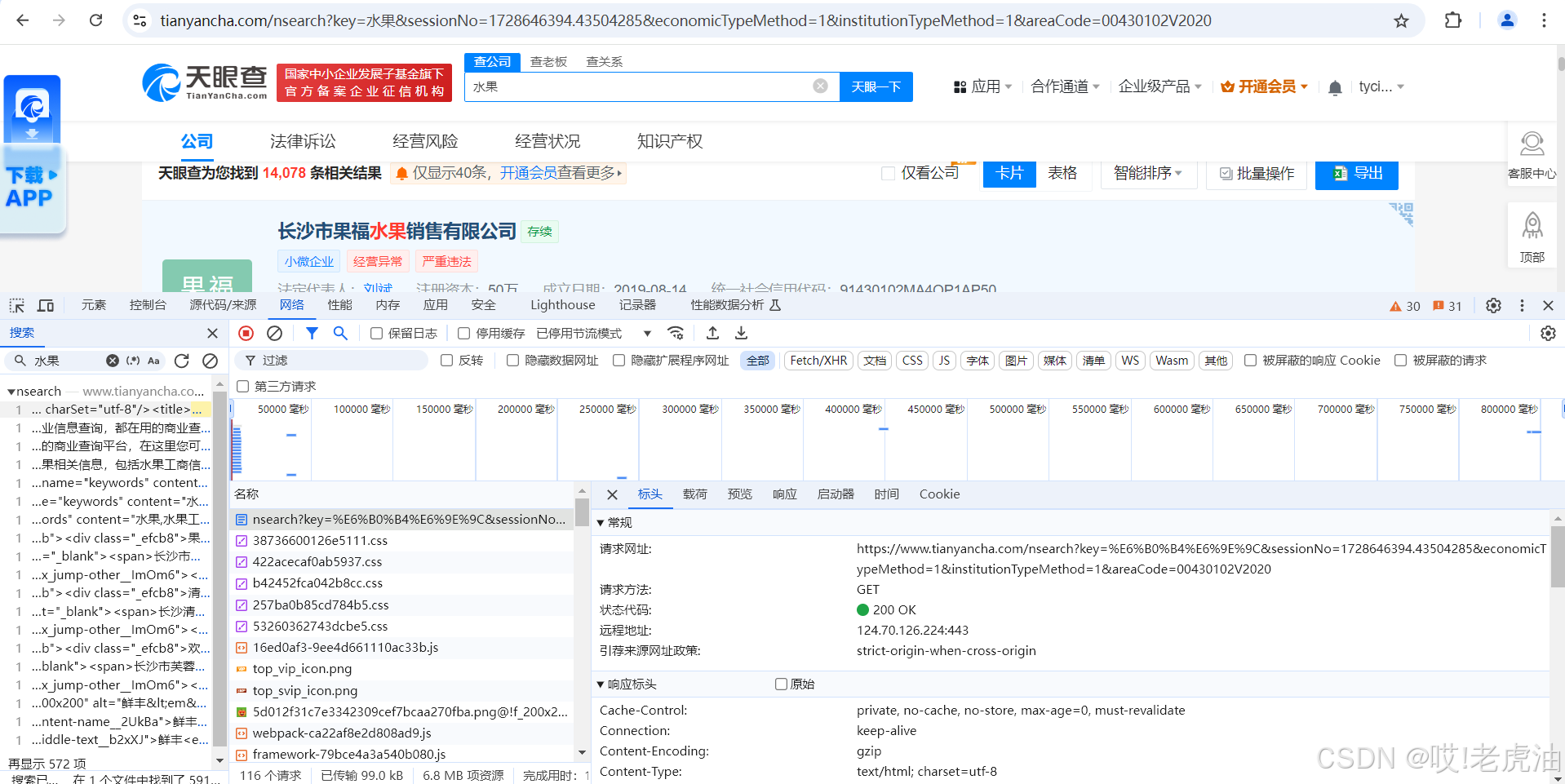

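After opening the page, pressing F12, and refreshing, most of us would assume the request shown below is the one we need.

It really does contain data, but there is a catch. Web scraping demands attention to detail! Ask yourself: if I scrape the second page, or a different region, does the payload change? Let's look at the catch first.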

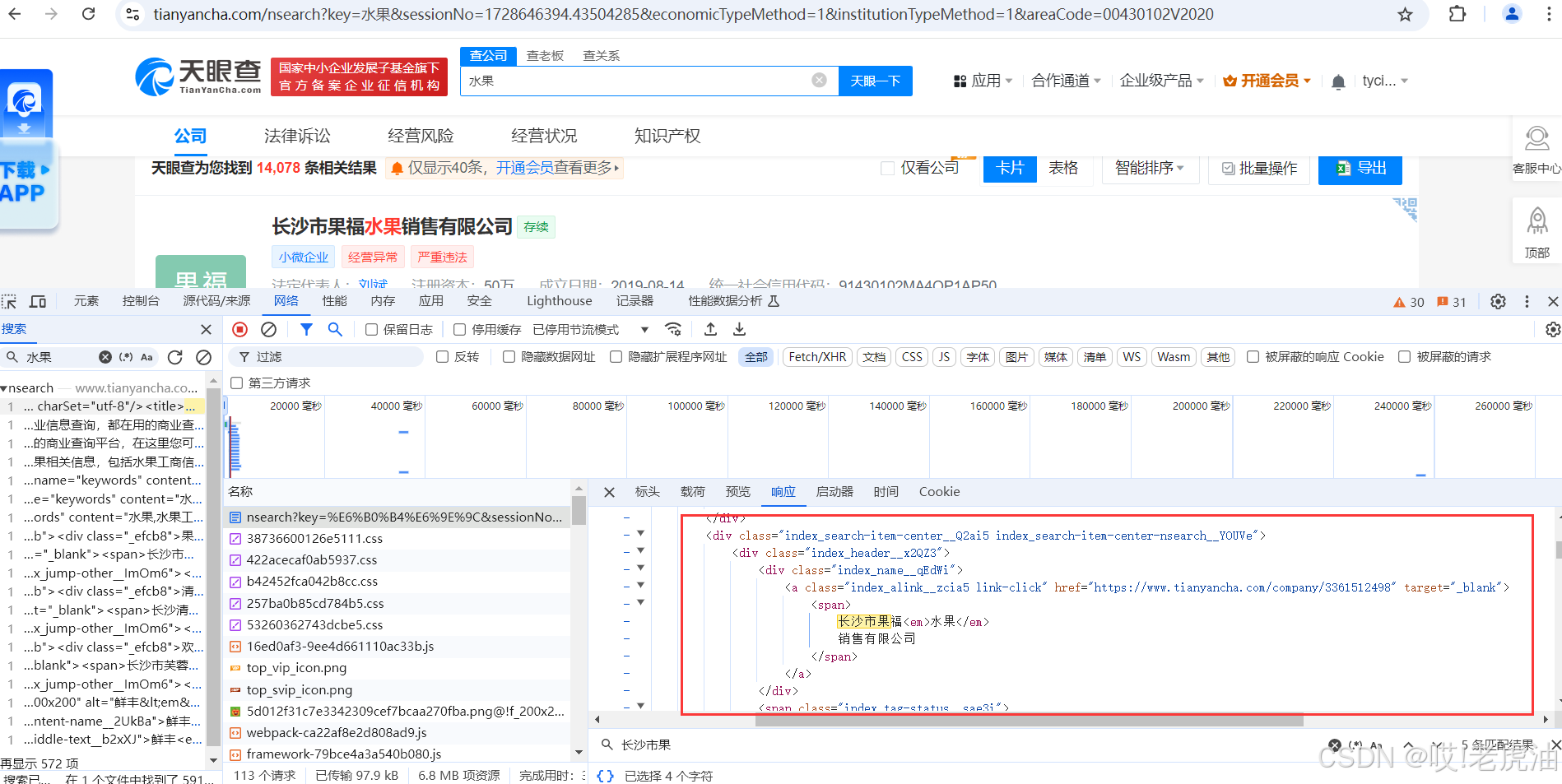

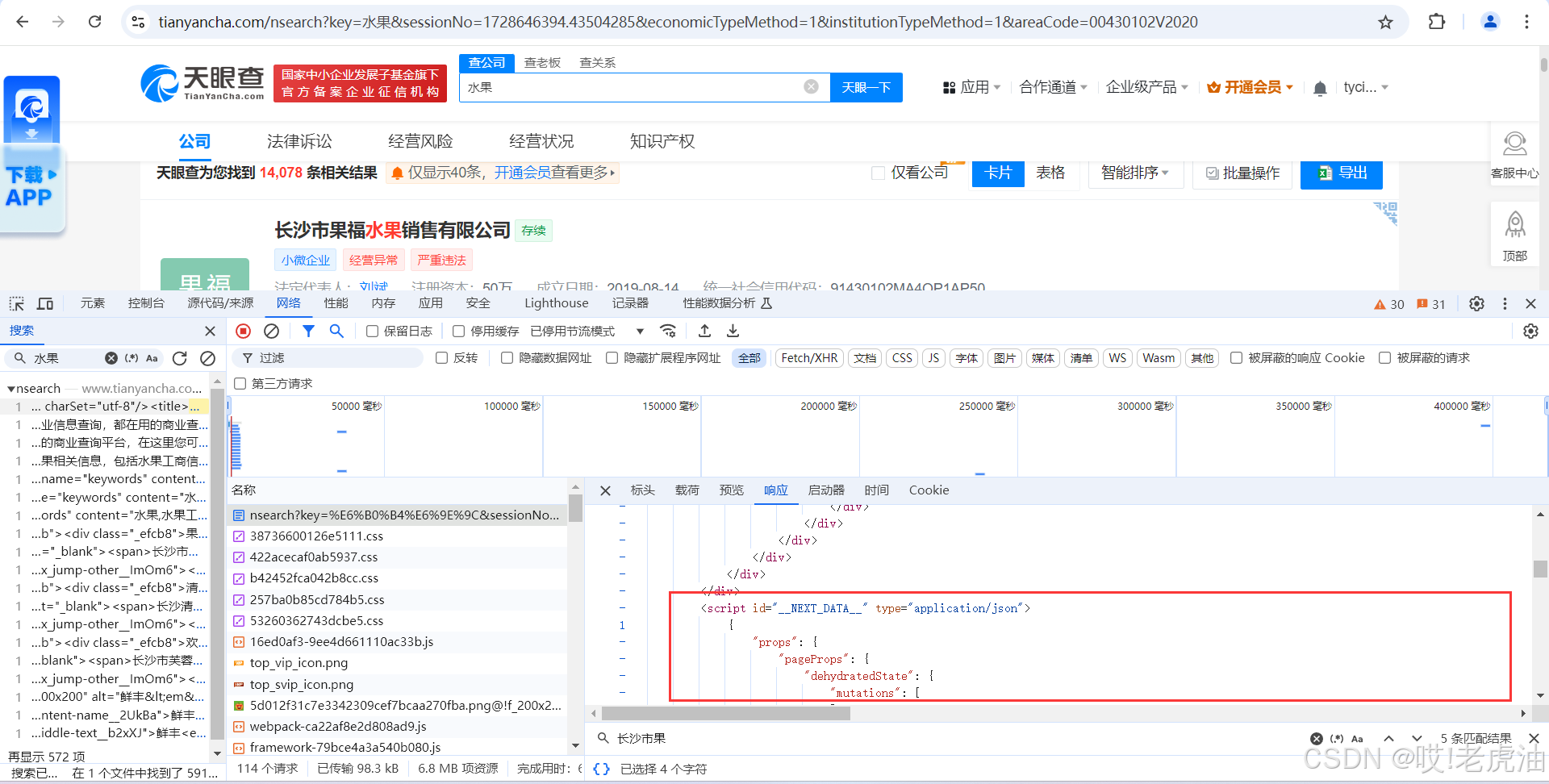

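Normally a GET request with the right headers is enough to fetch this page. Don't rush to parse it with bs4, though: as the second screenshot above shows, the response already contains the data as JSON.

You only need to slice that JSON string out of the response text and convert it to a JSON object; after that, you can extract fields by key.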
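The slicing step described above could look roughly like the sketch below. The function name `extract_embedded_json`, the sample HTML, and both markers are hypothetical and only for illustration; the real markers depend on what the response text actually looks like in your browser.

```python
import json


def extract_embedded_json(html: str, start_marker: str, end_marker: str) -> dict:
    """Slice the text between two markers out of html and parse it as JSON."""
    start = html.index(start_marker) + len(start_marker)
    end = html.index(end_marker, start)
    return json.loads(html[start:end])


# Hypothetical page content; the real response will use different markers.
sample_html = (
    '<html><body><div id="app"></div>'
    '<script id="page-data" type="application/json">'
    '{"companyList": [{"name": "Example Fruit Co.", "id": 1}]}'
    '</script></body></html>'
)

page_data = extract_embedded_json(
    sample_html,
    start_marker='<script id="page-data" type="application/json">',
    end_marker='</script>',
)

# Once parsed, fields are plain key lookups.
for company in page_data["companyList"]:
    print(company["id"], company["name"])
```

`str.index` raises `ValueError` when a marker is missing, which is a convenient early signal that the page layout has changed.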

Now for the main part.

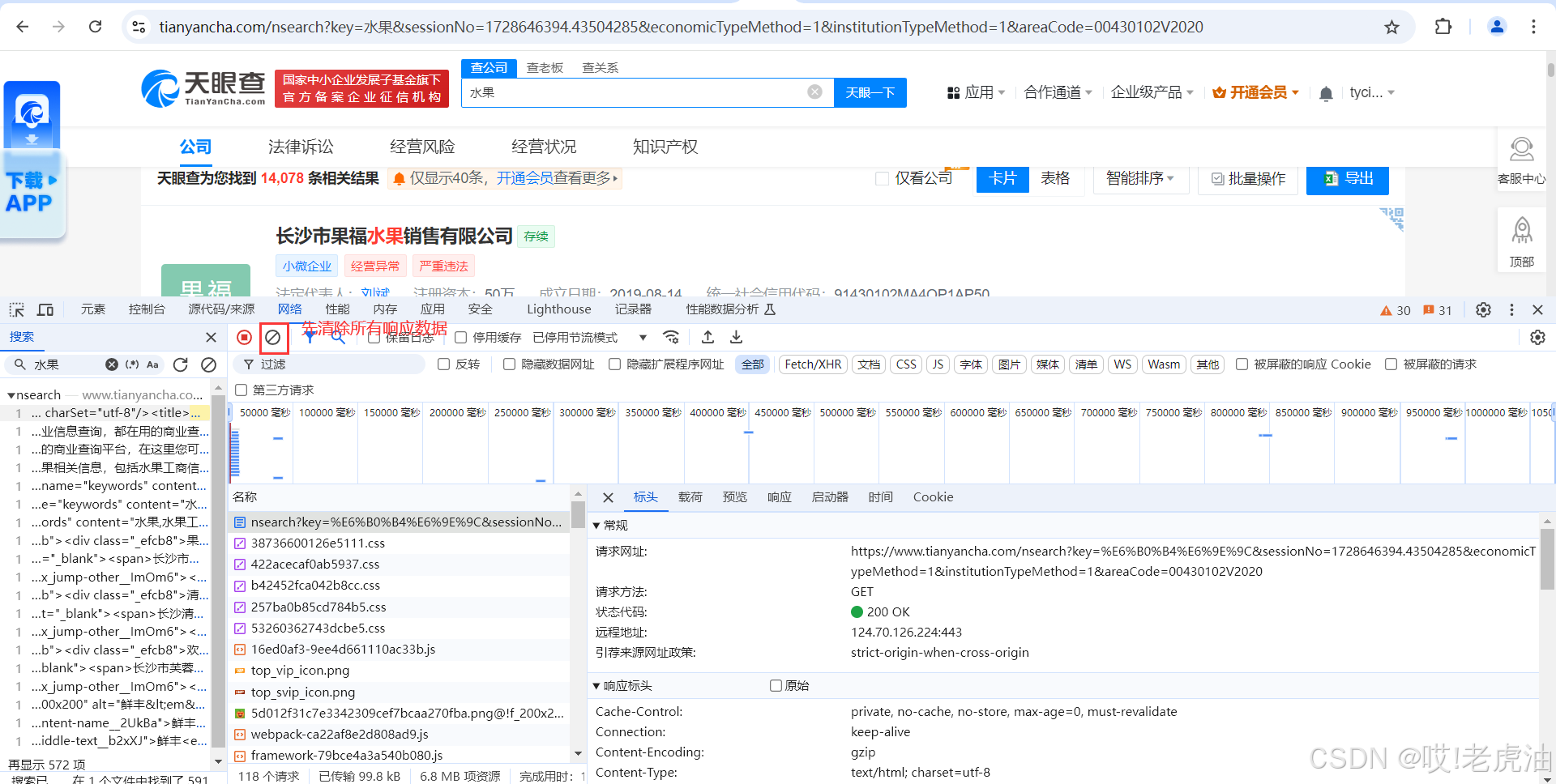

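This endpoint is actually the wrong one. To find the right one, first clear all recorded requests in the Network panel, then switch straight to page two.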

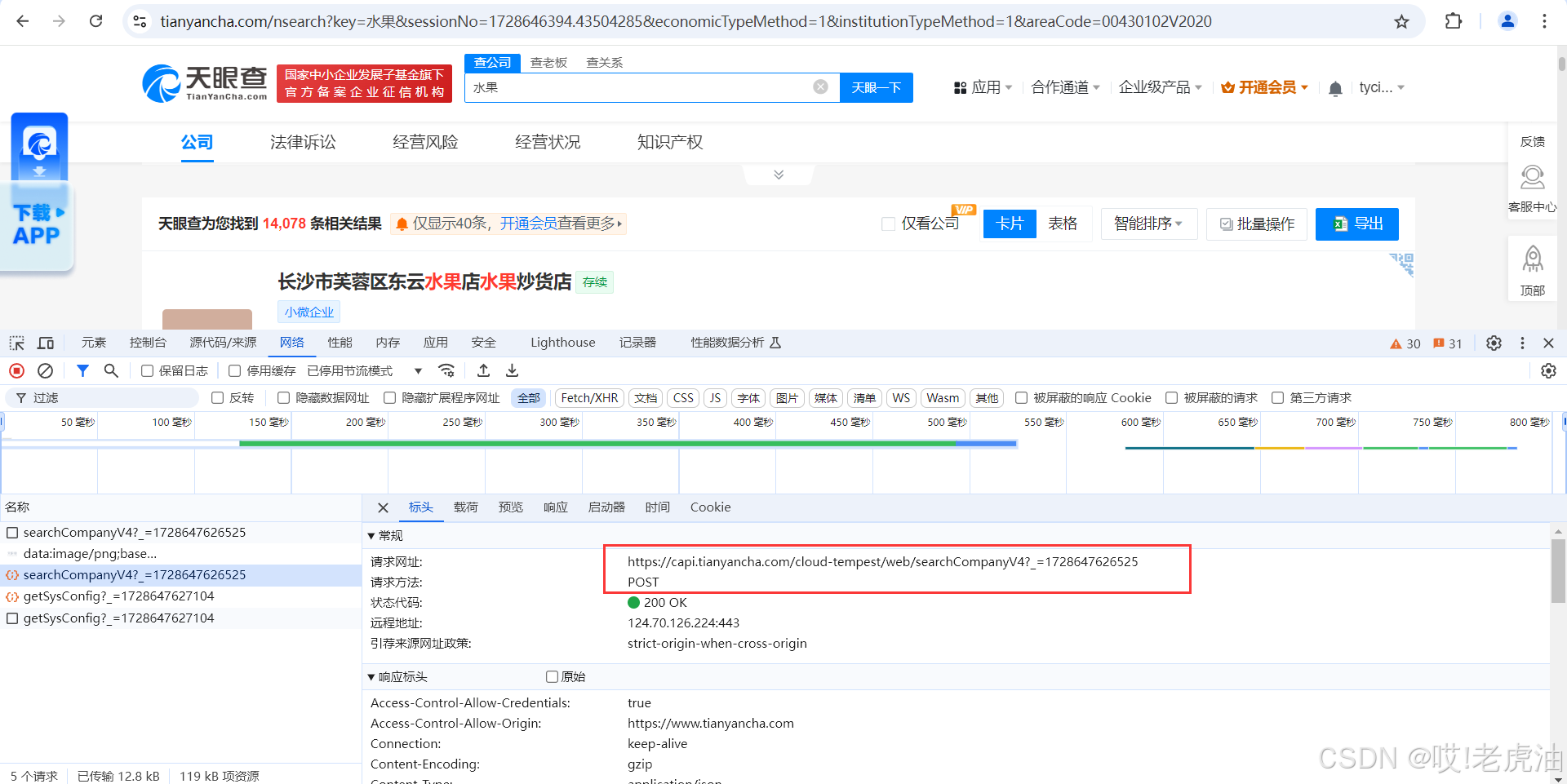

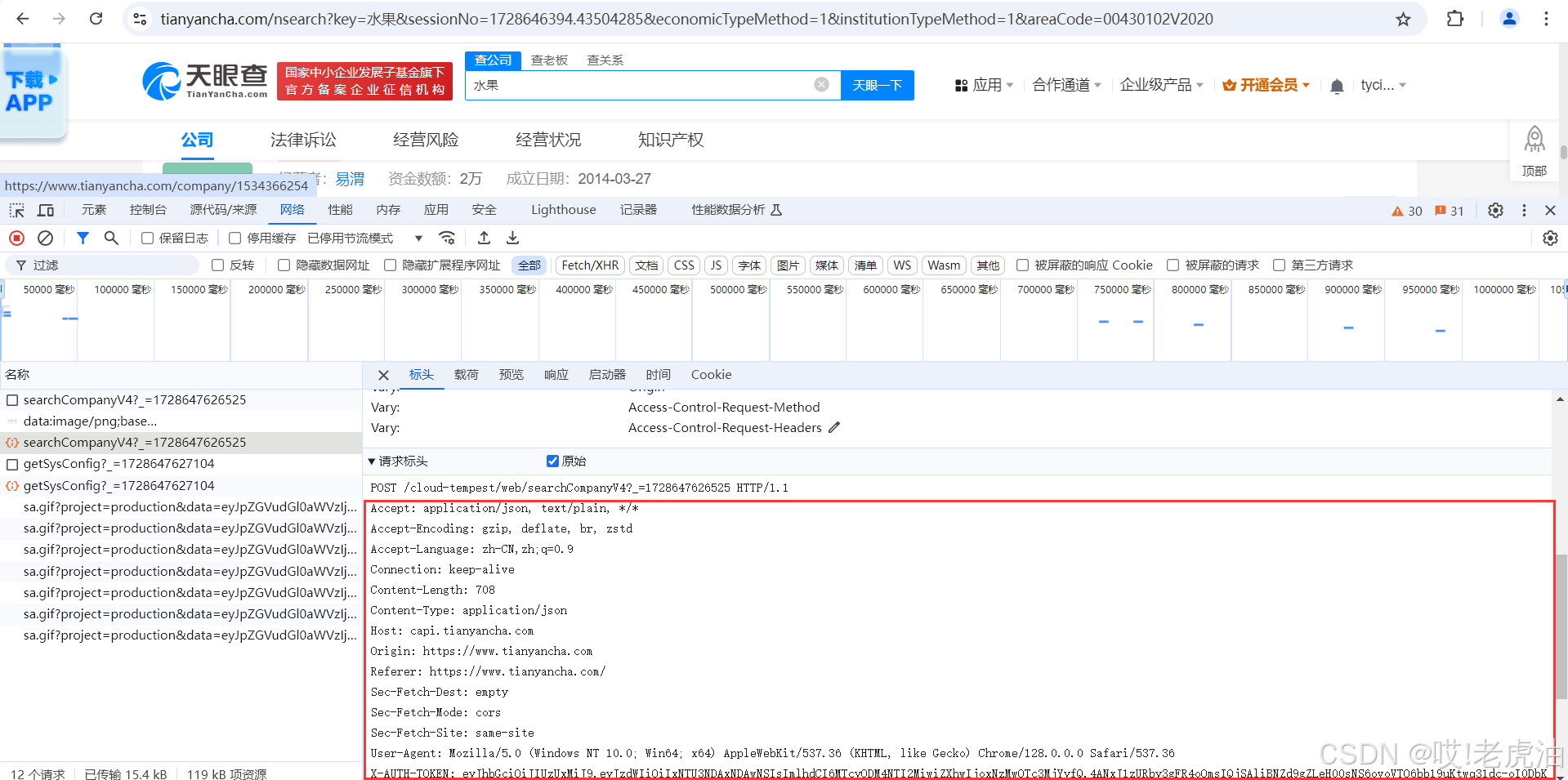

Now the URL is clearly different, and it is a POST request.

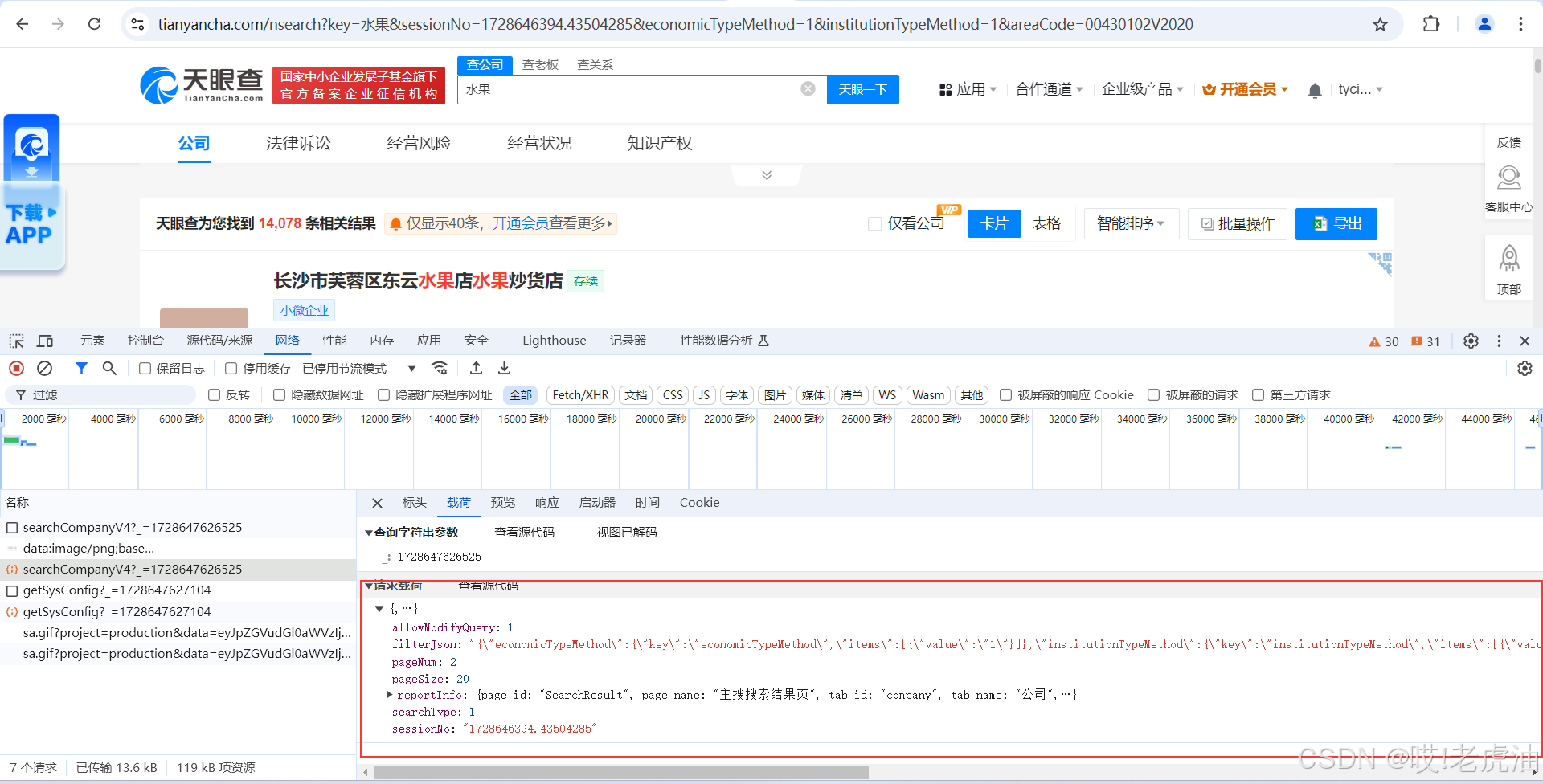

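Next, inspect the payload. It is easy to see that `pageNum` controls the page number, and that the province, city, and district can be changed inside `filterJson`.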
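As a minimal sketch of that observation, the helper below builds a payload for a given page and area. The name `build_payload` is hypothetical, and the payload is deliberately reduced to a subset of the fields in the captured request (the area codes are the ones from that capture); whether the server accepts a reduced payload is an untested assumption.

```python
import json


def build_payload(word, page_num, province, city, district, page_size=20):
    """Build a search payload; filterJson is a JSON string nested in the body."""
    filter_json = {
        "word": {"key": "word", "items": [{"value": word}]},
        "areaCode": {
            "key": "areaCode",
            "items": [{
                "value": province,
                "childList": [{
                    "value": city,
                    "childList": [{"value": district}],
                }],
            }],
        },
    }
    return {
        # ensure_ascii=False keeps Chinese keywords readable in the string
        "filterJson": json.dumps(filter_json, ensure_ascii=False,
                                 separators=(",", ":")),
        "searchType": 1,
        "pageNum": page_num,
        "pageSize": page_size,
    }


payload = build_payload("水果", 2, "00430000V2020", "00430100V2020", "00430102V2020")
print(payload["pageNum"])
print(payload["filterJson"])
```

Building `filterJson` with `json.dumps` instead of string formatting avoids quoting mistakes when the keyword or area codes change.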

Then copy all of the request headers below.

```python
# -*- coding: utf-8 -*-
import json

import requests

url = 'https://capi.tianyancha.com/cloud-tempest/web/searchCompanyV4?_=172855916907'

# Request headers copied from the browser's Network panel.
# Content-Length is left out: requests computes it from the body.
# X-AUTH-TOKEN and X-TYCID belong to a logged-in session; use your own values.
header = {
    'Accept': 'application/json, text/plain, */*',
    'Accept-Encoding': 'gzip, deflate, br, zstd',
    'Accept-Language': 'zh-CN,zh;q=0.9',
    'Connection': 'keep-alive',
    'Content-Type': 'application/json',
    'Host': 'capi.tianyancha.com',
    'Origin': 'https://www.tianyancha.com',
    'Referer': 'https://www.tianyancha.com/',
    'Sec-Fetch-Dest': 'empty',
    'Sec-Fetch-Mode': 'cors',
    'Sec-Fetch-Site': 'same-site',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/128.0.0.0 Safari/537.36',
    'X-AUTH-TOKEN': 'eyJhbGciOiJIUzUxMiJ9.eyJzdWIiOiIxNTU3NDAxNDAwNSIsImlhdCI6MTcyODM4NTI2MiwiZXhwIjoxNzMwOTc3MjYyfQ.4ANxJ1zURby3gFR4oOmsIQjSAliBNZd9gZLeH00sNS6ovoVTO6bb19uKtwq31dc-oI0DbK1AKBiYsmhZ3jgyNg',
    'X-TYCID': 'a34d0240854711efb7bcefa45e2bcd12',
    'eventId': 'i246',
    'page_id': 'SearchResult',
    'pm': '451',
    'sec-ch-ua': '"Chromium";v="128", "Not;A=Brand";v="24", "Google Chrome";v="128"',
    'sec-ch-ua-mobile': '?0',
    'sec-ch-ua-platform': '"Windows"',
    'spm': 'i246',
    'version': 'TYC-Web',
}
```

At this point some readers may have a question: should the payload be passed to `requests.post` with `json=` or with `data=`?

- With the `json` parameter, requests serializes the data to JSON, which suits endpoints that expect a JSON body.
- With the `data` parameter, a dict is sent as form data, which suits traditional form submissions.

```python
# The trailing % 102 fills the %d slot, giving the district code 00430102V2020.
data = {
    "filterJson": '{"economicTypeMethod":{"key":"economicTypeMethod","items":[{"value":"1"}]},"institutionTypeMethod":{"key":"institutionTypeMethod","items":[{"value":"1"}]},"word":{"key":"word","items":[{"value":"水果"}]},"areaCode":{"key":"areaCode","items":[{"value":"00430000V2020","childList":[{"value":"00430100V2020","childList":[{"value":"00430%dV2020"}]}]}]}}' % 102,
    "searchType": 1,
    "sessionNo": "1728560135.23681122",
    "allowModifyQuery": 1,
    "reportInfo": {
        "page_id": "SearchResult",
        "page_name": "主搜搜索结果页",
        "tab_id": "company",
        "tab_name": "公司",
        "search_session_id": "1728560135.23681122",
        "distinct_id": "322165439",
    },
    "pageNum": 1,
    "pageSize": 20,
}

# json=data lets requests serialize the dict into a JSON body.
req = requests.post(url=url, headers=header, json=data).json()
print(req)
```

If you would rather pass `data=data`, you must first serialize the dict to a JSON string yourself.
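To make the difference concrete, here is a small offline sketch using only the standard library. It approximates the request body in each case for a simplified, flat payload (an assumption for illustration; the real payload is nested); it does not call requests itself.

```python
import json
from urllib.parse import urlencode

# Simplified, flat payload used only for illustration.
payload = {"searchType": 1, "pageNum": 2, "pageSize": 20}

# Roughly what json=payload puts in the body: a JSON document.
json_body = json.dumps(payload)

# Roughly what data=payload (a dict) puts in the body: form-encoded pairs.
form_body = urlencode(payload)

# data=json.dumps(payload) sends the JSON string unchanged, so the body
# matches json_body, provided Content-Type is set to application/json.
print(json_body)   # {"searchType": 1, "pageNum": 2, "pageSize": 20}
print(form_body)   # searchType=1&pageNum=2&pageSize=20
```

An endpoint that expects JSON cannot parse the form-encoded body, which is why a plain dict passed through `data=` does not work here.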

The `data=` version of the request looks like this:

```python
data = {
    "filterJson": '{"economicTypeMethod":{"key":"economicTypeMethod","items":[{"value":"1"}]},"institutionTypeMethod":{"key":"institutionTypeMethod","items":[{"value":"1"}]},"word":{"key":"word","items":[{"value":"水果"}]},"areaCode":{"key":"areaCode","items":[{"value":"00430000V2020","childList":[{"value":"00430100V2020","childList":[{"value":"00430%dV2020"}]}]}]}}' % 102,
    "searchType": 1,
    "sessionNo": "1728560135.23681122",
    "allowModifyQuery": 1,
    "reportInfo": {
        "page_id": "SearchResult",
        "page_name": "主搜搜索结果页",
        "tab_id": "company",
        "tab_name": "公司",
        "search_session_id": "1728560135.23681122",
        "distinct_id": "322165439",
    },
    "pageNum": 1,
    "pageSize": 20,
}

# data= sends a string as-is, so serialize the dict to JSON first.
# The Content-Type: application/json header above tells the server how to read it.
data = json.dumps(data)
req = requests.post(url=url, headers=header, data=data).json()
print(req)
```

You've read this far, so a follow and a like isn't too much to ask, right?